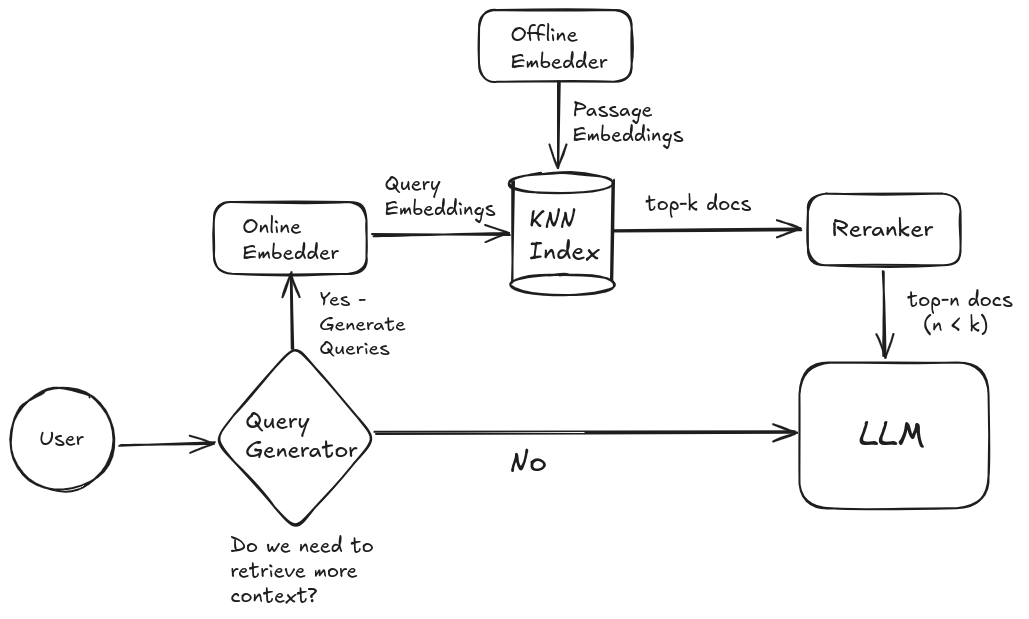

This is a demo of a retrieval-augmented generation (RAG) system built on the 5 million most popular passages from a 2022 Wikipedia dump. The dataset is available here.

The system is built from the following components:

- LLM: Llama 3.1 70B Instruct (8-bit quantization)
  - Hosted on the Together API
- Embedding model: BAAI/bge-base-en-v1.5
  - Offline embeddings are computed on two RTX 4090 GPUs in one hour
  - The online embedder runs on a serverless RTX 4090 pod
  - An Infinity server hosts the embedding model
- Vector index: Faiss/AutoFaiss with the HNSW algorithm
  - Runs locally
- Reranker: Salesforce/Llama-Rank-v1 (closed weights)
  - Hosted on the Together API
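Together, these components form a pipeline that retrieves, then reranks, then generates. The sketch below shows that data flow with each stage passed in as a plain callable. This keeps it neutral about which client libraries `chat.py` actually uses. The function name `rag_answer`, the stage signatures, and the defaults `k=20` and `top_n=3` are all hypothetical.

```python
from typing import Callable, List, Sequence

# Hypothetical stage signatures; the real chat.py may structure these differently.
Embed = Callable[[str], List[float]]                 # question -> query vector
Search = Callable[[List[float], int], List[int]]     # query vector, k -> passage ids
Rerank = Callable[[str, List[str], int], List[int]]  # query, docs, top_n -> indices into docs
Generate = Callable[[str, List[str]], str]           # question, context -> answer


def rag_answer(
    question: str,
    passages: Sequence[str],
    embed: Embed,
    search: Search,
    rerank: Rerank,
    generate: Generate,
    k: int = 20,      # assumed number of candidates pulled from the index
    top_n: int = 3,   # assumed number of passages kept after reranking
) -> str:
    """Retrieve k candidates, keep the top_n after reranking, then generate an answer."""
    query_vec = embed(question)                  # online embedder (bge on RunPod/Infinity)
    candidate_ids = search(query_vec, k)         # nearest-neighbour lookup (Faiss HNSW)
    candidates = [passages[i] for i in candidate_ids]
    keep = rerank(question, candidates, top_n)   # reranker (Llama-Rank on Together)
    context = [candidates[i] for i in keep]
    return generate(question, context)           # LLM (Llama 3.1 70B on Together)
```

Because the stages can be swapped out, you can test the whole flow with stubs. You can also replace the hosted reranker with a local one without touching the rest.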

To set up and run the demo:

1. Encode the dataset and build the index using `embeddings-index.ipynb`, then copy the texts and the index to your local machine. The notebook provides code to:
   - Download the Wikipedia dataset and dump the first 5 million rows into a JSON file
   - Encode the texts in this JSON file using BAAI/bge-base-en-v1.5 and save the embeddings
   - Build an index using AutoFaiss
   - Search using Faiss
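HNSW is an approximate nearest-neighbour method. It gives up a little recall in exchange for fast lookups over millions of vectors. For reference, here is the exact search it approximates, sketched in pure Python. The sketch assumes inner-product similarity over L2-normalized bge embeddings, which is a common setup for this model but not confirmed by the notebook. Scoring all 5 million passages this way on every query is far too slow for interactive use, which is why the demo builds an index.

```python
import heapq
import math
from typing import List, Sequence, Tuple


def normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit L2 norm so that inner product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def top_k_inner_product(
    query: Sequence[float], corpus: Sequence[Sequence[float]], k: int
) -> List[Tuple[float, int]]:
    """Exact (brute-force) search: score every passage, return the k best as (score, id)."""
    q = normalize(query)
    scores = (
        (sum(a * b for a, b in zip(q, normalize(doc))), idx)
        for idx, doc in enumerate(corpus)
    )
    return heapq.nlargest(k, scores)
```

The ids returned by an HNSW index over the same vectors should mostly agree with this exact ranking. Comparing the two on a sample of queries is one way to sanity-check the index.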

2. Create the API keys for the hosted services (Together API and RunPod) and set them as environment variables.
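The exact variable names that `chat.py` reads are not listed here. As a sketch, a loader that fails fast when a key is missing might look like this. `TOGETHER_API_KEY` and `RUNPOD_API_KEY` are assumed names, so match them to what `chat.py` expects.

```python
import os
from typing import Dict, Mapping, Optional, Sequence

# Assumed variable names; chat.py may expect different ones.
REQUIRED_KEYS = ("TOGETHER_API_KEY", "RUNPOD_API_KEY")


def load_api_keys(
    names: Sequence[str] = REQUIRED_KEYS, env: Optional[Mapping[str, str]] = None
) -> Dict[str, str]:
    """Read API keys from the environment and fail fast if any is missing or empty."""
    env = os.environ if env is None else env
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in names}
```

For example, run `export TOGETHER_API_KEY=...` in your shell before starting the chatbot. Failing at startup is friendlier than an authentication error in the middle of the first query.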

3. Create an endpoint on RunPod for the BAAI/bge-base-en-v1.5 embedding model:
   - Go to the Serverless Console
   - Click on "Infinity Vector Embeddings" and select BAAI/bge-base-en-v1.5
   - Deploy the endpoint with default settings on the GPU of your choice
   - Set `Max Workers` to 1 and `Max Duration` to 100 seconds
   - Copy the `API Key` to your environment and the `Endpoint URL` to the `chat.py` file
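Once the endpoint is deployed, a client sends query text to it and gets vectors back. Below is a minimal standard-library sketch of that client. It assumes the Infinity worker accepts an OpenAI-style embeddings request (`{"model": ..., "input": [...]}`) at the endpoint URL you copied and authenticates with a bearer token. Check the worker's documentation for the exact route and payload. The function names are hypothetical.

```python
import json
import urllib.request
from typing import List, Sequence

MODEL = "BAAI/bge-base-en-v1.5"


def build_embedding_request(
    embeddings_url: str, api_key: str, texts: Sequence[str]
) -> urllib.request.Request:
    """Build an OpenAI-style embeddings POST request (the payload shape is an assumption)."""
    payload = {"model": MODEL, "input": list(texts)}
    return urllib.request.Request(
        embeddings_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def parse_embeddings(response_body: bytes) -> List[List[float]]:
    """Return the vectors in input order from an OpenAI-style embeddings response."""
    data = json.loads(response_body)["data"]
    return [item["embedding"] for item in sorted(data, key=lambda item: item["index"])]


def embed(
    embeddings_url: str, api_key: str, texts: Sequence[str], timeout: float = 100.0
) -> List[List[float]]:
    """Send the request and parse the result (performs a real network call)."""
    request = build_embedding_request(embeddings_url, api_key, texts)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_embeddings(response.read())
```

Only `embed` touches the network. You can check `build_embedding_request` and `parse_embeddings` offline. With a single worker, the first request after an idle period may be slow while the pod starts, so the client-side timeout is kept generous.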

4. Run the chatbot using `chat.py`.
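Per the component list, both the reranker and the LLM run on Together. A chat turn therefore involves two hosted calls: one that reranks the retrieved candidates and one that generates an answer from the best few. Below is a standard-library sketch of both calls. It assumes Together's OpenAI-compatible `chat/completions` route and its `rerank` route. The model ids `Salesforce/Llama-Rank-V1` and `meta-llama/Meta-Llama-3.1-70B-Instruct-Turbo`, the prompt wording, and the helper names are all assumptions, not necessarily what `chat.py` does.

```python
import json
import urllib.request
from typing import Dict, List, Sequence

TOGETHER_BASE = "https://api.together.xyz/v1"
# Assumed Together model ids; check Together's model list before use.
RERANK_MODEL = "Salesforce/Llama-Rank-V1"
CHAT_MODEL = "meta-llama/Meta-Llama-3.1-70B-Instruct-Turbo"


def rerank_payload(query: str, passages: Sequence[str], top_n: int) -> Dict:
    """Ask the reranker to score every candidate and return the top_n."""
    return {"model": RERANK_MODEL, "query": query, "documents": list(passages), "top_n": top_n}


def chat_payload(question: str, context: Sequence[str]) -> Dict:
    """Put numbered passages in the system prompt and the question in the user turn."""
    numbered = "\n\n".join(f"[{i}] {text}" for i, text in enumerate(context, start=1))
    system = (
        "Answer the question using only the Wikipedia passages below. "
        "If they do not contain the answer, say so.\n\n" + numbered
    )
    return {
        "model": CHAT_MODEL,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
        ],
    }


def post_json(path: str, payload: Dict, api_key: str) -> Dict:
    """POST a JSON payload to the Together API and decode the JSON reply (network call)."""
    request = urllib.request.Request(
        f"{TOGETHER_BASE}/{path}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read())


def answer(question: str, candidates: List[str], api_key: str, top_n: int = 3) -> str:
    """Rerank the retrieved candidates, then generate an answer from the best top_n."""
    ranked = post_json("rerank", rerank_payload(question, candidates, top_n), api_key)
    context = [candidates[r["index"]] for r in ranked["results"]]
    reply = post_json("chat/completions", chat_payload(question, context), api_key)
    return reply["choices"][0]["message"]["content"]
```

Numbering the passages in the prompt lets the model refer to its sources. The instruction to admit when the passages lack an answer is one common way to discourage answers that are not grounded in the retrieved text.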