- Train a custom NER model continually on three datasets, each training iteration building on top of the previous one.

- In every training iteration, 100 samples from the previous training set are added to the current dataset the model is trained on.

- In every iteration, 20% of the current dataset is held out as test data and merged with the previous test set, forming the complete "final_test_set" used to evaluate the model in that iteration.

- After the continual training, a model is trained on the complete data (G1 + G2 + G3) at once, keeping 20% of the data as the test set.

- Each of the iterative models is pushed to the Hugging Face Hub.
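The data schedule described above (20% hold-out per dataset, cumulative test set, 100 replayed training samples) can be sketched in plain Python as follows. This is a minimal illustration, not the project's code: the function name `build_iteration_splits`, the random shuffling, the fixed seed, and the choice to replay samples from the previous dataset's own training split (rather than from the previous, already-augmented training set) are all assumptions.

```python
import random
from typing import Any, List, Sequence, Tuple

def build_iteration_splits(
    datasets: Sequence[List[Any]],
    replay_size: int = 100,
    test_frac: float = 0.2,
    seed: int = 42,
) -> List[Tuple[List[Any], List[Any]]]:
    """Return one (train_set, final_test_set) pair per dataset, in training order."""
    rng = random.Random(seed)  # fixed seed so the splits are reproducible
    splits: List[Tuple[List[Any], List[Any]]] = []
    final_test_set: List[Any] = []
    prev_train: List[Any] = []
    for data in datasets:
        shuffled = list(data)
        rng.shuffle(shuffled)
        n_test = int(len(shuffled) * test_frac)
        test_part, train_part = shuffled[:n_test], shuffled[n_test:]
        # Hold out 20% of the current dataset and merge it with all earlier test sets.
        final_test_set = final_test_set + test_part
        # Replay up to `replay_size` samples from the previous dataset's training split.
        replay = rng.sample(prev_train, min(replay_size, len(prev_train)))
        splits.append((train_part + replay, list(final_test_set)))
        prev_train = train_part  # assumption: replay draws from the previous new data only
    return splits
```

With this sketch, the G1 + G2 + G3 baseline corresponds to a single call on the concatenated data, `build_iteration_splits([g1 + g2 + g3])`, which holds out 20% and replays nothing because there is no previous iteration.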

-

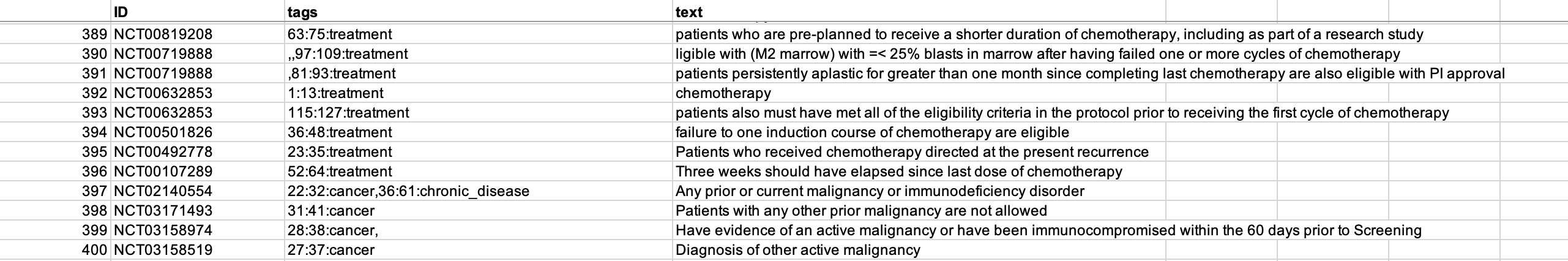

F1 scores of the entity_labels individually and overall for every iteration of training.
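The per-label and overall F1 scores can be illustrated with a small pure-Python computation over IOB tag sequences. This is a sketch under stated assumptions: the document does not say how its scores were computed, so exact span matching, micro-averaging for "overall", and treating a stray `I-` tag as the start of a new entity are choices made here; a library such as seqeval may handle such edge cases differently. `iob_to_spans` and `entity_f1` are hypothetical names.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple

Span = Tuple[str, int, int]  # (label, first_token, end_token_exclusive)

def iob_to_spans(tags: List[str]) -> Set[Span]:
    """Collect entity spans from one IOB tag sequence."""
    spans: Set[Span] = set()
    label, start = None, 0
    for i, tag in enumerate(tags + ["O"]):  # trailing "O" closes a final entity
        if tag.startswith("I-") and tag[2:] == label:
            continue  # the current entity continues
        if label is not None:
            spans.add((label, start, i))  # close the open entity
        if tag == "O":
            label = None
        else:
            # B- opens an entity; a stray I- (after O or another type) opens one too.
            label, start = tag[2:], i
    return spans

def entity_f1(true_seqs: List[List[str]], pred_seqs: List[List[str]]) -> Dict[str, float]:
    """Per-label and overall (micro-averaged) entity F1 with exact span matching."""
    tp: Counter = Counter()
    fp: Counter = Counter()
    fn: Counter = Counter()
    for true_tags, pred_tags in zip(true_seqs, pred_seqs):
        gold, pred = iob_to_spans(true_tags), iob_to_spans(pred_tags)
        for label, _, _ in gold & pred:
            tp[label] += 1
        for label, _, _ in pred - gold:
            fp[label] += 1
        for label, _, _ in gold - pred:
            fn[label] += 1

    def f1(t: int, p: int, n: int) -> float:
        # F1 = 2TP / (2TP + FP + FN), the harmonic mean of precision and recall
        return 2 * t / (2 * t + p + n) if t else 0.0

    scores = {lbl: f1(tp[lbl], fp[lbl], fn[lbl]) for lbl in sorted(set(tp) | set(fp) | set(fn))}
    scores["overall"] = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    return scores
```

For example, if the gold tags are `["B-PER", "O", "B-LOC", "I-LOC"]` and the model predicts `["B-PER", "O", "B-LOC", "O"]`, the person entity matches exactly but the truncated location does not, giving PER 1.0, LOC 0.0 and overall 0.5.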

- Custom NER training with the spaCy pipeline: see the notebook spacy_train.ipynb.

- Fine-tuned a BertForTokenClassification model with the transformers training pipeline. This required transforming the [text, idx]-based annotations (character offsets, which would have been best suited to spaCy) into [tokens, IOB] entity tag ids aligned with the tokenized input, to be used as labels for training.

See https://www.geeksforgeeks.org/nlp-iob-tags/ for IOB tagging.
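The first half of that transformation, from character-offset spans to word-level IOB tags, might look like the sketch below. It assumes simple whitespace tokenization (the word tokenizer the project actually used is not stated), entities given as `(start, end, label)` tuples with an exclusive end, and start offsets that have already been corrected. `char_spans_to_iob` is a hypothetical name.

```python
import re
from typing import List, Tuple

Entity = Tuple[int, int, str]  # (start_char, end_char_exclusive, label)

def char_spans_to_iob(text: str, entities: List[Entity]) -> Tuple[List[str], List[str]]:
    """Split text on whitespace and give every word an IOB tag from the character spans."""
    tokens: List[str] = []
    tags: List[str] = []
    for match in re.finditer(r"\S+", text):
        tok_start, tok_end = match.start(), match.end()
        tag = "O"
        for start, end, label in entities:
            if tok_start < end and tok_end > start:  # word overlaps this entity
                # The first word of an entity gets B-, later words get I-.
                tag = ("B-" if tok_start <= start else "I-") + label
                break
        tokens.append(match.group())
        tags.append(tag)
    return tokens, tags
```

For `"Alice moved to New York City"` with entities `[(0, 5, "PER"), (15, 28, "LOC")]`, this yields the tags `B-PER O O B-LOC I-LOC I-LOC`. These word-level tags still have to be expanded to BERT's subtokens before training.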

- Challenges with transformers training when moving from the (index, text) combination to (tokens, IOB):

- First, the transformers tokenizer differs from the tokenizer that would have been used to create the IOB-format data, which causes a mismatch between the lengths of the input and the labels during training.

- The subtokens generated by the transformers tokenizer have to be handled by writing rules that correctly assign 'B-' and 'I-' tags to the newly generated token list.
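One common rule for the subtoken problem is sketched below: the first subtoken of a word keeps the word's tag, continuation subtokens of a `B-` word become `I-` of the same type, and special tokens get `-100`, the label id ignored by the loss in transformers token classification. This is an illustration, not necessarily the exact rules used here; `align_labels_to_subtokens` and the `label2id` mapping are hypothetical.

```python
from typing import Dict, List, Optional

IGNORE_INDEX = -100  # label id ignored by the token-classification loss

def align_labels_to_subtokens(
    word_ids: List[Optional[int]],
    word_tags: List[str],
    label2id: Dict[str, int],
) -> List[int]:
    """Expand word-level IOB tags to one label id per subtoken."""
    labels: List[int] = []
    previous_word: Optional[int] = None
    for word_id in word_ids:
        if word_id is None:
            # special tokens such as [CLS] and [SEP]
            labels.append(IGNORE_INDEX)
        elif word_id != previous_word:
            # first subtoken of a word keeps the word's tag
            labels.append(label2id[word_tags[word_id]])
        else:
            # continuation subtoken: a B- tag becomes I- of the same entity type
            tag = word_tags[word_id]
            if tag.startswith("B-"):
                tag = "I-" + tag[2:]
            labels.append(label2id[tag])
        previous_word = word_id
    return labels
```

With a fast tokenizer from transformers, `word_ids` can be obtained from `tokenizer(tokens, is_split_into_words=True, truncation=True).word_ids()`, so the labels always have exactly the same length as the model input.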

- Trained the continual-learning models and the (G1+G2+G3) model within a loop pipeline that iterates over model checkpoints: each iteration's output checkpoint is pushed to the Hugging Face Hub and then used as model_checkpoint_in in the subsequent training iteration.
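The checkpoint chaining in that loop can be sketched independently of the training code. Here `train_and_push` is a hypothetical callable standing in for the real step, which would presumably load `checkpoint_in` with `AutoModelForTokenClassification.from_pretrained`, train it with `Trainer`, and push the result with `trainer.push_to_hub()`. The base checkpoint `bert-base-cased` is an assumption; the repo ids are the ones listed under the model links.

```python
from typing import Any, Callable, List, Sequence

def run_continual_pipeline(
    base_checkpoint: str,
    train_sets: Sequence[Any],
    repo_ids: Sequence[str],
    train_and_push: Callable[[str, Any, str], str],
) -> List[str]:
    """Train one model per dataset, each starting from the previous pushed checkpoint."""
    checkpoint_in = base_checkpoint
    pushed: List[str] = []
    for train_set, repo_id in zip(train_sets, repo_ids):
        # hypothetical step: load checkpoint_in, fine-tune on train_set, push to repo_id
        checkpoint_out = train_and_push(checkpoint_in, train_set, repo_id)
        pushed.append(checkpoint_out)
        checkpoint_in = checkpoint_out  # the next iteration starts from this model
    return pushed
```

For example, `run_continual_pipeline("bert-base-cased", [t1_data, t2_data, t3_data], ["raunak6898/bert-finetuned-ner-t1", "raunak6898/bert-finetuned-ner-t2", "raunak6898/bert-finetuned-ner-t3"], train_and_push)` chains T1 into T2 into T3, while the all-data model is a single `train_and_push` call that starts from the base checkpoint instead.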

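- Environment setup:

Create a virtual environment with `python -m venv .venv`, activate it (`source .venv/bin/activate` on Linux/macOS, `.venv\Scripts\activate` on Windows), then run `pip install -r requirements.txt`.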

- See train_new.py for running further trainings and evaluating new iterative models (two separate functions are provided for this), and utils.py for utility functions.

- Links to the trained Hugging Face models:

T1 - https://huggingface.co/raunak6898/bert-finetuned-ner-t1

T2 - https://huggingface.co/raunak6898/bert-finetuned-ner-t2

T3 - https://huggingface.co/raunak6898/bert-finetuned-ner-t3

T4 (all data trained together) - https://huggingface.co/raunak6898/bert-finetuned-ner-all_data

- CSV of the metrics calculated on the trained models.

- Training code: see the documented notebook training_notebooks.ipynb (kindly ignore rendering issues), and the functional script train_new.py for new training and evaluation.

- The 3 datasets are mostly clean, with no missing data, except for a few entity label indices spilling over the text; these are cleaned and documented in the training notebook.
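A minimal sketch of one way to catch such spans, assuming each entity is a `(start, end, label)` tuple of character offsets with an exclusive end. It simply drops spans that fall outside the text, which may differ from the cleaning actually done in the notebook; `drop_spilling_entities` is a hypothetical name.

```python
from typing import List, Tuple

Entity = Tuple[int, int, str]  # (start_char, end_char_exclusive, label)

def drop_spilling_entities(text: str, entities: List[Entity]) -> List[Entity]:
    """Keep only non-empty entity spans that lie inside the text."""
    return [(s, e, lbl) for s, e, lbl in entities if 0 <= s < e <= len(text)]
```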

- Another important finding is that the start character index of every entity is offset by one position to the right, while the end index is correct, so the entity text has to be fetched using [start_idx-1].
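The offset correction can be applied either when reading an entity's text or once, up front, to every span. The sketch below assumes `(start, end, label)` tuples with an exclusive end index; `entity_text` and `fix_start_offsets` are hypothetical helpers, not functions from utils.py.

```python
from typing import List, Tuple

Entity = Tuple[int, int, str]  # (start, end, label); end assumed exclusive

def entity_text(text: str, start: int, end: int) -> str:
    """Fetch an entity's text, compensating for the start index stored one position too far right."""
    return text[start - 1:end]

def fix_start_offsets(entities: List[Entity]) -> List[Entity]:
    """Shift every stored start index one position left so spans can be sliced directly."""
    return [(start - 1, end, label) for start, end, label in entities]
```

For `"Alice moved to New York City"` with stored spans `(1, 5, "PER")` and `(16, 28, "LOC")`, `entity_text` returns `"Alice"` and `"New York City"`. Correcting the offsets exactly once, before any conversion to IOB tags, avoids accidentally shifting a span twice.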

- GitHub: https://github.com/rauni-iitr

- LinkedIn: https://www.linkedin.com/in/raunak-7068/