Face recognition is one of the most important biometric recognition techniques. It is relatively simple to set up and covers an extensive range of applications, ranging from surveillance to digital marketing.

- To design a real-time face recognition system that identifies people across the BITS Pilani university campus. Live video footage is provided as input by the 100+ CCTV cameras installed at key locations across the campus.

- To design a web portal that can recognize people by their faces, mark their attendance, and record their entry and exit times.

We use the data set obtained from the Student Welfare Division (SWD), BITS Pilani. It contains the photo, ID number, name, and hostel of each of the 5000+ students registered at the Pilani campus from the academic years 2014 to 2018.

Our algorithm is divided into three main parts:

- Preprocessing

- Model training

- Web application development

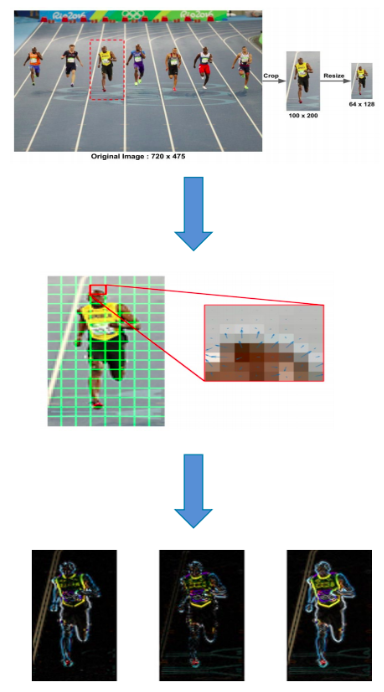

- The live video input from the CCTV camera is split into frames at a rate of 30 frames per second.
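As a rough illustration of the frame-splitting step, the sketch below reads frames with OpenCV and tags each one with a timestamp. It assumes the `cv2` package is installed, that `source` (a hypothetical file path or stream URL) can be opened by `cv2.VideoCapture`, and that the stream really delivers 30 frames per second. The function names are illustrative and are not taken from the project.

```python
from typing import Iterator, Tuple


def frame_timestamps(fps: float, duration_s: float) -> Iterator[Tuple[int, float]]:
    """Yield (frame_index, timestamp_in_seconds) for a stream sampled at `fps`."""
    n_frames = int(round(fps * duration_s))
    for index in range(n_frames):
        yield index, index / fps


def read_frames(source, fps: float = 30.0):
    """Yield (timestamp, frame) pairs from a video source.

    `source` is a hypothetical path or stream URL; `fps` is assumed, not measured.
    """
    import cv2  # imported lazily so frame_timestamps works without OpenCV

    capture = cv2.VideoCapture(source)
    try:
        index = 0
        while True:
            ok, frame = capture.read()
            if not ok:  # end of stream or read failure
                break
            yield index / fps, frame
            index += 1
    finally:
        capture.release()
```

Each yielded frame can then be handed to the face detection step.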

We use two methods to detect faces in each video frame:

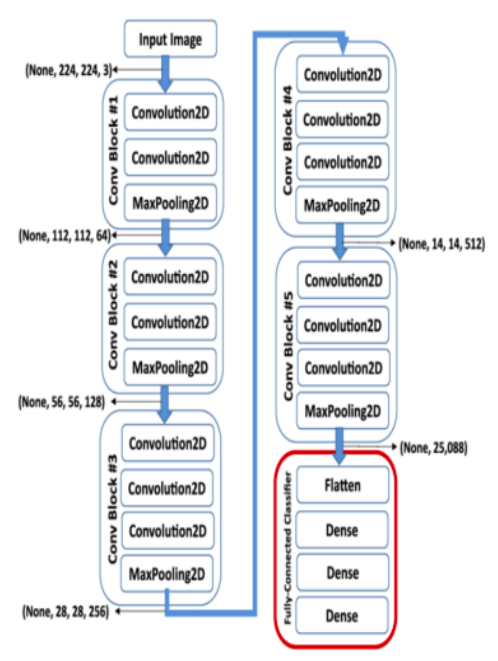

- The first method uses Convolutional Neural Networks (CNNs) for face detection. It generates a bounding box around the face.

- To further increase the accuracy, we also detect the face using a HOG (Histogram of Oriented Gradients) based detector.

Sixty-eight landmark points are identified on the face using the Dlib Python library. These landmark points are crucial for the next phase.
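The detection and landmark steps could be sketched with Dlib roughly as follows. This is a minimal sketch under several assumptions: the model file names are the ones Dlib commonly distributes and must be downloaded separately, the input is an RGB image array, and the HOG detector is used here as a fallback when the CNN finds nothing. The README does not say how the two detectors are actually combined.

```python
from typing import List, Tuple


def shape_to_points(shape) -> List[Tuple[int, int]]:
    """Convert a Dlib landmark result into a list of (x, y) tuples."""
    return [(shape.part(i).x, shape.part(i).y) for i in range(shape.num_parts)]


def detect_landmarks(rgb_image,
                     cnn_model_path="mmod_human_face_detector.dat",
                     landmark_model_path="shape_predictor_68_face_landmarks.dat"):
    """Return one list of 68 (x, y) landmarks per detected face.

    Both model paths are assumptions. Models are loaded on every call to keep
    the sketch short; a real system would load them once.
    """
    import dlib  # imported lazily so shape_to_points works without Dlib

    cnn_detector = dlib.cnn_face_detection_model_v1(cnn_model_path)
    hog_detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor(landmark_model_path)

    # CNN detections wrap their bounding box in a `.rect` attribute.
    boxes = [detection.rect for detection in cnn_detector(rgb_image, 1)]
    if not boxes:
        # Assumed combination strategy: fall back to the HOG detector.
        boxes = list(hog_detector(rgb_image, 1))

    return [shape_to_points(predictor(rgb_image, box)) for box in boxes]
```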


The goal is to warp and transform the input image (coordinates) onto an output coordinate space so that each face in the output coordinate space is:

- Centred in the image.

- Rotated such that the eyes lie on a horizontal line (i.e., the eyes share the same y-coordinate).

- Scaled such that the faces are approximately identical in size.


The facial landmark points identified above are used for alignment: we apply an affine transformation computed from them. Facial recognition algorithms perform better on aligned faces.
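To make the alignment goals concrete, here is a minimal sketch that builds a 2x3 affine matrix (rotation, uniform scale, and translation) from the two eye centres. The output size, the eye spacing, and the vertical eye position are illustrative assumptions, and the function names are hypothetical. In the common 68-point layout the eye centres can be estimated by averaging landmarks 36 to 41 and 42 to 47.

```python
import math


def eye_alignment_matrix(left_eye, right_eye, out_size=256,
                         eye_dist_frac=0.4, eye_y_frac=0.35):
    """Build a 2x3 affine matrix that levels, scales, and centres the eyes.

    `left_eye` is the eye that appears on the left of the image. The three
    keyword defaults are assumed values, not taken from the project.
    """
    (lx, ly), (rx, ry) = left_eye, right_eye
    dx, dy = rx - lx, ry - ly

    angle = math.atan2(dy, dx)                                # tilt of the eye line
    scale = (eye_dist_frac * out_size) / math.hypot(dx, dy)   # normalise eye distance

    a = scale * math.cos(angle)
    b = scale * math.sin(angle)
    cx, cy = (lx + rx) / 2.0, (ly + ry) / 2.0                 # midpoint between the eyes
    tx, ty = out_size / 2.0, eye_y_frac * out_size            # where the midpoint should land

    return [[a, b, tx - a * cx - b * cy],
            [-b, a, ty + b * cx - a * cy]]


def apply_affine(matrix, point):
    """Apply a 2x3 affine matrix to a single (x, y) point."""
    x, y = point
    (a, b, c), (d, e, f) = matrix
    return (a * x + b * y + c, d * x + e * y + f)


matrix = eye_alignment_matrix((100, 120), (160, 150))
aligned_left = apply_affine(matrix, (100, 120))
aligned_right = apply_affine(matrix, (160, 150))
```

After the transform both eyes share the same y-coordinate and sit symmetrically about the horizontal centre of the output. To warp an actual image, the matrix could be converted to a NumPy float array and passed to `cv2.warpAffine`.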

- Our model uses only one image per individual to establish that person's identity.

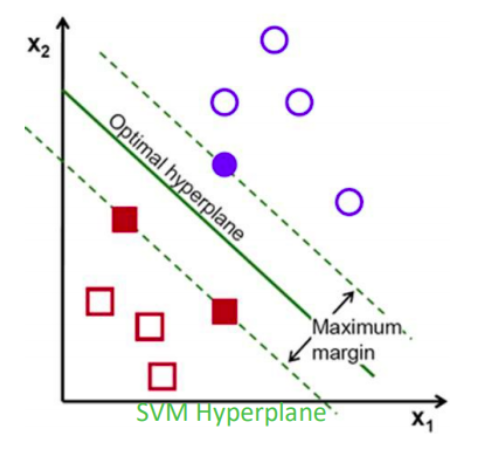

- Each image goes through the entire data preprocessing step, and a 128-dimensional embedding is generated for it. These embeddings are then fed into the SVM classifier for training.

- The model is trained using a triplet loss function.

- Finally, after training the model and generating the embeddings, we recognize different faces using a Support Vector Machine (SVM) based classifier.

- A test image’s embedding is generated in the same fashion and then compared with the stored embeddings to classify it.
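For readers unfamiliar with the triplet loss mentioned above, the following sketch shows its usual form: the anchor embedding should be closer to a positive (same person) than to a negative (different person) by at least a margin. The squared Euclidean distance and the margin of 0.2 are assumptions borrowed from common practice, and short vectors stand in for the 128-dimensional embeddings.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge-style triplet loss; `margin` is an assumed value.

    The loss is zero once the negative is farther from the anchor than the
    positive by at least `margin` (in squared distance).
    """
    gap = squared_distance(anchor, positive) - squared_distance(anchor, negative)
    return max(0.0, gap + margin)


# Toy 2-dimensional embeddings in place of real 128-dimensional ones.
anchor = [0.0, 0.0]
positive = [0.1, 0.0]
easy_negative = [1.0, 0.0]   # far away, so it contributes no loss
hard_negative = [0.2, 0.0]   # too close, so it is penalised

easy_loss = triplet_loss(anchor, positive, easy_negative)
hard_loss = triplet_loss(anchor, positive, hard_negative)
```

Minimising this loss over many triplets pushes embeddings of the same person together and embeddings of different people apart, which is what makes a simple classifier on top of the embeddings workable.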

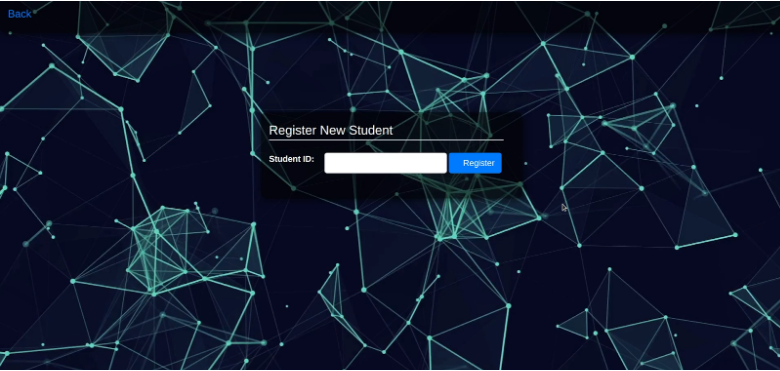

We built a web platform where students can register themselves and mark their attendance once registered.

The possible reasons for the errors could be:

- Change in the person’s face over time - considerable facial change from the photo used in training.

- Two or more similar-looking people - If there are multiple people with similar faces, then the model may wrongly classify a person as someone else.

- Lack of training data - Deep learning networks are known to become more accurate as the amount of training data increases. Since we have only one image per person, there is scope to improve the model with more training data.

- Clone the project and download the source code.

- Once inside the folder, run the following command: `python3 app.py`

- Go to `localhost:5000` in your browser.

- First, register yourself by clicking on `Register`.

- Mark your attendance by clicking on `Start Surveillance`.