© 2023, Anyscale Inc. All Rights Reserved

LLMs have gained immense popularity in recent months. An entirely new ecosystem of pre-trained models and tools has emerged to streamline the process of building LLM-based applications.

Here, you will build a question answering (QA) service designed to run locally. You will then learn how to scale your application with Ray and Anyscale so that it runs in the cloud. Finally, you will learn how to use modern tools to run your application on a production-grade platform.

- Use libraries like Ray, HuggingFace, and LangChain to build LLM-based applications based on open-source code, models, and data.

- Learn about scaling LLM fine-tuning and inference, along with trade-offs.

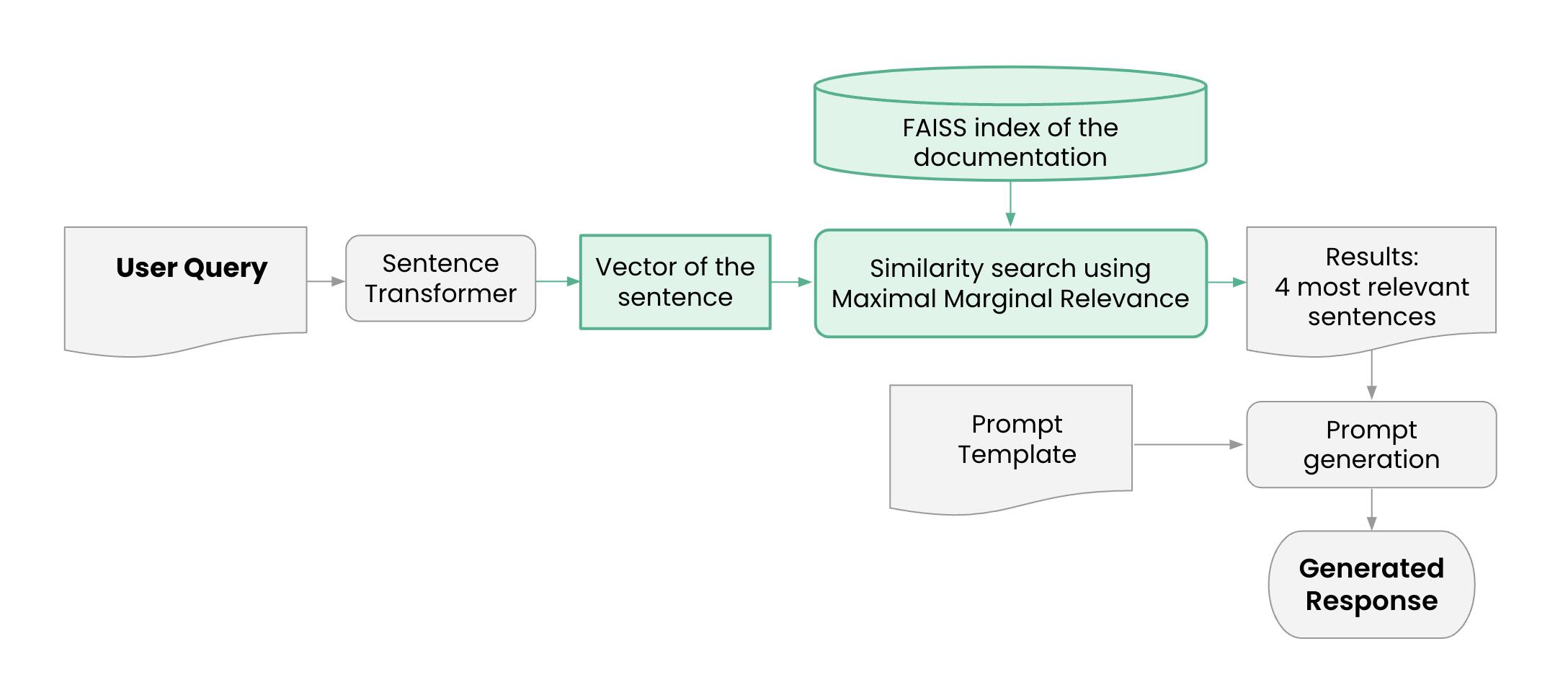

- Use embedding models and vector stores.

- Learn about using modern deployment tools to run your application online and continually improve it.

Learn more and get involved with the Ray community of developers and researchers:

- Official Ray site: Browse the ecosystem and use this site as a hub for the information you need to get going and building with Ray.

- Join the community on Slack: Find friends to discuss your new learnings in our Slack space.

- Use the discussion board: Ask questions, follow topics, and view announcements on this community forum.

- Join a meetup group: Tune in to meetups to listen to compelling talks, get to know other users, and meet the team behind Ray.

- Open an issue: Ray is constantly evolving to improve the developer experience. Submit feature requests and bug reports, and get help via GitHub issues.

- Become a Ray contributor: We welcome community contributions that improve our documentation and the Ray framework.