Airflow + Spark Docker environment

A containerized local development environment built with Docker, Airflow and Spark.

Table of Contents

- General Information

- Useful Articles

- Technologies Used

- Screenshots

- Setup

- Usage

- Project Status

- Room for Improvement

- Contact

General Information

- This project aims to provide a quick and easy way to provision a local development environment with Airflow and Spark for data enthusiasts.

- We use the docker-compose.yaml published on the Airflow website as a base, extend the Airflow image to install our requirements, and adapt the services in docker-compose.yaml to our needs.
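To compare this repository's modified compose file with the upstream one it was based on, the upstream file can be downloaded for the pinned Airflow version. This is a minimal sketch, assuming the per-version URL layout used in the Airflow "Running Airflow in Docker" guide; the output filename docker-compose.upstream.yaml and the FETCH_COMPOSE switch are hypothetical names chosen for this example, and the download is opt-in so it never overwrites the repository's own docker-compose.yaml.

```shell
#!/usr/bin/env bash
# Sketch: fetch the upstream docker-compose.yaml for a given Airflow version.
# Assumption: the per-version URL layout of the Airflow docs site.
AIRFLOW_VERSION="${AIRFLOW_VERSION:-2.3.4}"

# Print the upstream compose URL for the version passed as $1.
compose_url() {
  printf 'https://airflow.apache.org/docs/apache-airflow/%s/docker-compose.yaml\n' "$1"
}

# Opt-in download (hypothetical FETCH_COMPOSE switch) into a separate file,
# so the repository's customized docker-compose.yaml is left untouched.
if [ "${FETCH_COMPOSE:-0}" = "1" ]; then
  # -f: fail on HTTP errors, -L: follow redirects, -o: output file
  curl -fL -o docker-compose.upstream.yaml "$(compose_url "$AIRFLOW_VERSION")"
fi
```

With the file downloaded, `diff docker-compose.upstream.yaml docker-compose.yaml` shows which services were adapted.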

Useful Articles

- Running Airflow in Docker

- Building the Airflow image

- Docker Image for Apache Airflow

- Airflow Architecture Overview

- Apache Spark packaged by Bitnami

Technologies Used

- Docker / Docker Compose

- Airflow 2.3.4 (Docker image)

- Spark, latest (Docker image)
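Since everything runs in containers, the host only needs a few command-line tools. A quick preflight check can be sketched as below; the helper name require_tools is hypothetical, and the usage line assumes the Compose v2 plugin (`docker compose`), while older installations ship the standalone `docker-compose` binary instead.

```shell
#!/usr/bin/env bash
# Sketch: report every required tool that is missing from PATH.
# Returns 0 when all tools are found, 1 otherwise.
require_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example usage (assumes Compose v2; use `docker-compose version` on v1):
# require_tools docker git && docker compose version
```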

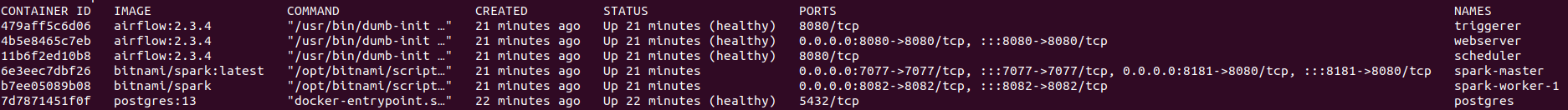

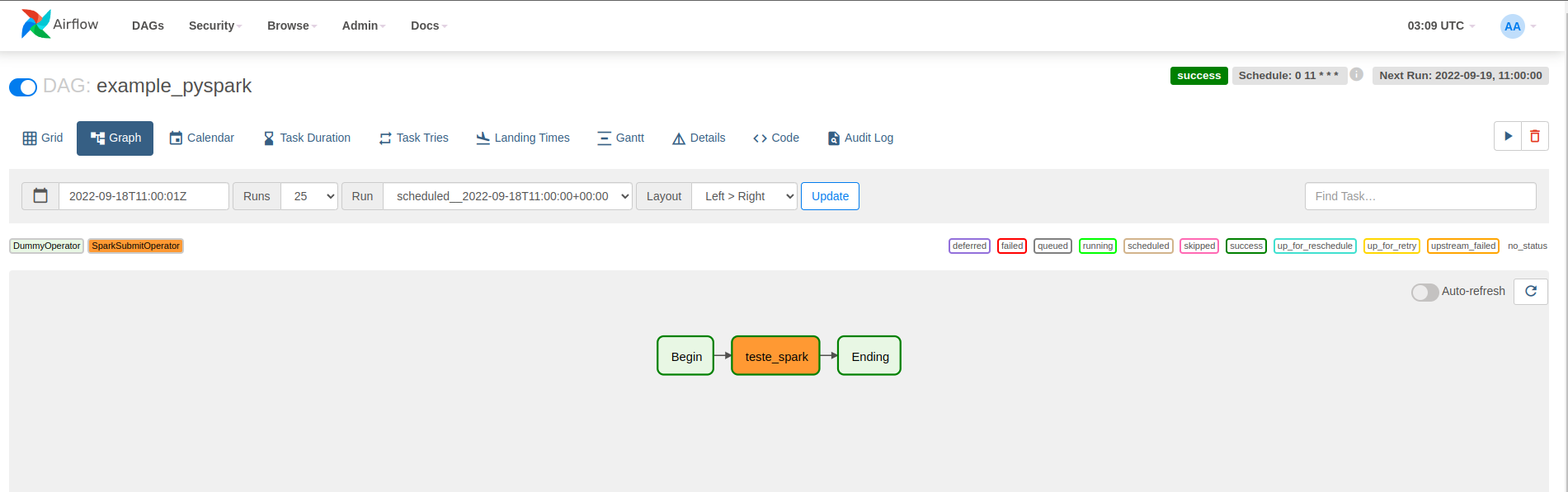

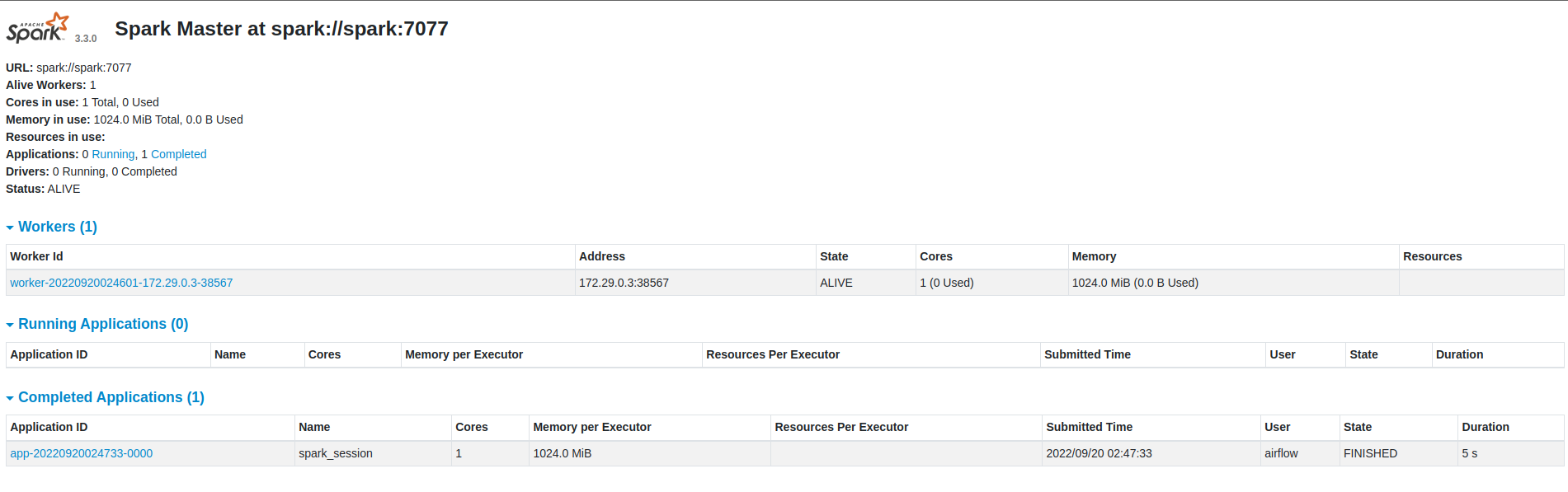

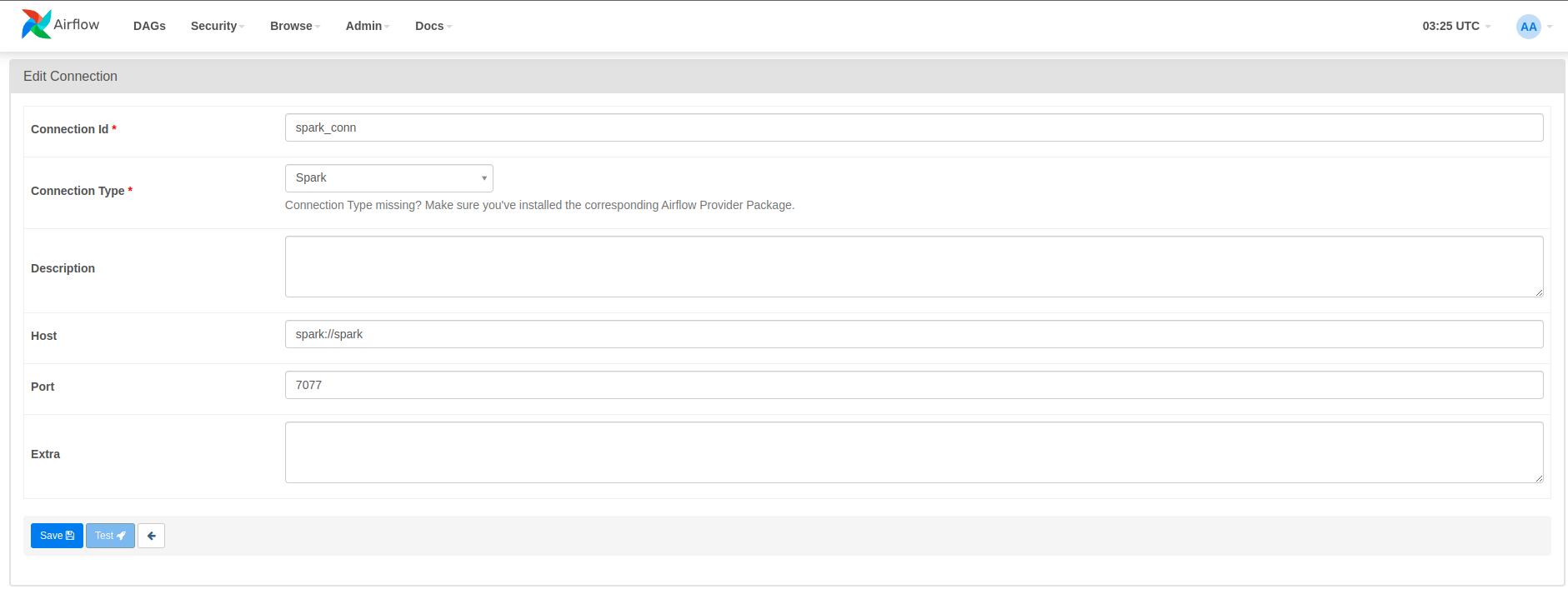

Screenshots

Setup

Usage

- Clone the repository and enter it:

git clone git@github.com:razevedo1994/airflow_and_spark_docker_environment.git

cd airflow_and_spark_docker_environment

- Create the local logs and plugins folders:

mkdir -p ./logs ./plugins

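- Write your user ID to the .env file, so files created by the Airflow containers in the mounted folders are owned by your user:

echo -e "AIRFLOW_UID=$(id -u)" > .env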
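The command above overwrites .env entirely, which drops any other variables you may have added there. If you keep extra settings in .env, an idempotent variant that only replaces the AIRFLOW_UID line can be sketched as follows; set_env_var is a hypothetical helper, not part of this repository.

```shell
#!/usr/bin/env bash
# Sketch: set KEY=VALUE in an env file, replacing an existing KEY= line
# or appending one, while keeping all other lines.
# Usage: set_env_var FILE KEY VALUE
# Note: KEY is used in a grep regex, so it should be a plain identifier.
set_env_var() {
  local file="$1" key="$2" value="$3" tmp
  touch "$file"
  tmp="$(mktemp)"
  # Copy every line except the old KEY= line; grep exits 1 when nothing is left.
  grep -v "^${key}=" "$file" > "$tmp" || true
  printf '%s=%s\n' "$key" "$value" >> "$tmp"
  # The rewritten file keeps mktemp's restrictive (0600) permissions.
  mv "$tmp" "$file"
}

# Example usage:
# set_env_var .env AIRFLOW_UID "$(id -u)"
```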

- Make the helper scripts executable:

chmod +x ./build_environment.sh ./reset_environment.sh

- Build the environment:

./build_environment.sh

- To access the Airflow UI:

http://localhost:8080/
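The Airflow webserver can take a while to come up after the build finishes. A small polling loop avoids refreshing the browser by hand; this is a sketch, assuming curl is installed, and wait_for_url is a hypothetical helper. Airflow 2 webservers expose a /health endpoint, which returns HTTP 200 once the webserver answers; the scheduler and metadatabase status are reported inside the JSON body rather than in the status code.

```shell
#!/usr/bin/env bash
# Sketch: poll a URL until it answers without an HTTP error (status < 400)
# or the attempts run out.
# Usage: wait_for_url URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_url() {
  local url="$1" attempts="${2:-30}" delay="${3:-5}" i=1
  while [ "$i" -le "$attempts" ]; do
    # -f: treat HTTP >= 400 as failure, -sS: quiet but show errors
    if curl -fsS -o /dev/null "$url" 2>/dev/null; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "timed out waiting for $url" >&2
  return 1
}

# Example usage:
# wait_for_url http://localhost:8080/health 60 5 && echo "Airflow webserver is up"
```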

- To access Spark Master UI:

http://localhost:8181/
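Besides the HTML page, the Spark standalone master web UI serves its cluster state as JSON under /json/, which is handy for checking from a script that workers have registered. This is a sketch under assumptions: that port 8181 maps to the master web UI as in this compose setup, and that the JSON contains an "aliveworkers" field; verify the field name against the Spark version in your image. The alive_workers helper is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: read the Spark master's JSON status on stdin and print the
# number of alive workers. Works for single-line and pretty-printed JSON
# as long as the key and its value sit on the same line.
# Assumption: the JSON exposes an "aliveworkers" field.
alive_workers() {
  sed -n 's/.*"aliveworkers"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Example usage:
# curl -fsS http://localhost:8181/json/ | alive_workers
```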

Attention: to clean up your environment, run ./reset_environment.sh. Be careful: this command deletes all your Docker images and containers.
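Because the reset is destructive, it can help to put a confirmation prompt in front of it. The sketch below uses a hypothetical confirm helper; the commented alternative, `docker compose down --volumes --remove-orphans`, is a narrower teardown that only stops and removes this project's containers, networks and named volumes (for example the Airflow metadata database volume), and assumes it is run from the folder containing docker-compose.yaml.

```shell
#!/usr/bin/env bash
# Sketch: ask a yes/no question on stderr and read the answer from stdin.
# Succeeds only for y, Y, yes or YES; any other answer or EOF means "no".
confirm() {
  local reply
  printf '%s [y/N] ' "$1" >&2
  read -r reply || return 1
  case "$reply" in
    y|Y|yes|YES) return 0 ;;
    *) return 1 ;;
  esac
}

# Example usage:
# confirm "Delete ALL Docker images and containers?" && ./reset_environment.sh
# Narrower, project-scoped alternative:
# docker compose down --volumes --remove-orphans
```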

Project Status

Project is: in progress

Room for Improvement

--

Contact

Created by Rodrigo Azevedo - feel free to contact me!