Detecting Not-Suitable-For-Work (NSFW) content is a high-demand task in computer vision. While there are many types of NSFW content, this project focuses on pornographic images and videos.

The Yahoo Open-NSFW model, originally developed with the Caffe framework, has been a favourite choice, but the work is now discontinued and Caffe is also becoming less popular. Please see the description on the Yahoo project page for the context, definitions, and model training details.

This Open-NSFW 2 project provides a TensorFlow 2 implementation of the Yahoo model, with references to its previous third-party TensorFlow 1 implementation.

A simple API is provided for making predictions on images and videos.

Python 3.8 or above is required. Tested with 3.8 and 3.9.

The best way to install Open-NSFW 2 with its dependencies is from PyPI:

    python3 -m pip install --upgrade opennsfw2

Alternatively, to obtain the latest version from this repository:

    git clone git@github.com:bhky/opennsfw2.git
    cd opennsfw2
    python3 -m pip install .

Quick examples for getting started are given below. For more details, please refer to the API section.

For images:

    import opennsfw2 as n2

    # To get the NSFW probability of a single image.
    image_path = "path/to/your/image.jpg"
    nsfw_probability = n2.predict_image(image_path)

    # To get the NSFW probabilities of a list of images.
    # This is better than looping with `predict_image` as the model will only be instantiated once
    # and batching is used during inference.
    image_paths = [
        "path/to/your/image1.jpg",
        "path/to/your/image2.jpg",
        # ...
    ]
    nsfw_probabilities = n2.predict_images(image_paths)

For video:

    import opennsfw2 as n2

    # The video can be in any format supported by OpenCV.
    video_path = "path/to/your/video.mp4"

    # Return two lists giving the elapsed time in seconds and the NSFW probability of each frame.
    elapsed_seconds, nsfw_probabilities = n2.predict_video_frames(video_path)

For users familiar with NumPy and TensorFlow / Keras:

    import numpy as np
    import opennsfw2 as n2
    from PIL import Image

    # Load and preprocess image.
    image_path = "path/to/your/image.jpg"
    pil_image = Image.open(image_path)
    image = n2.preprocess_image(pil_image, n2.Preprocessing.YAHOO)
    # The preprocessed image is a NumPy array of shape (224, 224, 3).

    # Create the model.
    # By default, this call will search for the pre-trained weights file from path:
    # $HOME/.opennsfw2/weights/open_nsfw_weights.h5
    # If the file does not exist, it will be downloaded from this repository.
    # The model is a `tf.keras.Model` object.
    model = n2.make_open_nsfw_model()

    # Make predictions.
    inputs = np.expand_dims(image, axis=0)  # Add batch axis (for single image).
    predictions = model.predict(inputs)

    # The shape of predictions is (num_images, 2).
    # Each row gives [sfw_probability, nsfw_probability] of an input image, e.g.:
    sfw_probability, nsfw_probability = predictions[0]

`preprocess_image`: Apply necessary preprocessing to the input image.

- Parameters:
  - `pil_image` (`PIL.Image`): Input as a Pillow image.
  - `preprocessing` (`Preprocessing` enum, default `Preprocessing.YAHOO`): See preprocessing details.
- Return:
  - NumPy array of shape `(224, 224, 3)`.

`Preprocessing`: Enum class for preprocessing options.

- `Preprocessing.YAHOO`
- `Preprocessing.SIMPLE`

`make_open_nsfw_model`: Create an instance of the NSFW model, optionally with pre-trained weights from Yahoo.

- Parameters:
  - `input_shape` (`Tuple[int, int, int]`, default `(224, 224, 3)`): Input shape of the model; this should not be changed.
  - `weights_path` (`Optional[str]`, default `$HOME/.opennsfw2/weights/open_nsfw_weights.h5`): Path to the weights in HDF5 format to be loaded by the model. The weights file will be downloaded if it does not exist. If `None`, no weights will be downloaded or loaded into the model. Users can provide a path if the default is not preferred. The environment variable `OPENNSFW2_HOME` can also be used to indicate where the `.opennsfw2` directory should be located.
- Return:
  - `tf.keras.Model` object.
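How the default weights location could be resolved is sketched below. This is an illustration only: the helper name `default_weights_path` is hypothetical, and the assumption that `OPENNSFW2_HOME` simply replaces `$HOME` as the parent of the `.opennsfw2` directory is inferred from the parameter description above, not taken from the library's source.

```python
import os


def default_weights_path() -> str:
    """Hypothetical helper: build the default weights file path.

    Assumption: OPENNSFW2_HOME, when set, replaces the user's home
    directory as the parent of the `.opennsfw2` directory.
    """
    home = os.environ.get("OPENNSFW2_HOME", os.path.expanduser("~"))
    return os.path.join(home, ".opennsfw2", "weights", "open_nsfw_weights.h5")
```

A path built this way could be passed explicitly through the `weights_path` parameter if the default is not preferred.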

`predict_image`: End-to-end pipeline function from the input image to the predicted NSFW probability.

- Parameters:
  - `image_path` (`str`): Path to the input image file. The image format must be supported by Pillow.
  - `preprocessing`: Same as that in `preprocess_image`.
  - `weights_path`: Same as that in `make_open_nsfw_model`.
  - `grad_cam_path` (`Optional[str]`, default `None`): If not `None`, e.g., `cam.jpg`, a Gradient-weighted Class Activation Mapping (Grad-CAM) overlay plot will be saved, which highlights the important region(s) of the (preprocessed) input image that lead to the prediction.
  - `grad_cam_height` (`int`, default `512`): Height of the plot, only valid if `grad_cam_path` is not `None`.
  - `grad_cam_width` (`int`, default `512`): Width of the plot, only valid if `grad_cam_path` is not `None`.
  - `alpha` (`float`, default `0.5`): Opacity of the Grad-CAM layer of the plot, only valid if `grad_cam_path` is not `None`.
- Return:
  - `nsfw_probability` (`float`): The predicted NSFW probability of the image.

`predict_images`: End-to-end pipeline function from the input images to the predicted NSFW probabilities.

- Parameters:
  - `image_paths` (`Sequence[str]`): List of paths to the input image files. The image format must be supported by Pillow.
  - `batch_size` (`int`, default `16`): Batch size to be used for model inference.
  - `preprocessing`: Same as that in `preprocess_image`.
  - `weights_path`: Same as that in `make_open_nsfw_model`.
  - `grad_cam_paths` (`Optional[Sequence[str]]`, default `None`): If not `None`, the corresponding Grad-CAM plots for the input images will be saved. See the description in `predict_image`.
  - `grad_cam_height`: Same as that in `predict_image`.
  - `grad_cam_width`: Same as that in `predict_image`.
  - `alpha`: Same as that in `predict_image`.
- Return:
  - `nsfw_probabilities` (`List[float]`): Predicted NSFW probabilities of the images.
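Since the returned probabilities line up one-to-one with the input paths, a common follow-up is to flag the images above some cut-off. The sketch below assumes exactly that pairing; the helper `flag_images` and the `0.8` threshold are hypothetical and not part of this package, and the probabilities are placeholder values rather than real model output.

```python
from typing import List, Sequence, Tuple


def flag_images(
    image_paths: Sequence[str],
    nsfw_probabilities: Sequence[float],
    threshold: float = 0.8,  # Hypothetical cut-off; tune it on your own data.
) -> List[Tuple[str, float]]:
    """Return (path, probability) pairs at or above the threshold, highest first."""
    if len(image_paths) != len(nsfw_probabilities):
        raise ValueError("Paths and probabilities must have the same length.")
    flagged = [
        (path, probability)
        for path, probability in zip(image_paths, nsfw_probabilities)
        if probability >= threshold
    ]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Placeholder values standing in for the output of `n2.predict_images`.
paths = ["a.jpg", "b.jpg", "c.jpg"]
probabilities = [0.10, 0.95, 0.85]
print(flag_images(paths, probabilities))
```

Keeping the threshold as a parameter makes it easy to trade false positives against false negatives for a given use case.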

`predict_video_frames`: End-to-end pipeline function from the input video to predictions.

- Parameters:
  - `video_path` (`str`): Path to the input video source. The video format must be supported by OpenCV.
  - `frame_interval` (`int`, default `8`): A prediction is made on every `frame_interval`-th frame, starting from frame 1; e.g., if this is 8, predictions are made only on frames 1, 9, 17, etc.
  - `output_video_path` (`Optional[str]`, default `None`): If not `None`, e.g., `out.mp4`, an output MP4 video with the same frame size and frame rate as the input video will be saved via OpenCV. The predicted NSFW probability is printed on the top-left corner of each frame. Be aware that the output file size could be much larger than the input file size. This output video is for reference only.
  - `preprocessing`: Same as that in `preprocess_image`.
  - `weights_path`: Same as that in `make_open_nsfw_model`.
- Return:
  - Tuple of two `List[float]`, each with length equal to the number of video frames.
    - `elapsed_seconds`: Video elapsed time in seconds at each frame.
    - `nsfw_probabilities`: NSFW probability of each frame. For any `frame_interval > 1`, all frames without a prediction will be assumed to have the NSFW probability of the previous predicted frame.
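The frame sampling and fill-forward behaviour described above can be sketched in plain Python. This is only an illustration of the documented semantics, not the package's actual code; the helper names `sampled_frame_numbers` and `fill_forward` are hypothetical.

```python
from typing import List, Sequence


def sampled_frame_numbers(num_frames: int, frame_interval: int = 8) -> List[int]:
    """1-based numbers of the frames on which a prediction is made."""
    return list(range(1, num_frames + 1, frame_interval))


def fill_forward(
    sampled_probabilities: Sequence[float],
    num_frames: int,
    frame_interval: int = 8,
) -> List[float]:
    """Expand per-sample predictions to one probability per frame.

    Each frame without its own prediction reuses the probability of the
    most recent predicted frame.
    """
    expected = len(sampled_frame_numbers(num_frames, frame_interval))
    if len(sampled_probabilities) != expected:
        raise ValueError(f"Expected {expected} sampled probabilities.")
    return [
        sampled_probabilities[frame_index // frame_interval]
        for frame_index in range(num_frames)
    ]
```

For a 20-frame video with the default interval, predictions would be made on frames 1, 9 and 17, and the three values would be repeated over frames 1 to 8, 9 to 16 and 17 to 20 respectively.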

This implementation provides the following preprocessing options.

- `YAHOO`: The default option, which was used in Yahoo's original Caffe implementation and the later TensorFlow 1 implementation. The key steps are:
  - Resize the input Pillow image to `(256, 256)`.
  - Store the image as JPEG in memory and reload it as a NumPy image (this step is mysterious, but somehow it really makes a difference).
  - Crop the centre part of the NumPy image with size `(224, 224)`.
  - Swap the colour channels to BGR.
  - Subtract the training dataset mean value of each channel: `[104, 117, 123]`.
- `SIMPLE`: A simpler and probably more intuitive preprocessing option is also provided, but note that the model output probabilities will be different. The key steps are:
  - Resize the input Pillow image to `(224, 224)`.
  - Convert to a NumPy image.
  - Swap the colour channels to BGR.
  - Subtract the training dataset mean value of each channel: `[104, 117, 123]`.
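The array-side steps shared by both options (centre crop, channel swap, mean subtraction) can be sketched with NumPy as below. This is a rough illustration under stated assumptions, not the package's implementation: the Pillow resize and the in-memory JPEG round trip are omitted, the function names are hypothetical, and the input is a synthetic array standing in for an already resized RGB image.

```python
import numpy as np

# Per-channel mean in BGR order, as listed in the steps above.
CHANNEL_MEAN_BGR = np.array([104.0, 117.0, 123.0], dtype=np.float32)


def centre_crop(image: np.ndarray, size: int = 224) -> np.ndarray:
    """Crop the central (size, size) region of an (H, W, 3) array."""
    height, width = image.shape[:2]
    top = (height - size) // 2
    left = (width - size) // 2
    return image[top:top + size, left:left + size]


def to_model_input(rgb_image: np.ndarray) -> np.ndarray:
    """Convert an RGB uint8 array to BGR float32 with the mean subtracted."""
    bgr = rgb_image[..., ::-1].astype(np.float32)
    return bgr - CHANNEL_MEAN_BGR


# Stand-in for a resized (256, 256) RGB image; every pixel is (R, G, B) = (10, 20, 30).
rgb_256 = np.tile(np.array([10, 20, 30], dtype=np.uint8), (256, 256, 1))

# YAHOO-like path: crop 256 -> 224, then swap channels and subtract the mean.
yahoo_like = to_model_input(centre_crop(rgb_256))
print(yahoo_like.shape, yahoo_like[0, 0])
```

For the `SIMPLE` path the image is resized straight to `(224, 224)`, so `to_model_input` would be applied without the crop.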

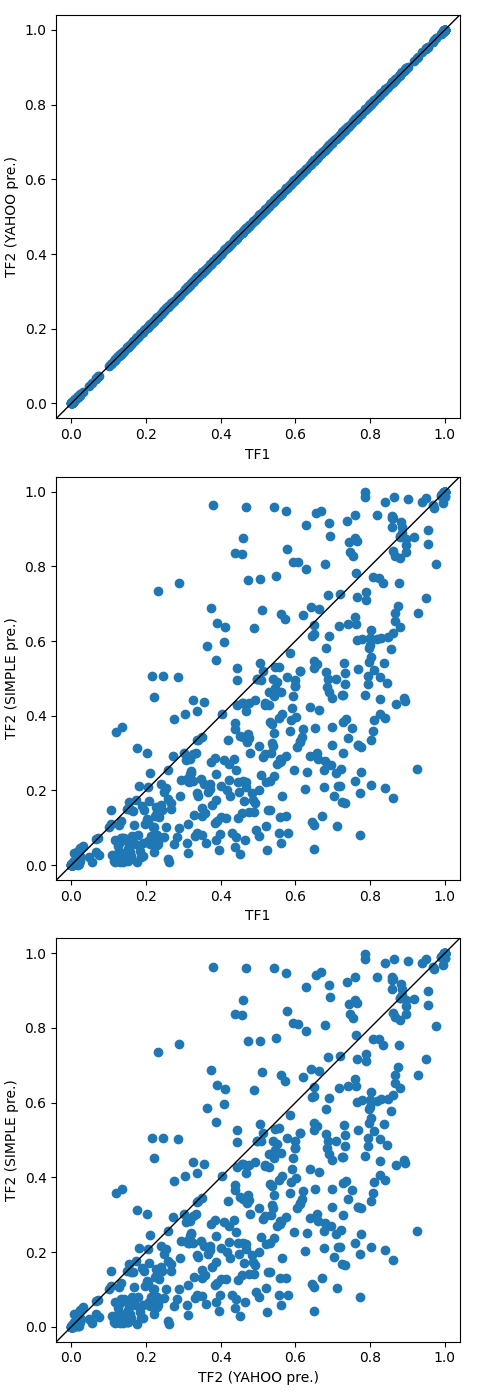

Using 521 private test images, the NSFW probabilities given by three different settings are compared:

- TensorFlow 1 implementation with

YAHOOpreprocessing. - TensorFlow 2 implementation with

YAHOOpreprocessing. - TensorFlow 2 implementation with

SIMPLEpreprocessing.

The following figure shows the result:

The current TensorFlow 2 implementation with `YAHOO` preprocessing reproduces the well-tested TensorFlow 1 result, with only small floating-point errors.

With `SIMPLE` preprocessing the result is different: the model tends to give lower probabilities on the current test images.

Note that this comparison does not establish which preprocessing method is "better"; it only shows their discrepancies. However, users who prefer the original Yahoo result should use the default `YAHOO` preprocessing.
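Readers who want to run a similar comparison on their own images could summarise the discrepancy between two settings as sketched below. The helper `summarise_differences` is hypothetical, and the numbers are placeholders chosen for illustration, not values from the test set described above.

```python
from statistics import mean
from typing import Dict, Sequence


def summarise_differences(
    reference: Sequence[float], candidate: Sequence[float]
) -> Dict[str, float]:
    """Summarise per-image differences (candidate minus reference)."""
    if not reference or len(reference) != len(candidate):
        raise ValueError("Inputs must be non-empty and of equal length.")
    differences = [c - r for r, c in zip(reference, candidate)]
    return {
        "max_abs_difference": max(abs(d) for d in differences),
        "mean_difference": mean(differences),
    }


# Placeholder probabilities, e.g. YAHOO as reference and SIMPLE as candidate.
reference_probabilities = [0.25, 0.5, 0.75]
candidate_probabilities = [0.25, 0.25, 0.5]
summary = summarise_differences(reference_probabilities, candidate_probabilities)
print(summary)
```

A negative mean difference indicates that the candidate setting tends to give lower probabilities than the reference.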