This is the official repo for the paper Information-Theoretic Probing with Minimum Description Length.

Read the official blog post for the details!

To measure how well pretrained representations (BERT, ELMo) encode some linguistic property, it is common to use the accuracy of a probe, i.e. a classifier trained to predict the property from the representations. However, such probes often fail to adequately reflect differences between representations, and their results can change depending on the probe settings.

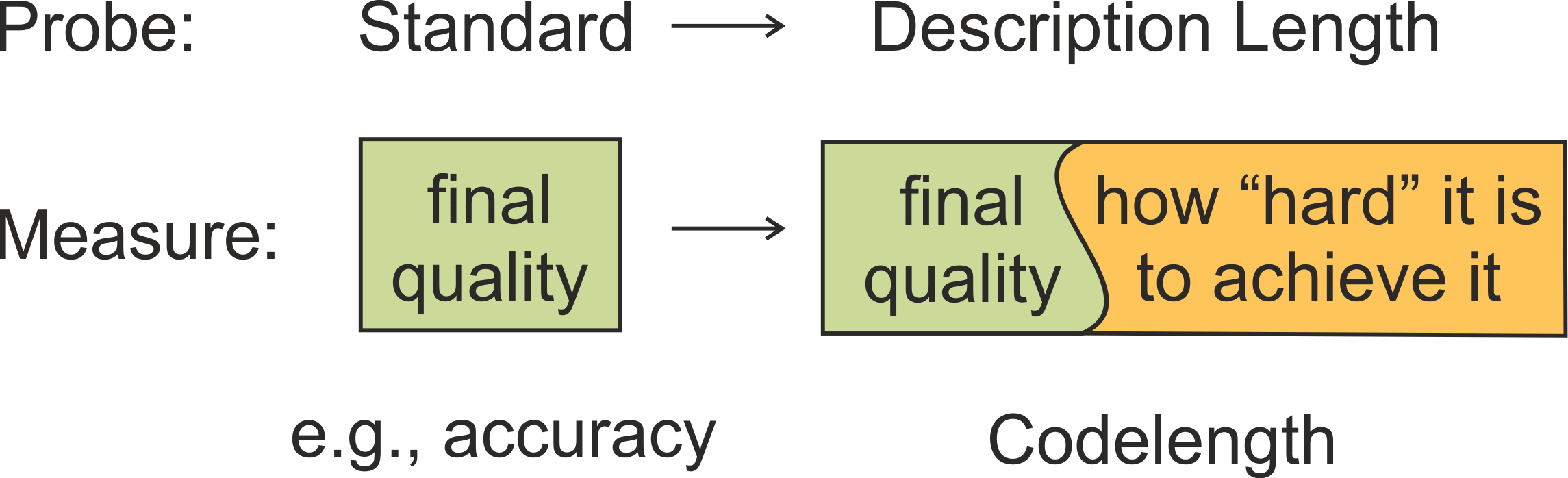

We look at this task from the information-theoretic perspective. Our idea can be summarized in two sentences.

Formally, as an alternative to the standard probes,

- we propose information-theoretic probing which measures minimum description length (MDL) of labels given representations;
- we show that MDL characterizes both probe quality and the amount of effort needed to achieve it;
- we explain how to easily measure MDL on top of standard probe-training pipelines;
- we show that results of MDL probes are more informative and stable than those of standard probes.
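To give a feel for how a description length can be computed from an ordinary training pipeline, here is a minimal sketch of an online (prequential) codelength estimate. It assumes the data is split into consecutive blocks, the first block is transmitted with a uniform code over the labels, and each later block is encoded with a probe trained on all preceding blocks. The function names and arguments are hypothetical and are not taken from this repo; the per-block losses are assumed to be summed cross-entropies in nats, as most training loops report them.

```python
import math
from typing import Sequence


def online_codelength_bits(
    first_block_size: int,
    num_classes: int,
    block_nll_nats: Sequence[float],
) -> float:
    """Online codelength in bits (illustrative helper, not the repo's API).

    first_block_size: number of examples in the first block, which is
        encoded with a uniform code over `num_classes` labels.
    block_nll_nats: for every later block, the summed cross-entropy (nats)
        of a probe trained on all earlier blocks, evaluated on that block.
    """
    uniform_part = first_block_size * math.log2(num_classes)
    # Convert nats to bits by dividing by ln(2).
    learned_part = sum(block_nll_nats) / math.log(2)
    return uniform_part + learned_part


def compression(num_examples: int, num_classes: int, codelength_bits: float) -> float:
    """Ratio of the uniform codelength of the whole dataset to the achieved one."""
    return num_examples * math.log2(num_classes) / codelength_bits
```

A shorter codelength (higher compression) means the labels are easier to extract from the representations, which reflects both final probe quality and how much data the probe needs to reach it.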

Interested? More details in the blog post or the paper.

This repo provides code to reproduce our experiments.

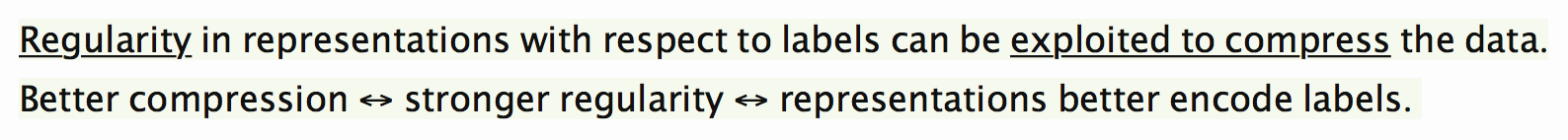

The control tasks paper argued that standard probe accuracy can be similar when probing for genuine linguistic labels and probing for random synthetic tasks (control tasks). To see reasonable differences in accuracy, the authors had to constrain the probe model size.
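As a rough illustration of what a random synthetic task can look like, the sketch below builds control labels under the assumption that every word type is assigned one random label, which is then used for all of its occurrences. The function name, the uniform sampling, and the seed handling are illustrative choices, not the procedure used in this repo or in the control tasks paper.

```python
import random
from typing import Dict, List, Sequence


def make_control_labels(
    sentences: Sequence[Sequence[str]],
    num_labels: int,
    seed: int = 0,
) -> List[List[int]]:
    """Assign each word type a fixed random label (hypothetical helper)."""
    rng = random.Random(seed)
    type_to_label: Dict[str, int] = {}
    labeled: List[List[int]] = []
    for sentence in sentences:
        row = []
        for word in sentence:
            # The first occurrence of a word type fixes its label.
            if word not in type_to_label:
                type_to_label[word] = rng.randrange(num_labels)
            row.append(type_to_label[word])
        labeled.append(row)
    return labeled
```

Because such labels carry no linguistic structure, a probe can only fit them by memorizing word identities, which is why they serve as a baseline for genuine linguistic labels.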

In our experiments, we show that MDL results are more informative and stable, and do not require a manual search for settings.

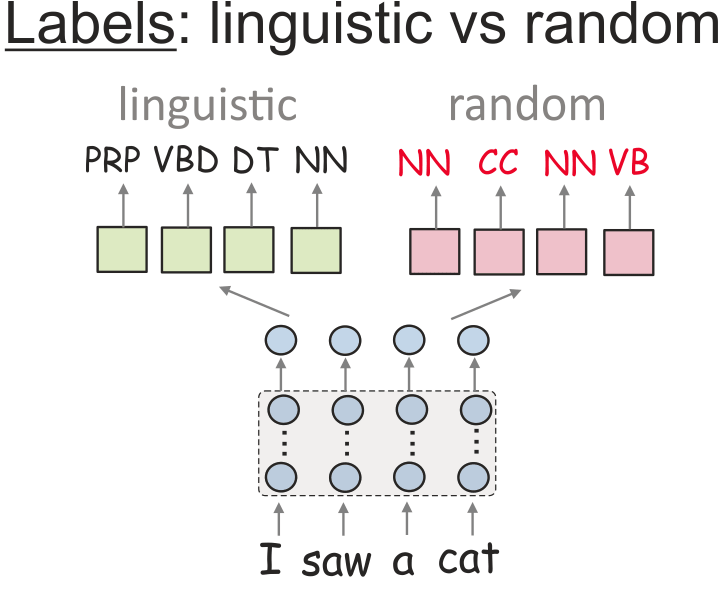

Several papers argued that accuracy of a probe does not sufficiently favour pretrained representations over randomly initialized ones. In this part, we conduct experiments with pretrained and randomly initialized ELMo for 7 tasks from the edge probing paper: PoS tagging, syntactic constituent and dependency labeling, named entity recognition, semantic role labeling, coreference resolution, and relation classification.

Here we also show that MDL results are more informative.