This repo contains code to experiment with different background jobs fairness strategies.

The problem of fair background jobs processing usually occurs in multi-tenant applications using shared queues to process tasks (e.g., using Sidekiq). Imagine you have an named queue to process some tasks, say, outgoing E-mail or SMS notifications. This single queue serves all the tenants, big and small. Whenever a large tenant triggers a massive notifications delivery (User.find_each { UserMailer.notify_smth.deliver_later }) and enqueues thousands of jobs. Delivering notifications takes some time (hundreds of milliseconds or even seconds), the jobs processor would be occupied by this tenant; others would experience noticable delays.

There are multiple ways to mitigate this problem and we research them in this repo.

Since this is a pure academical research, we avoid using any specific executor. Instead, we use Ruby 3 Ractors for concurrent jobs execution. However, we assume that a background job executor has a fixed predefined set of queues (like Sidekiq); thus, we cannot use a queue-per-tenant approach, we need to introduce the fairness at the client-side.

We perform the following experiment for each strategy.

Given N numbers each representing the number of jobs to enqueue per tenant, we enqueue N background jobs (bulk jobs). Each bulk jobs enqueues N[i] jobs.

Then we wait for all jobs to complete.

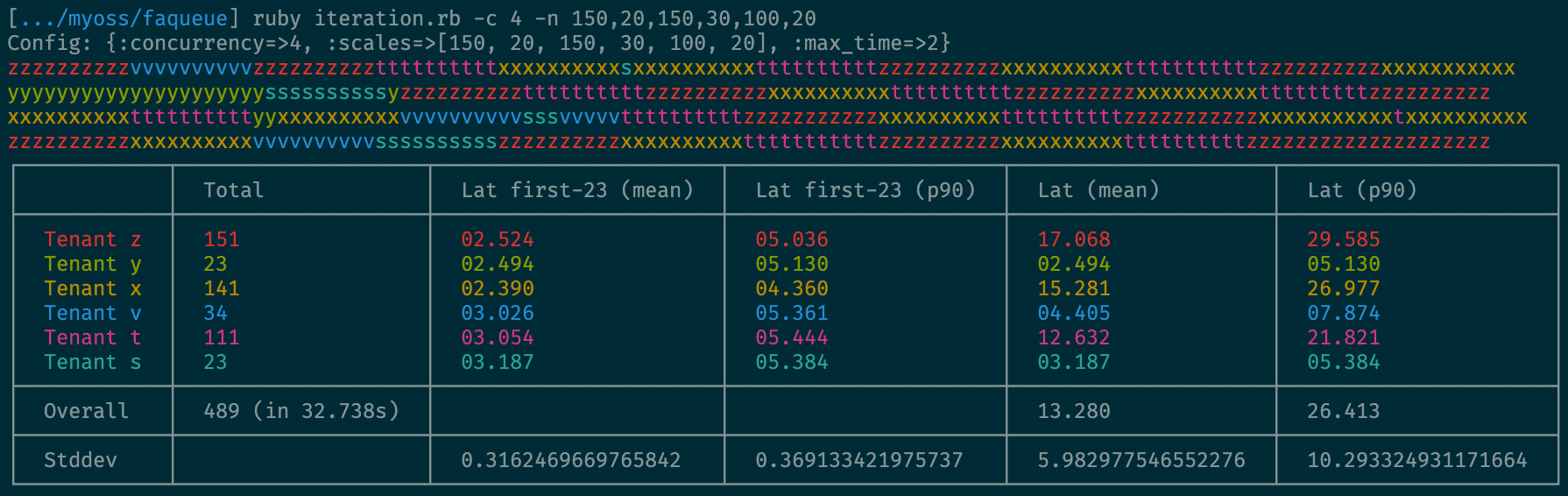

We measure the latency for each executed job and calculate the following statistical data:

- Mean and p90 latency per tenant.

- Mean and p90 latency of the first K jobs (head) per tenant, where K = min(N[i]).

- Standard deviation for the calculated means and percentiles.

The most important metrics is a standard deviation of the heads means/percentiles. Why so? We're interested in minimizing delays caused by large tenants. In other words, we want jobs from all tenants to be executed at the same speed. On the other hand, it's OK for latency to grow if we enqueue thousands of jobs, but that should not affect those who enqueue dozens.

Run a specific strategy emulation like this:

ruby baseline.rb -c 16 -n 300,20,500,200,1000,120You will see a visualization of executed jobs (each color represents a particular tenant) and the final statistics information (see below).

To learn about available CLI options use -h switch:

$ ruby baseline.rb -h

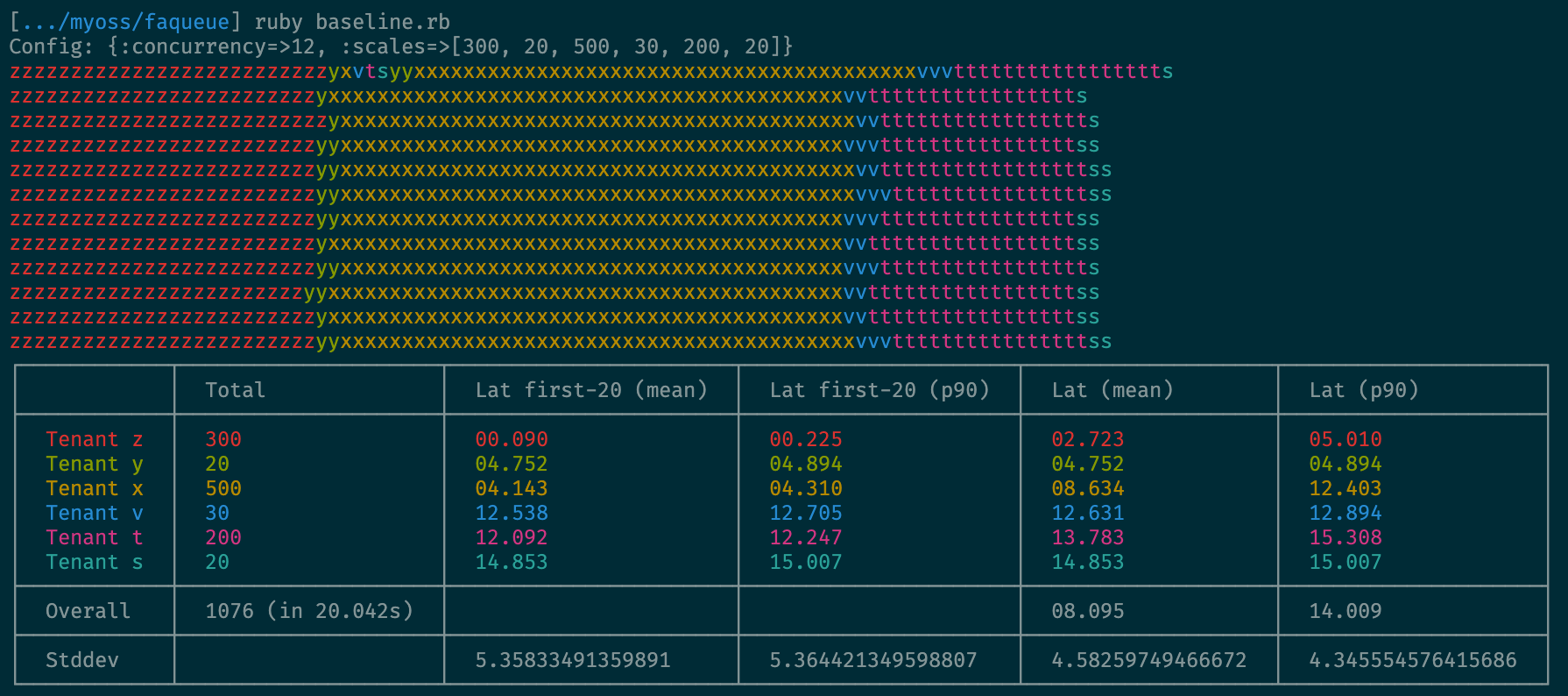

Baseline: just a queue, totally unfair

-n, --number=SCALES The total number of jobs to enqueue per tenant (comma-separated)

-c, --concurrency=CONCURRENCY The concurrency factor (depends on implementation)This is the default behavior: do not care about the fairness.

This strategy is described here.

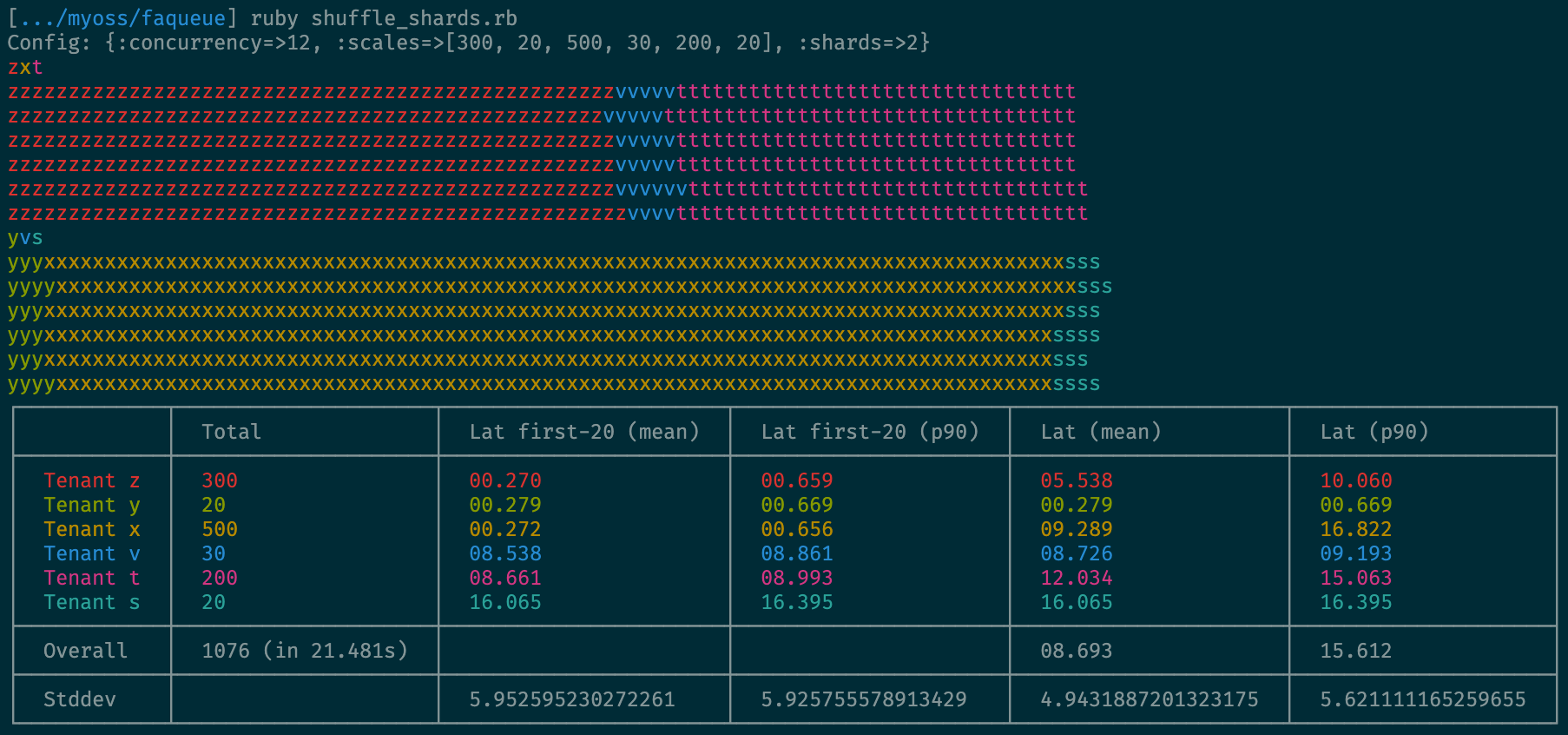

With two shards:

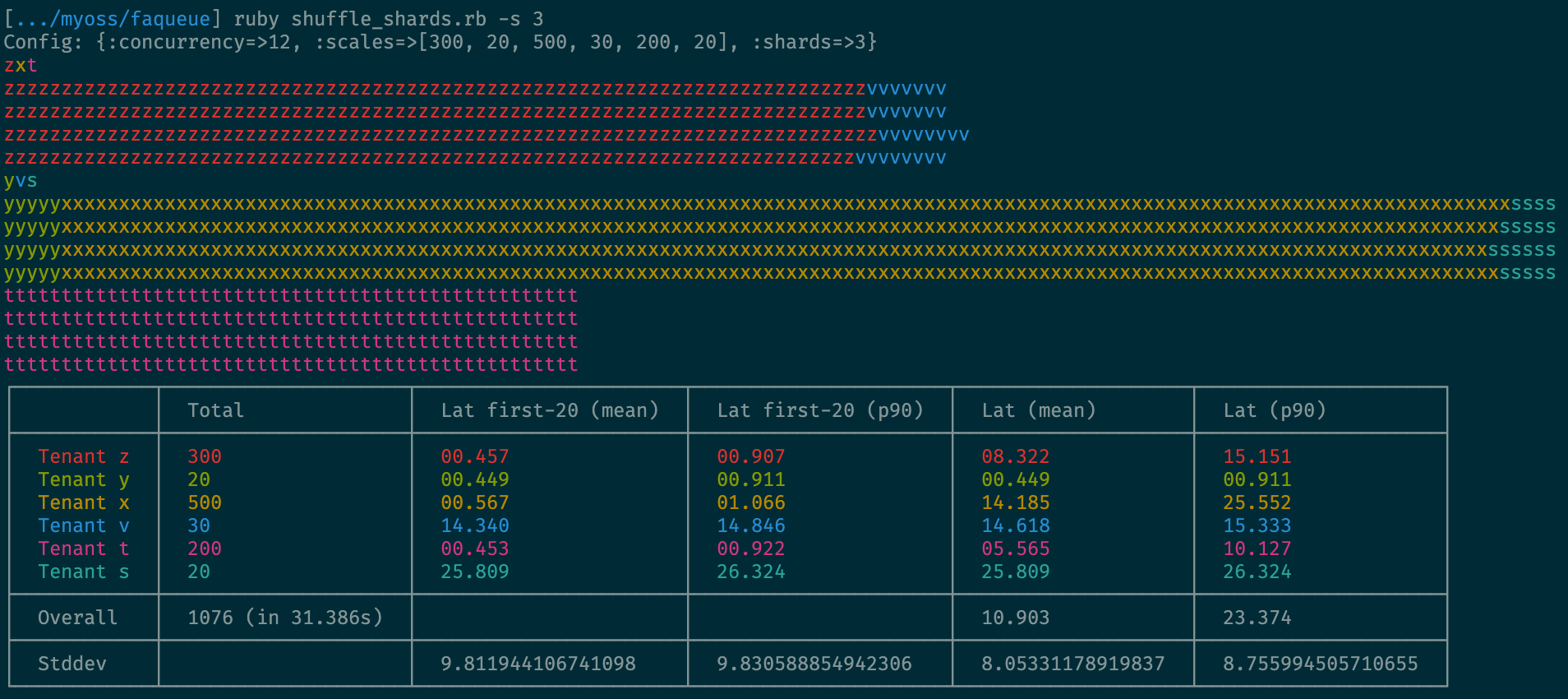

With three shards:

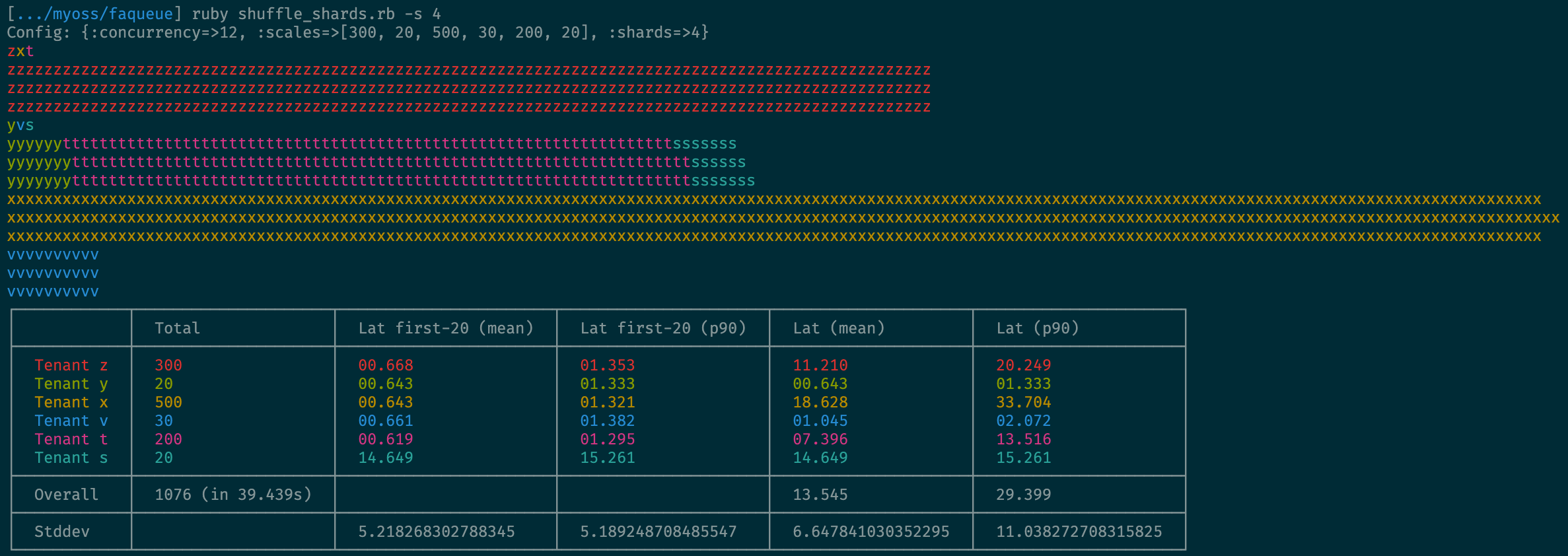

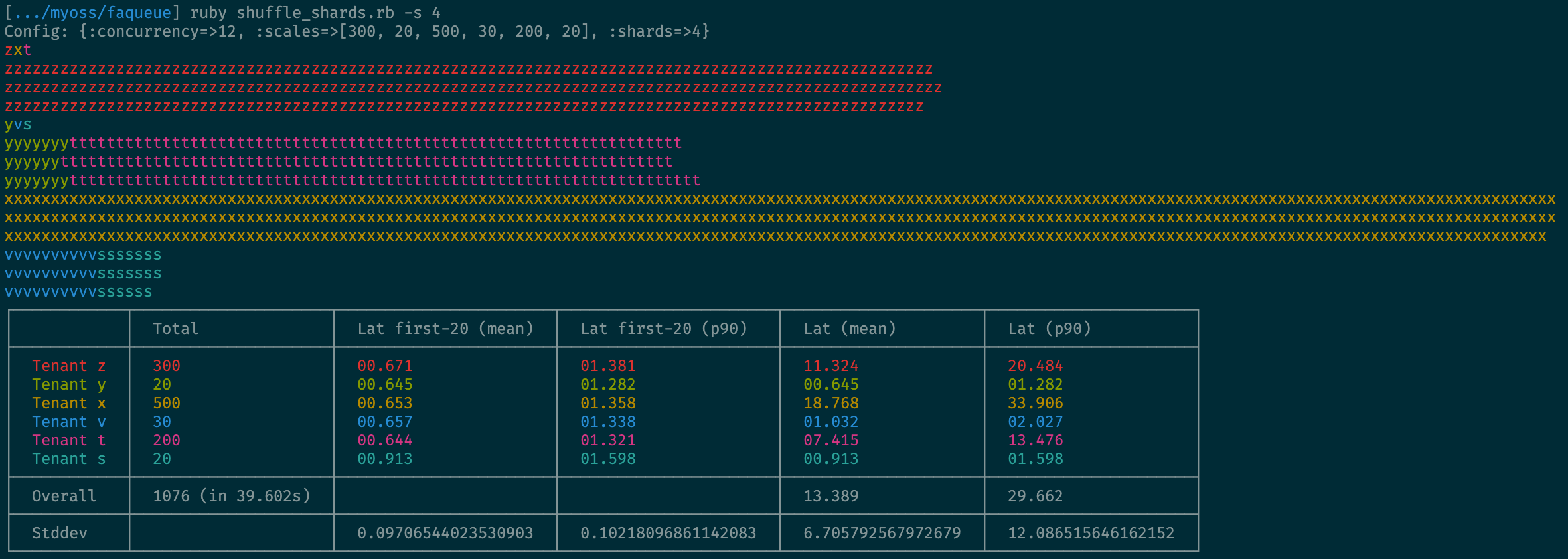

With four shards (unfair):

With four shards (fair):

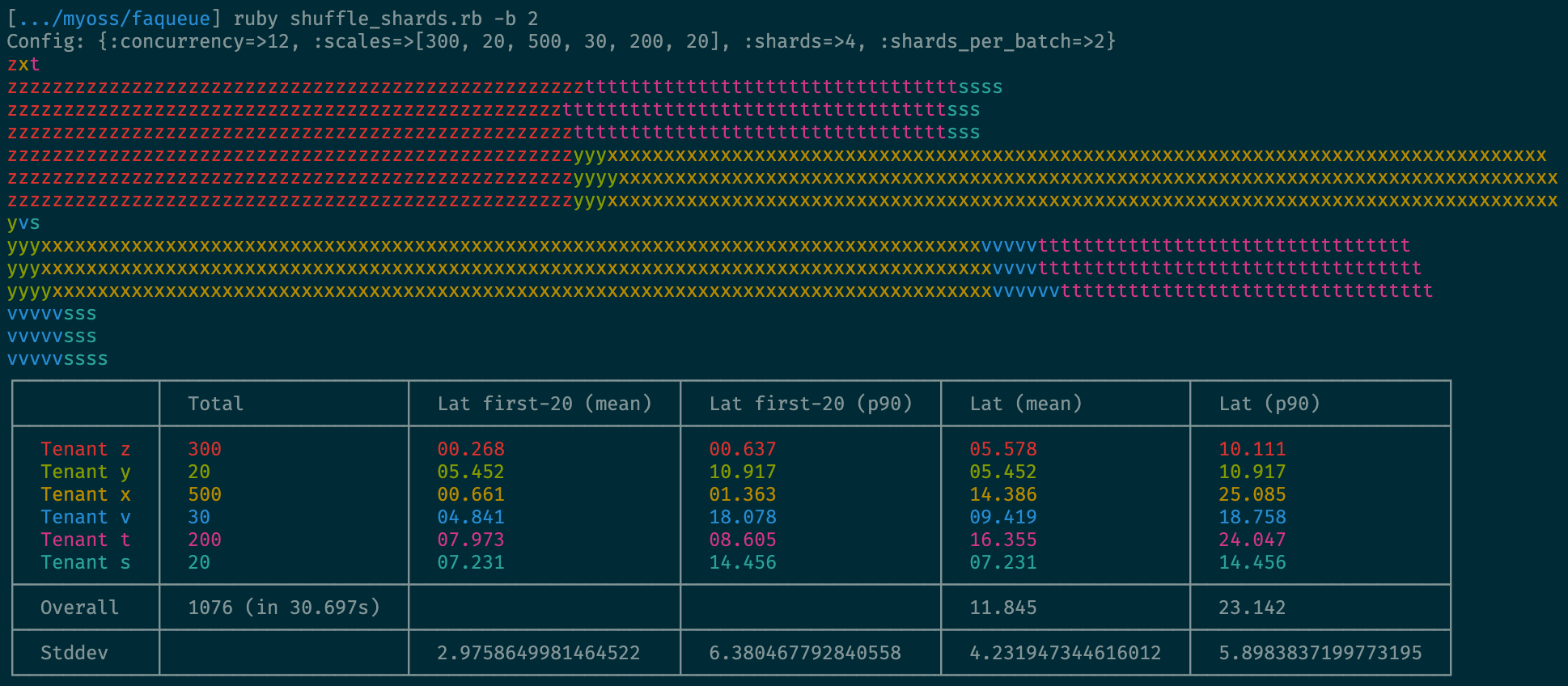

With four shards total and each batch using two shards:

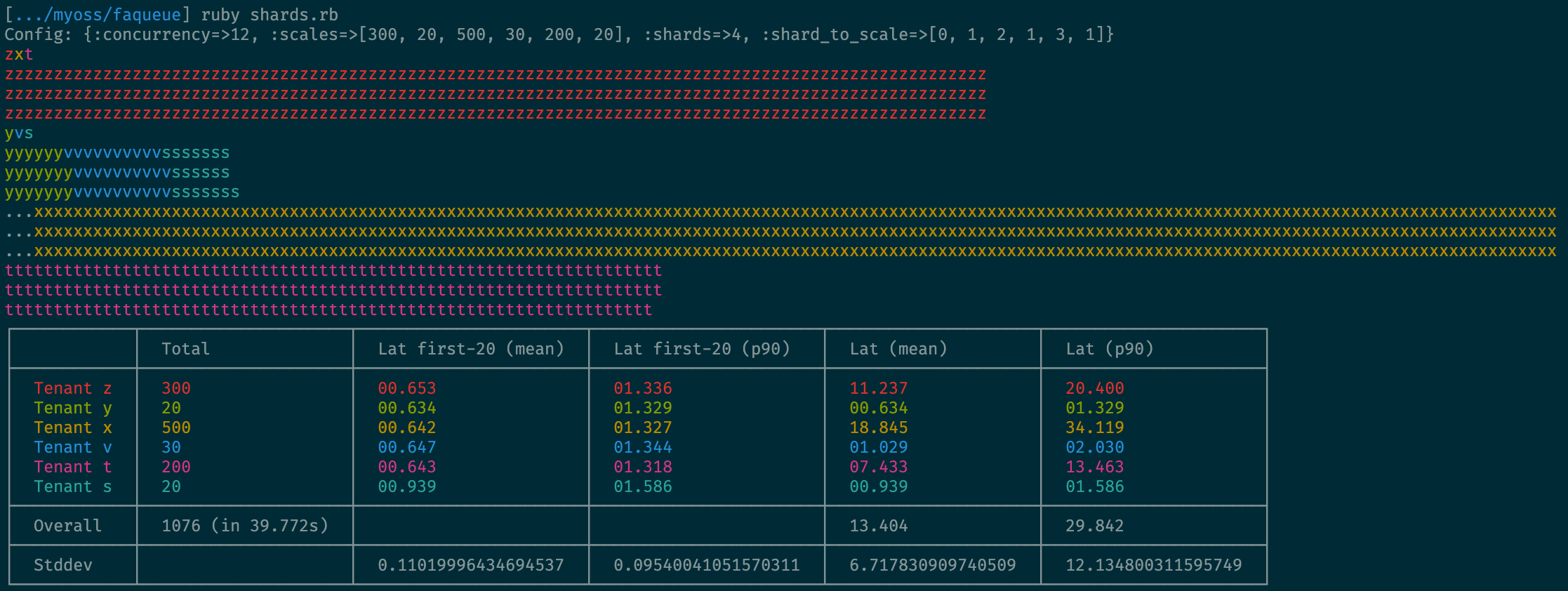

This approach assumes assigning a shard to each tenant. If we know how to distrbute tenants accross the shards so that they do not block each other, that would be a good solution. However, that task by itself is not easy (and a totally different story).

This approach has been implemnted in one of the Evil Martians projects back in the days.

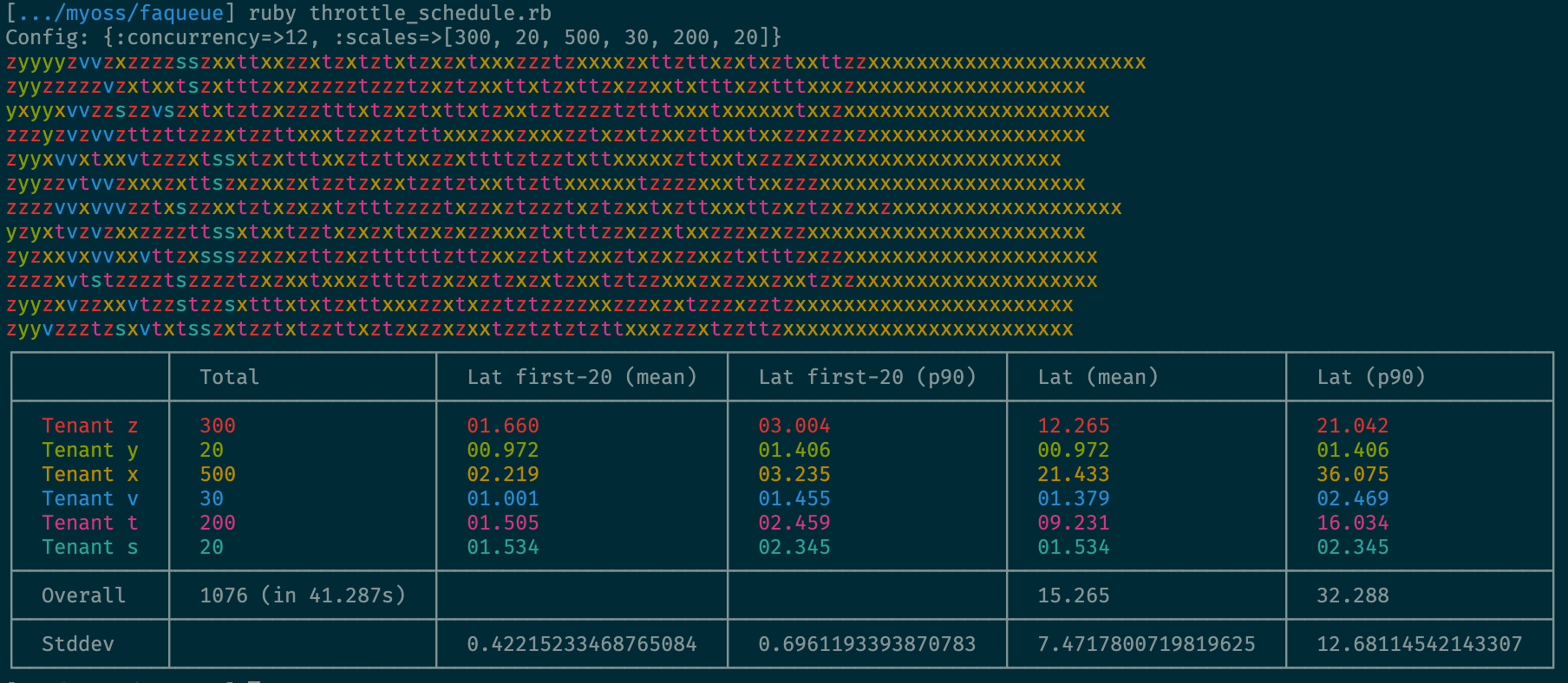

The idea is the following: we define a cooldown period for each tenant, i.e., a period during which only a single job is allowed to be performed (actually, enqueued). Every time a job is executed, we store a deadline (now + cooldown) in a distributed cache. If the next job arrives earlier than the deadline, we increase the deadline and re-schedules this job to be executed later.

See the example implementation for Sidekiq.

This approach is inspired by the job-iteration technique used in Shopify: instead of enqueuing atomic jobs for each batch, we perform them synchrounously in a loop and pause the iteration if we took more than the specified amount of time. "Pause" means re-enqueuing the current jobs with the cursor specified to indicate the starting point for the iteration.

You can achieve a similar behaviour for Sidekiq via job-iteration by configaring an appropriate max wait time:

JobIteration.max_job_runtime = 2.minutes- The State of Background Jobs in 2019 by Kir Shatrov

- Fairway