This is a compressed description of the paper Data compression for quantum machine learning. For more details, read the paper; if you have questions, get in touch!

In the last few years, quantum computers have garnered widespread excitement across numerous disciplines, from drug discovery to finance. The prospect of performing tasks too complex or large for classical computers has prompted research across both academia and industry (see Google, Microsoft, IBM, Amazon). This was spurred by Google's landmark 2019 study, in which a research team demonstrated that a quantum computer could perform a particular task that regular "classical" supercomputers could not complete in a practical amount of time. In the coming years, the quantum computing community has two primary tasks -- first, to scale up quantum computing hardware, and second, to find useful algorithms that can be run on quantum computers. For the near future, however, quantum computers are noisy and hardware-constrained (in the jargon, they are Noisy Intermediate-Scale Quantum -- NISQ -- devices), so theoretical algorithms with large hardware requirements are impractical.

A parallel line of thought holds that, because quantum systems easily produce patterns that classical systems can't efficiently replicate, quantum computers may be able to outperform classical computers on machine learning tasks. The field of quantum machine learning is exciting, but is hampered by the lack of robust quantum hardware. This project introduces algorithms for image classification and data compression designed specifically for NISQ devices. By designing algorithms for near-term hardware and simulating them, we can benchmark quantum machine learning algorithms without access to full-fledged quantum computers, which are years away. We also provide an open-source quantum dataset that uses our methods to encode images into a format suitable for quantum computers, so future researchers have a common benchmark.

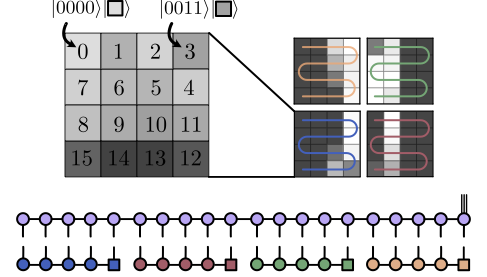

Our approach uses tensor networks, which are factorizations of high-dimensional tensors. Tensor networks are useful because (1) they can be stored efficiently on classical computers, (2) they can efficiently approximate many quantum states, and (3) there is a direct (albeit slightly complicated) way to encode a tensor network onto a quantum computer. We divide each image into patches, as in the following figure, then feed them into a tensor network. We encode each patch as a tensor network of its own.

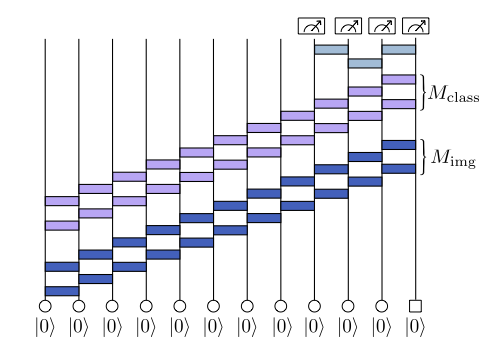
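To make the patch-and-compress idea concrete, here is a minimal NumPy sketch. It is not the code from this repository: the function names (`split_into_patches`, `vector_to_mps`, `mps_to_vector`), the patch size, and the choice of a matrix product state (a common one-dimensional tensor network) built by repeated truncated SVDs are all assumptions made for illustration. Each patch is flattened, normalized like a quantum state, and factored into small tensors whose shared index size (the bond dimension) is capped at `max_bond`.

```python
import numpy as np

def split_into_patches(image, patch):
    """Split a 2D image into non-overlapping (patch x patch) tiles."""
    h, w = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    tiles = image.reshape(h // patch, patch, w // patch, patch).swapaxes(1, 2)
    return tiles.reshape(-1, patch, patch)

def vector_to_mps(vec, max_bond):
    """Factor a length-2**n vector into n cores using truncated SVDs.

    Each core has shape (left_bond, 2, right_bond); bonds never exceed max_bond.
    """
    n = vec.size.bit_length() - 1
    assert 2 ** n == vec.size, "vector length must be a power of two"
    cores = []
    rest = vec.reshape(1, -1)  # (left bond, everything not yet factored)
    for _ in range(n - 1):
        left = rest.shape[0]
        mat = rest.reshape(left * 2, -1)
        u, s, vh = np.linalg.svd(mat, full_matrices=False)
        keep = min(max_bond, s.size)  # truncate to the allowed bond dimension
        cores.append(u[:, :keep].reshape(left, 2, keep))
        rest = s[:keep, None] * vh[:keep]
    cores.append(rest.reshape(rest.shape[0], 2, 1))
    return cores

def mps_to_vector(cores):
    """Contract the cores back into a dense vector."""
    out = cores[0]
    for core in cores[1:]:
        out = np.tensordot(out, core, axes=([-1], [0]))
    return out.reshape(-1)

# Hypothetical 8x8 "image" split into four 4x4 patches.
rng = np.random.default_rng(0)
image = rng.random((8, 8))
patches = split_into_patches(image, 4)

# Flatten one patch and normalize it so it is a valid quantum state vector.
state = patches[0].reshape(-1)
state = state / np.linalg.norm(state)

for chi in (1, 2, 4):
    approx = mps_to_vector(vector_to_mps(state, chi))
    print(f"bond dimension {chi}: error {np.linalg.norm(state - approx):.4f}")
```

A 16-entry patch needs a bond dimension of at most 4 to be reproduced exactly; smaller values trade accuracy for fewer parameters, which is the compression knob referred to as the bond dimension in the results.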

Similarly, we introduce a second algorithm where each image is encoded as a quantum circuit (a collection of quantum gates, which are the essential processing units in quantum computing, analogous to AND/OR gates in regular computing), then passed to a classifier quantum circuit.

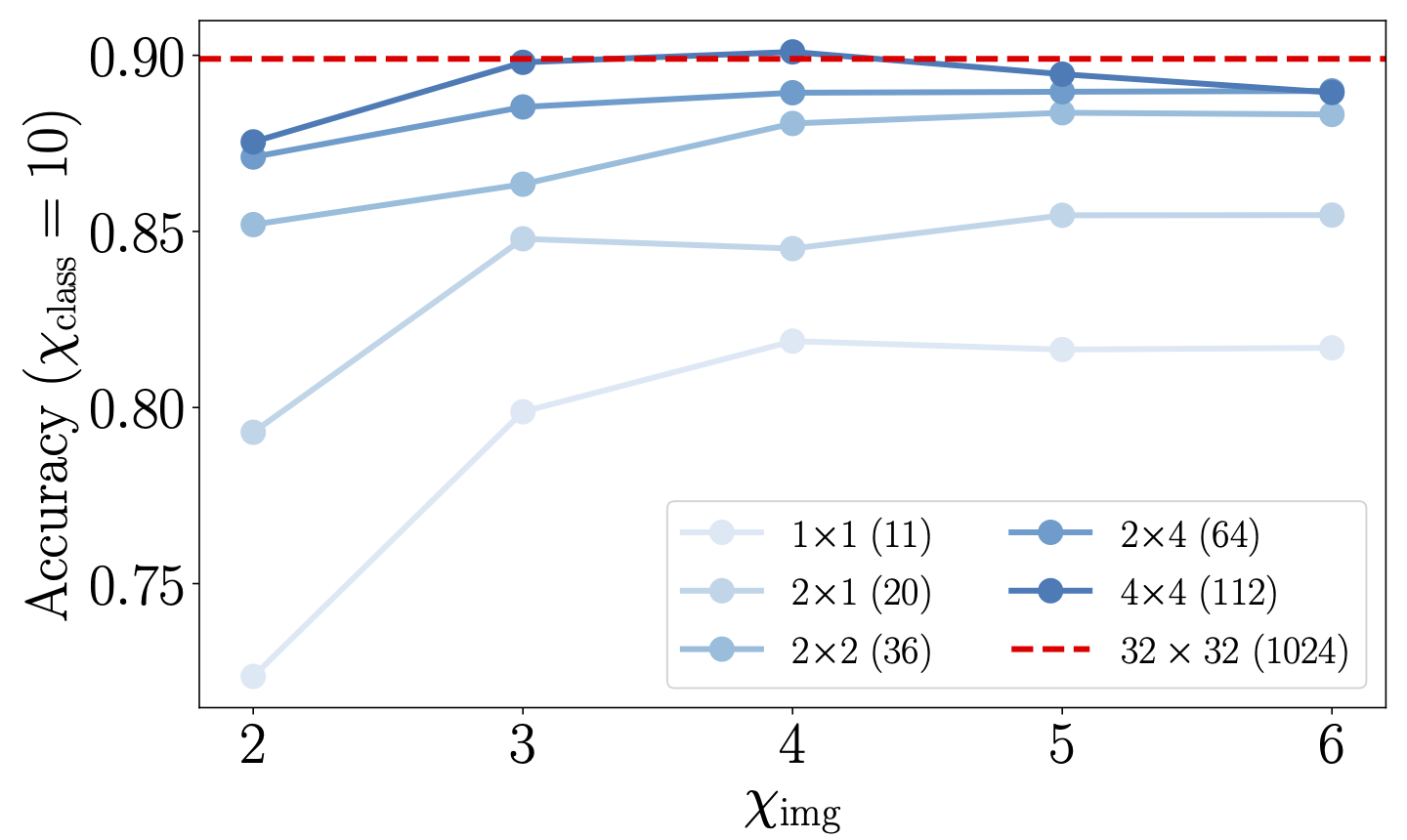
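For readers new to quantum gates, the following toy simulation may help show what "passing a state to a classifier circuit" means. It is only a sketch under loud assumptions: the two-qubit size, the amplitude encoding of four pixel values, the single layer of RY rotations followed by a CNOT, and the names `ry` and `classifier_probs` are all hypothetical and are not the encoding or classifier circuits from the paper.

```python
import numpy as np

def ry(theta):
    """Single-qubit rotation about the Y axis (a standard quantum gate)."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

# Standard two-qubit CNOT gate: flips the second qubit when the first is 1.
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=float)

def classifier_probs(state, thetas):
    """Apply RY to each qubit, then a CNOT; return measurement probabilities."""
    layer = np.kron(ry(thetas[0]), ry(thetas[1]))
    out = CNOT @ (layer @ state)
    return np.abs(out) ** 2

# Assumption: four pixel values stored as the amplitudes of a two-qubit state.
pixels = np.array([0.1, 0.7, 0.2, 0.4])
state = pixels / np.linalg.norm(pixels)

# Hypothetical (untrained) gate angles; training would tune these.
probs = classifier_probs(state, thetas=[0.3, 1.1])
label = int(np.argmax(probs))  # most likely measurement outcome as the label
print(probs, label)
```

In a trained classifier the angles would be optimized so that the most probable measurement outcome matches the image's label.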

We achieve competitive results for classifying images, as shown in the following plots.

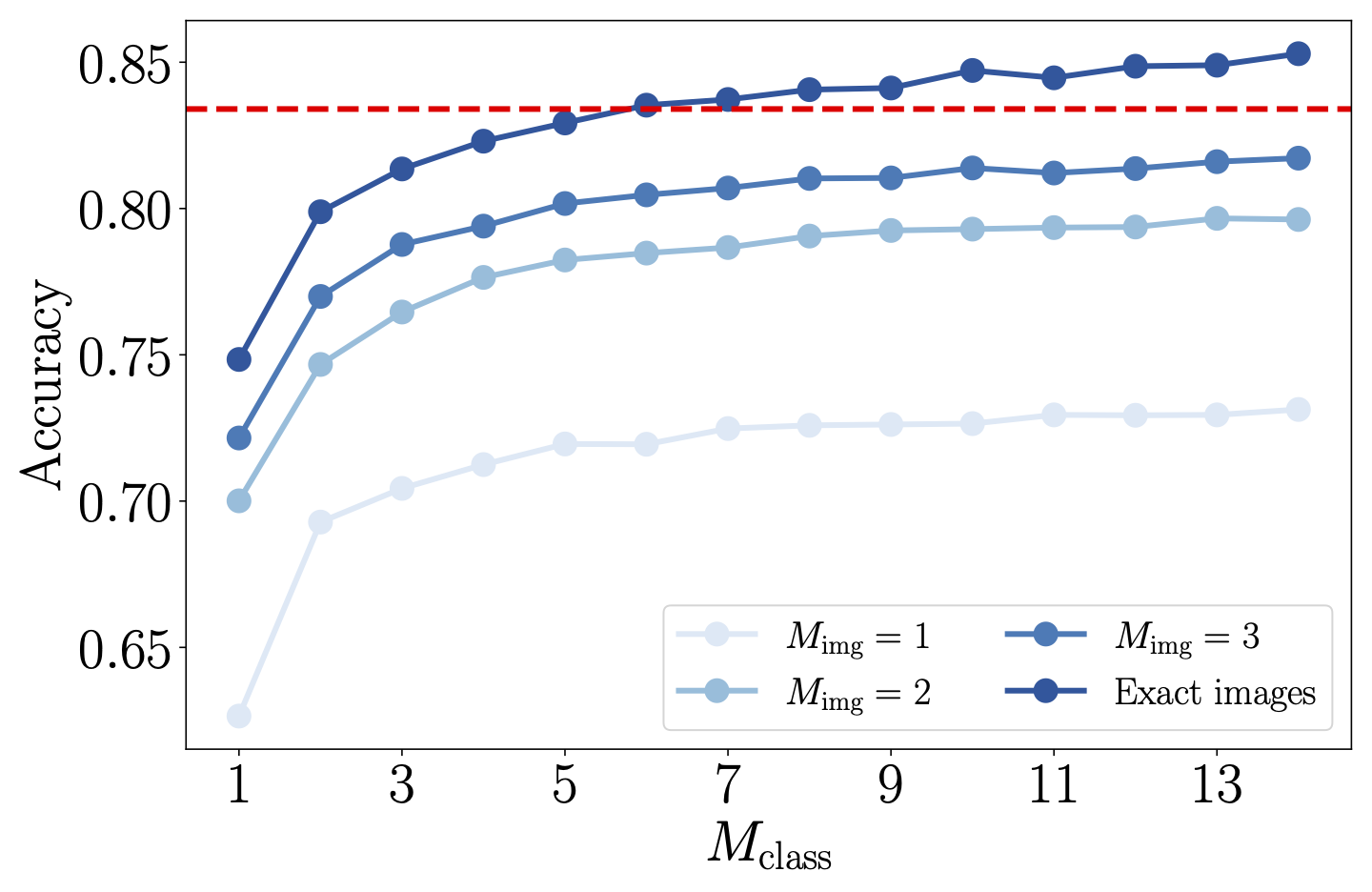

Similarly, we achieve reasonable classification results with the quantum circuit, as shown in the following figure.

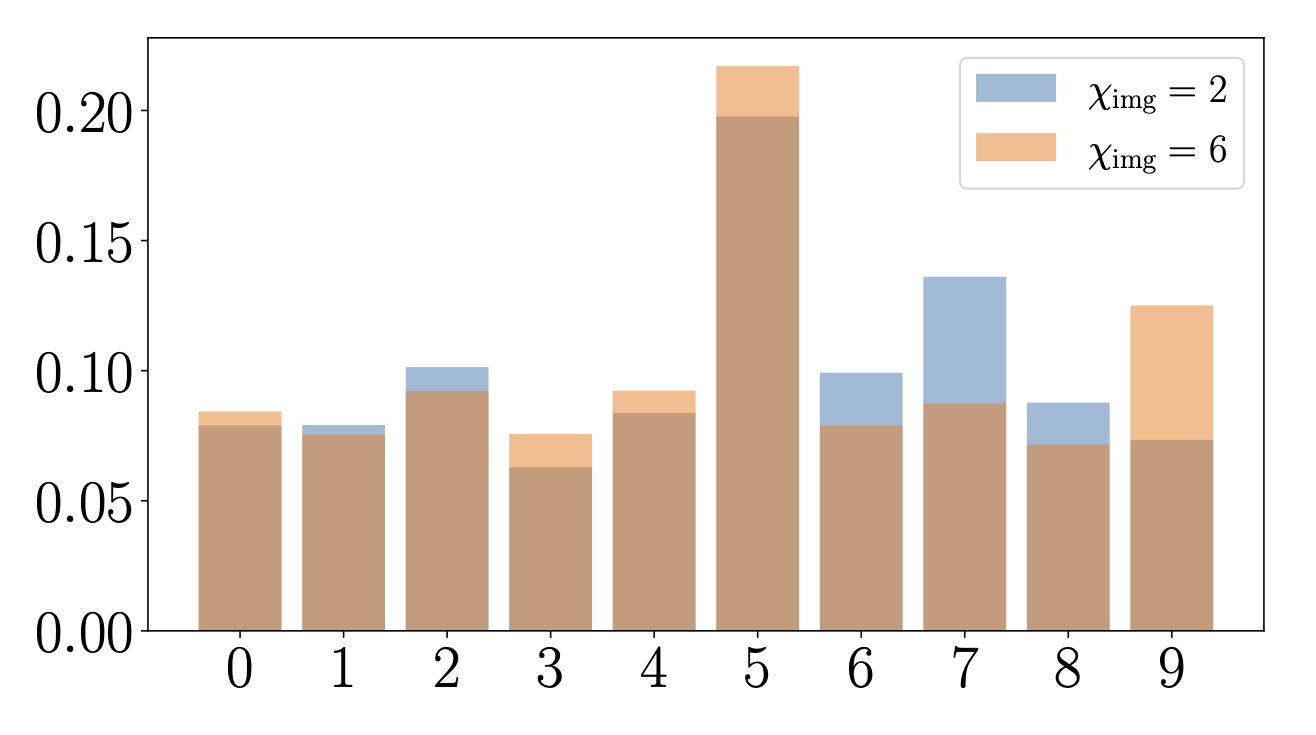

Finally, the actual prediction is well separated from the other possibilities, as shown in the following histogram.

This figure plots the predicted label -- one of ten possibilities -- against a quantity proportional to the confidence of the classifier. The classifier is confident that the correct label is the fifth one, even when we compress down to a small bond dimension.

These are pretty simple classification tasks -- it makes sense to scale this to more complex datasets, and to explore whether there are tasks on which quantum computers can perform better than classical computers (convolutional neural networks are already really good at image classification).

This code is written to run on an SGE computing cluster, but you should be able to adapt it to your needs. The function main() in main_tn.py contains most of the relevant functions. If you're having difficulties, feel free to get in touch.