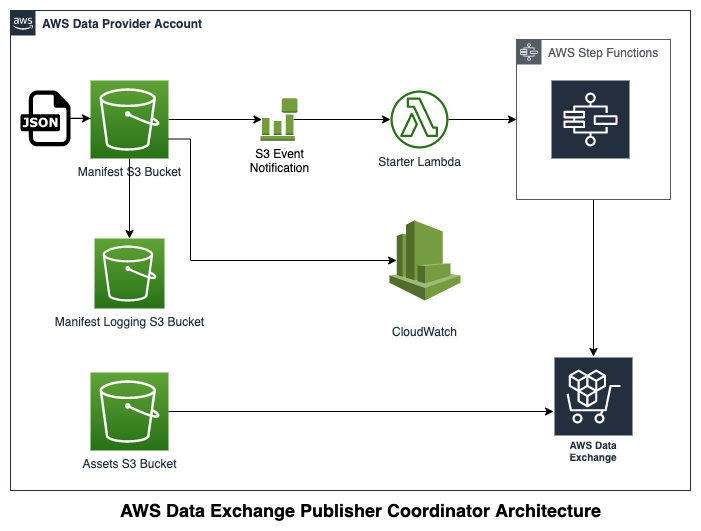

This project sets up an AWS Step Functions workflow that automatically executes the publication steps for new dataset revisions of AWS Data Exchange (ADX) products. An execution is triggered when a manifest file for a new revision is uploaded to the Manifest S3 bucket.

This project offers several improvements over aws-data-exchange-publisher-coordinator: it works around several AWS Data Exchange service limits and improves logging.

The following service limits have been addressed in this solution:

- 10,000 assets per revision

- 100 assets per import job and a maximum of 10 concurrent import jobs

- Folder prefixes are also supported. For example, to include an S3 folder called data, specify the key as "data/" and the solution will include all files within that folder's hierarchy.
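As a rough illustration of how these limits interact, the sketch below expands a folder-prefix entry into individual assets and then groups assets into revisions, waves of concurrent import jobs, and jobs. It is not taken from this project's Lambda code: the function names are hypothetical, and splitting an oversized asset list across several revisions is an assumption about one possible way to stay under the 10,000-asset limit.

```python
from typing import Dict, List

# Limits quoted in the list above.
MAX_ASSETS_PER_REVISION = 10_000
MAX_ASSETS_PER_IMPORT_JOB = 100
MAX_CONCURRENT_IMPORT_JOBS = 10

Asset = Dict[str, str]


def chunk(items: list, size: int) -> List[list]:
    """Split a list into consecutive slices of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def expand_entry(entry: Asset, bucket_keys: List[str]) -> List[Asset]:
    """Expand a manifest entry whose key ends with "/" into one asset per file.

    `bucket_keys` stands in for an S3 object listing (hypothetical input);
    keys that end with "/" are folder placeholders and are skipped.
    """
    key = entry["Key"]
    if not key.endswith("/"):
        return [entry]
    return [
        {"Bucket": entry["Bucket"], "Key": k}
        for k in bucket_keys
        if k.startswith(key) and not k.endswith("/")
    ]


def plan_import_jobs(assets: List[Asset]) -> List[List[List[List[Asset]]]]:
    """Group assets as revisions -> waves -> import jobs -> assets."""
    plan = []
    for revision_assets in chunk(assets, MAX_ASSETS_PER_REVISION):
        jobs = chunk(revision_assets, MAX_ASSETS_PER_IMPORT_JOB)
        # Each wave holds the jobs that may run at the same time.
        plan.append(chunk(jobs, MAX_CONCURRENT_IMPORT_JOBS))
    return plan
```

With 25,050 assets, for instance, this plan yields three revisions; the first two each contain 10 waves of 10 jobs, and the last ends with a single job of 50 assets.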

Note: We recommend using this update with datasets that have automatic revision publishing enabled: https://aws.amazon.com/about-aws/whats-new/2021/07/announcing-automatic-revision-publishing-aws-data-exchange/

Below is the architecture diagram of this project:

You should have the following prerequisites in place before running the code:

- An AWS Data Exchange product and dataset

- Three existing S3 buckets:

  - AssetBucket: for uploading the assets

  - ManifestBucketLoggingBucket: for logging activities. For more information, see how to create a logging Amazon S3 bucket.

  - DistributionBucket: for uploading the Lambda code

- Python 3.8+

- AWS CLI

- AWS SAM CLI

- Ensure that the logging bucket you configure in the MANIFEST_BUCKET_LOGGING_BUCKET variable grants WRITE and READ_ACP permissions to the Amazon S3 Log Delivery group. For more information, see the documentation.

- Note: The default Cloud9 environment has the AWS CLI and AWS SAM CLI installed. To upgrade Python to version 3.8, run `sudo amazon-linux-extras enable python3.8` and then `sudo yum install python38`.
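The Log Delivery permissions mentioned in the prerequisites can be granted in several ways. The sketch below shows one of them using the `put_bucket_acl` call of a boto3 S3 client. The helper name `grant_log_delivery_access` is hypothetical, and the approach assumes that ACLs are enabled on the logging bucket; buckets that enforce bucket-owner object ownership reject ACL changes.

```python
# Grantee URI of the Amazon S3 Log Delivery group.
LOG_DELIVERY_GROUP = "uri=http://acs.amazonaws.com/groups/s3/LogDelivery"


def grant_log_delivery_access(s3_client, bucket_name: str) -> None:
    """Grant WRITE and READ_ACP on the bucket to the Log Delivery group.

    `s3_client` is expected to be a boto3 S3 client. Note that this call
    replaces the bucket's existing ACL grants rather than adding to them.
    """
    s3_client.put_bucket_acl(
        Bucket=bucket_name,
        GrantWrite=LOG_DELIVERY_GROUP,
        GrantReadACP=LOG_DELIVERY_GROUP,
    )
```

A call might look like `grant_log_delivery_access(boto3.client("s3"), "adx-publisher-coordinator-manifest-logging-bucket-1234")`, using the example bucket name from the configuration in this README.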

Once you have all prerequisites in place, clone the repository and update the code:

- Update the `run.sh` file with the desired values for the following variables, including the names of the three pre-existing buckets you created above and a name for `ManifestBucket`, which will be created by CloudFormation.

SOLUTION_NAME='aws-data-exchange-publisher-coordinator' # name of the solution

SOLUTION_VERSION='1.0.0' # version number for the code

SOURCE_CODE_BUCKET=my-bucket-name # Existing bucket where customized code will reside

MANIFEST_BUCKET='adx-publisher-coordinator-manifest-bucket-1234' # Name for a new bucket that solution would create.

ASSET_BUCKET='adx-publisher-coordinator-assets-bucket-1234' # An Existing bucket where you intend to upload assets for including in a new revision.

MANIFEST_BUCKET_LOGGING_BUCKET='adx-publisher-coordinator-manifest-logging-bucket-1234' # Existing bucket where activity logs will be saved.

MANIFEST_BUCKET_LOGGING_PREFIX='adx-publisher-coordinator-logs/' # Prefix string for manifest bucket access logs (including the trailing slash).

LOGGING_LEVEL='INFO' # Logging level of the solution; accepted values: [ DEBUG, INFO, WARNING, ERROR, CRITICAL ]

STACK_NAME=my-stack-name # name of the cloudformation stack

REGION=us-east-1 # region where the cloudformation stack will be created

run.sh creates a local directory, substitutes the names you specified into the CloudFormation template, packages the Lambda code as zip files, uploads it to the $CFN_CODE_BUCKET S3 bucket in your account using the AWS CLI, and finally builds and deploys the CloudFormation template using the AWS SAM CLI.
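Before running the script, it can help to sanity-check the values set above. The following sketch is not part of `run.sh`; `check_settings` is a hypothetical helper that only encodes constraints stated in the variable comments: the accepted logging levels, the trailing slash on the logging prefix, and the fact that the manifest bucket is newly created and therefore cannot reuse the name of an existing bucket.

```python
from typing import Dict, List

ACCEPTED_LOGGING_LEVELS = {"DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL"}
EXISTING_BUCKET_VARS = (
    "SOURCE_CODE_BUCKET",
    "ASSET_BUCKET",
    "MANIFEST_BUCKET_LOGGING_BUCKET",
)


def check_settings(settings: Dict[str, str]) -> List[str]:
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    level = settings.get("LOGGING_LEVEL", "")
    if level not in ACCEPTED_LOGGING_LEVELS:
        problems.append(f"LOGGING_LEVEL {level!r} is not an accepted value")
    if not settings.get("MANIFEST_BUCKET_LOGGING_PREFIX", "").endswith("/"):
        problems.append("MANIFEST_BUCKET_LOGGING_PREFIX needs a trailing slash")
    manifest_bucket = settings.get("MANIFEST_BUCKET", "")
    if not manifest_bucket:
        problems.append("MANIFEST_BUCKET is not set")
    for name in EXISTING_BUCKET_VARS:
        value = settings.get(name, "")
        if not value:
            problems.append(f"{name} is not set")
        elif value == manifest_bucket:
            # The manifest bucket is created by the stack, so it must be new.
            problems.append(f"MANIFEST_BUCKET must differ from {name}")
    return problems
```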

- From the root directory of the project, run `run.sh`:

chmod +x run.sh

./run.sh

Any time a manifest file is uploaded to the ManifestBucket, a Step Functions execution is triggered. The manifest file should follow a specific format:

- The name of the manifest file should end with `.json`

- The size of the manifest file should be less than 10GB

- The file should include a JSON object with the following format:

{

"product_id": <PRODUCT_ID>,

"dataset_id": <DATASET_ID>,

"asset_list": [

{ "Bucket": <S3_BUCKET_NAME>, "Key": <S3_OBJECT_KEY> },

{ "Bucket": <S3_BUCKET_NAME>, "Key": <S3_OBJECT_KEY> },

...

]

}
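To make the format concrete, here is a minimal sketch that checks a manifest against the rules above and then uploads it, which is what triggers the workflow. The helper names `validate_manifest` and `upload_manifest` are hypothetical and not part of this project; `s3_client` is assumed to be a boto3 S3 client, and the upload uses its `put_object` call.

```python
import json
from typing import Any, Dict, List

REQUIRED_KEYS = ("product_id", "dataset_id", "asset_list")


def validate_manifest(file_name: str, manifest: Dict[str, Any]) -> List[str]:
    """Return a list of format problems; an empty list means the manifest is OK."""
    problems = []
    if not file_name.endswith(".json"):
        problems.append("manifest file name must end with .json")
    for key in REQUIRED_KEYS:
        if key not in manifest:
            problems.append(f"missing required key: {key}")
    assets = manifest.get("asset_list", [])
    if not isinstance(assets, list) or not assets:
        problems.append("asset_list must be a non-empty list")
        return problems
    for index, asset in enumerate(assets):
        if not isinstance(asset, dict) or not {"Bucket", "Key"} <= set(asset):
            problems.append(f"asset_list[{index}] needs 'Bucket' and 'Key'")
    return problems


def upload_manifest(s3_client, manifest_bucket: str, file_name: str,
                    manifest: Dict[str, Any]) -> None:
    """Validate the manifest, then upload it as a JSON object to S3."""
    problems = validate_manifest(file_name, manifest)
    if problems:
        raise ValueError("; ".join(problems))
    s3_client.put_object(
        Bucket=manifest_bucket,
        Key=file_name,
        Body=json.dumps(manifest).encode("utf-8"),
    )
```

Validating locally before the upload avoids starting an execution that is bound to fail on a malformed manifest.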

- If you find any issues with or have enhancement ideas for this product, open up a GitHub issue and we will gladly take a look at it. Better yet, submit a pull request. Any contributions you make are greatly appreciated ❤️.

- If you have any questions or feedback, send us an email at data@rearc.io.

Rearc is a cloud, software and services company. We believe that empowering engineers drives innovation. Cloud-native architectures, modern software and data practices, and the ability to safely experiment can enable engineers to realize their full potential. We have partnered with several enterprises and startups to help them achieve agility. Our approach is simple — empower engineers with the best tools possible to make an impact within their industry.