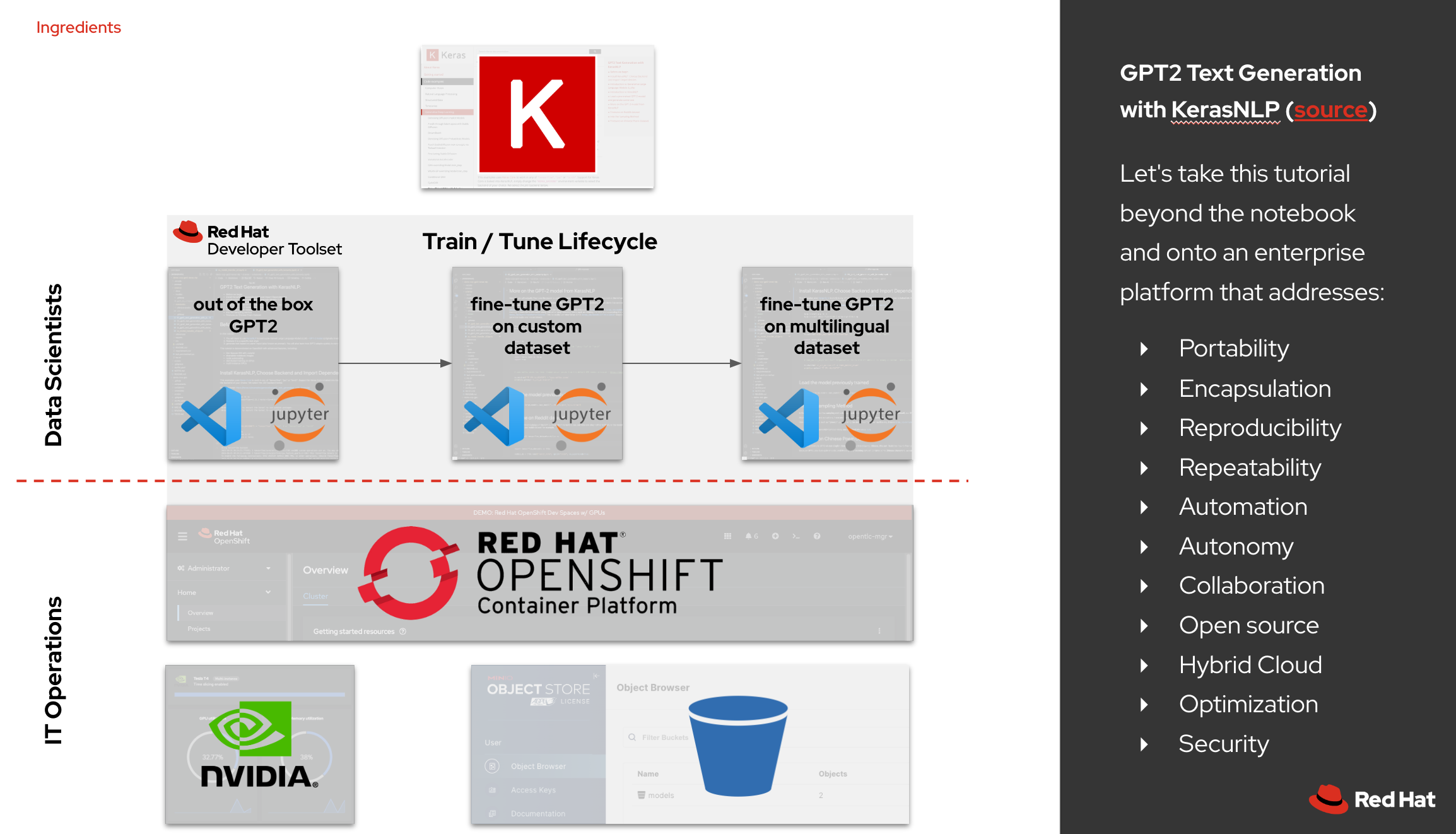

This repo demonstrates GPT-2 text generation with KerasNLP on Red Hat OpenShift with NVIDIA GPUs.

Important

This demo runs entirely in memory, inside a pod, to keep it simple.

In production use cases, Large Language Models (LLMs) need additional components, such as a vector database and an embedding model, to store custom data and make it available to the model.

Key concepts:

- OpenShift Developer Tools for training

- OpenShift autoscaling GPU nodes

- OpenShift limits for GPU nodes

- Minio Object Storage

- KerasNLP for Large Language Models (LLMs)

- OpenAI GPT-2 model fine-tuning

- NVIDIA Multi-instance GPUs

- NVIDIA Time Slicing GPUs

- NVIDIA GPU Monitoring

- Online experimentation

Prerequisites:

- Red Hat OpenShift Cluster 4.10+

- Cluster admin permissions
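The two cluster requirements above (OpenShift 4.10+ and cluster admin permissions) can be checked from a shell before running the demo. The following is a minimal sketch, not part of this repo: the function names `version_at_least` and `check_cluster` are hypothetical, and the version comparison assumes a `sort` that supports `-V` (GNU coreutils and recent BSD/macOS versions do).

```shell
# version_at_least CURRENT MINIMUM
# Succeeds when CURRENT >= MINIMUM, comparing dotted version strings.
# Assumes `sort -V` (version sort) is available.
version_at_least() {
  local current="$1" minimum="$2"
  [ "$(printf '%s\n%s\n' "$minimum" "$current" | sort -V | head -n 1)" = "$minimum" ]
}

# check_cluster (hypothetical helper): requires an active `oc login` session.
check_cluster() {
  local version
  # Read the running version from the ClusterVersion resource.
  version="$(oc get clusterversion version -o jsonpath='{.status.desired.version}')" || return 1
  if ! version_at_least "$version" "4.10"; then
    echo "OpenShift ${version} is older than 4.10" >&2
    return 1
  fi
  # Ask the API server whether the current user may perform any action anywhere.
  if ! oc auth can-i '*' '*' --all-namespaces >/dev/null; then
    echo "cluster admin permissions not detected" >&2
    return 1
  fi
  echo "[OK] OpenShift ${version}, cluster admin"
}
```

After `oc login`, calling `check_cluster` prints a single `[OK]` line or an explanation of which requirement is not met.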

The following CLI tools are required:

- bash, git

- oc - Download mac, linux, windows

- kubectl (optional) - Included in the oc bundle

- kustomize (optional) - Download mac, linux

NOTE: bash, git, and oc are available in the OpenShift Web Terminal
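A quick way to confirm the required tools are installed is to look each one up on the `PATH`. This is a minimal sketch; the helper name `missing_tools` is hypothetical and not part of this repo.

```shell
# missing_tools TOOL...
# Prints each tool that is not found on PATH, one per line.
# Succeeds only when every tool is found.
missing_tools() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      missing=1
    fi
  done
  return "$missing"
}

# Check the required tools; kubectl and kustomize are optional.
if missing="$(missing_tools bash git oc)"; then
  echo "All required tools found"
else
  echo "Missing required tools:"
  echo "$missing"
fi
```

Optional tools can be checked the same way, for example `missing_tools kubectl kustomize`.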

    # start a bash shell
    # (this means you mac users; zsh)
    bash

    # oc login to your cluster
    # oc login --token=<yours> --server=https://<yours>
    oc whoami

    # git clone demo
    git clone https://github.com/redhat-na-ssa/datasci-keras-gpt2-nlp.git
    cd datasci-keras-gpt2-nlp

    # run setup script
    ./scripts/bootstrap.sh

    # expected results
    # Running: oc apply -k gitops
    # again...
    # again...
    # [OK]
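The repeated `again...` lines in the expected results suggest that the setup re-runs `oc apply -k gitops` until it succeeds. The script's implementation is not shown here, so the following is only a sketch of that retry pattern under that assumption. The helper name `retry_until_ok` and its parameters are hypothetical. A common reason for retrying is that some resources cannot be applied until the operators that define them have finished installing, but that is also an assumption about this demo.

```shell
# retry_until_ok MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds, printing "again..." before each retry.
# Gives up and returns 1 after MAX_ATTEMPTS failed attempts.
retry_until_ok() {
  local max_attempts="$1" delay="$2" attempt=1
  shift 2
  echo "Running: $*"
  until "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "[FAILED] after ${attempt} attempts" >&2
      return 1
    fi
    echo "again..."
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  echo "[OK]"
}

# Example (requires a logged-in cluster and this repo as the working directory):
#   retry_until_ok 10 10 oc apply -k gitops
```

Capping the number of attempts keeps a persistent failure, such as a typo in a manifest, from looping forever.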

To remove the demo:

    # WARNING: Be certain you want your cluster returned to a vanilla state
    . ./scripts/bootstrap.sh
    delete_demo

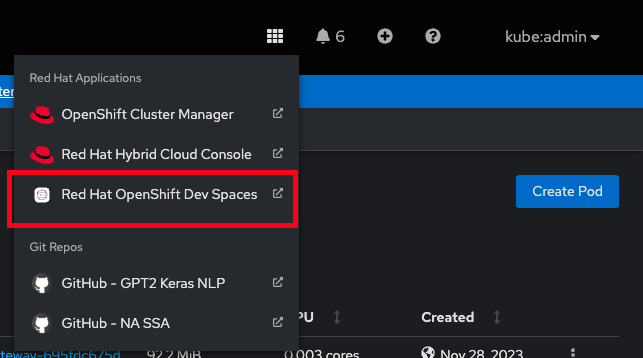

- Launch DevSpaces from the waffle menu (application launcher) in the OpenShift Web Console

Note

DevSpaces may take 5+ minutes to become available after the bootstrap setup completes.

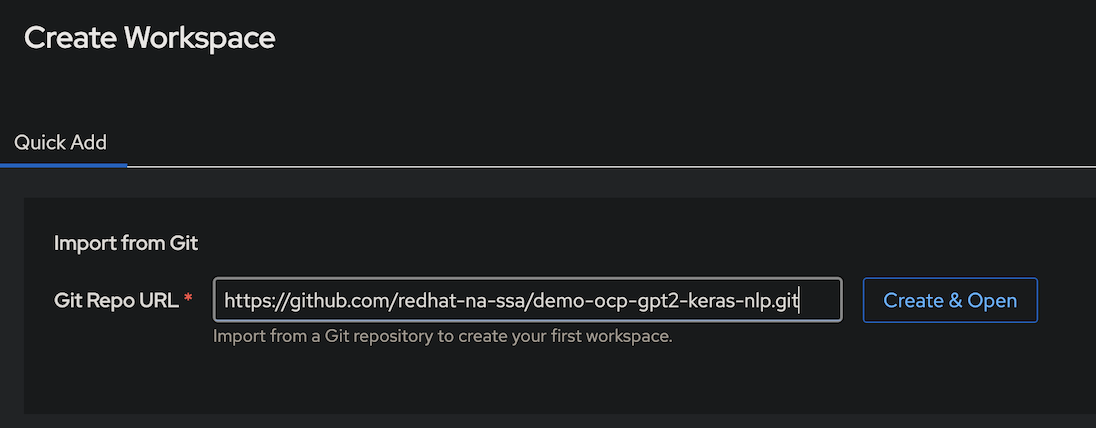

- Create & Open DevSpaces with the current repo

- Click the

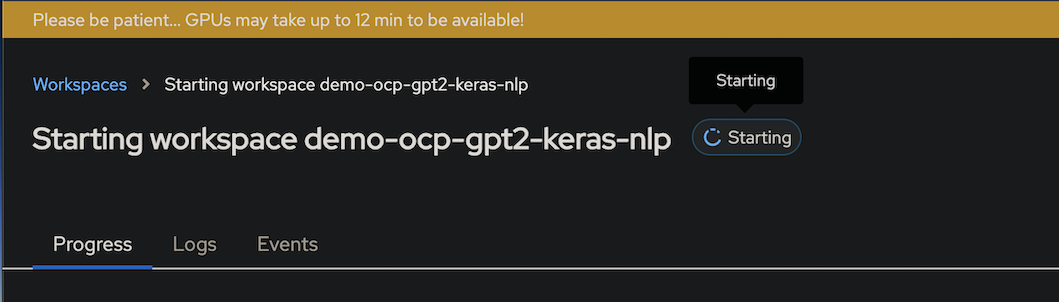

Eventssubmenu to watch progress

Note

Workspace startup may take 12+ minutes.

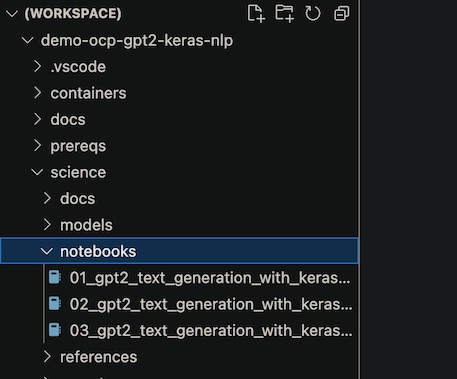

- Open the science/notebooks folder

- Run the notebooks in order