🚀 This repository holds the assets that supplement the article "Offline to Online: Feature Storage for Real-time Recommendation Systems with NVIDIA Merlin" written originally for the NVIDIA Developer Blog.

We provide examples of recommendation system architectures along with cloud deployment instructions for production usage. Each example relies on Redis and the NVIDIA Merlin framework, which provides a number of building blocks for creating recommendation systems.

There are three examples within this repository:

- Offline Batch Recommendations

- Online Recommendation Systems

- Large-Scale Recommender Models with HugeCTR

The large-scale example expands on the second architecture for use cases that demand training or inference at large scale (more than one GPU).

Each example is designed to run locally on an NVIDIA GPU-enabled system with Docker and Docker Compose. We recommend executing all of the following on a cloud instance with an NVIDIA GPU (ideally the AWS PyTorch AMI).

We also provide a set of Terraform scripts and Ansible playbooks that can deploy the infrastructure necessary to execute the examples on AWS instances.

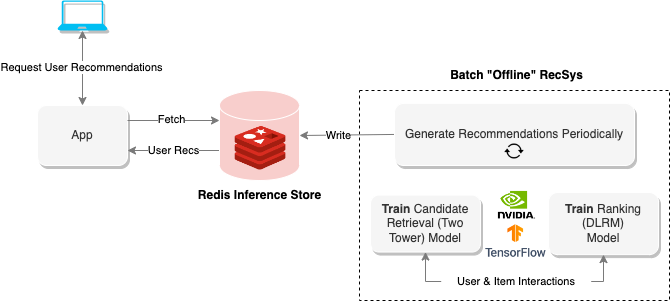

"Offline" recommendation systems use batch computing to process large quantities of data and then store them for later retrieval. The diagram above shows an example of such a system that uses a Two-Tower approach to generate recommendations and then stores them inside a Redis database for later retrieval.

The offline notebook shows how to build this type of recommendation system. It also trains and exports the models needed to run the online recommendation system in the following section.
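The batch pattern described above can be illustrated with a minimal, standard-library-only sketch. It is not the notebook's code: the embeddings are made-up toy values standing in for the output of the two towers, the `recs:<user_id>` key format is a hypothetical choice, and a plain dictionary stands in for Redis.

```python
import json


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def batch_recommend(user_vecs, item_vecs, k=2):
    """Score every item for every user and keep the top-k item IDs.

    Returns a dict that stands in for Redis (key -> JSON string).
    With a real Redis client, each assignment would be a write such as SET.
    """
    store = {}
    for user_id, user_vec in user_vecs.items():
        ranked = sorted(
            item_vecs,
            key=lambda item_id: dot(user_vec, item_vecs[item_id]),
            reverse=True,
        )
        # Hypothetical key format, chosen only for this illustration.
        store[f"recs:{user_id}"] = json.dumps(ranked[:k])
    return store


def get_recommendations(store, user_id):
    """Later retrieval: a single key lookup, no model inference needed."""
    raw = store.get(f"recs:{user_id}")
    return json.loads(raw) if raw is not None else []


# Toy embeddings (assumed values, not produced by a trained model).
user_vecs = {"u1": [1.0, 0.0], "u2": [0.0, 1.0]}
item_vecs = {"i1": [0.9, 0.1], "i2": [0.2, 0.8], "i3": [0.5, 0.5]}

store = batch_recommend(user_vecs, item_vecs, k=2)
print(get_recommendations(store, "u1"))  # ['i1', 'i3']
```

The point of the design is that all scoring happens in the batch job, so serving a recommendation reduces to one key lookup.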

To execute the notebook, run the following

$ cd offline-batch-recsys/

$ docker compose up # -d to daemonize

Then open the link generated by Jupyter in a browser.

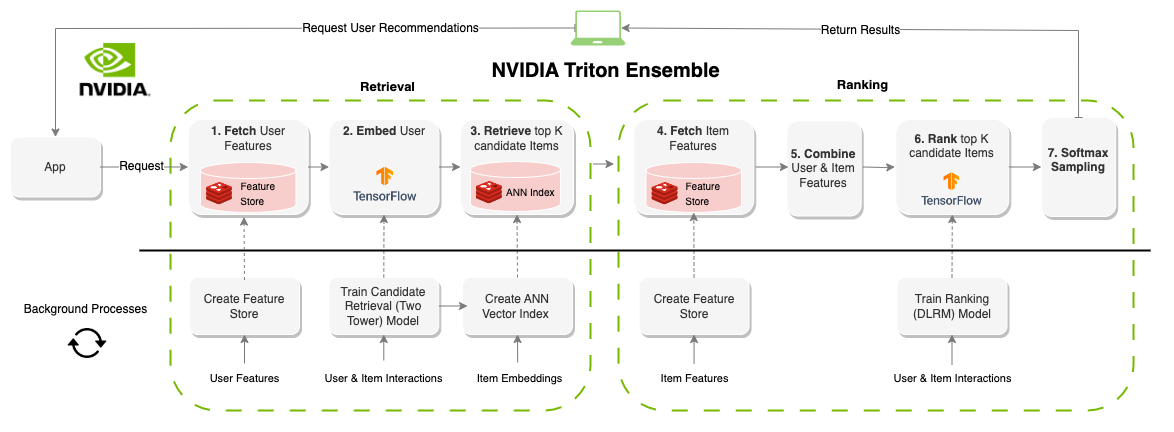

An "online" recommendation system generates recommendations on demand. Unlike batch-oriented systems, online systems are latency-constrained: when designing them, the time taken to produce recommendations is likely the most important factor. With latency commonly capped at around 100-300 ms, each portion of the system needs components that are not only efficient but also scalable to millions of users and items. Building an online recommendation system involves significantly more constraints than building a batch system, but the result is often better recommendations because information (features) can be updated in real time. The diagram above shows an example of this architecture.
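To make the latency constraint concrete, here is a small sketch that runs a multi-stage pipeline and times each stage against a total budget. The stage names, the toy data, and the 300 ms budget (the upper end of the range mentioned above) are assumptions for illustration; in the notebooks the corresponding work is done by Redis and Triton rather than by in-process functions.

```python
import time

BUDGET_MS = 300.0  # assumed cap, upper end of the 100-300 ms range

# Toy in-memory stand-ins for the feature store and the item index.
FEATURES = {"u1": [1.0, 0.0]}
ITEMS = {"i1": [0.9, 0.1], "i2": [0.2, 0.8], "i3": [0.5, 0.5]}


def fetch_features(user_id):
    """Stage 1: look up the user's feature vector."""
    return FEATURES[user_id]


def retrieve_candidates(user_vec):
    """Stage 2: score candidates (brute force here, a vector index in practice)."""
    return [
        (sum(a * b for a, b in zip(user_vec, vec)), item_id)
        for item_id, vec in ITEMS.items()
    ]


def rank(scored):
    """Stage 3: order candidates by score, best first."""
    return [item_id for _, item_id in sorted(scored, reverse=True)]


def run_pipeline(stages, request, budget_ms=BUDGET_MS):
    """Run stages in order, record per-stage latency, and check the total."""
    timings = {}
    value = request
    for name, stage in stages:
        start = time.perf_counter()
        value = stage(value)
        timings[name] = (time.perf_counter() - start) * 1000.0
    within_budget = sum(timings.values()) <= budget_ms
    return value, timings, within_budget


stages = [
    ("feature_lookup", fetch_features),
    ("retrieval", retrieve_candidates),
    ("ranking", rank),
]
result, timings, within_budget = run_pipeline(stages, "u1")
print(result)  # ['i1', 'i3', 'i2']
```

Recording latency per stage rather than only end to end shows which component consumes the budget when the total is exceeded.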

The previous example for batch recommendations generates the models and datasets for this notebook, but you can also download pre-trained assets with the AWS CLI as follows:

aws s3 cp s3://redisventures/merlin/merlin-recsys-data.zip ./data

To execute the notebook, run the following

$ cd online-multi-stage-recsys/

$ docker compose up # -d to daemonize

This section contains two notebooks: one for deploying the feature store (Redis) and creating the vector index (Redis), and another for defining and running the ensemble model that runs the entire pipeline (Triton).

Note: Be sure to run the first notebook prior to the second or the model will not execute.

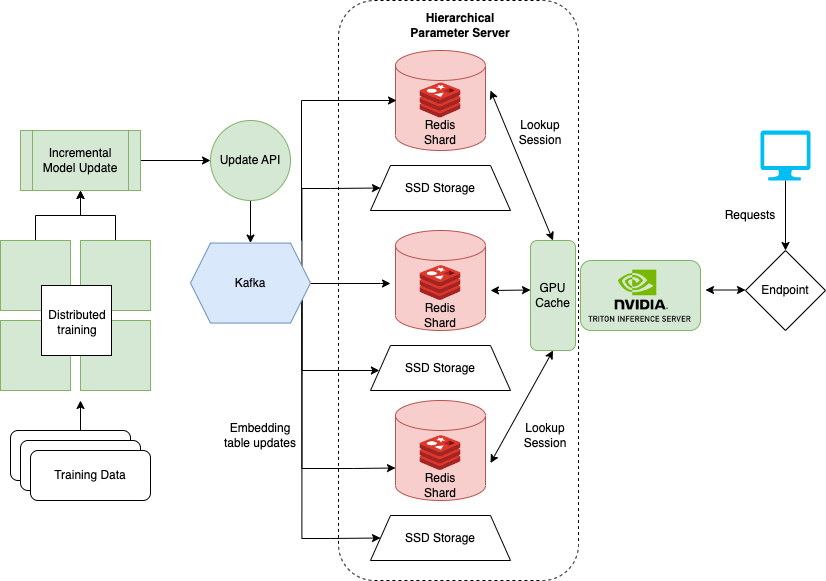

The last notebook shows how to handle very large datasets when training models like DLRM for recommendation systems. Large enterprises often have millions of users and items, so the entire embedding table of a model may not fit on a single GPU. For this, NVIDIA created the HugeCTR framework.

HugeCTR is part of the NVIDIA Merlin framework and adds facilities for distributed training and serving of recommendation models. The notebook detailed here focuses on the deployment and serving of HugeCTR and provides a pre-trained version of DLRM that can be used for the example. More information on distributed training with HugeCTR can be found here.
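To see why an embedding table can outgrow a single GPU, a rough back-of-the-envelope calculation helps. The sketch below is only arithmetic: the row count, embedding dimension, and 40 GiB of GPU memory are illustrative assumptions, it counts embedding weights only (no optimizer state or activations), and it does not model how HugeCTR actually distributes embeddings.

```python
import math


def embedding_table_gib(num_rows, dim, bytes_per_value=4):
    """Size of a dense embedding table in GiB (float32 by default)."""
    return num_rows * dim * bytes_per_value / 2**30


def min_gpus(table_gib, gpu_memory_gib):
    """Lower bound on GPUs needed if the table is split evenly across them."""
    return math.ceil(table_gib / gpu_memory_gib)


# Assumed example: 100 million users/items, 128-dimensional embeddings.
size = embedding_table_gib(num_rows=100_000_000, dim=128)
print(f"{size:.1f} GiB")          # 47.7 GiB
print(min_gpus(size, 40))         # 2 (assuming 40 GiB of memory per GPU)
```

Even under these modest assumptions the table alone exceeds the memory of the assumed GPU, which is the situation distributed training and serving are meant to address.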

To execute the local notebook, run the following

$ cd large-scale-recsys/

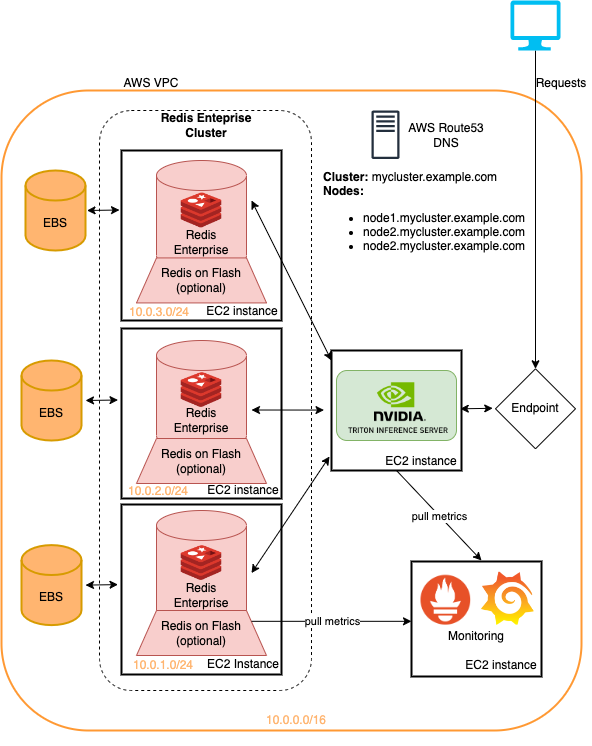

$ docker compose up # -d to daemonizeThis folder includes terraform scripts and ansible playbooks that deploy

- Redis Enterprise Software

- GPU instances for Triton inference serving

- Grafana and Prometheus instances for monitoring

- A VPN

- DNS records

All on Amazon Web Services.

The examples presented in this repository can all be run on the terraformed infrastructure with minimal changes. This is a quick way to deploy the infrastructure and try out the recommendation system pipelines detailed here. See the README within the cloud-deployment folder for more.

The models in this tutorial can be retrieved with the AWS CLI by running:

aws s3 cp s3://redisventures/merlin/merlin-recsys-data.zip ./data

The following repositories link to code/assets used in articles and notebooks

The notebooks here build on the work of many pre-existing notebooks such as

We highly recommend reading