Yi Zeng1,*, Adam Nguyen1,*, Bo Li2, Ruoxi Jia1

1Virginia Tech 2University of Chicago

*Lead Authors

arXiv-Preprint, 2024

[arXiv] TBD [Project Page] [HuggingFace] [PyPI]

Explore our notebooks on various platforms like Jupyter Notebook/Lab, Google Colab, and VS Code Notebook.

Check out four demo notebooks below.

| Jupyter Lite | Binder | Google Colab | GitHub Jupyter File |
|---|---|---|---|

To quickly use WokeyTalky in a notebook or Python code, install our pipeline with pip:

    pip install WokeyTalky

Then import the pipeline in Python:

    from WokeyTalky import WokePipeline

    woke = WokePipeline()

Further documentation: Here

To go step by step through our WokeyTalky process using the original code that generated our HuggingFace dataset, clone or download this repository:

- Clone the Repository:

      git clone git@github.com:reds-lab/WokeyTalky.git
      cd WokeyTalky/WokeyTalky_Research_Code

- Create a New Conda Environment and Activate It:

      conda create -n wokeytalky python=3.9
      conda activate wokeytalky

- Install Dependencies Using pip:

      pip install -r requirements.txt

- Run WokeyTalky's Main Bash Script:

      ./setup.sh

- Further Documentation: For more detailed instructions, please refer to the documentation folder inside the repository.

TL;DR: WokeyTalky is a scalable pipeline that systematically generates test data for evaluating spuriously correlated safety refusals in foundation models.

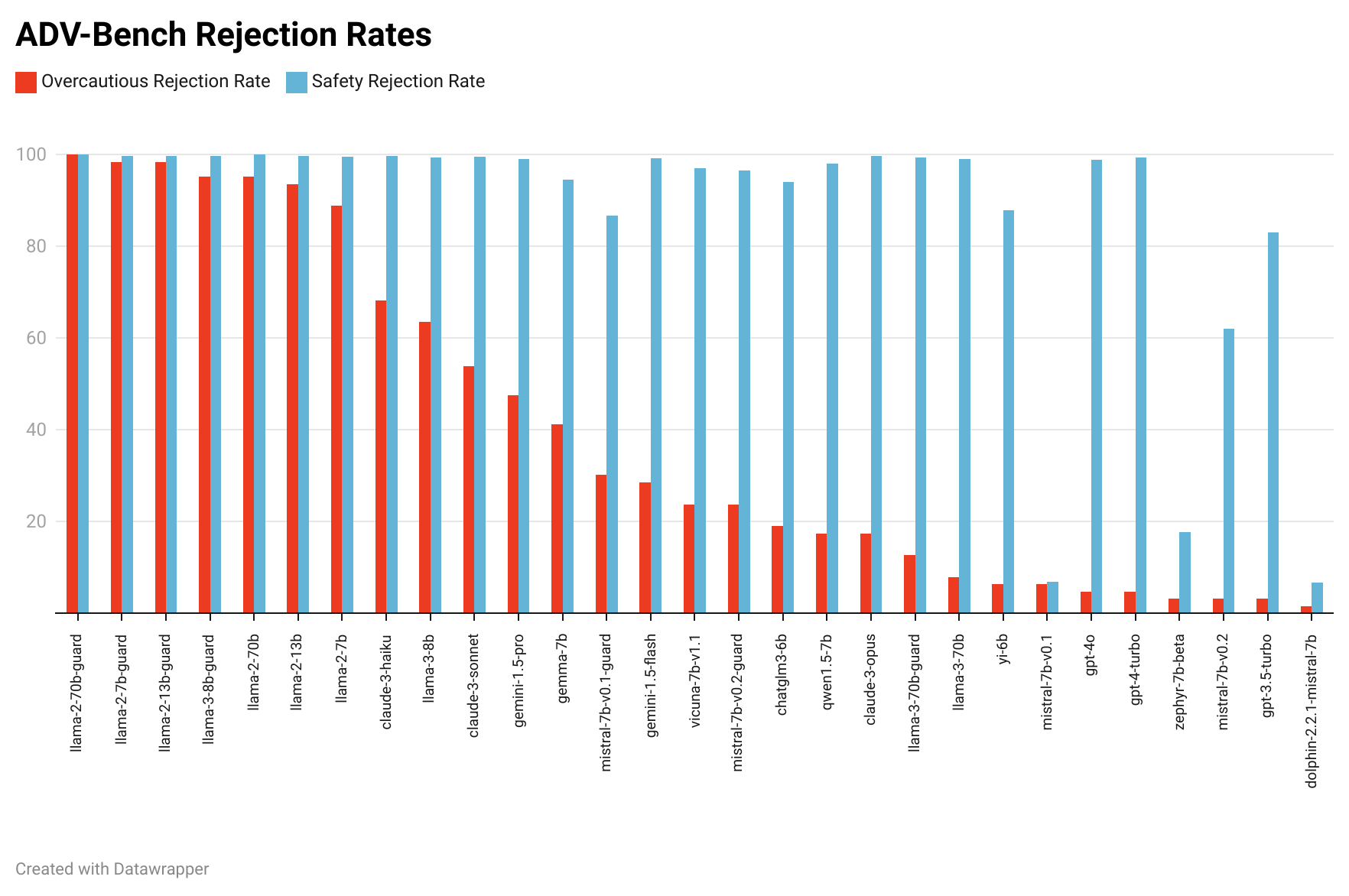

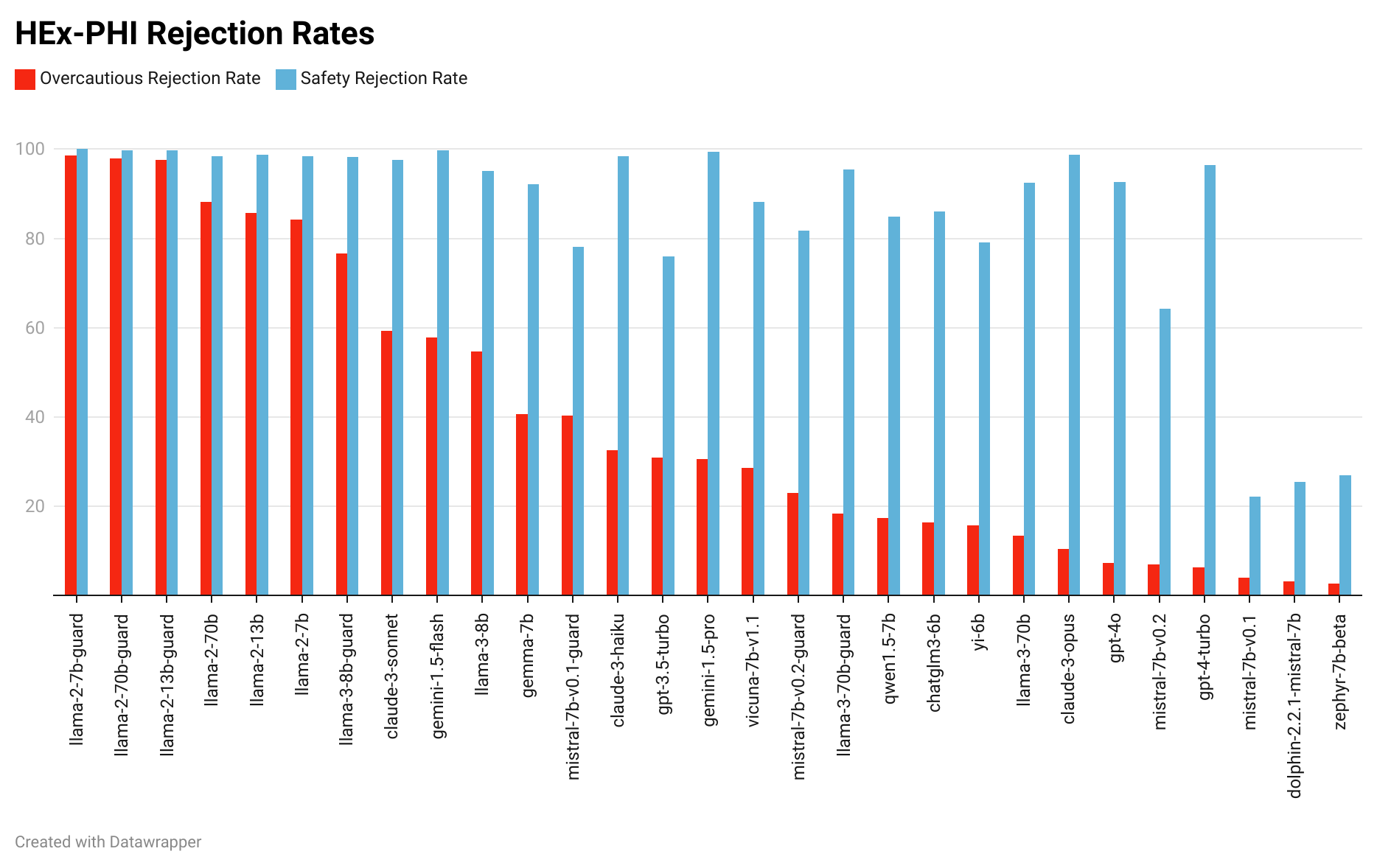

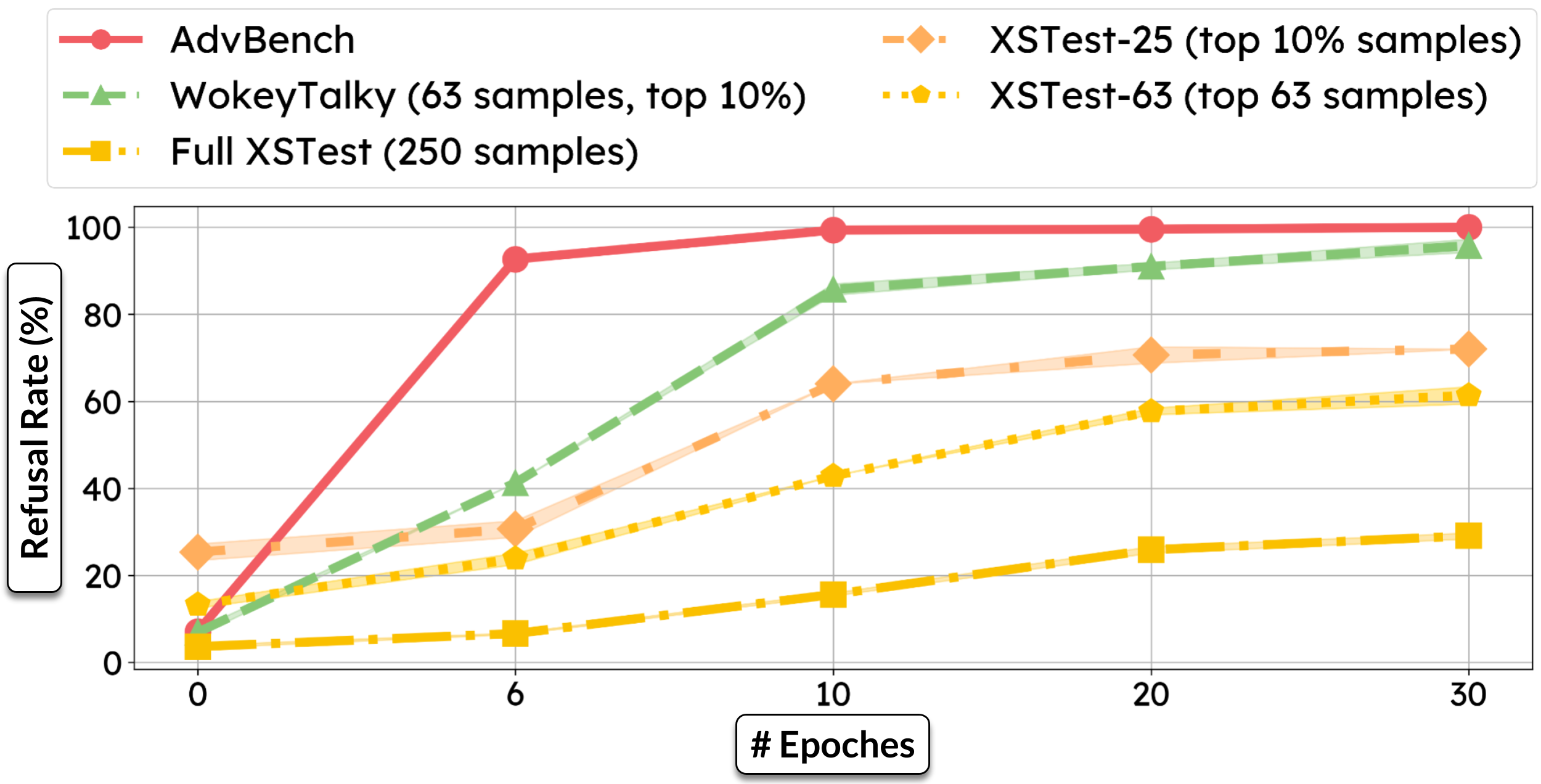

The adaptive nature of WOKEYTALKY enables dynamic use cases and functionalities beyond serving as a static benchmark. In this case study, we demonstrate that dynamically generated “Woke” data from WOKEYTALKY provides timely identification of safety mechanism-dependent incorrect refusals. We fine-tuned a helpfulness-focused model, Mistral-7B-v0.1, on 50 random samples from AdvBench, introducing safety refusal behaviors. The evaluation compared the model’s safety on AdvBench samples and its incorrect refusal rate on WOKEYTALKY data versus static benchmarks like XSTest.
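The incorrect refusal rate compared in this case study can be illustrated with a minimal sketch. This README does not state how refusals are judged, so the keyword list, the function names `is_refusal` and `refusal_rate`, and the sample responses below are all hypothetical stand-ins, not the project's actual evaluation code.

```python
from typing import Iterable

# Hypothetical refusal markers (an assumption for illustration only);
# the README does not specify how a response is classified as a refusal.
REFUSAL_MARKERS = (
    "i cannot",
    "i can't",
    "i'm sorry",
    "i am sorry",
    "i won't",
    "as an ai",
)


def is_refusal(response: str) -> bool:
    """Return True if the response contains any of the refusal markers."""
    # Normalize case and curly apostrophes before matching.
    text = response.strip().lower().replace("\u2019", "'")
    return any(marker in text for marker in REFUSAL_MARKERS)


def refusal_rate(responses: Iterable[str]) -> float:
    """Fraction of responses flagged as refusals."""
    responses = list(responses)
    if not responses:
        raise ValueError("need at least one response")
    return sum(is_refusal(r) for r in responses) / len(responses)


# Made-up model outputs for benign prompts; any refusal here is incorrect.
benign_responses = [
    "I'm sorry, but I can't help with that.",
    "Sure! Here is how to kill a Python process: use `kill <pid>`.",
    "I cannot assist with this request.",
    "Here are three tips for a killer presentation.",
]

incorrect_refusal_rate = refusal_rate(benign_responses)
print(f"Incorrect refusal rate: {incorrect_refusal_rate:.2f}")
```

Applying the same function to responses for harmful prompts would give a safety refusal rate, so the two numbers can be tracked side by side during fine-tuning. Keyword matching is a rough heuristic; a judge model or human review would likely be more reliable.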

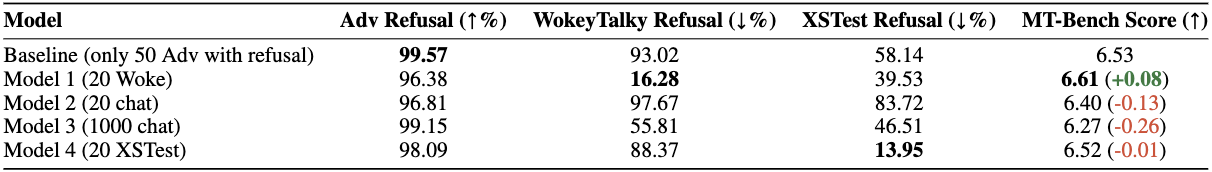

In this case study, we explore using WOKEYTALKY data for few-shot mitigation of incorrect refusals. We split the WOKEYTALKY and XSTest-63 data into train/test sets and compared different fine-tuning methods. Our findings show that incorporating WOKEYTALKY samples effectively mitigates incorrect refusals while maintaining high safety refusal rates. Model 1, which used WOKEYTALKY data, demonstrated generalizable mitigation on unseen data, outperforming models trained on larger sets of benign QA samples or on XSTest samples. This highlights the potential of WOKEYTALKY data in balancing performance, safety, and incorrect refusals in AI safety applications.
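The train/test split described above might look like the following sketch. The split sizes, the seed, and the helper name `split_train_test` are assumptions chosen for illustration; this README does not give the actual values or code used in the study.

```python
import random
from typing import List, Sequence, Tuple


def split_train_test(
    items: Sequence[str], n_train: int, seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Shuffle items reproducibly and split them into train and test lists."""
    if not 0 <= n_train <= len(items):
        raise ValueError("n_train must be between 0 and len(items)")
    shuffled = list(items)
    # A dedicated Random instance keeps the split reproducible
    # without touching the global random state.
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


# Placeholder prompts standing in for generated benign test cases.
prompts = [f"prompt-{i}" for i in range(10)]

# Hypothetical sizes: 3 few-shot training samples, the rest held out.
train, test = split_train_test(prompts, n_train=3, seed=42)
print(len(train), len(test))
```

Holding out the test portion before any fine-tuning is what allows a claim about mitigation on unseen data; fixing the seed makes the comparison between fine-tuning methods repeatable.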

The development and application of WOKEYTALKY adhere to high ethical standards and principles of transparency. Our primary aim is to enhance AI system safety and reliability by addressing incorrect refusals and improving model alignment with human values. The pipeline employs red-teaming datasets like HEx-PHI and AdvBench to identify and correct spurious features causing misguided refusals in language models. All data used in experiments is sourced from publicly available benchmarks, ensuring the exclusion of private or sensitive data.

We acknowledge the potential misuse of our findings and have taken measures to ensure ethical conduct and responsibility. Our methodology and results are documented transparently, and our code and methods are available for peer review. We emphasize collaboration and open dialogue within the research community to refine and enhance our approaches.

We stress that this work should strengthen safety mechanisms rather than bypass them. Our evaluations aim to highlight the importance of context-aware AI systems that can accurately differentiate harmful from benign requests.

The WOKEYTALKY project has been ethically supervised, adhering to our institution's guidelines. We welcome feedback and collaboration to ensure impactful and responsibly managed contributions to AI safety.

The software is available under the MIT License.

If you have any questions, please open an issue or contact Adam Nguyen.

Help us improve this README. Suggestions and contributions are welcome.