The Contextual Loss [project page]

This is a TensorFlow implementation of the Contextual loss function as reported in the following papers (a PyTorch implementation is also available; see below):

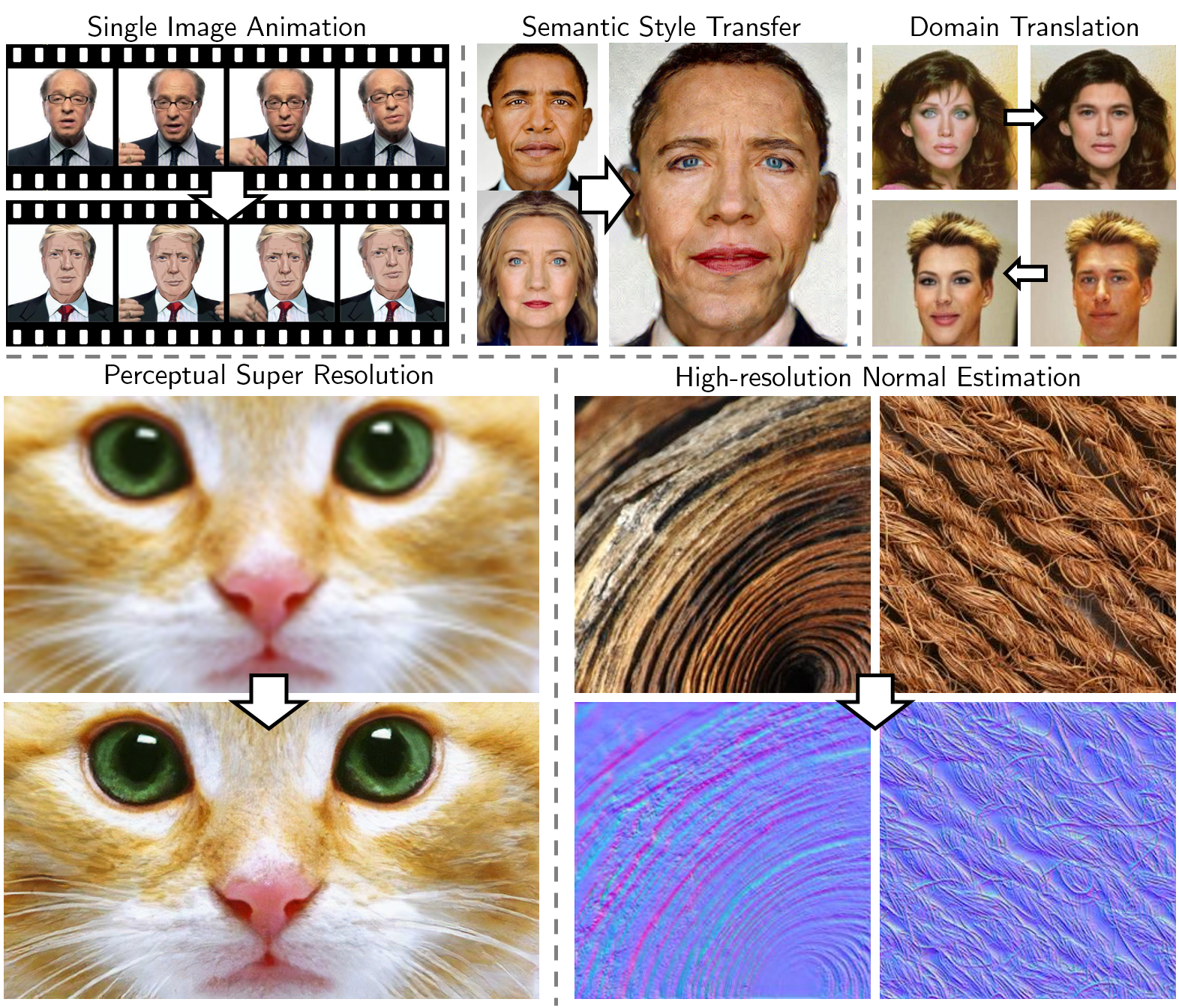

The Contextual Loss for Image Transformation with Non-Aligned Data, arXiv

Learning to Maintain Natural Image Statistics, arXiv

Roey Mechrez*, Itamar Talmi*, Firas Shama, Lihi Zelnik-Manor. The Technion

Copyright 2018 Itamar Talmi and Roey Mechrez. Licensed for noncommercial research use only.

This code mainly provides the contextual loss function. The two papers cover many applications; here we provide only one: animation from a single image.
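For orientation, here is a minimal NumPy sketch of the contextual loss as we read it from the first paper. It is not the code in this repository. The function name `contextual_loss`, the bandwidth `h=0.5`, and the constant `eps=1e-5` are assumptions made for illustration, and the features are assumed to be already flattened to shape `(N, C)`.

```python
import numpy as np


def contextual_loss(x, y, h=0.5, eps=1e-5):
    """Sketch of the contextual loss between two feature sets.

    x: (N, C) features of the generated image (assumed already flattened).
    y: (M, C) features of the target image.
    h, eps: bandwidth and stabilizer; the defaults are assumptions.
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)

    # Center both sets on the mean target feature, then L2-normalize rows.
    mu_y = y.mean(axis=0, keepdims=True)
    xc = x - mu_y
    yc = y - mu_y
    xc /= np.linalg.norm(xc, axis=1, keepdims=True) + 1e-12
    yc /= np.linalg.norm(yc, axis=1, keepdims=True) + 1e-12

    # Cosine distance d[i, j] between x_i and y_j.
    d = np.clip(1.0 - xc @ yc.T, 0.0, None)

    # Normalize each row by its smallest distance.
    d_norm = d / (d.min(axis=1, keepdims=True) + eps)

    # Turn distances into similarities and normalize each row to sum to 1.
    w = np.exp((1.0 - d_norm) / h)
    cx = w / w.sum(axis=1, keepdims=True)

    # For every target feature take its best-matching x_i, then average.
    cx_xy = cx.max(axis=0).mean()
    return -np.log(cx_xy)
```

With identical feature sets, `contextual_loss(x, x)` is close to zero; it grows as the two sets become less similar. In practice the features would come from a pretrained network such as VGG, which this sketch leaves out.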

An example pre-trained model can be downloaded from this link

The data for this example can be downloaded from this link

Required Python libraries: TensorFlow (>=1.0, <1.9, tested on 1.4) + SciPy + NumPy + easydict

Tested on Windows + Intel i7 CPU + Nvidia Titan Xp (and 1080ti) with CUDA (>=8.0) and cuDNN (>=5.0). CPU mode should also work with minor changes.
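As a convenience, the TensorFlow version range above can be checked before running anything. The helper below is a hypothetical sketch, not part of this repository; it only compares the major and minor components of a version string.

```python
def tf_version_supported(version):
    """Return True if `version` falls in the range >=1.0, <1.9.

    `version` is a string such as "1.4.0"; only major.minor is compared.
    This helper is illustrative and not part of the repository.
    """
    major, minor = (int(part) for part in version.split(".")[:2])
    return (1, 0) <= (major, minor) < (1, 9)
```

With TensorFlow installed, it could be called as `tf_version_supported(tf.__version__)`.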

- Clone this repository.
- Download the pretrained model from this link
- Extract the zip file under the `result` folder. The models should be in `based_dir/result/single_im_D32_42_1.0_DC42_1.0/`
- Update `config.base_dir` and `config.vgg_model_path` in `config.py` and run: `single_image_animation.py`

To train the model:

- Change `config.TRAIN.to_train` to `True`
- Arrange the paths to the data; the data folder should have `train` and `test` folders
- Run `single_image_animation.py` for 10 epochs.
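The directory layout described in the steps above can be checked before running the script. The following is a small sketch using only the standard library. The helper name `missing_paths` and its two arguments are hypothetical, and it assumes the extracted model folder and the `train`/`test` folders sit where the steps say.

```python
from pathlib import Path


def missing_paths(base_dir, data_dir):
    """List expected folders that do not exist yet.

    base_dir: the folder configured as config.base_dir (assumption).
    data_dir: the folder holding the train and test data (assumption).
    """
    expected = [
        Path(base_dir) / "result" / "single_im_D32_42_1.0_DC42_1.0",
        Path(data_dir) / "train",
        Path(data_dir) / "test",
    ]
    return [str(p) for p in expected if not p.is_dir()]
```

An empty list means every expected folder was found; otherwise the returned paths show what still needs to be extracted or arranged.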

We have also released a PyTorch implementation of the loss function. See CX/CX_distance.py. Note that we haven't tested this implementation to reproduce the results in the paper.

This software is provided under the provisions of the GNU Lesser General Public License (LGPL). See: http://www.gnu.org/copyleft/lesser.html.

This software can be used only for research purposes. You should cite the aforementioned papers in any resulting publication.

The Software is provided "as is", without warranty of any kind.

If you use our code for research, please cite our papers:

@article{mechrez2018contextual,

title={The Contextual Loss for Image Transformation with Non-Aligned Data},

author={Mechrez, Roey and Talmi, Itamar and Zelnik-Manor, Lihi},

journal={arXiv preprint arXiv:1803.02077},

year={2018}

}

@article{mechrez2018Learning,

title={Learning to Maintain Natural Image Statistics},

author={Mechrez, Roey and Talmi, Itamar and Shama, Firas and Zelnik-Manor, Lihi},

journal={arXiv preprint arXiv:1803.04626},

year={2018}

}

[1] Template Matching with Deformable Diversity Similarity, https://github.com/roimehrez/DDIS

[2] Photographic Image Synthesis with Cascaded Refinement Networks https://cqf.io/ImageSynthesis/