Extension package of TVM Deep Learning Compiler for Renesas DRP-AI accelerators powered by EdgeCortix MERA™ (DRP-AI TVM)

TVM Documentation | TVM Community | TVM GitHub

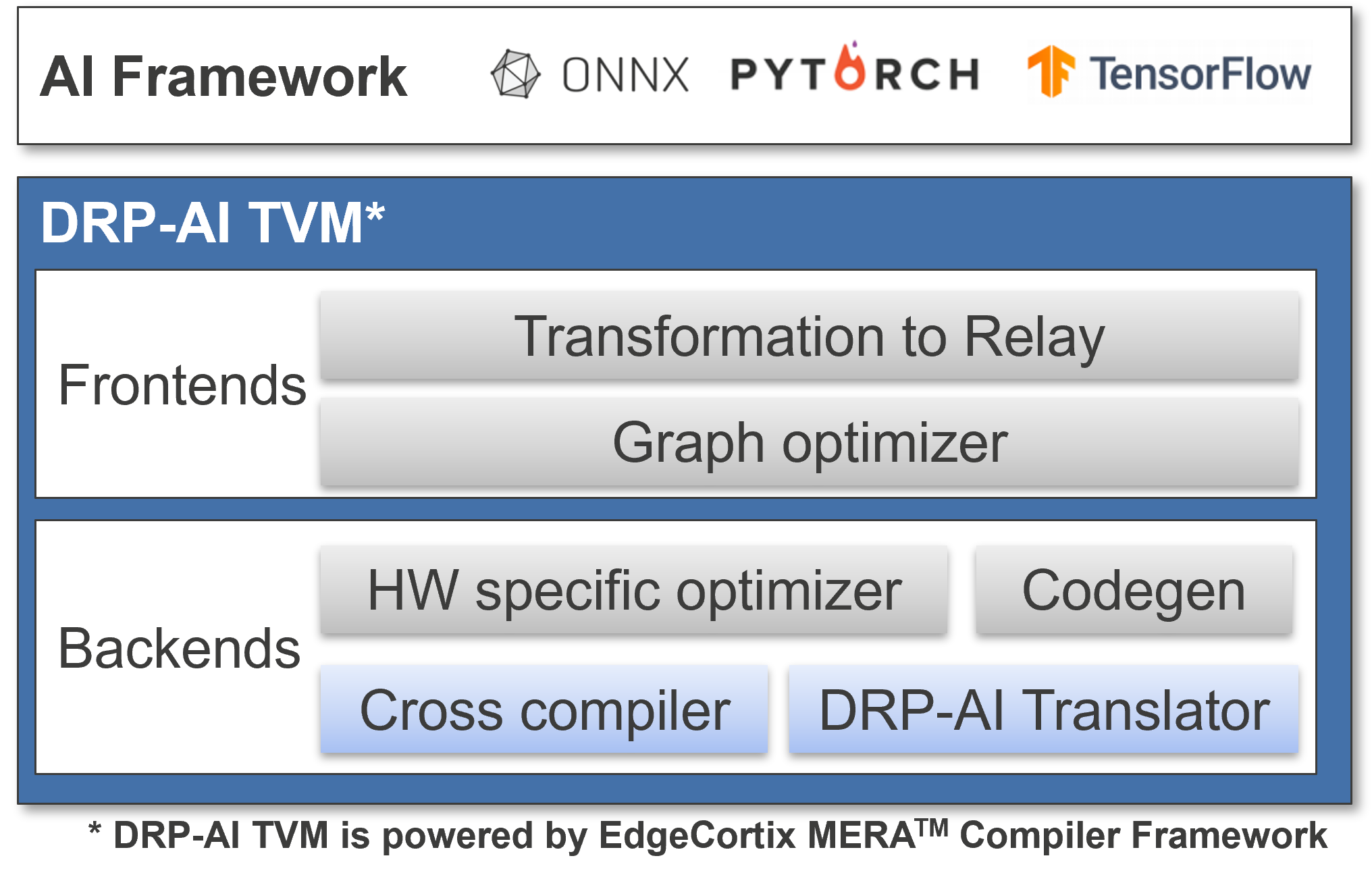

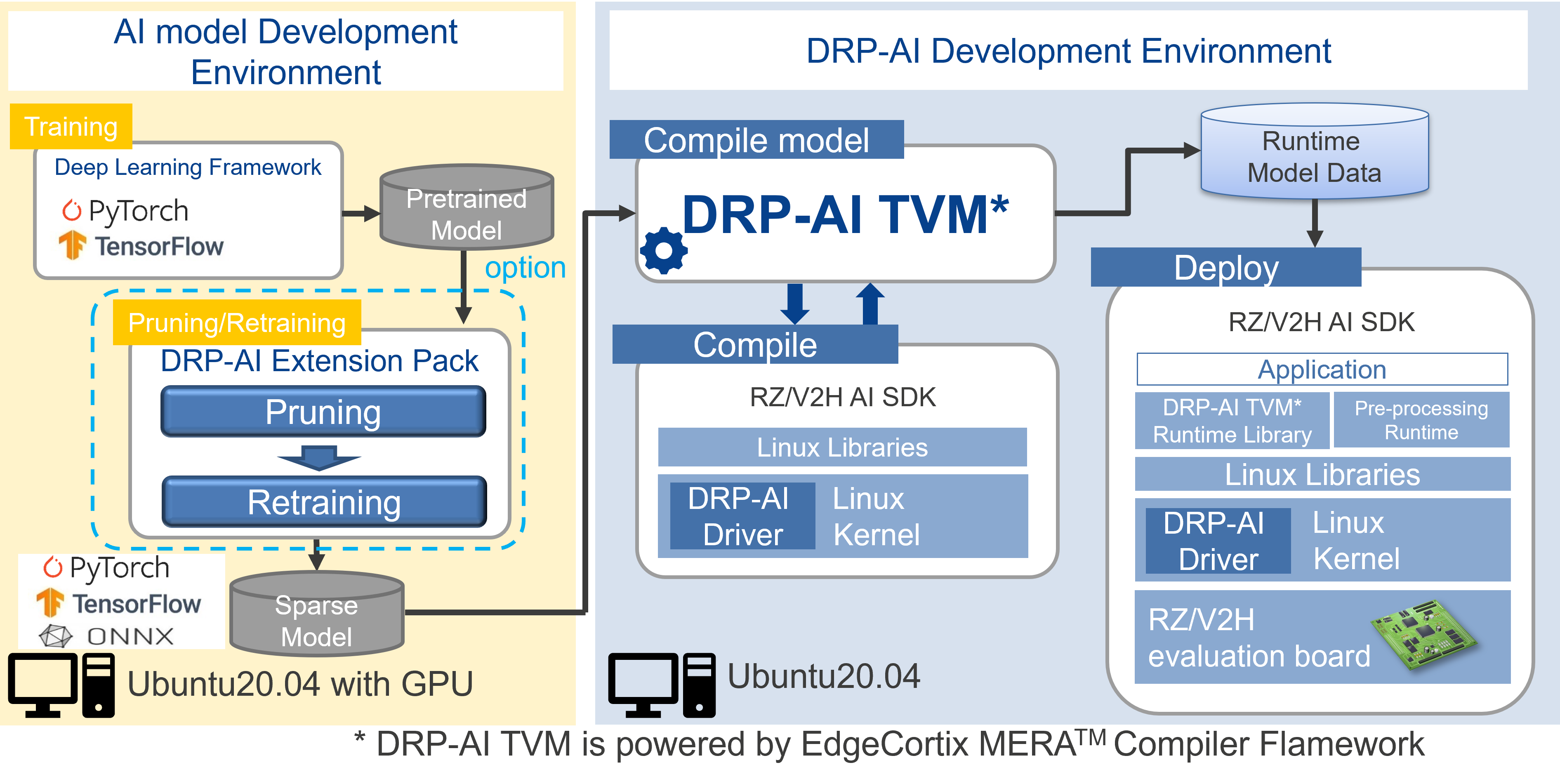

DRP-AI TVM¹ is a machine learning compiler plugin for Apache TVM that targets DRP-AI, the AI accelerator provided by Renesas Electronics Corporation.

(C) Copyright EdgeCortix, Inc. 2022

(C) Copyright Renesas Electronics Corporation 2022

Licensed under the Apache-2.0 license.

Supported embedded platforms:

- Renesas RZ/V2L Evaluation Board Kit
- Renesas RZ/V2M Evaluation Board Kit
- Renesas RZ/V2MA Evaluation Board Kit
- Renesas RZ/V2H Evaluation Board Kit

This compiler stack extends the DRP-AI Translator to the TVM backend, so that the CPU and DRP-AI can work together to run AI model inference.

| Directory | Details |
|---|---|
| tutorials | Sample compile scripts |
| apps | Sample inference application for the target board |
| setup | Setup scripts for building a TVM environment |
| obj | Pre-built runtime binaries |
| docs | Documents, e.g., Model List and API List |
| img | Image files used in this document |
| tvm | TVM repository from GitHub |
| 3rdparty | Third-party tools |
| how-to | Samples for solving specific problems, e.g., how to run validation between x86 and DRP-AI |
| pruning | Sample scripts for pruning models with the DRP-AI Extension Package |

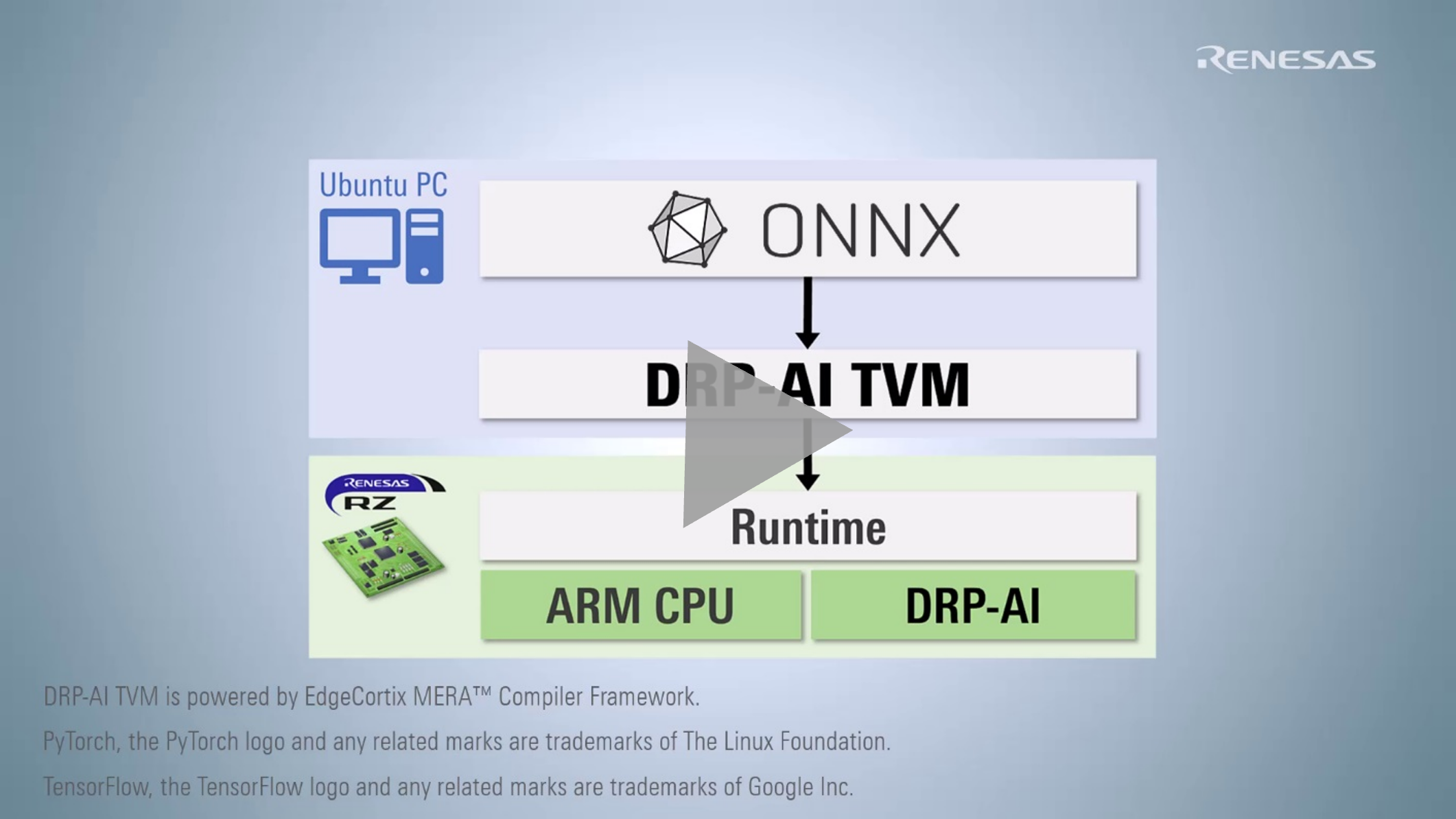

The following video is a brief tutorial on how to deploy an ONNX model on an RZ/V series board.

RZ/V DRP-AI TVM Tutorial - How to Run ONNX Model (YouTube)

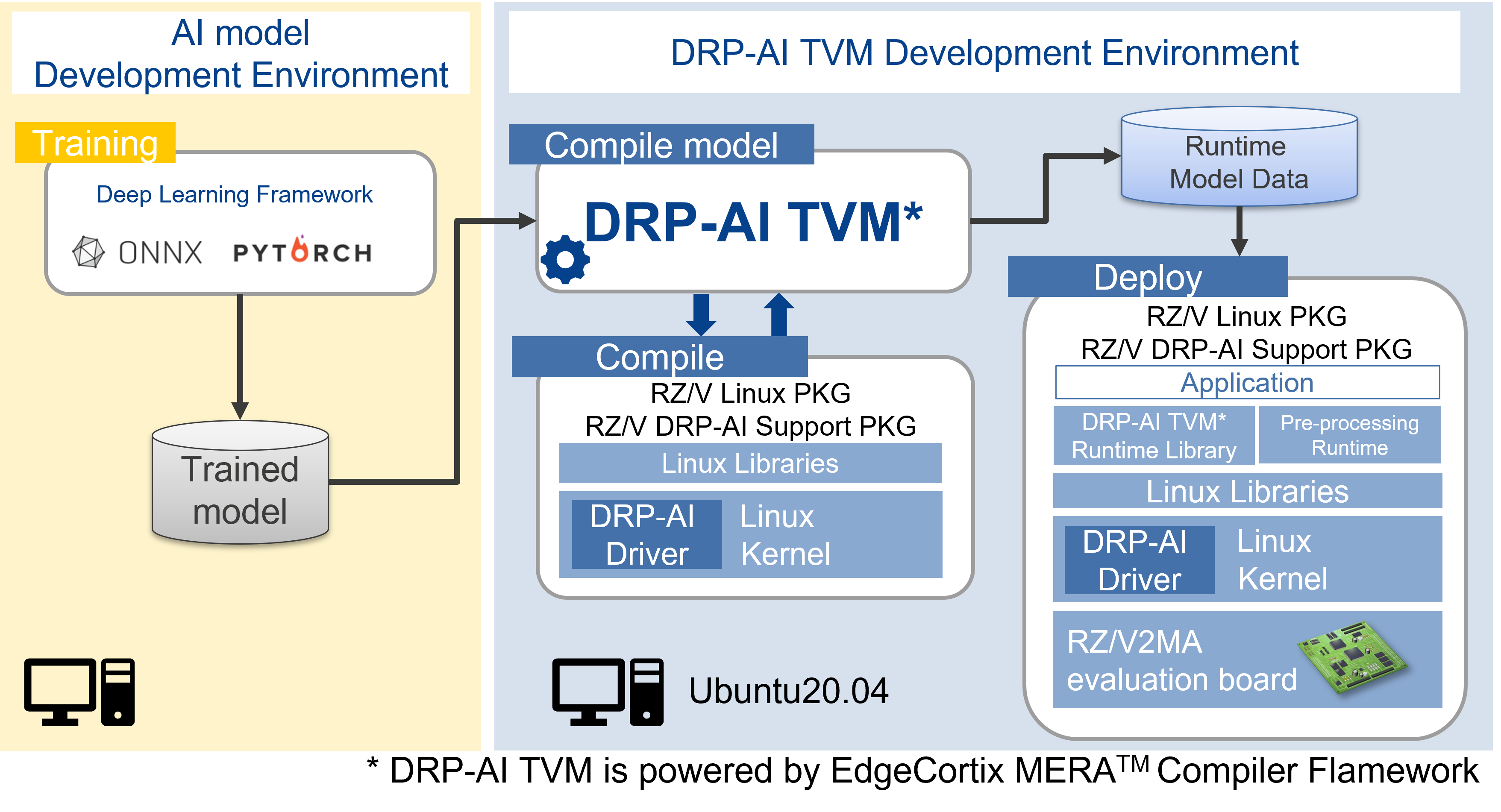

To deploy an AI model to DRP-AI on the target board, first compile the model with DRP-AI TVM¹ to generate the Runtime Model Data (Compile).

Compiling the model requires the SDK generated from the RZ/V Linux Package and the DRP-AI Support Package.

After compiling the model, copy the generated files to the target board (Deploy).

To run AI model inference, you also need to copy the C++ inference application and the DRP-AI TVM¹ Runtime Library to the target board.

[Figure: RZ/V2L, RZ/V2M, RZ/V2MA]

[Figure: RZ/V2H]

The following pages show an example of compiling the ResNet18 model and running it on the target board.

- What is Pruning and Quantization?

- Installing DRP-AI Extension Package

- Pruning and retraining by DRP-AI Extension Package

- Compile model with DRP-AI TVM¹

- Application Example for DRP-AI TVM (RZ/V2L, RZ/V2M, RZ/V2MA)

- Application Example for DRP-AI TVM (RZ/V2H)

For more AI examples, see the How-to page.

For the list of models that Renesas has tested, see the Model List.

If an error occurs during compilation or at runtime, refer to the Error List.

The How-to page covers topics such as:

- Profiler
- Validation between x86 and DRP-AI
- and more.

If you have any questions, please contact Renesas Technical Support.