
- Code

- Datasets

- Deployment and Failure Injection Scripts of Train-Ticket

- Citation

- Supplementary details

A preprint version: https://arxiv.org/abs/2207.09021

See `requirements.txt` and `requirements-dev.txt`. Note that DGL 0.8 was not yet released at the time of writing, so you need to install DGL 0.8 manually from source. PyTorch must be version 1.11.0 or later.
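One way to confirm that the installed packages meet these minimums is sketched below. The helper names `version_tuple` and `check_environment` are hypothetical (they are not part of this repository). The check assumes any DGL release at or above 0.8 is acceptable, and it ignores pre-release and local tags such as `a0` or `+cu113`.

```python
import importlib
import re
from typing import Tuple

# Minimum versions taken from this README; treating DGL 0.8 as a floor is an assumption.
REQUIREMENTS = {"torch": (1, 11, 0), "dgl": (0, 8, 0)}


def version_tuple(version: str, width: int = 3) -> Tuple[int, ...]:
    """Return the leading numeric release, zero-padded to `width` parts.

    '1.11.0+cu113' -> (1, 11, 0), '1.12.0a0+bd13bc6' -> (1, 12, 0), '0.8' -> (0, 8, 0)
    """
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    parts = [int(p) for p in match.group(0).split(".")]
    parts += [0] * (width - len(parts))
    return tuple(parts[:width])


def check_environment() -> None:
    """Raise if a required package is missing or older than its minimum."""
    for module_name, minimum in REQUIREMENTS.items():
        module = importlib.import_module(module_name)  # raises ImportError if missing
        if version_tuple(module.__version__) < minimum:
            wanted = ".".join(map(str, minimum))
            raise RuntimeError(
                f"{module_name} {module.__version__} found, need >= {wanted}"
            )
```

Calling `check_environment()` before running the experiments fails fast with a readable message instead of an obscure error deep inside training.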

I have also published a Docker image on Docker Hub: https://hub.docker.com/repository/docker/lizytalk/dejavu, which is based on the NGC PyTorch image and supports GPU acceleration.

The example commands below use `realpath`, which is not bundled with macOS or Windows. On macOS, you can install it with `brew install coreutils`.

| Algorithm | Usage |

|---|---|

| DejaVu | Run for dataset A1: python exp/run_GAT_node_classification.py -H=4 -L=8 -fe=GRU -bal=True --data_dir=data/A1 |

| JSS'20 | Run for dataset A1: python exp/DejaVu/run_JSS20.py --data_dir=data/A1 |

| iSQUAD | Run for dataset A1: python exp/DejaVu/run_iSQ.py --data_dir=data/A1 |

| Decision Tree | Run for dataset A1: python exp/run_DT_node_classification.py --data_dir=data/A1 |

| RandomWalk@Metric | Run for dataset A1: python exp/DejaVu/run_random_walk_single_metric.py --data_dir=data/A1 --window_size 60 10 --score_aggregation_method=min |

| RandomWalk@FI | Run for dataset A1: python exp/DejaVu/run_random_walk_failure_instance.py --data_dir=data/A1 --window_size 60 10 --anomaly_score_aggregation_method=min --corr_aggregation_method=max |

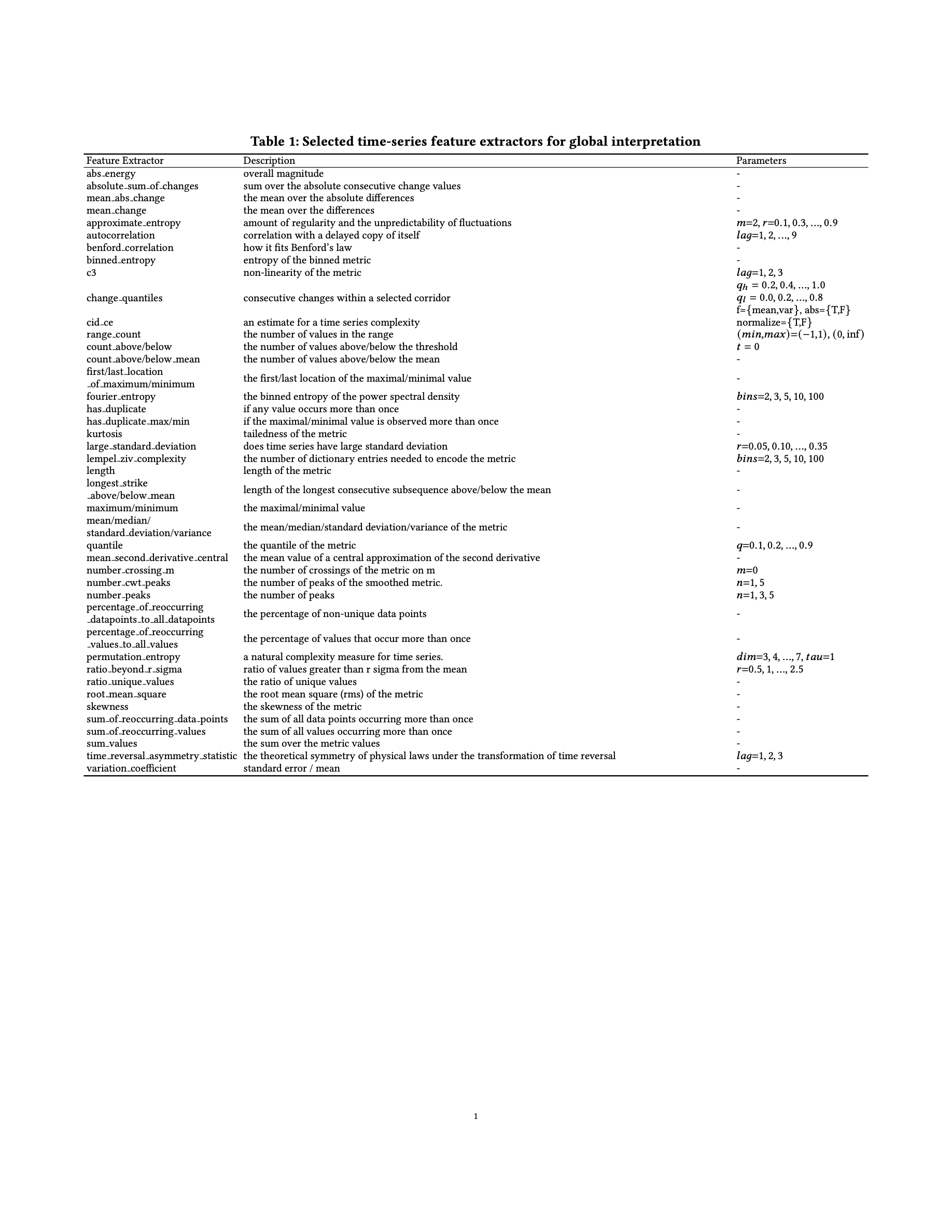

| Global interpretation | Run notebooks/explain.py as a jupyter notebook with jupytext |

| Local interpretation | DejaVu/explanability/similar_faults.py |

Each command prints a one-line summary at the end, containing the following fields: A@1, A@2, A@3, A@5, MAR, Time, Epoch, Valid Epoch, output_dir, val_loss, val_MAR, val_A@1, command, and git_commit_url. These are the desired results.
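The summary reports A@k and MAR. The sketch below shows one common way to compute such metrics, under assumptions not stated in this README: each failure has a single root-cause failure unit, A@k is the percentage of test failures whose root cause appears in the top k of the ranked list, and MAR is the mean rank of the root cause. The function names are hypothetical, and the scripts in `exp/` may handle multiple root causes differently.

```python
from statistics import mean
from typing import Sequence


def rank_of(ranked_units: Sequence[str], root_cause: str) -> int:
    """1-based rank of the root cause in a ranked list of failure units.

    Assumption: a root cause missing from the list is ranked just past the end.
    """
    units = list(ranked_units)
    if root_cause in units:
        return units.index(root_cause) + 1
    return len(units) + 1


def accuracy_at_k(ranks: Sequence[int], k: int) -> float:
    """Percentage of failures whose root cause is ranked within the top k."""
    return 100.0 * sum(r <= k for r in ranks) / len(ranks)


def mean_average_rank(ranks: Sequence[int]) -> float:
    """Mean rank of the root cause over all failures (lower is better)."""
    return float(mean(ranks))
```

For three failures whose root cause is ranked 1st, 2nd and 3rd, this gives A@1 of about 33.33, A@3 of 100.00 and MAR of 2.0.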

Overall, the main DejaVu experiment command should output the following:

- FDG message, including the data paths, edge types, the number of nodes (failure units), the number of metrics, and the metrics of each failure class.

- Training setup message: the faults used for training, validation, and testing.

- Model architecture: the number of parameters in each part and in total.

- Training process: the training/validation/testing loss and accuracy.

- Time report.

- The one-line summary (logged as `command output one-line summary`).

```
$ docker run -it --rm -v $(realpath .):/workspace lizytalk/dejavu bash -c 'source .envrc && python exp/run_GAT_node_classification.py -H=4 -L=8 -fe=GRU -bal=True --data_dir=./data/A1'
...
2022-06-20 03:49:26.204 | INFO | DejaVu.workflow:<lambda>:124 - command output one-line summary: 75.00,93.75,100.00,100.00,1.31,335.0238134629999,,,/SSF/output/run_GAT_node_classification.py.2022-06-20T03:43:49.950103,,,,python exp/run_GAT_node_classification.py -H=4 -L=8 -fe=GRU -aug=True -bal=True --data_dir=./data/A1 --max_epoch=100,https://anonymous-submission-22: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@github.com/anonymous-submission-22/DejaVu/tree/240d7d2514c31ca699b2b818ba7d888e0eb71c4d
train finished. saved to /SSF/output/run_GAT_node_classification.py.2022-06-20T03:43:49.950103
```
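To collect results from many runs, the one-line summary can be split back into the fourteen named fields listed earlier. The sketch below is a hypothetical helper (not part of the repository). It assumes the first twelve fields never contain commas, so it splits them off from the left and then splits the commit URL off from the right, which keeps any commas inside the `command` field intact.

```python
from typing import Dict, Optional

# Field order as listed in this README.
SUMMARY_FIELDS = [
    "A@1", "A@2", "A@3", "A@5", "MAR", "Time", "Epoch", "Valid Epoch",
    "output_dir", "val_loss", "val_MAR", "val_A@1", "command", "git_commit_url",
]
MARKER = "command output one-line summary:"


def parse_summary(line: str) -> Dict[str, Optional[str]]:
    """Map a one-line summary to its named fields; empty fields become None."""
    if MARKER in line:
        # Drop the logger prefix (timestamp, level, source location).
        line = line.split(MARKER, 1)[1]
    line = line.strip()
    # Assumption: the first 12 fields contain no commas.
    parts = line.split(",", 12)
    if len(parts) != 13:
        raise ValueError(f"expected {len(SUMMARY_FIELDS)} fields, got: {line!r}")
    # The command may contain commas, so split the URL off from the right.
    command, url = parts[12].rsplit(",", 1)
    values = parts[:12] + [command, url]
    return {name: (value or None) for name, value in zip(SUMMARY_FIELDS, values)}
```

Numeric fields stay as strings, so a caller would convert them explicitly, e.g. `float(summary["A@1"])`.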

The datasets A, B, C, and D are publicly available at:

- https://www.dropbox.com/sh/ist4ojr03e2oeuw/AAD5NkpAFg1nOI2Ttug3h2qja?dl=0

- https://doi.org/10.5281/zenodo.6955909 (including the raw data of the Train-Ticket dataset)

In each dataset, `graph.yml` or `graphs/*.yml` are the FDGs, `metrics.csv` contains the metrics, and `faults.csv` contains the failures (including ground truths). `FDG.pkl` is a pickle of the FDG object, which contains all of the above data. Note that the pickle files are not compatible across different Python and pandas versions, so if you cannot load a pickle, simply delete it. The pickles are only used to speed up data loading.
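The advice above (treat `FDG.pkl` as an optional cache and delete it when it cannot be loaded) can be sketched as follows. `load_cached_fdg` is a hypothetical helper, not the repository's loader; when it returns `None`, the data would be rebuilt from the YAML and CSV files by the project's own loading code. Only unpickle files from a source you trust, since `pickle.load` can execute arbitrary code.

```python
import pickle
from pathlib import Path
from typing import Any, Optional, Union


def load_cached_fdg(dataset_dir: Union[str, Path]) -> Optional[Any]:
    """Return the unpickled FDG cache, or None if it is absent or unreadable.

    An unreadable cache (e.g. written by another Python/pandas version) is
    deleted, because it only exists to speed up loading.
    """
    pkl_path = Path(dataset_dir) / "FDG.pkl"
    if not pkl_path.exists():
        return None
    try:
        with pkl_path.open("rb") as f:
            return pickle.load(f)
    except Exception:
        # Version mismatches surface as UnpicklingError, AttributeError,
        # ImportError, EOFError, etc.; fall back to the raw files instead.
        pkl_path.unlink()
        return None
```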

The trace data in dataset D is incorrect. Because we missed the Istio Gateway, trace IDs were not properly assigned to the spans (up to commit b653e4a1ddc65e5cf1d51d239a427e2775216069). We have since fixed the issue (https://github.com/lizeyan/train-ticket/commit/898637e770317901a43552adba373703113e7735).

The deployment and failure injection scripts of Train-Ticket are available at https://github.com/lizeyan/train-ticket

```bibtex
@inproceedings{li2022actionable,
  title = {Actionable and Interpretable Fault Localization for Recurring Failures in Online Service Systems},
  booktitle = {Proceedings of the 2022 30th {{ACM Joint Meeting}} on {{European Software Engineering Conference}} and {{Symposium}} on the {{Foundations}} of {{Software Engineering}}},
  author = {Li, Zeyan and Zhao, Nengwen and Li, Mingjie and Lu, Xianglin and Wang, Lixin and Chang, Dongdong and Nie, Xiaohui and Cao, Li and Zhang, Wenchi and Sui, Kaixin and Wang, Yanhua and Du, Xu and Duan, Guoqing and Pei, Dan},
  year = {2022},
  month = nov,
  series = {{{ESEC}}/{{FSE}} 2022}
}
```

Since the DejaVu model is trained with historical failures, it is straightforward to interpret how it diagnoses a given failure by identifying the historical failures from which it learns to localize the root causes.

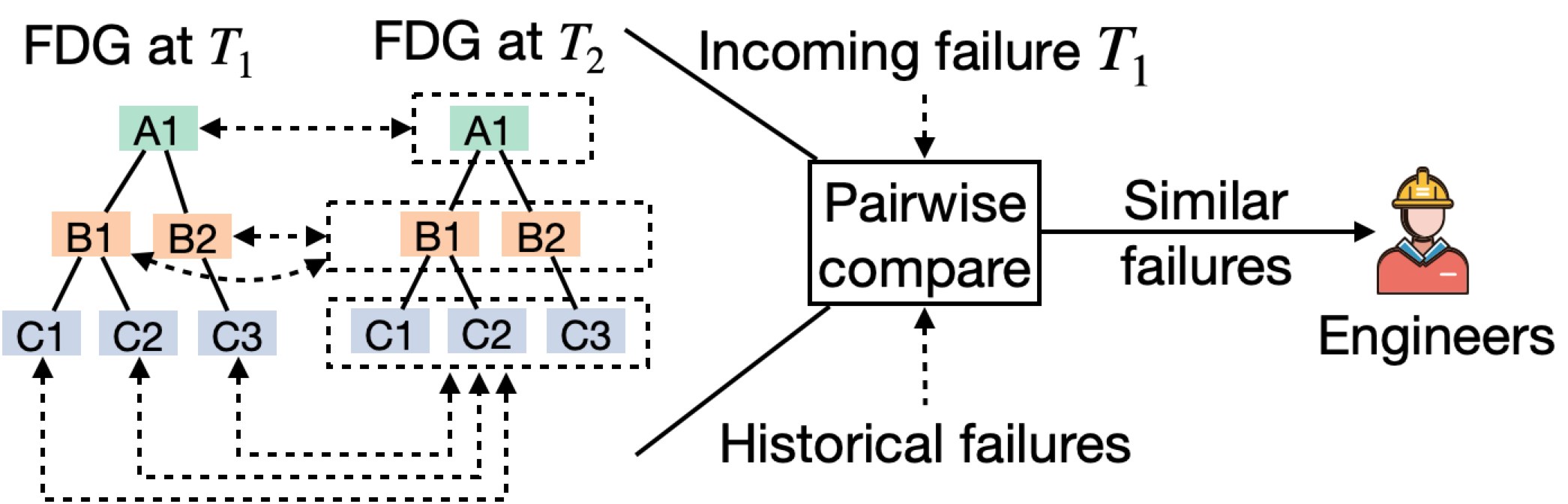

Therefore, we propose a pairwise failure similarity function based on the aggregated features extracted by the DejaVu model.

Compared with raw metrics, the extracted features have much lower dimensionality and contain little of the useless information that the DejaVu model ignores.

However, computing failure similarity is not trivial due to the generalizability of DejaVu.

For example, suppose that the features are

To solve this problem, we calculate similarities based on failure classes rather than single failure units.

As shown in \cref{fig:local-interpretation}, for each failure unit of an incoming failure

For an incoming failure, we calculate its similarity to each historical failure and recommend the top-k most similar ones to engineers. We believe that our model learns to localize the root causes from these similar historical failures. Furthermore, engineers can directly refer to the failure tickets of these historical failures during diagnosis and mitigation. Note that, due to imperfect similarity calculation, the most similar historical failures sometimes have failure classes different from the localization result. We discard such historical failures.
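The recommendation step described above can be sketched as follows, under several assumptions not fixed by this text: each failure carries one aggregated feature vector per failure class, similarity is the cosine similarity of the vectors for the incoming failure's localized class, and historical failures whose ground-truth class differs from that localization result are discarded before taking the top k. `FailureRecord` and `top_k_similar` are hypothetical names; see `DejaVu/explanability/similar_faults.py` for the actual implementation.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass
class FailureRecord:
    """Hypothetical container, not a class from the DejaVu code base."""
    fault_id: str
    failure_class: str  # ground truth (historical) or localization result (incoming)
    class_features: Dict[str, Sequence[float]]  # assumed: one aggregated vector per class


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def top_k_similar(
    incoming: FailureRecord, history: List[FailureRecord], k: int = 3
) -> List[Tuple[str, float]]:
    """Return (fault_id, similarity) for the k most similar historical failures."""
    target = incoming.failure_class
    query = incoming.class_features[target]
    scored = []
    for past in history:
        # Discard historical failures whose class differs from the localization
        # result, or that lack features for that class.
        if past.failure_class != target or target not in past.class_features:
            continue
        scored.append((past.fault_id, cosine_similarity(query, past.class_features[target])))
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]
```

Filtering before ranking returns the same list as ranking all historical failures and then dropping the mismatched ones; comparing only the localized class's features is one simple way to compute similarity per failure class rather than per failure unit.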