Hateful Meme Detection through Context-Sensitive Prompting and Fine-Grained Labeling

This repository contains the code and datasets used in our study.

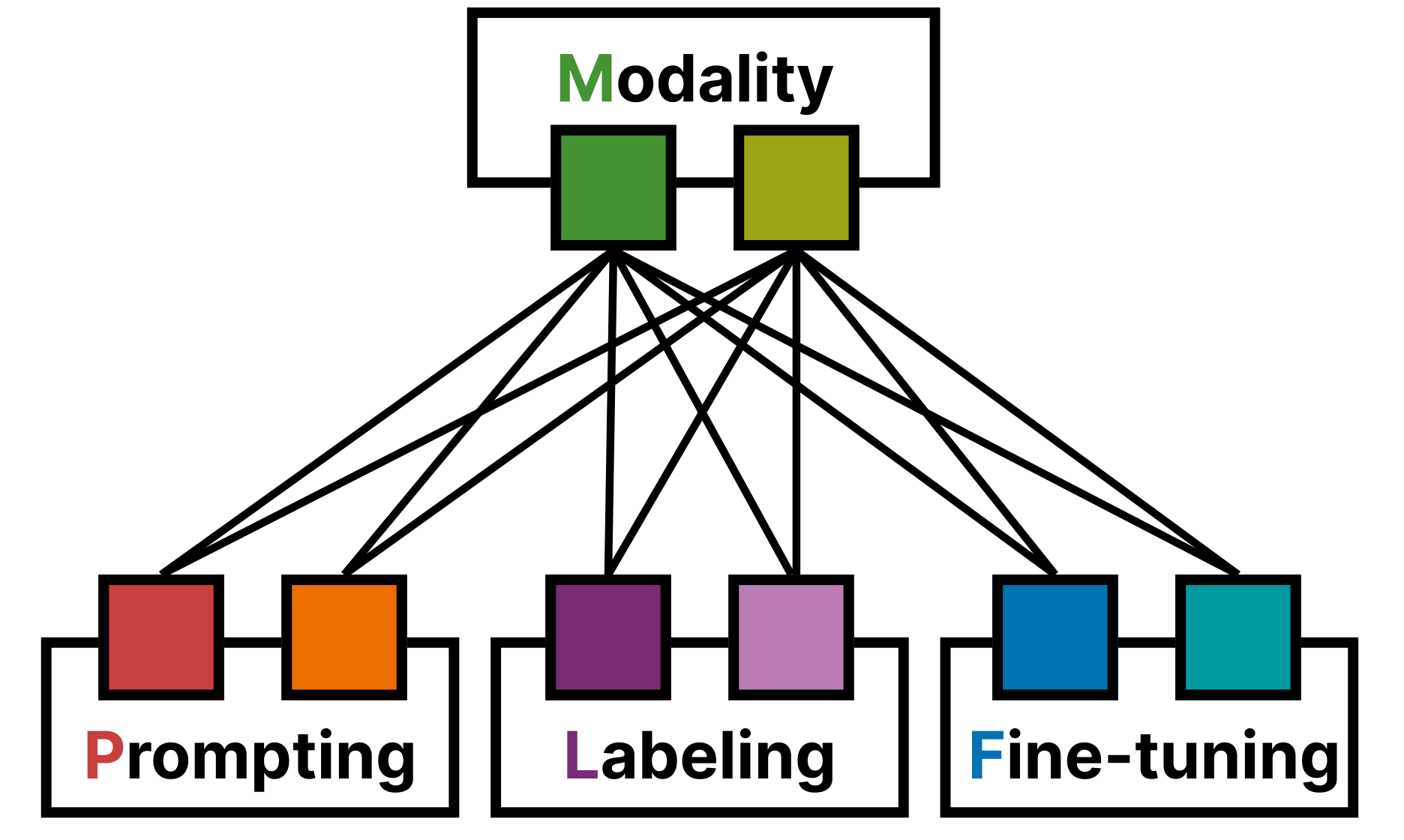

Figure 1. The conceptual framework

Publication: AAAI 2025 (Student Abstract, Oral)

Authors: Rongxin Ouyang

Link to Paper:

- AAAI Proceedings: [TBD] (main)

- ArXiv: 2411.10480 (main + supplementary information)

Link to Tutorial: For social scientists who want to annotate images using large language models, we provide a simplified tutorial tested on Google Colab's free resources:

Due to the size and copyright restrictions of the original dataset, please use the provided links to access the dataset for our study.

We thank all contributors of the prior models used in our study:

- Sanh, V. (2019). DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter. arXiv preprint arXiv:1910.01108. [Model Card] [Fine-tuning Guide]

- Chen, Z., Wu, J., Wang, W., Su, W., Chen, G., Xing, S., ... & Dai, J. (2024). InternVL: Scaling up vision foundation models and aligning for generic visual-linguistic tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 24185-24198). [Model Card] [Fine-tuning Guide]

- OpenAI (2023). GPT-4 Technical Report. (as a teacher)

./dataset/

- ./dataset/raw/hateful_memes_expanded/: Meta Hateful Memes Meta Data

- ./dataset/raw/hateful_memes_expanded/img/: Meta Hateful Memes Images

- ...
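Once the metadata and images are in place, a loader along the following lines may be a convenient starting point. This is only a sketch under stated assumptions: it assumes the metadata is stored as JSON Lines (one JSON object per line) and that each record carries a relative image path under an `img` key, which should be checked against the downloaded files. The helper names `load_meme_metadata` and `resolve_image_path` are hypothetical and are not part of this repository.

```python
import json
from pathlib import Path


def load_meme_metadata(jsonl_path):
    """Read a JSON Lines file into a list of dicts, skipping blank lines."""
    records = []
    with Path(jsonl_path).open(encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if line:
                records.append(json.loads(line))
    return records


def resolve_image_path(record, root):
    """Join the dataset root with the record's relative image path.

    Assumption: the relative path is stored under the "img" key.
    """
    return Path(root) / record["img"]
```

With a metadata file at hand, `load_meme_metadata(path)` returns one dict per meme, and `resolve_image_path(record, root)` gives the location of the matching image relative to the dataset root you pass in.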

./process/

- ./process/internvl_finetuned/: Finetuned InternVL models

- ...

./script/

- ./script/1.finetune.distilbert.sample.ipynb: Finetuning DistilBERT (unimodal)

- ./script/2.finetune.internvl.sample.sh: Finetuning InternVL 2.0 8B (multi-modal)

- ./script/3.evaluation.batch.py: Evaluations of all models

- ...
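For readers who want to sanity-check predictions before running the full evaluation script, the sketch below computes common binary classification metrics in plain Python. It is not the logic of `3.evaluation.batch.py`; the function name `binary_metrics` is hypothetical, and the label convention (1 = hateful, 0 = not hateful) is an assumption.

```python
def binary_metrics(y_true, y_pred):
    """Return accuracy, precision, recall, and F1 for 0/1 labels.

    Assumption: 1 marks the positive (hateful) class, 0 the negative class.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    # Fall back to 0.0 when a denominator is zero (no predicted or no actual positives).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0

    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

Calling `binary_metrics(gold_labels, predicted_labels)` on two equal-length lists of 0/1 values returns a dict with the four scores, which makes it easy to compare the unimodal and multi-modal models on the same split.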

This work was supported by the Singapore Ministry of Education AcRF TIER 3 Grant (MOE-MOET32022-0001). We gratefully acknowledge invaluable comments and discussions with Shaz Furniturewala and Jingwei Gao.

- If you have any questions, feel free to reach out to Rongxin (rongxin$u.nus.edu). 😄

TBD

MIT License