Test data, Matlab code, data sets and user manuals

This paper has been published in Applied Sciences: https://www.mdpi.com/2076-3417/9/18/3900. If you find it useful, please cite it accordingly.

IMPORTANT

Data and programs: everything is in Matlab format.

Clear all variables from the workspace and close all figure windows:

clear all

close all

Load the file randenData.mat, which contains the data from Randen's paper as three cell arrays:

load randenData

whos

| Name | Size | Bytes | Class | Attributes |
|---|---|---|---|---|
| dataRanden | 1x9 | 9438192 | cell | |
| maskRanden | 1x9 | 9438192 | cell | |
| trainRanden | 1x9 | 40371184 | cell | |

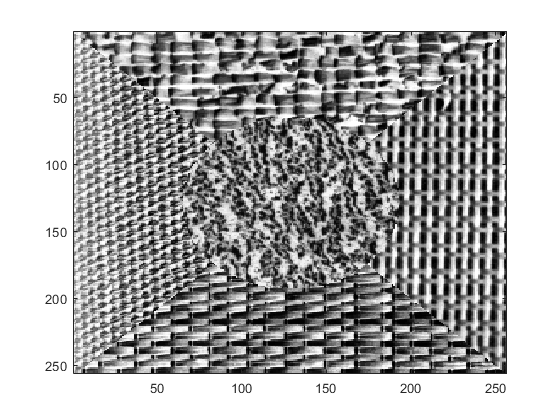

imagesc(dataRanden{1})

colormap gray

This is just one of the composite images with different textures. If needed, the whole set, including the training data (not in Matlab format), is available at Trygve Randen's webpage:

http://www.ux.uis.no/~tranden/

The corresponding mask, which assigns a label to each texture region, is displayed with:

imagesc(maskRanden{1})

colormap jet

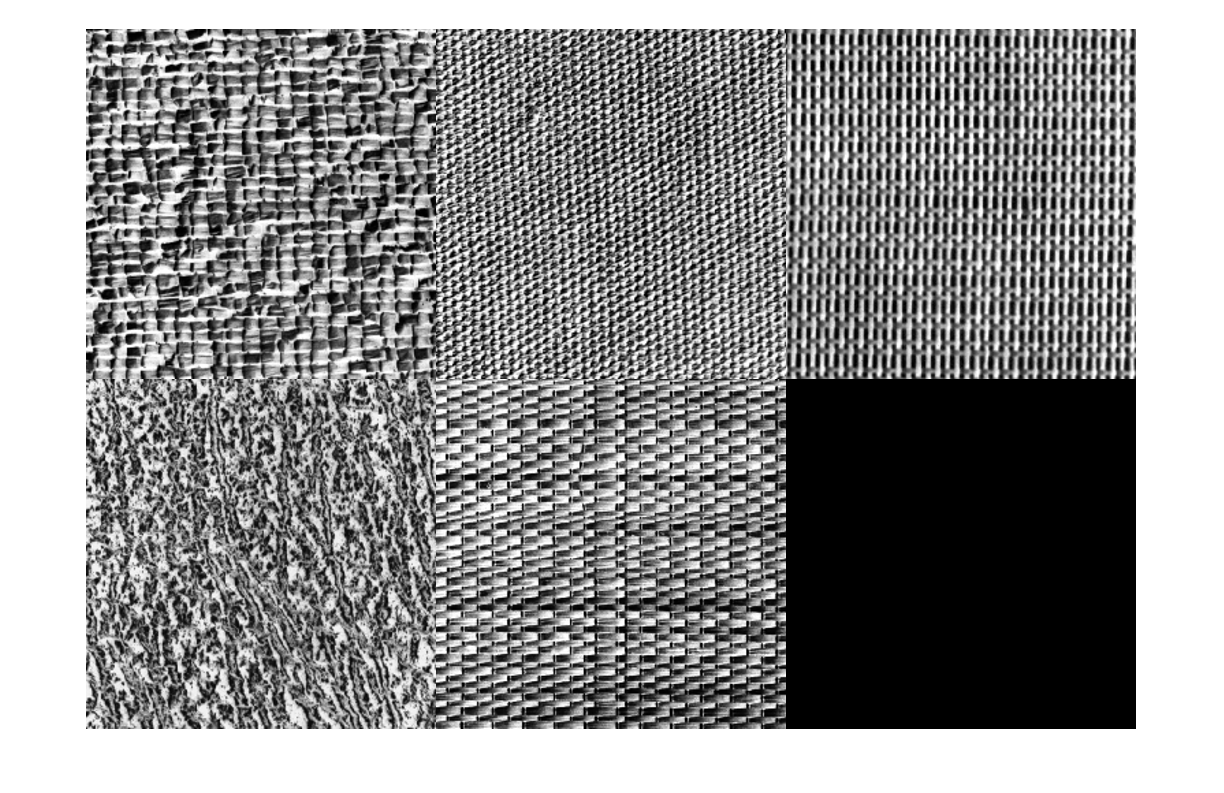

The training data for this composite can be displayed as a montage:

montage(mat2gray(trainRanden{1}))

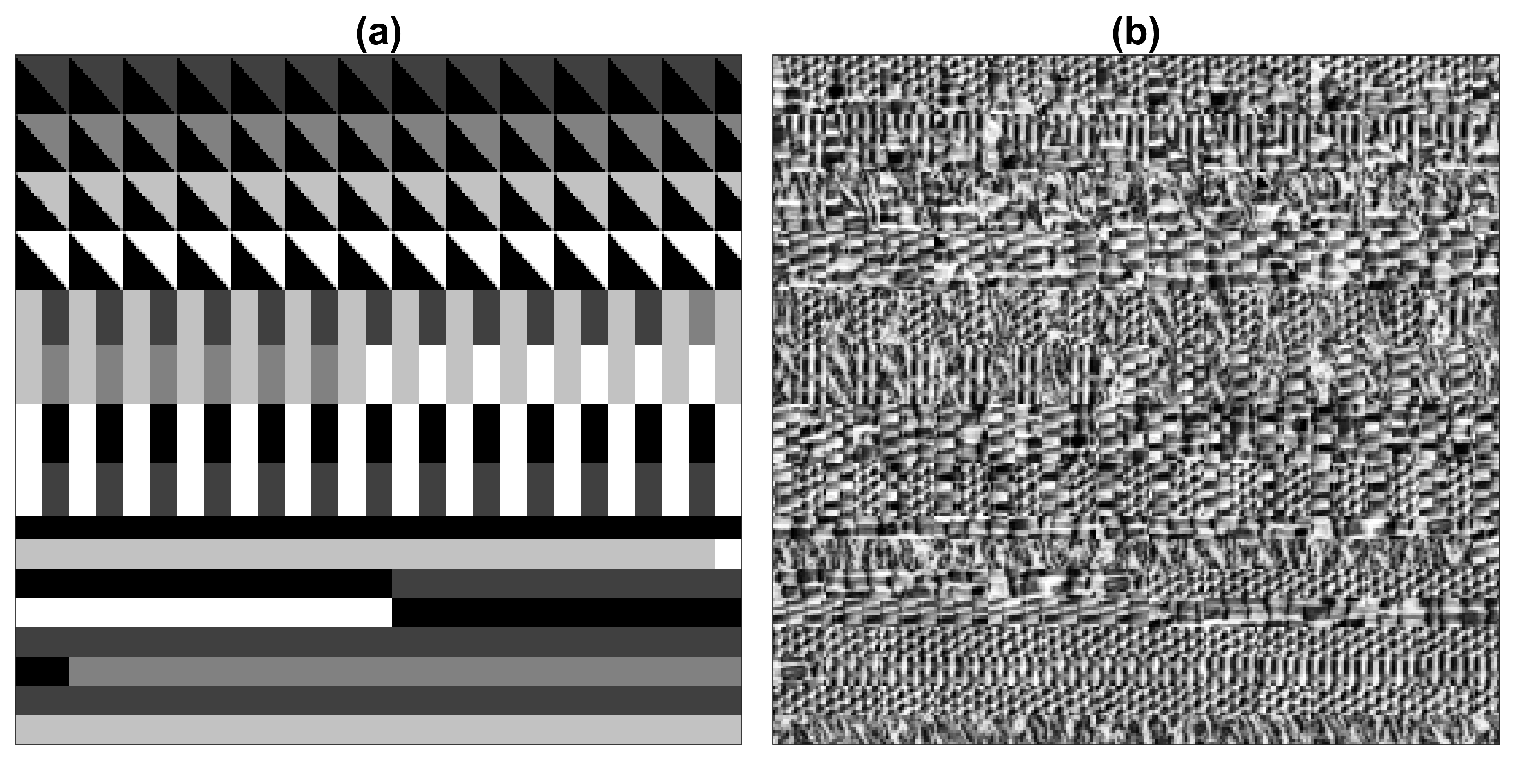

To generate the training data, in 32x32 patches, that will be used to train the U-Nets, you can use either of the following files:

prepareTrainingLabelsRanden.m

prepareTrainingLabelsRanden_HorVerDiag.m

The first one only prepares patches with two textures in a vertical arrangement, whilst the second arranges the two textures in diagonal, vertical and horizontal arrangements. You will need to change the lines where the folders are defined so that these training pairs of textures and labels are saved in the correct locations on your computer. The patches look like this:
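As a rough illustration of what one training pair contains, the following hypothetical sketch composes a 32x32 patch from two textures and builds the matching label image for the vertical, horizontal and diagonal arrangements described above. It is not the code of prepareTrainingLabelsRanden.m or prepareTrainingLabelsRanden_HorVerDiag.m. Random noise stands in for the Randen textures. The variable names, the label values 1 and 2, and the reading of "vertical" as a left/right split are all assumptions.

```matlab
% Hypothetical sketch: one 32x32 training patch plus its label image.
% Random noise stands in for two Randen textures (assumption); in practice
% these would presumably be 32x32 crops of two different training textures.
patchSize   = 32;
textureA    = rand(patchSize);          % stand-in for texture 1
textureB    = 0.5 * rand(patchSize);    % stand-in for texture 2
arrangement = 'diagonal';               % 'vertical', 'horizontal' or 'diagonal'

% Column and row index of every pixel (cols(i,j) = j, rows(i,j) = i)
[cols, rows] = meshgrid(1:patchSize, 1:patchSize);

% Logical mask of the pixels taken from texture 2
switch arrangement
    case 'vertical'      % assumed: vertical boundary, left/right halves
        maskB = cols > patchSize / 2;
    case 'horizontal'    % horizontal boundary, top/bottom halves
        maskB = rows > patchSize / 2;
    case 'diagonal'      % texture 2 strictly below the main diagonal
        maskB = rows > cols;
    otherwise
        error('Unknown arrangement: %s', arrangement);
end

% Compose the patch and its label image (1 = texture 1, 2 = texture 2)
trainPatch = textureA;
trainPatch(maskB) = textureB(maskB);
labels = 1 + double(maskB);
```

Changing `arrangement` gives the other two layouts; `imagesc(trainPatch)` and `imagesc(labels)` display the pair in the same way as the full composites.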

Finally, to train the networks and compare the results, run the file:

segmentationTextureUnet.m

This file loops over different training options and network configurations, so it takes a long time to run, especially if you do not have a GPU enabled.
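The exact metrics used to compare configurations are those in segmentationTextureUnet.m and the paper. As a hedged sketch, one simple way to score a segmentation against a ground-truth mask such as maskRanden{k} is pixel accuracy, optionally broken down per texture. The small arrays are made up for illustration, and the sketch assumes the predicted labels use the same numbering as the mask. If the network output is categorical (as returned by `semanticseg`), it would first need converting to those numeric labels.

```matlab
% Hypothetical sketch: pixel accuracy of a segmentation against a
% ground-truth label image. Small synthetic arrays keep it self-contained.
groundTruth  = [1 1 2 2; 1 1 2 2; 3 3 4 4; 3 3 4 4];
segmentation = [1 1 2 2; 1 2 2 2; 3 3 4 4; 3 3 4 3];

% Overall fraction of pixels whose predicted label matches the ground truth
correct  = (segmentation == groundTruth);
accuracy = mean(correct(:));
fprintf('Pixel accuracy: %.2f%%\n', 100 * accuracy);   % prints "Pixel accuracy: 87.50%"

% Per-texture accuracy: fraction of each ground-truth region labelled correctly
classes  = unique(groundTruth(:))';
perClass = zeros(size(classes));
for i = 1:numel(classes)
    inClass = (groundTruth == classes(i));
    perClass(i) = mean(segmentation(inClass) == classes(i));
end
```

The per-texture breakdown helps show whether errors concentrate in one texture or along the boundaries between textures.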

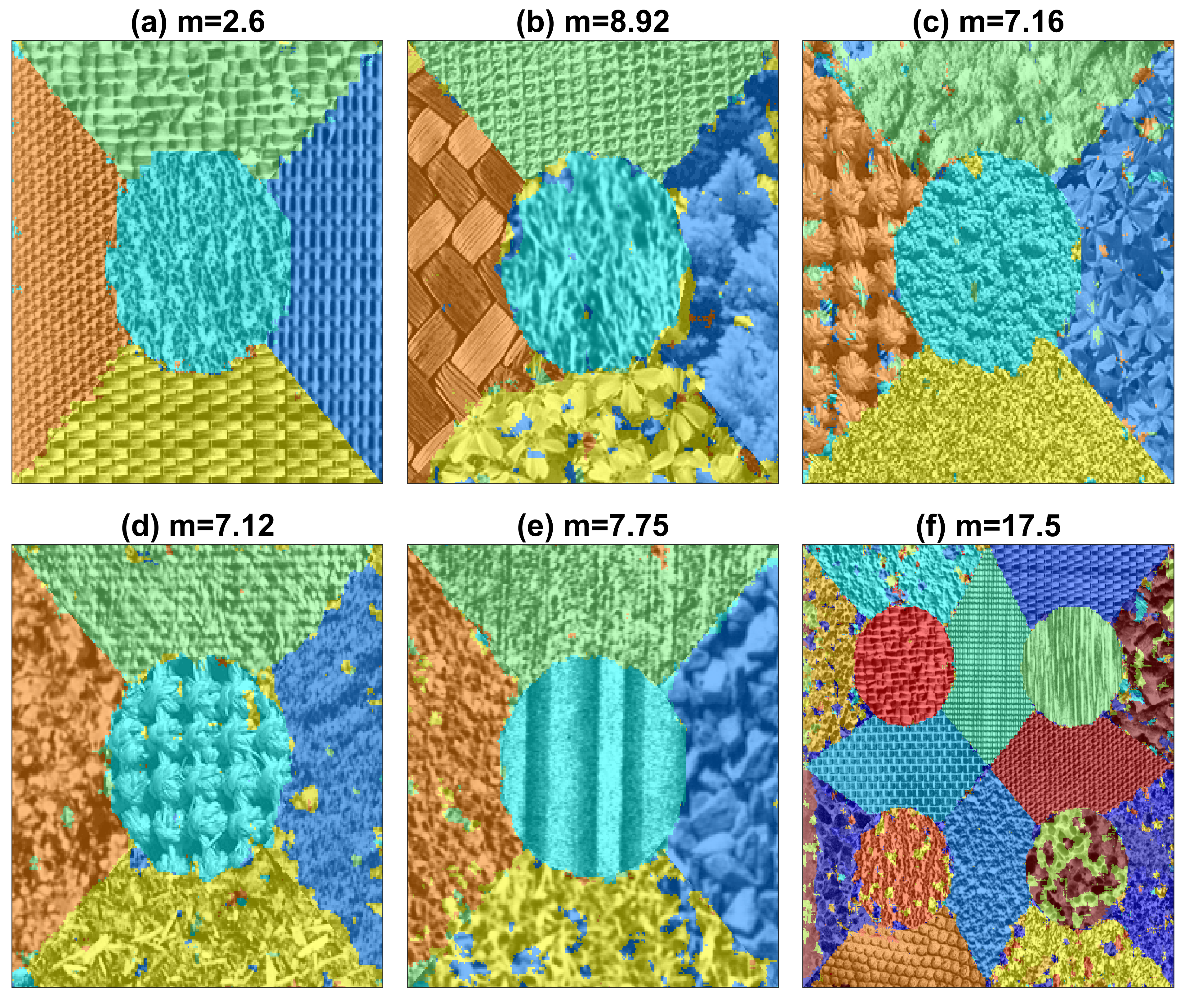

Current results are shown below.

More details are described in the paper.