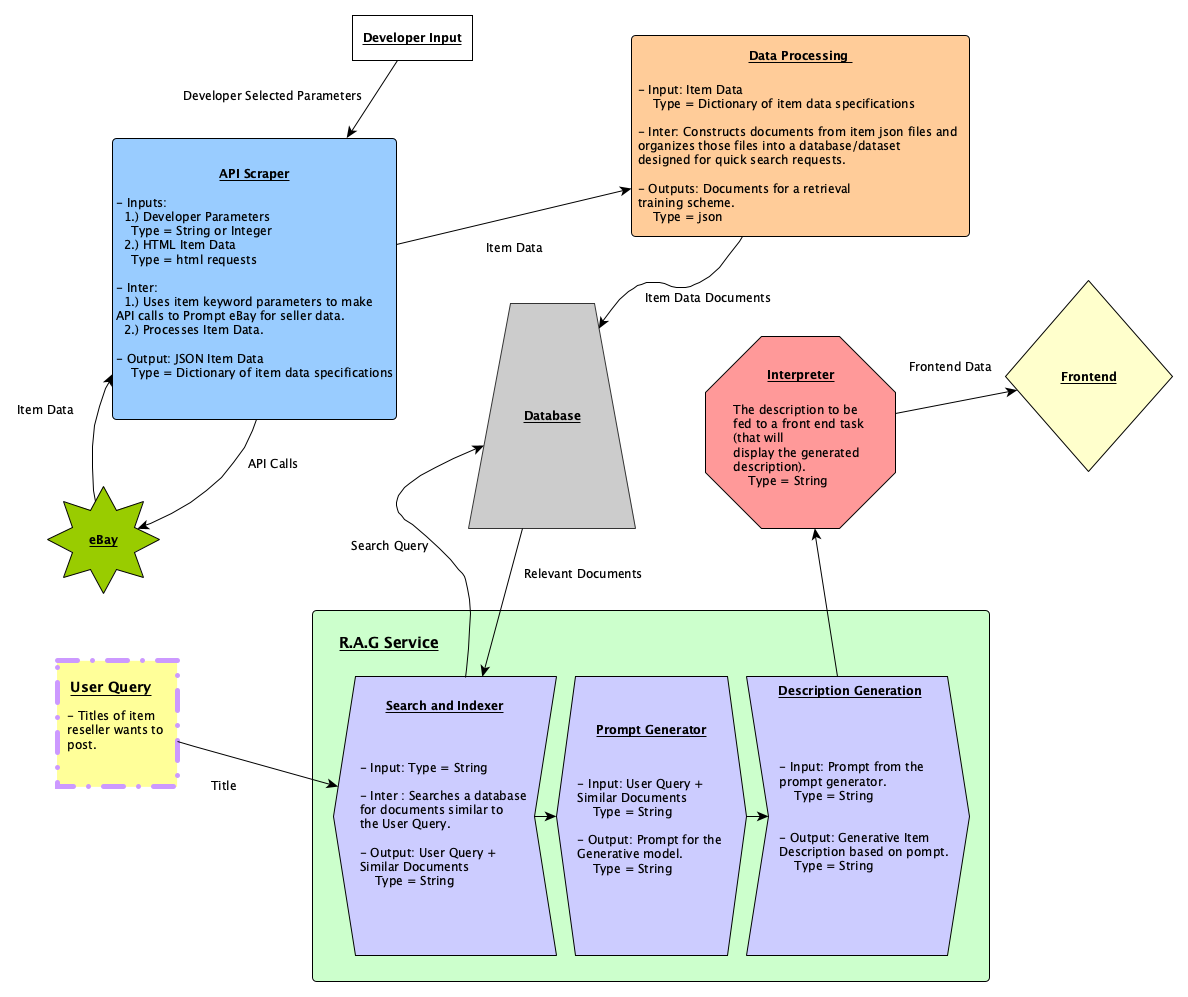

eAs is a tool that generates aggregated item pricing and descriptions from nothing more than an item's name. It uses ColBERT and GPT-2 for Retrieval-Augmented Generation (RAG), open-source ecommerce item data for knowledge base acquisition, and Docker for microservice orchestration.

This project aims to streamline the listing process by providing high-quality, autogenerated item pricing and descriptions using a RAG pipeline. This not only saves time for sellers but also ensures that listings are detailed, appropriately priced, and appealing to potential buyers.

I was unable to find ecommerce item datasets with listings diverse enough, and large enough, for meaningful use. Because of this, I opted to collect as much useful item data as possible in a single category: fashion/clothing. As a result, eAs can currently only recommend information for fashion and clothing items.

- Developing the Knowledge Base:
  - Data Collection: Knowledge base developed with open-source ecommerce datasets, for example `TrainingDataPro/asos-e-commerce-dataset`.
  - Data Organization: Organized the knowledge base into a collection of documents (strings) for ColBERT's Indexer. (See the `ecomAssistant` directory containing the `DataCollator` class for details.)
  - Data Indexing: Used ColBERT's Indexer to process and index the collection, making it searchable.
    - This was done on Google Colab, since indexing requires GPU support. See the `ColBERT_eAS_Indexing.ipynb` notebook for specifics on the indexing process.
    - The index files can be saved and used later with the Searcher in a dedicated retrieval script.

- Executing the Retrieval Query:
  - Query Processing: Once the collection is indexed, ColBERT's Searcher can take a query and compare it to the indexed documents to find the k best matches.
  - User Query Input: The user inputs a descriptive title for the item they wish to sell, which the Searcher uses to find relevant documents.

- Aggregating Price Data:
  - Document Retrieval: ColBERT retrieves documents based on the text query (the item's title), providing information about the k most similar items scraped from eBay.
  - Price Calculation: Extract the price attribute from each of the k documents, and calculate an aggregated price value to suggest to the user for their product.
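The three steps above can be illustrated end to end with a minimal, dependency-free sketch. It is not eAs's actual code: the `ITEMS` records and their fields, the helper names (`to_document`, `search`, `extract_price`, `suggest_price`), and the `price: <value>` document format are hypothetical. A simple token-overlap score stands in for ColBERT's Searcher (which instead matches each query token against its best-matching document token embedding), and the median is an assumed aggregate, since this README does not state which statistic eAs uses.

```python
import re
from statistics import median

# Hypothetical item records; field names and values are illustrative only.
ITEMS = [
    {"title": "Black leather biker jacket", "price": 120.0},
    {"title": "Brown leather bomber jacket", "price": 95.0},
    {"title": "Denim trucker jacket", "price": 60.0},
    {"title": "White cotton t-shirt", "price": 15.0},
]

PRICE_RE = re.compile(r"price: (\d+(?:\.\d+)?)")


def to_document(item: dict) -> str:
    """Flatten one record into a plain string, as in the Data Organization step."""
    return f"{item['title']} | price: {item['price']:.2f}"


def tokenize(text: str) -> set[str]:
    """Lowercase alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(query: str, documents: list[str], k: int) -> list[int]:
    """Indices of up to k documents ranked by query-token overlap.

    Assumed stand-in for ColBERT's Searcher, which compares contextual
    token embeddings rather than raw token sets.
    """
    query_tokens = tokenize(query)
    scores = [len(query_tokens & tokenize(doc)) for doc in documents]
    # sorted() stays stable with reverse=True, so ties keep collection order.
    ranked = sorted(range(len(documents)), key=lambda i: scores[i], reverse=True)
    # Drop documents that share no tokens with the query, then keep the top k.
    return [i for i in ranked if scores[i] > 0][:k]


def extract_price(document: str) -> float:
    """Read the price attribute back out of a retrieved document string."""
    match = PRICE_RE.search(document)
    if match is None:
        raise ValueError(f"no price found in {document!r}")
    return float(match.group(1))


def suggest_price(query: str, documents: list[str], k: int = 3) -> float:
    """Aggregate the prices of the k best matches (median is an assumed choice)."""
    hits = search(query, documents, k)
    if not hits:
        raise ValueError(f"no documents match {query!r}")
    return median([extract_price(documents[i]) for i in hits])


if __name__ == "__main__":
    collection = [to_document(item) for item in ITEMS]
    print(suggest_price("leather jacket", collection, k=3))
```

Running the script prints `95.0`: the query matches the three jackets (120, 95, and 60) and the median of those prices is returned. A median suits listing prices because a single unusually expensive or cheap match shifts it much less than it shifts the mean.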

Instructions for a simple local setup and run are given below. If you would like details on how to set up virtual environments for testing, that information is available in the 'RAG' and 'DataScraper' directories.

- Download and install Docker Desktop.

- Install the Node.js http-server package globally for local hosting: `npm install http-server -g`

- Create a directory for this program, and clone this repo into it: `git clone https://github.com/rfeinberg3/ecomAssistant.git`, then `cd ecomAssistant`

- Initialize the API retrieval server (give this a minute to load): `docker compose up --detach`

- Serve the frontend with the Node.js http-server module: `cd frontend`, then `npx http-server --cors --port 8080`

- Now you can open the link http-server prints (http://localhost:8080 with this port) to use the web app!

For details on how a more diverse dataset could be created with data scraping, please refer to my datascraper repo. Setup is super easy!

- Build a Knowledge base of item data.

- Use Selenium Chrome Drivers to extract item descriptions buried in html.

- Research and integrate useful ecommerce item data dataset.

- Indexing and Search Scripts (Retrieval part of RAG).

- Research and test retrieval model to integrate into this program.

- Index on Colab (GPU support needed).

- Create a CPU-functional search script.

- Use the Hugging Face ColBERT repo to create in-memory model calls.

- Create microservices with Docker.

- Orchestrate containers with docker compose.

- Full Stack Paradigm.

- Write frontend with HTML, CSS, and JavaScript.

- Refactor retrieval into an API server with Flask.

- Set up networking to allow the frontend to make API calls to the backend (locally for now).

- Set up a mock frontend (Firebase hosting).

- Convert the `Dataset` directory into a dedicated database (PostgreSQL).

- Connect the db to the retrieval app to create an in-memory dataset for search.

- Containerize psql db

- Add real ecommerce dataset to db

- Research, test, and integrate a generative model to use with this program for item description generation.

- Refactor all code into classes, with abstract base classes where applicable.

See the open issues for a full list of proposed features (and known issues).

Contributions are what make the open-source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

If you have a suggestion that would make this better, please fork the repo and create a pull request. You can also simply open an issue with the tag "enhancement". Don't forget to give the project a star! Thanks again!

- Fork the Project

- Create your Feature Branch (`git checkout -b feature/AmazingFeature`)

- Commit your Changes (`git commit -m 'Add some AmazingFeature'`)

- Push to the Branch (`git push origin feature/AmazingFeature`)

- Open a Pull Request

Distributed under the Apache-2.0 License. See LICENSE.txt for more information.

Ryan Feinberg - LinkedIn - rfeinberg3@gmail.com