This repository contains the source code and training sets for the following paper:

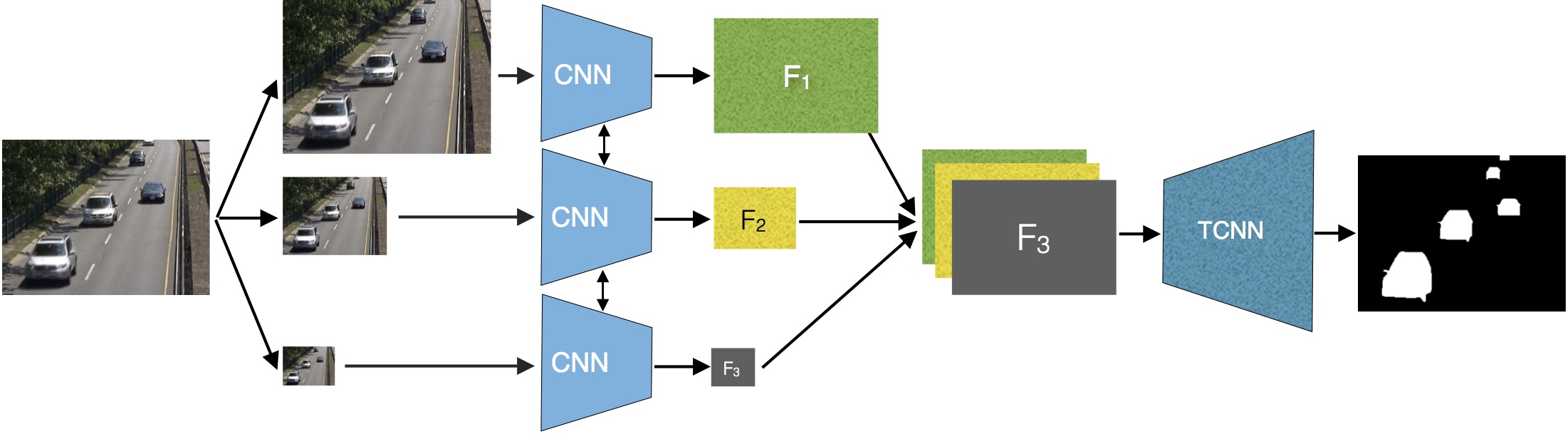

"Foreground Segmentation Using a Triplet Convolutional Neural Network for Multiscale Feature Encoding" by Long Ang LIM and Hacer YALIM KELES

Paper link: https://arxiv.org/abs/1801.02225

If you find FgSegNet useful in your research, please consider citing:

    @article{lim2018foreground,
      title={Foreground Segmentation Using a Triplet Convolutional Neural Network for Multiscale Feature Encoding},
      author={Lim, Long Ang and Keles, Hacer Yalim},
      journal={arXiv preprint arXiv:1801.02225},
      year={2018}
    }

This work was implemented with the following frameworks:

- Python 3.6.3

- Keras 2.0.6

- Tensorflow-gpu 1.1.0

Easy to train! Just a SINGLE click, gooo!!!

Clone this repo: `git clone https://github.com/lim-anggun/FgSegNet.git`

Modify the following files in your installed directories with the files in the `utils` dir:

- `<Your Keras DIR>\layers\convolutional.py`
- `<Your Keras DIR>\backend\tensorflow_backend.py`
- `<Your Keras DIR>\keras\losses.py`
- `<Your Keras DIR>\metrics.py`
- `<Your PYTHON 3.6>\site-packages\skimage\transform\pyramids.py`
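To find `<Your Keras DIR>` and the scikit-image install path before copying the files, a small helper like the one below can print where each package lives. This is a sketch that uses only the standard library's `importlib.util.find_spec`; the helper name `package_dir` is hypothetical and not part of FgSegNet.

```python
import importlib.util
import os
from typing import Optional


def package_dir(name: str) -> Optional[str]:
    """Return the install directory of a top-level package, or None if it is not installed."""
    spec = importlib.util.find_spec(name)
    if spec is None or spec.origin is None:
        return None
    # spec.origin points at the package's __init__.py; its folder is the package directory.
    return os.path.dirname(spec.origin)


if __name__ == "__main__":
    # Keras files go under the "keras" folder; pyramids.py lives under skimage/transform.
    for pkg in ("keras", "skimage"):
        print(pkg, "->", package_dir(pkg))
```

Run it with the same Python 3.6 interpreter you train with, so that it reports that environment's `site-packages`.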

In `pyramids.py` (scikit-image), replace `out_rows = math.ceil(rows / float(downscale))` and `out_cols = math.ceil(cols / float(downscale))` with `out_rows = math.floor(rows / float(downscale))` and `out_cols = math.floor(cols / float(downscale))`.
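Switching from `ceil` to `floor` only matters when a dimension is odd at some pyramid level. The sketch below compares both roundings for a hypothetical three-level pyramid (`pyramid_shapes` and `levels` are illustrative names, not FgSegNet code). One plausible motivation, which the README does not state, is that stride-2 layers with `'valid'` padding, such as Keras' `MaxPooling2D(pool_size=2)`, also round down, so floored sizes stay aligned with the network's feature maps.

```python
import math


def pyramid_shapes(rows, cols, downscale=2, levels=3, rounding=math.floor):
    """Return (rows, cols) for each pyramid level, rounding every division with `rounding`."""
    shapes = [(rows, cols)]
    for _ in range(levels - 1):
        rows = int(rounding(rows / float(downscale)))
        cols = int(rounding(cols / float(downscale)))
        shapes.append((rows, cols))
    return shapes


if __name__ == "__main__":
    # An odd-sized frame (241 x 321) makes the two rounding modes diverge.
    print("ceil: ", pyramid_shapes(241, 321, rounding=math.ceil))
    print("floor:", pyramid_shapes(241, 321, rounding=math.floor))
```

For 320x240 frames both modes agree over three levels, since every division is exact.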

Download the VGG16 weights from HERE and place them in an appropriate directory (e.g. the FgSegNet dir); otherwise they will be downloaded and stored in `~/.keras/models/` automatically.
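To check whether the weights are already in place, a quick lookup like this sketch searches the FgSegNet dir and Keras' default cache (`~/.keras/models`). The two file names are the ones Keras 2 uses for its ImageNet VGG16 weights (with and without the fully connected top); which one FgSegNet loads is an assumption here, so both are checked. `find_vgg16_weights` is a hypothetical helper.

```python
import os
from typing import Iterable, Optional

# Keras 2 file names for ImageNet VGG16 weights (assumption: either may be the one FgSegNet uses).
VGG16_WEIGHT_FILES = (
    "vgg16_weights_tf_dim_ordering_tf_kernels.h5",
    "vgg16_weights_tf_dim_ordering_tf_kernels_notop.h5",
)


def find_vgg16_weights(search_dirs: Iterable[str]) -> Optional[str]:
    """Return the first VGG16 weight file found in search_dirs, or None."""
    for directory in search_dirs:
        for name in VGG16_WEIGHT_FILES:
            path = os.path.join(os.path.expanduser(directory), name)
            if os.path.isfile(path):
                return path
    return None


if __name__ == "__main__":
    # Look in the current (FgSegNet) dir first, then in Keras' default cache.
    print(find_vgg16_weights([".", os.path.join("~", ".keras", "models")]))
```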

Download our train+val frames from HERE and the CDnet2014 dataset, then place them into the corresponding directories.

Example:

- `FgSegNet/`
  - `FgSegNet/`
    - `FgSegNet.py`
    - `FgSegNetModule.py`
  - `FgSegNet_dataset2014/`
    - `baseline/`
      - `highway50`, `highway200`, `pedestrians50`, `pedestrians200`, ...
    - `badWeather/`
      - `skating50`, `skating200`, ...
    - ...
  - `CDnet2014_dataset/`
    - `baseline/`
      - `highway`, `pedestrians`, ...
    - `badWeather/`
      - `skating`, ...
    - ...
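Before training, it can help to confirm that a scene's folders match the layout above. The sketch below, with hypothetical helper names, builds the expected paths for one category/scene pair. It assumes the training folders are named after the scene plus the number of training frames (50 or 200), as in `highway50` and `highway200`, and that `root` is the outer `FgSegNet/` directory.

```python
import os
from typing import Dict, List

# Number of training frames appended to scene names in FgSegNet_dataset2014 (e.g. highway50).
TRAIN_SIZES = (50, 200)


def expected_dirs(root: str, category: str, scene: str) -> Dict[str, List[str]]:
    """Paths implied by the example layout for one CDnet category/scene pair."""
    train = [os.path.join(root, "FgSegNet_dataset2014", category, scene + str(n))
             for n in TRAIN_SIZES]
    cdnet = [os.path.join(root, "CDnet2014_dataset", category, scene)]
    return {"train": train, "cdnet": cdnet}


def missing_dirs(root: str, category: str, scene: str) -> List[str]:
    """Expected directories that do not exist yet."""
    groups = expected_dirs(root, category, scene)
    return [p for paths in groups.values() for p in paths if not os.path.isdir(p)]


if __name__ == "__main__":
    # Run from the outer FgSegNet/ directory.
    print(missing_dirs(".", "baseline", "highway"))
```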

Go to the terminal (or command prompt), change into the directory containing the Python code (the inner `FgSegNet/FgSegNet/` folder), and start training by running the following commands:

- `cd FgSegNet`
- `cd FgSegNet`
- `python FgSegNet.py`

We perform two separate evaluations and report our results on two test splits (test dev and test challenge):

- We compute our results locally (on the test dev dataset).
- We upload our results to the Change Detection 2014 Challenge (on the test challenge dataset, whose ground truth is not shared with the public dataset).

(Both results are reported in our paper. Please refer to it for details.)

Local metrics are computed using CDnet Utilities. The two splits are defined as follows:

- test dev: only the range of frames that contain ground-truth labels is considered, excluding the training frames (50 or 200 frames).
- test challenge: the dataset on the server side (http://changedetection.net).
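The test dev frame selection can be sketched as follows. Each CDnet2014 scene folder ships a `temporalROI.txt` file holding the first and last frame numbers that have ground truth; this sketch assumes the training frames are given as a collection of frame numbers and simply removes them from that range. It is not the CDnet Utilities code, and the helper names are hypothetical.

```python
from pathlib import Path
from typing import Iterable, List, Tuple


def read_temporal_roi(scene_dir: str) -> Tuple[int, int]:
    """Read the (first, last) ground-truth frame numbers from a CDnet temporalROI.txt."""
    first, last = (Path(scene_dir) / "temporalROI.txt").read_text().split()[:2]
    return int(first), int(last)


def select_test_dev_frames(roi: Tuple[int, int], train_frames: Iterable[int]) -> List[int]:
    """Frame numbers inside the temporal ROI (inclusive) that were not used for training."""
    first, last = roi
    excluded = set(train_frames)
    return [i for i in range(first, last + 1) if i not in excluded]


if __name__ == "__main__":
    # Toy example: ROI covers frames 1..6 and frames 2 and 5 were used for training.
    print(select_test_dev_frames((1, 6), [2, 5]))
```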

We use 20% of the frames for training (denoted by n frames, where n ∈ [2−148]) and the remaining 80% for testing.

On another dataset, we perform two sets of experiments: first, we use 20% of the frames for training (n ∈ [3−23]) and 80% for testing; second, we use 50% for training (n ∈ [7−56]) and the remaining 50% for testing.
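A ratio-based split like the ones above can be sketched as follows. The README does not say how the n training frames are chosen, so this sketch assumes evenly spaced frames and rounds half up; `split_frames` is a hypothetical helper, not FgSegNet code.

```python
import math
from typing import List, Tuple


def split_frames(num_frames: int, train_ratio: float = 0.2) -> Tuple[List[int], List[int]]:
    """Split frame indices 0..num_frames-1 into (train, test); expects 0 < train_ratio <= 1."""
    if num_frames <= 0:
        return [], []
    n_train = max(1, math.floor(train_ratio * num_frames + 0.5))  # round half up
    # Assumption: evenly spaced training frames (step >= 1, so indices are distinct).
    step = num_frames / n_train
    train = [int(i * step) for i in range(n_train)]
    train_set = set(train)
    test = [i for i in range(num_frames) if i not in train_set]
    return train, test


if __name__ == "__main__":
    train, test = split_frames(10, 0.2)
    print(len(train), "train /", len(test), "test")
```

Passing `train_ratio=0.5` gives the 50/50 setting.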

The table below shows the overall results across 11 categories, obtained from the Change Detection 2014 Challenge.

| Methods | PWC | F-Measure | Speed (320x240, batch-size=1) on NVIDIA GTX 970 GPU |
|---|---|---|---|
| FgSegNet_M | 0.0559 | 0.9770 | 18fps |
| FgSegNet_S | 0.0461 | 0.9804 | 21fps |
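PWC and F-Measure are the standard CDnet metrics. For a single set of pixel counts they can be computed as in the sketch below: PWC is the percentage of wrongly classified pixels, 100 · (FN + FP) / (TP + FN + FP + TN), and F-Measure is the harmonic mean of precision and recall. This covers one confusion matrix only; the official CDnet Utilities also aggregate results over videos and categories, which is not reproduced here.

```python
from typing import Dict


def cdnet_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Recall, precision, F-Measure and PWC from foreground/background pixel counts."""
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f_measure = (2 * precision * recall / (precision + recall)
                 if precision + recall else 0.0)
    # PWC is a percentage: wrongly classified pixels over all pixels.
    pwc = 100.0 * (fn + fp) / (tp + fn + fp + tn)
    return {"recall": recall, "precision": precision,
            "f_measure": f_measure, "pwc": pwc}


if __name__ == "__main__":
    print(cdnet_metrics(tp=90, fp=10, fn=10, tn=890))
```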

The table below shows the overall test results across 14 video sequences.

| Methods | PWC | F-Measure |
|---|---|---|
| FgSegNet_M | 0.9431 | 0.9794 |
| FgSegNet_S | 0.8524 | 0.9831 |

💡 TODO


- add FgSegNet_S (FPM module) source code

- add more results and training frames of other datasets

Updates:

09/06/2018:

- add quantitative results on changedetection.net (CDnet 2014 dataset)

- add supporting codes

29/04/2018:

- add a Jupyter notebook and model for test prediction

27/01/2018:

- add FgSegNet_M (a triplet network) source code and training frames

lim.longang at gmail.com

Any issues/discussions are welcome.