- Environment Setup

- Warmup - Connecting to Pulsar

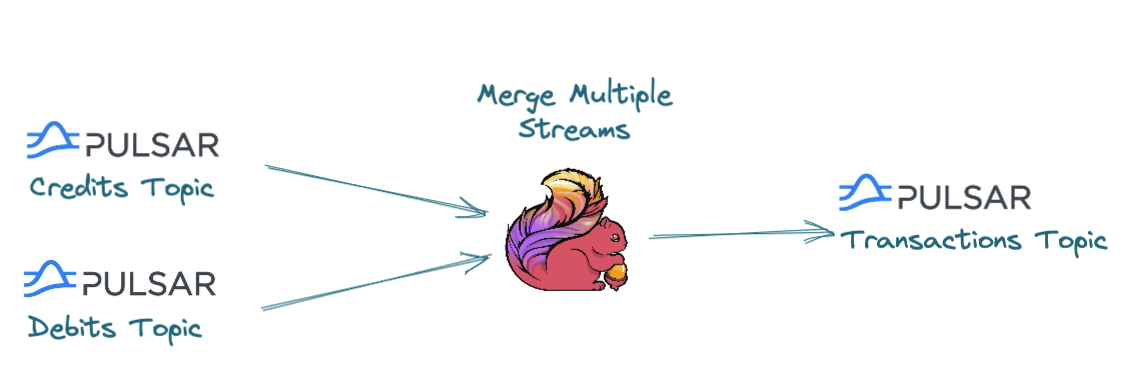

- Handling Multiple Streams

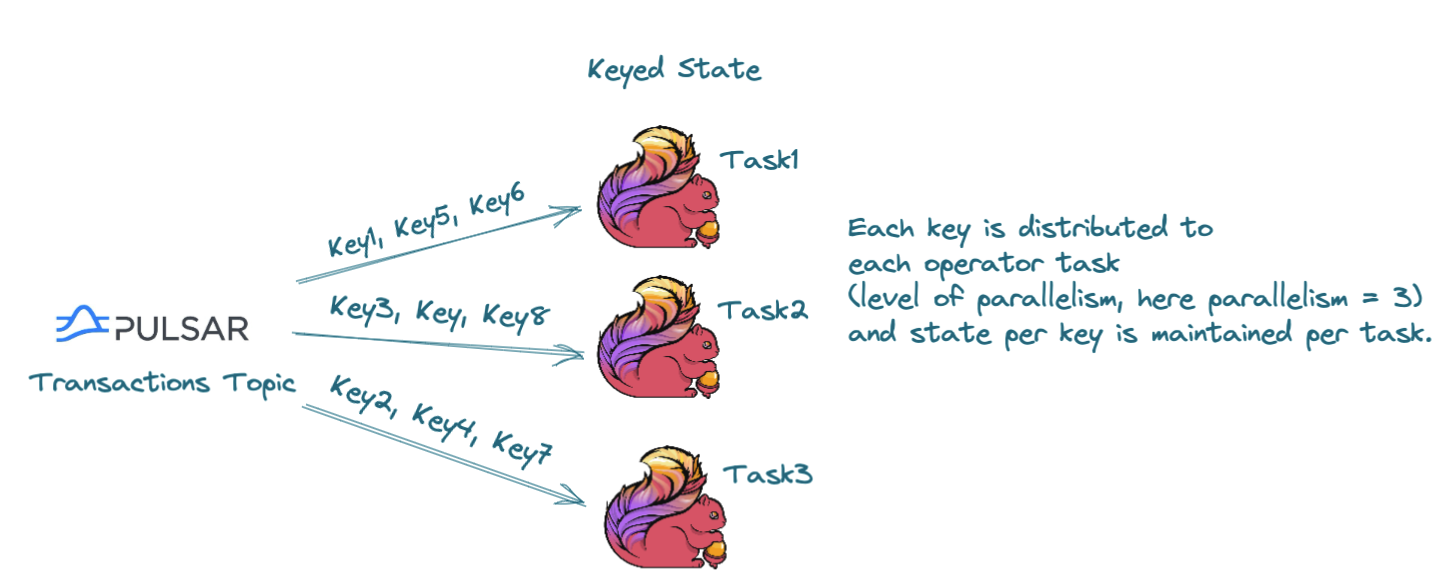

- Warmup - Keyed State

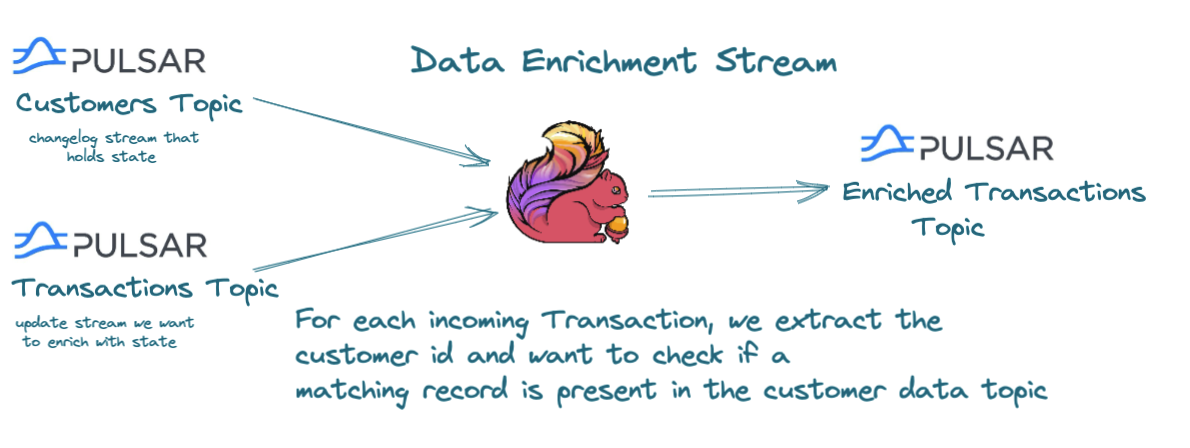

- Performing Data Enrichment and Lookups

- Buffering

- Side Outputs

- State Backends

- Checkpoints, Savepoints and Restart Strategies

- Additional Resources

To run the code samples, we need Pulsar and Flink clusters up and running. You can also run the Flink examples from within your favorite IDE, in which case you don't need a Flink cluster.

If you want to run the examples inside a Flink cluster, run `docker-compose up` to start the Pulsar and Flink clusters.

If you want to run within your IDE, you can spin up just a Pulsar cluster with `docker-compose up pulsar`.

When the cluster is up and running successfully, run `./setup.sh`.

Outcomes: How to connect to Pulsar and start consuming events. We will see how we can achieve this by using:

Use Case:

In some scenarios you might need to merge multiple streams together, for example data from two Pulsar topics.

The events on the input topics can have either the same or different schemas.

When the input events have the same schema you can use the union function, otherwise you can use the connect function.

Outcome:

We will see how to achieve this using two input transaction topics, one containing credit transactions and one containing debits, and merge these two streams into one datastream. We will see two different approaches:

- Union Function: Input datastreams need to be of the same input data type

- Connect Function: Input datastreams can be of different types
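The difference between the two approaches can be illustrated with a minimal plain-Python sketch. This is not Flink code: the `Credit` and `Debit` classes and the `union` and `connect` helpers are hypothetical stand-ins, and lists stand in for streams (a real stream interleaves events as they arrive rather than concatenating them).

```python
from dataclasses import dataclass
from itertools import chain


@dataclass(frozen=True)
class Credit:  # hypothetical event type for the credits topic
    customer_id: str
    amount: float


@dataclass(frozen=True)
class Debit:  # hypothetical event type for the debits topic
    customer_id: str
    amount: float


def union(*streams):
    """Merge streams whose elements all share the same type."""
    return list(chain(*streams))


def connect(first, second, map_first, map_second):
    """Merge two streams of different types by mapping each side
    to a common output type with its own function."""
    return [map_first(e) for e in first] + [map_second(e) for e in second]


credits = [Credit("c1", 10.0), Credit("c2", 5.0)]
more_credits = [Credit("c3", 7.5)]
debits = [Debit("c1", 4.0)]

# Same type on both inputs: a plain merge is enough.
same_type = union(credits, more_credits)

# Different types: each input gets its own mapping to a shared shape.
signed_amounts = connect(
    credits,
    debits,
    lambda c: (c.customer_id, +c.amount),
    lambda d: (d.customer_id, -d.amount),
)
```

The point of the second helper is that each input keeps its own type and its own handling logic until the moment the two are combined.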

Other options to explore:

- Joining on a window: the events need to belong to the same window and match on some join condition

- Joining on an interval: the events need to fall within the same interval, i.e. `lowerBoundInterval < timeA - timeB < upperBoundInterval`
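As a rough illustration of the interval condition above, here is a plain-Python sketch (not Flink code). The `(key, timestamp)` tuple shape and the extra requirement that both events share a key are assumptions made for the example.

```python
def within_interval(time_a, time_b, lower_bound, upper_bound):
    """Check lowerBoundInterval < timeA - timeB < upperBoundInterval."""
    return lower_bound < time_a - time_b < upper_bound


def interval_join(events_a, events_b, lower_bound, upper_bound):
    """Pair events that share a key and whose time difference is in bounds.

    Events are hypothetical (key, timestamp) tuples.
    """
    return [
        (a, b)
        for a in events_a
        for b in events_b
        if a[0] == b[0] and within_interval(a[1], b[1], lower_bound, upper_bound)
    ]


events_a = [("c1", 10), ("c2", 20)]
events_b = [("c1", 8), ("c1", 1), ("c2", 25)]

# Only ("c1", 10) and ("c1", 8) differ by less than 3 time units.
pairs = interval_join(events_a, events_b, lower_bound=-3, upper_bound=3)
```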

Outcomes:

- Understand the difference between Operator State and Keyed State

- How Flink handles state and the available State primitives

We will review an example that accumulates state per customer key and answers the question: how many transactions do we have per customer?

You can find the relevant examples here
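To make the idea of per-key state concrete, here is a minimal plain-Python sketch of the transaction count per customer. It is not Flink code: the `TransactionCounter` class is hypothetical, and a dictionary stands in for the state that Flink would scope to the current key.

```python
from collections import defaultdict


class TransactionCounter:
    """Keeps one counter per customer key, similar in spirit to keyed state."""

    def __init__(self):
        # One independent value per key; keys never see each other's state.
        self._count_by_key = defaultdict(int)

    def process(self, customer_id):
        """Handle one transaction and emit the updated count for its key."""
        self._count_by_key[customer_id] += 1
        return customer_id, self._count_by_key[customer_id]


counter = TransactionCounter()
outputs = [counter.process(key) for key in ["c1", "c2", "c1", "c1"]]
```

Each incoming event only reads and updates the value stored under its own key, which is what allows keyed state to be partitioned across parallel instances.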

Use Case:

In some scenarios we need to perform Data Enrichment and/or Data Lookups, with one topic acting as an append-only stream while the other topic is a changelog stream that keeps only the latest state for a particular key.

We will see how to implement such use cases by combining Flink's process function and Keyed State to enrich transaction events with user information.

You can find the relevant examples here.
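The enrichment pattern can be sketched in plain Python as follows. This is not Flink code: the `Enricher` class and the dictionary event shapes (`id`, `user_id`, `name`) are assumptions made for illustration.

```python
class Enricher:
    """Joins an append-only transaction stream with a user changelog."""

    def __init__(self):
        # Changelog semantics: only the latest user record per key is kept.
        self._latest_user = {}

    def on_user(self, user):
        """A newer record for the same key overwrites the older one."""
        self._latest_user[user["id"]] = user

    def on_transaction(self, txn):
        """Attach the latest known user (or None) to the transaction."""
        user = self._latest_user.get(txn["user_id"])
        return {**txn, "user": user}


enricher = Enricher()
enricher.on_user({"id": "u1", "name": "Alice"})
enricher.on_user({"id": "u1", "name": "Alice B."})  # update replaces old value

enriched = enricher.on_transaction({"user_id": "u1", "amount": 42.0})
unknown = enricher.on_transaction({"user_id": "u2", "amount": 1.0})
```

In this sketch a transaction without a matching user is emitted with `user` set to `None`; what to do with such events is a design decision for the job.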

Use Case:

This scenario is pretty similar to the previous one, but here we buffer the events when there is some missing state.

We cannot guarantee when both matching events will arrive in our system, so we might want to buffer the events until a matching one arrives.

You can find the relevant examples here.
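One way to picture the buffering logic is the plain-Python sketch below. It is not Flink code: the `BufferingEnricher` class and the event shapes are hypothetical, and in-memory dictionaries stand in for keyed state.

```python
from collections import defaultdict


class BufferingEnricher:
    """Holds transactions back until the matching user record arrives."""

    def __init__(self):
        self._user_by_id = {}
        self._buffered = defaultdict(list)  # user_id -> waiting transactions

    def on_transaction(self, txn):
        """Emit immediately if the user is known, otherwise buffer."""
        user = self._user_by_id.get(txn["user_id"])
        if user is None:
            self._buffered[txn["user_id"]].append(txn)
            return []
        return [{**txn, "user": user}]

    def on_user(self, user):
        """Store the user and flush any transactions that were waiting."""
        self._user_by_id[user["id"]] = user
        waiting = self._buffered.pop(user["id"], [])
        return [{**txn, "user": user} for txn in waiting]


job = BufferingEnricher()
early = job.on_transaction({"user_id": "u1", "amount": 5.0})   # buffered
flushed = job.on_user({"id": "u1", "name": "Alice"})           # releases it
late = job.on_transaction({"user_id": "u1", "amount": 9.0})    # emitted at once
```

A real job would also need to decide how long to keep buffered events, since a match may never arrive.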

Use Case:

In some scenarios you might want to split one stream into multiple streams (stream branches) and forward them to different downstream operators for further processing.

We will see how to implement such use cases in order to account for unhappy paths. Following our Data Enrichment example, we will see how to handle transaction events for which user information is not present (maybe due to some human error or system malfunction).

You can find the relevant examples here.
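The branching idea can be sketched in plain Python like this. It is not Flink code: the `split_by_user` function and the event shapes are hypothetical, and two lists stand in for the main output and the side output.

```python
def split_by_user(transactions, user_by_id):
    """Route each transaction to the main output or to a side output.

    Transactions with a known user are enriched and go to the main output;
    transactions without user information go to the side output unchanged.
    """
    main_output, side_output = [], []
    for txn in transactions:
        user = user_by_id.get(txn["user_id"])
        if user is None:
            side_output.append(txn)  # unhappy path: no user information
        else:
            main_output.append({**txn, "user": user})
    return main_output, side_output


users = {"u1": {"id": "u1", "name": "Alice"}}
transactions = [
    {"user_id": "u1", "amount": 5.0},
    {"user_id": "u9", "amount": 7.0},  # no matching user
]

enriched, missing_user = split_by_user(transactions, users)
```

Keeping the unmatched events on their own branch means they can be logged, retried, or sent to another topic without affecting the main flow.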

Outcomes:

- Understand Flink State backends

- Use RocksDB as the state backend to handle state that can't fit in memory

Outcomes:

- Understand how Flink's Checkpointing mechanism works

- Understand how we can combine Flink checkpoints and Restart Strategies to recover our job

- Checkpoints vs Savepoints

- How we can recover state from a previous checkpoint

You can find the relevant examples here.
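The interplay between checkpoints and restarts can be pictured with a heavily simplified plain-Python simulation. It is not Flink code and does not reflect how Flink takes distributed snapshots: the `run_job` function, the `fail_at` parameter, and the single-process replay are all assumptions made for illustration.

```python
import copy


def run_job(events, checkpoint_every, fail_at=None):
    """Count events per key, snapshotting state every few events.

    If fail_at is set, the job "crashes" once just before processing that
    offset, restores the last checkpoint, and replays from its offset.
    """
    state = {}
    checkpoint = (0, {})  # (offset to resume from, state snapshot)
    offset = 0
    restarts = 0
    already_failed = False

    while offset < len(events):
        if fail_at is not None and offset == fail_at and not already_failed:
            already_failed = True
            restarts += 1
            # Recovery: rewind the input and restore the snapshotted state.
            offset, snapshot = checkpoint
            state = copy.deepcopy(snapshot)
            continue

        key = events[offset]
        state[key] = state.get(key, 0) + 1
        offset += 1

        if offset % checkpoint_every == 0:
            checkpoint = (offset, copy.deepcopy(state))

    return state, restarts


events = ["c1", "c2", "c1", "c1", "c2"]
clean_state, clean_restarts = run_job(events, checkpoint_every=2)
recovered_state, recovered_restarts = run_job(events, checkpoint_every=2, fail_at=3)
```

Because the input position and the state are saved together, replaying from the checkpoint produces the same counts as a run without any failure.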