My first attempt at a rough ETL pipeline. Technologies include Spark, GCS, Prefect for orchestration, and Terraform.

Infrastructure: Terraform is used for 'infrastructure as code' to set up a data lake in GCS

Orchestration/Scheduling: Prefect is used to run the DAG flow as a batch process

DAG flow: The following DAG runs once an hour through Prefect's scheduler (Orion)

- gcs_pull_task: pull a copy of my pre-uploaded Fitbit data (CSV format) from GCS and save it locally so new rows can be appended

- fitbit_task: pull the last hour of data from my personal Fitbit using the Fitbit API, transform it into a pandas DataFrame, and return it

- pyspark_task: use PySpark/SQL to transform the data and extract the highest heart rate for the hour

- overwrite_master_table: append the new Fitbit data to the local CSV file

- gcs_push_task: push the updated CSV file to GCS, overwriting the old version
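
The transform and append steps above can be sketched in plain Python. This is a minimal illustration, not the code in this repo: it uses the standard library as a stand-in for PySpark/SQL and pandas, and the column names (`hour`, `max_heart_rate`, `heart_rate`), the function names, and the file layout are all assumptions.

```python
import csv
from pathlib import Path

# Hypothetical column names; the real CSV layout is not shown in this README.
FIELDS = ["hour", "max_heart_rate"]


def max_rate_for_hour(readings):
    """Return the largest heart rate in one hour of readings.

    `readings` is a list of dicts like {"time": "12:00:05", "heart_rate": 71}.
    Plain-Python stand-in for the PySpark/SQL aggregation (a MAX over the hour).
    """
    if not readings:
        return None  # nothing came back for this hour
    return max(r["heart_rate"] for r in readings)


def append_to_master(csv_path, hour, max_rate):
    """Append one row to the local CSV copy, writing a header if the file is new."""
    path = Path(csv_path)
    is_new = not path.exists()
    with path.open("a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow({"hour": hour, "max_heart_rate": max_rate})
```

In the real flow, the readings would come from fitbit_task and the CSV path would point at the file saved by gcs_pull_task, so that gcs_push_task uploads the appended version.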

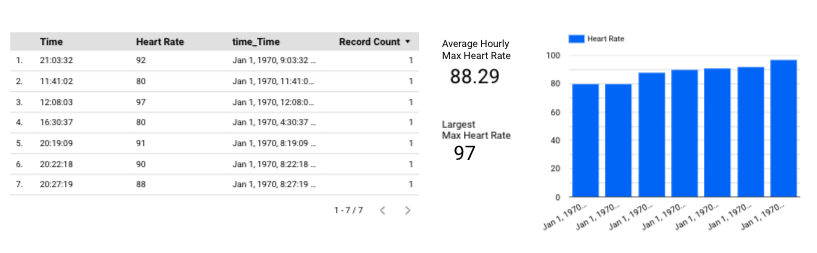

The dashboard was built using Google Data Studio. It shows the max heart rate over time, the average of the hourly max heart rates, and the current highest of the hourly max heart rates.
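
To make the three dashboard figures concrete, here is a small sketch of how they relate to the hourly rows produced by the pipeline. It is illustrative only: Data Studio computes these itself, and the function name and sample values are made up.

```python
from statistics import mean


def dashboard_metrics(hourly_max):
    """Summarize a list of hourly max heart rates (one value per pipeline run)."""
    return {
        "max_over_time": list(hourly_max),  # the series plotted over time
        "average_max": mean(hourly_max),    # average of the hourly maxima
        "max_of_max": max(hourly_max),      # highest hourly maximum so far
    }


# Made-up sample values, not real Fitbit data.
metrics = dashboard_metrics([98, 105, 91, 110])
print(metrics["average_max"], metrics["max_of_max"])
```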

I was able to install most of the requirements by following guides from the Data Engineering Zoomcamp course or online articles. Thanks to everyone who helped with their public repos and articles!

- Terraform/GCP Cloud Platform: DE zoomcamp guide

- Prefect: Prefect install documentation and Prefect beginner's guide

- Fitbit API: Fitbit API article by Stephen Hsu

- Spark/PySpark: DE zoomcamp Spark Windows/Linux/macOS download guide and PySpark download guide

- Build the GCS data lake infrastructure: `bash tf_make_bash.sh` (note: this pulls a code listed in my .gitignore file)

- Start Prefect and the Prefect UI: `prefect orion start`

- Start a Prefect agent: `prefect agent start -q test`

- Run the deployment script to initiate the batch processing: `python pt_deployment.py`

Here are a few repos and projects by others that I looked at for inspiration and help with project repo formatting -- thank you to the creators.