To understand Seq2Seq and Transformer models, start with recurrent neural networks (RNNs):

- RNN Series

- Unfolding RNNs Part I

- Unfolding RNNs Part II

- Understanding LSTMs

- The Unreasonable Effectiveness of Recurrent Neural Networks

- Recurrent neural network based language model

- Extensions of Recurrent Neural Network Language Model

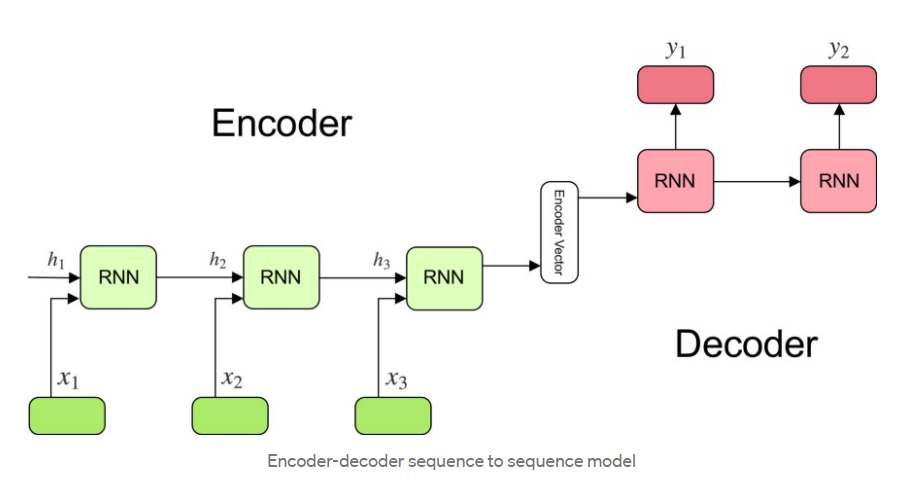

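A Sequence to Sequence (Seq2Seq) model maps an input sequence to an output sequence, where the two lengths may differ. In the classic encoder-decoder formulation, the encoder compresses the input into a fixed-length context vector, and the decoder generates the output from that vector.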

- Sequence to Sequence Learning with Neural Networks

- Learning Phrase Representations using RNN Encoder–Decoder for Statistical Machine Translation

- Neural Machine Translation by Jointly Learning to Align and Translate

- Chatbots with Seq2Seq Part 1

- Practical seq2seq Part 2

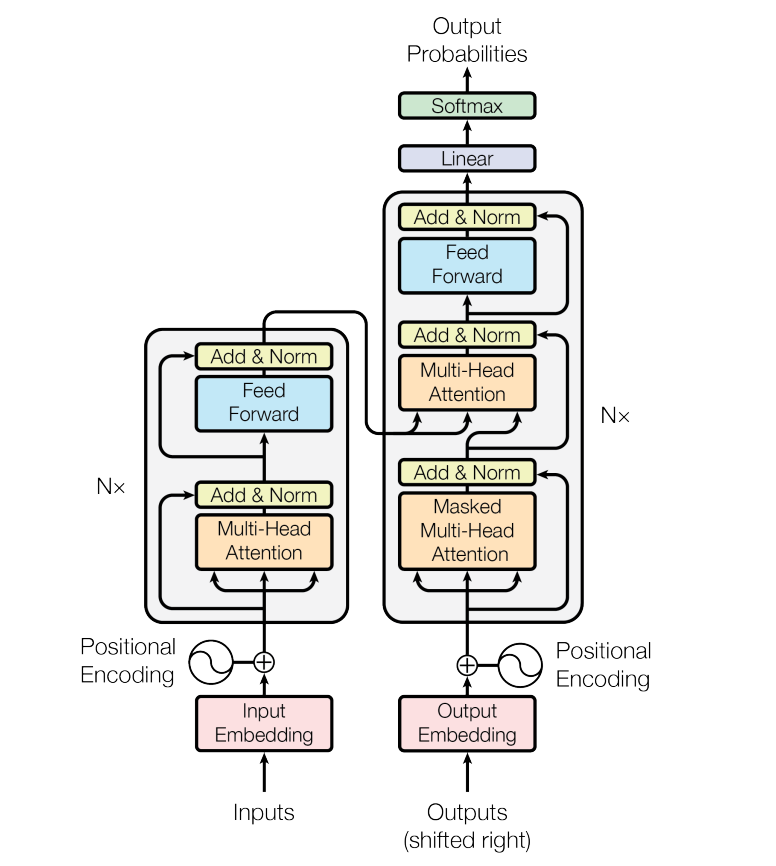
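The encoder-decoder idea behind Seq2Seq can be sketched in a few lines of plain Python. This is only an illustrative toy, not a trained model: the weights are random, and the names `HIDDEN`, `make_matrix`, `rnn_step`, `encode`, and `decode` are hypothetical helpers introduced here. It also assumes the decoder runs for a fixed number of steps, whereas a real model would typically stop when it emits an end-of-sequence token.

```python
import math
import random

HIDDEN = 4  # size of the fixed-length context vector (arbitrary choice)

def make_matrix(rows, cols, rng):
    """Random weight matrix; stands in for weights a real model would learn."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def rnn_step(w_in, w_rec, x, h):
    """One vanilla RNN update: h_new = tanh(W_in x + W_rec h)."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(w_in[i], x))
            + sum(w * hi for w, hi in zip(w_rec[i], h))
        )
        for i in range(len(h))
    ]

def encode(inputs, w_in, w_rec):
    """Fold a sequence of any length into one fixed-length context vector."""
    h = [0.0] * HIDDEN
    for x in inputs:
        h = rnn_step(w_in, w_rec, x, h)
    return h

def decode(context, w_rec, w_out, steps):
    """Unroll the decoder from the context vector, one scalar output per step."""
    h = context
    outputs = []
    for _ in range(steps):
        h = [math.tanh(sum(w * hi for w, hi in zip(row, h))) for row in w_rec]
        outputs.append(sum(w * hi for w, hi in zip(w_out, h)))
    return outputs

rng = random.Random(0)
enc_in = make_matrix(HIDDEN, 2, rng)        # input vectors have 2 features
enc_rec = make_matrix(HIDDEN, HIDDEN, rng)
dec_rec = make_matrix(HIDDEN, HIDDEN, rng)
w_out = [rng.uniform(-0.5, 0.5) for _ in range(HIDDEN)]

source = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # input sequence of length 3
context = encode(source, enc_in, enc_rec)        # always HIDDEN numbers
target = decode(context, dec_rec, w_out, steps=5)  # output sequence of length 5
```

The point of the sketch is the shape of the computation: an input of length 3 and an output of length 5 are connected only through `context`, whose size does not depend on either length.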

The Transformer is an NLP architecture for sequence-to-sequence tasks that handles long-range dependencies through self-attention rather than recurrence.

“The Transformer is the first transduction model relying entirely on self-attention to compute representations of its input and output without using sequence-aligned RNNs or convolution.”
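To make "relying entirely on self-attention" a little more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It makes a simplifying assumption that should be read loudly: queries, keys, and values are the input vectors themselves, whereas a real Transformer applies separate learned projections and uses multiple heads. The function names `softmax` and `self_attention` are illustrative, not taken from any library.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention over a list of d-dimensional vectors.

    Assumption: queries, keys, and values are all the raw inputs
    (identity projections); a real Transformer learns these projections.
    """
    d = len(x[0])
    outputs = []
    for query in x:
        # Similarity of this position to every position, scaled by sqrt(d).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in x
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of all value vectors.
        outputs.append(
            [sum(w * value[j] for w, value in zip(weights, x)) for j in range(d)]
        )
    return outputs

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(tokens)
```

Every output position is computed directly from all input positions in a single step, with no recurrent state carried along the sequence, which is why distant positions can influence each other as easily as adjacent ones.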