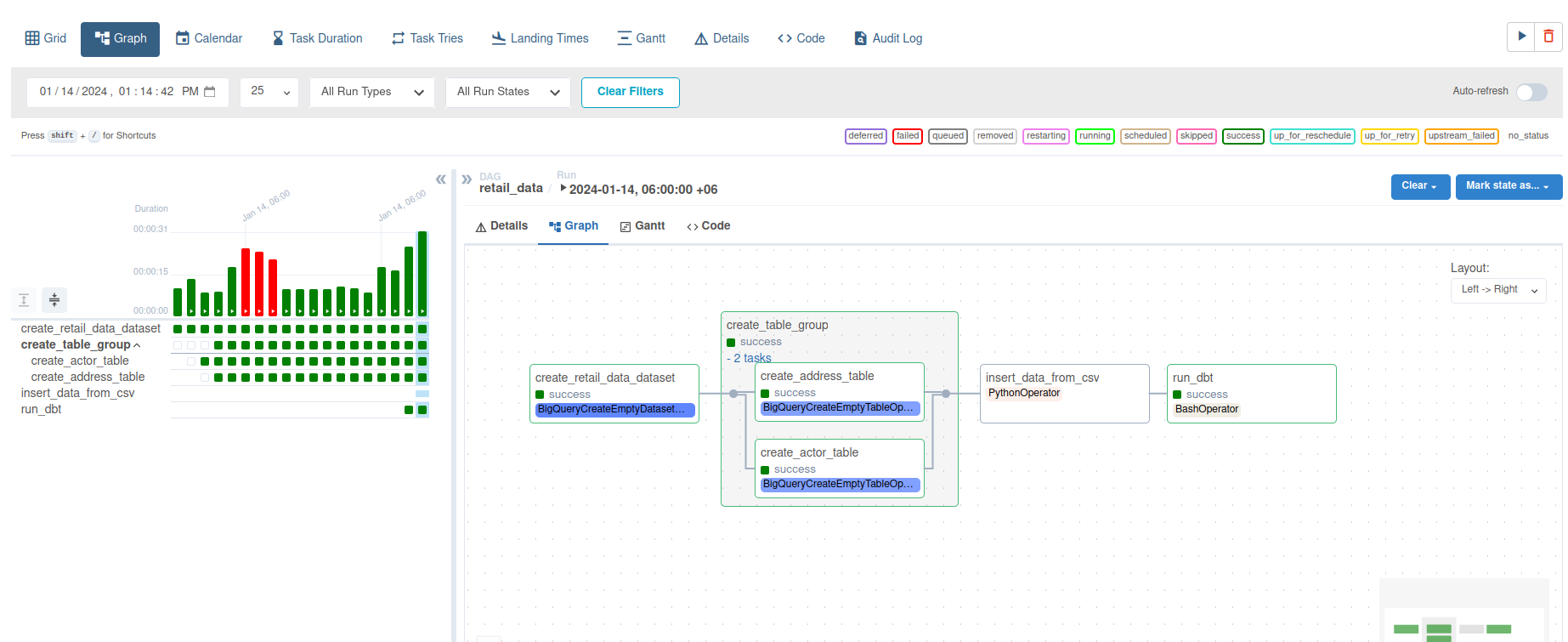

Fetch data from a simple CSV file, load it into a GCP BigQuery table, run dbt to build the data warehouse (DWH) models, and run Soda to check data quality.

- Create a Python virtual environment (venv).

- Activate it.

- Install the dependencies from the `requirements.txt` file.

- Configure Airflow in `airflow.cfg`.

- Create a GCP service account and add a key to it. Download the key in JSON format.

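- In the Airflow UI, open the `Variables` section (Admin → Variables) and add the following three variables:
  - `gcp_project`: your GCP project ID
  - `gcp_bigquery_retail_dataset`: the BigQuery dataset for the retail data
  - `gcp_account`: path to the downloaded JSON key file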

- In the `Connections` section (Admin → Connections), add a new GCP (Google Cloud) connection:
  - Connection ID: `my_gcp_conn`
  - Value: the full content of the downloaded service account JSON key file

- At the bottom of `dags/data_retail_project.py`, modify the bash commands so they point to the dbt and Soda project directories and to the environments from which dbt and Soda will run.

- Start the Airflow webserver: `airflow webserver`

- Start the Airflow scheduler in a second terminal: `airflow scheduler`
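For the `airflow.cfg` step above, a minimal sketch of setting options programmatically instead of by hand. It assumes the file is a standard INI file, which `airflow.cfg` is; the helper name `set_airflow_options` is hypothetical. Note that `configparser` drops comments when it rewrites the file, so keep a backup of the original.

```python
from __future__ import annotations

import configparser


def set_airflow_options(cfg_path: str, options: dict[tuple[str, str], str]) -> None:
    """Set (section, key) -> value pairs in an airflow.cfg-style INI file (hypothetical helper).

    interpolation=None keeps values that contain '%' (e.g. log formats) verbatim.
    Comments in the original file are NOT preserved when it is rewritten.
    """
    parser = configparser.ConfigParser(interpolation=None)
    parser.read(cfg_path)  # a missing file is silently treated as empty
    for (section, key), value in options.items():
        if not parser.has_section(section):
            parser.add_section(section)
        parser.set(section, key, value)
    with open(cfg_path, "w", encoding="utf-8") as fh:
        parser.write(fh)
```

For example, `set_airflow_options("airflow.cfg", {("core", "dags_folder"): "/path/to/project/dags", ("core", "load_examples"): "False"})` would point Airflow at this project's `dags/` folder and hide the bundled example DAGs; the path is a placeholder, and the file usually lives in `$AIRFLOW_HOME` (default `~/airflow`).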
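Before wiring the service account key from the steps above into Airflow, it can help to check that the downloaded file really is a service account key. This sketch checks the standard fields of a GCP service account key JSON file; the function name `load_service_account_key` is hypothetical.

```python
import json

# Fields present in every GCP service account key file that Google client libraries rely on.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def load_service_account_key(path: str) -> dict:
    """Load a service account key file and fail early if it looks wrong (hypothetical helper)."""
    with open(path, encoding="utf-8") as fh:
        key = json.load(fh)
    missing = [field for field in REQUIRED_FIELDS if not key.get(field)]
    if missing:
        raise ValueError(f"key file is missing fields: {missing}")
    if key["type"] != "service_account":
        raise ValueError(f"expected type 'service_account', got {key['type']!r}")
    return key
```

The returned `project_id` is a convenient value for the `gcp_project` variable.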
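As an alternative to adding the three Variables through the UI, Airflow also reads Variables from environment variables named `AIRFLOW_VAR_<NAME>` (name upper-cased). A small sketch that renders them as shell `export` lines; the helper names and the example values are hypothetical. Variables set this way do not appear in the UI, and they must be present in the environment of the scheduler (and any workers) before it starts.

```python
from __future__ import annotations

import shlex


def airflow_variable_env(variables: dict[str, str]) -> dict[str, str]:
    """Map Airflow Variable names to the AIRFLOW_VAR_<NAME> env vars Airflow looks up."""
    return {f"AIRFLOW_VAR_{name.upper()}": value for name, value in variables.items()}


def export_lines(variables: dict[str, str]) -> list[str]:
    """Render the mapping as shell `export` lines, quoting values that need it."""
    return [
        f"export {env_name}={shlex.quote(value)}"
        for env_name, value in airflow_variable_env(variables).items()
    ]
```

For example, `export_lines({"gcp_project": "my-project"})` yields `["export AIRFLOW_VAR_GCP_PROJECT=my-project"]`, and `Variable.get("gcp_project")` inside the DAG would then resolve to `my-project`.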
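The `my_gcp_conn` connection from the Connections step can likewise be supplied through an `AIRFLOW_CONN_<CONN_ID>` environment variable. This sketch assumes Airflow 2.3 or later (which accepts JSON-serialised connections in that variable) and a recent Google provider that reads the un-prefixed extra field `keyfile_dict`; older provider versions expect `extra__google_cloud_platform__keyfile_dict` instead, so check the provider docs for your version. The function name is hypothetical.

```python
from __future__ import annotations

import json


def gcp_connection_env(conn_id: str, key_path: str) -> tuple[str, str]:
    """Return (env var name, JSON value) for a Google Cloud connection (hypothetical helper).

    Assumes Airflow >= 2.3 JSON connection format and the un-prefixed
    "keyfile_dict" extra used by recent apache-airflow-providers-google releases.
    """
    with open(key_path, encoding="utf-8") as fh:
        key_text = fh.read()  # the raw key JSON, as it would be pasted into the UI form
    value = json.dumps(
        {"conn_type": "google_cloud_platform", "extra": {"keyfile_dict": key_text}}
    )
    return f"AIRFLOW_CONN_{conn_id.upper()}", value
```

Calling `gcp_connection_env("my_gcp_conn", "/path/to/key.json")` (placeholder path) returns `AIRFLOW_CONN_MY_GCP_CONN` and a JSON string to export under that name.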
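For the step that edits the bash commands in `dags/data_retail_project.py`, one way to keep the dbt and Soda paths in one place is to build each command string from a virtualenv directory and a project directory. This is a sketch under the assumption that dbt and Soda are installed in separate virtualenvs; `venv_bash_command` is a hypothetical helper, not code from the DAG.

```python
from __future__ import annotations

import shlex


def venv_bash_command(env_dir: str, project_dir: str, command: list[str]) -> str:
    """Activate a virtualenv, cd into a project dir, then run a CLI (hypothetical helper).

    `source` is a bash builtin, so the result is meant for a bash shell.
    Paths and arguments are shell-quoted so spaces do not break the command.
    """
    activate = f"{shlex.quote(env_dir)}/bin/activate"
    return f"source {activate} && cd {shlex.quote(project_dir)} && {shlex.join(command)}"
```

For example, `venv_bash_command("/path/to/dbt_venv", "/path/to/dbt_project", ["dbt", "run"])` (placeholder paths) returns a string that could be passed as `bash_command` to a `BashOperator`. A Soda scan would follow the same pattern with `["soda", "scan", "-d", "<data_source>", "-c", "configuration.yml", "checks.yml"]`, where the data source name and file names stand in for whatever the Soda project defines.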
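Once `airflow webserver` and `airflow scheduler` are running, the Airflow 2.x webserver exposes a `/health` endpoint reporting the status of the metadata database and the scheduler. A minimal sketch, assuming the default port 8080 on localhost; both function names are hypothetical.

```python
import json
import urllib.request


def fetch_health(base_url: str = "http://localhost:8080") -> dict:
    """GET <base_url>/health from the Airflow webserver and decode the JSON body."""
    with urllib.request.urlopen(f"{base_url}/health", timeout=5) as resp:
        return json.load(resp)


def components_healthy(health: dict) -> bool:
    """True only when both the metadata database and the scheduler report 'healthy'."""
    return all(
        health.get(component, {}).get("status") == "healthy"
        for component in ("metadatabase", "scheduler")
    )
```

Running `components_healthy(fetch_health())` after startup gives a quick yes/no before triggering the DAG; if it returns `False`, the scheduler is usually the process that is not running yet.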