You Xie, Hongyi Xu, Guoxian Song, Chao Wang, Yichun Shi, Linjie Luo

ByteDance Inc.


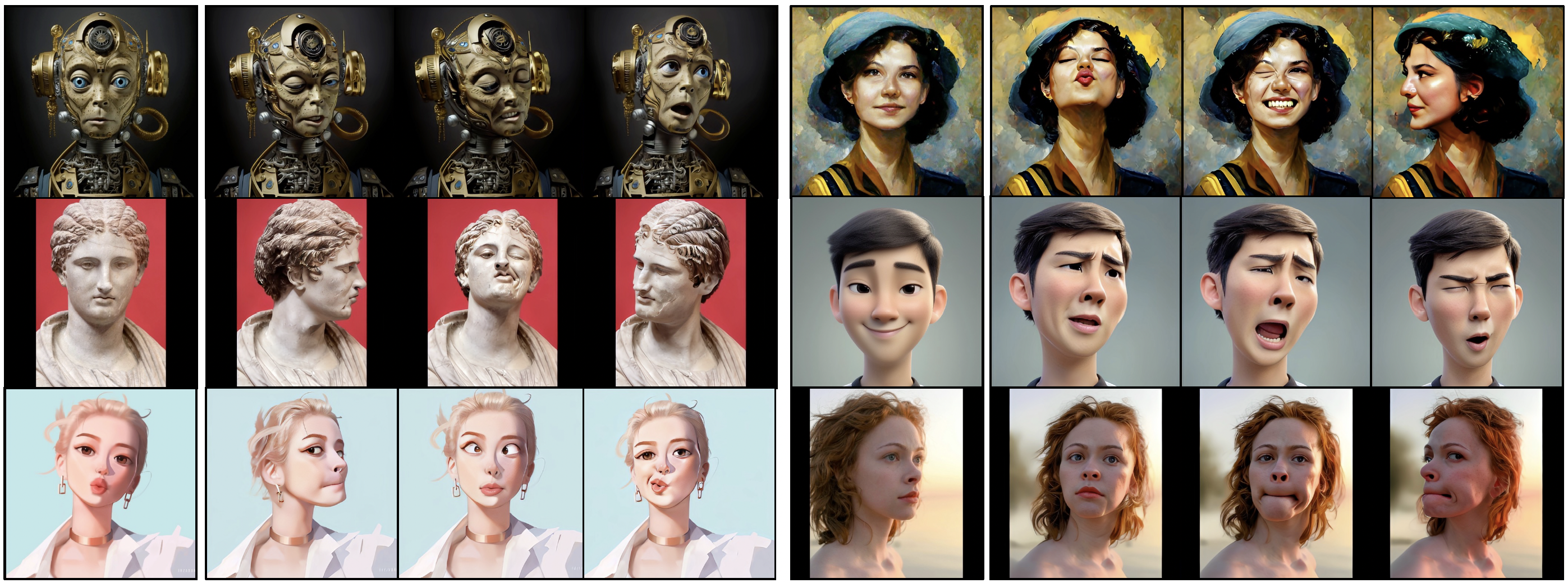

This repository contains the video generation code for the SIGGRAPH 2024 paper X-Portrait.

Note: Python 3.9 and CUDA 11.8 are required.
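Before installing, it can help to confirm that the interpreter and CUDA build match the versions in the note above. The following is a minimal sketch, not part of the repository: the helper names `check_versions` and `detect_cuda_version` are made up for illustration, and it assumes PyTorch is the library that exposes the CUDA version. If PyTorch is not installed yet, the CUDA check simply reports `None`.

```python
import sys

REQUIRED_PYTHON = (3, 9)
REQUIRED_CUDA = "11.8"


def check_versions(python_version, cuda_version):
    """Return a list of human-readable mismatches (empty if everything matches)."""
    problems = []
    major_minor = tuple(python_version[:2])
    if major_minor != REQUIRED_PYTHON:
        problems.append("Python %d.%d found, 3.9 required" % major_minor)
    if cuda_version != REQUIRED_CUDA:
        problems.append("CUDA %s found, 11.8 required" % cuda_version)
    return problems


def detect_cuda_version():
    """CUDA version PyTorch was built against, or None if it cannot be determined."""
    try:
        import torch  # assumption: PyTorch is part of the installed environment
    except ImportError:
        return None
    return torch.version.cuda


if __name__ == "__main__":
    issues = check_versions(sys.version_info, detect_cuda_version())
    for line in issues or ["Environment OK"]:
        print(line)
```

Note that `torch.version.cuda` reports the CUDA toolkit version PyTorch was compiled with, which is not necessarily the version supported by the installed driver.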

bash env_install.sh

Please download the pre-trained model from here and save it under "checkpoint/".

bash scripts/test_xportrait.sh

parameters:

model_config: config file of the corresponding model

output_dir: output path for generated video

source_image: path of source image

driving_video: path of driving video

best_frame: index of the frame in the driving video whose head pose best matches the source image (note: the precision of the best_frame index might affect the final quality)

out_frames: number of frames to generate

num_mix: number of overlapping frames when applying prompt travelling during inference

ddim_steps: number of inference steps (e.g., 30 steps for ddim)
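Since the choice of best_frame can affect quality, one possible way to pick it is to compare head-pose estimates frame by frame. The sketch below is only an illustration and is not taken from the repository: `pick_best_frame` is a hypothetical helper, the poses are assumed to be (yaw, pitch, roll) angles in degrees obtained from any head-pose estimator, and the returned index is assumed to be zero-based.

```python
import math


def pick_best_frame(source_pose, driving_poses):
    """Return the index of the driving frame whose pose is closest to the source.

    Poses are (yaw, pitch, roll) tuples in degrees. How they are estimated is
    outside this sketch; any head-pose estimator could supply them.
    """
    if not driving_poses:
        raise ValueError("driving_poses is empty")

    def distance(pose):
        # Euclidean distance between two pose triples.
        return math.sqrt(sum((a - b) ** 2 for a, b in zip(pose, source_pose)))

    return min(range(len(driving_poses)), key=lambda i: distance(driving_poses[i]))


# Placeholder poses, not real measurements.
source = (2.0, -1.0, 0.5)
driving = [(25.0, 3.0, 1.0), (10.0, 0.0, 0.0), (3.0, -2.0, 0.0), (-15.0, 5.0, 2.0)]
print(pick_best_frame(source, driving))  # prints 2
```

A plain Euclidean distance treats the three angles equally; weighting yaw more heavily or checking the candidate frame by eye may work better in practice.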

efficiency: Our model is compatible with LCM LoRA (https://huggingface.co/latent-consistency/lcm-lora-sdv1-5), which helps reduce the number of inference steps.

expressiveness: The expressiveness of the results can be boosted by providing the results of other face reenactment approaches, e.g., face vid2vid, via the parameter "--initial_facevid2vid_results".
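To see how the parameters above fit together, the following sketch assembles them into a single command line. It is a hedged illustration only: the entry-point path is a placeholder (the README names only scripts/test_xportrait.sh), all file paths and numeric values are made up, and passing every parameter as a `--name value` flag is an assumption based on how "--initial_facevid2vid_results" is written.

```python
import shlex

# Hypothetical entry point: replace with the command that
# scripts/test_xportrait.sh actually runs.
ENTRY_POINT = ["python", "path/to/inference_script.py"]


def build_command(params, initial_facevid2vid_results=None):
    """Turn the documented parameters into an argument list."""
    cmd = list(ENTRY_POINT)
    for name, value in params.items():
        cmd += ["--" + name, str(value)]
    # Optional input described under "expressiveness".
    if initial_facevid2vid_results is not None:
        cmd += ["--initial_facevid2vid_results", str(initial_facevid2vid_results)]
    return cmd


# All values below are placeholders.
params = {
    "model_config": "path/to/config.yaml",
    "output_dir": "outputs/",
    "source_image": "path/to/source.png",
    "driving_video": "path/to/driving.mp4",
    "best_frame": 36,
    "out_frames": 60,
    "num_mix": 4,
    "ddim_steps": 30,
}

# Print the command instead of running it, so it can be inspected first.
print(shlex.join(build_command(params)))
```

Building the command as a list and quoting it with `shlex.join` keeps paths with spaces intact; the same list could be handed to `subprocess.run` once the entry point is confirmed.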

If you find this codebase useful for your research, please cite it using the following entry.

@inproceedings{xie2024x,

title={X-Portrait: Expressive Portrait Animation with Hierarchical Motion Attention},

author={Xie, You and Xu, Hongyi and Song, Guoxian and Wang, Chao and Shi, Yichun and Luo, Linjie},

journal={arXiv preprint arXiv:2403.15931},

year={2024}

}