Official Repository of Panacea.

[Paper] Panacea: Panoramic and Controllable Video Generation for Autonomous Driving

Yuqing Wen1*†, Yucheng Zhao2*, Yingfei Liu2*, Fan Jia2, Yanhui Wang1, Chong Luo1, Chi Zhang3, Tiancai Wang2‡, Xiaoyan Sun1‡, Xiangyu Zhang2

1University of Science and Technology of China, 2MEGVII Technology, 3Mach Drive

*Equal Contribution, †This work was done during the internship at MEGVII, ‡Corresponding Author.

[WebPage] https://panacea-ad.github.io/

Apr. 18th, 2024: We release our Gen-nuScenes dataset generated by Panacea. Please check the `metrics/` folder to use it.

Apr. 18th, 2024: We release the BEV-perception evaluation code based on StreamPETR. Please check the `metrics/` folder and follow `metrics/README.md` for detailed evaluation.

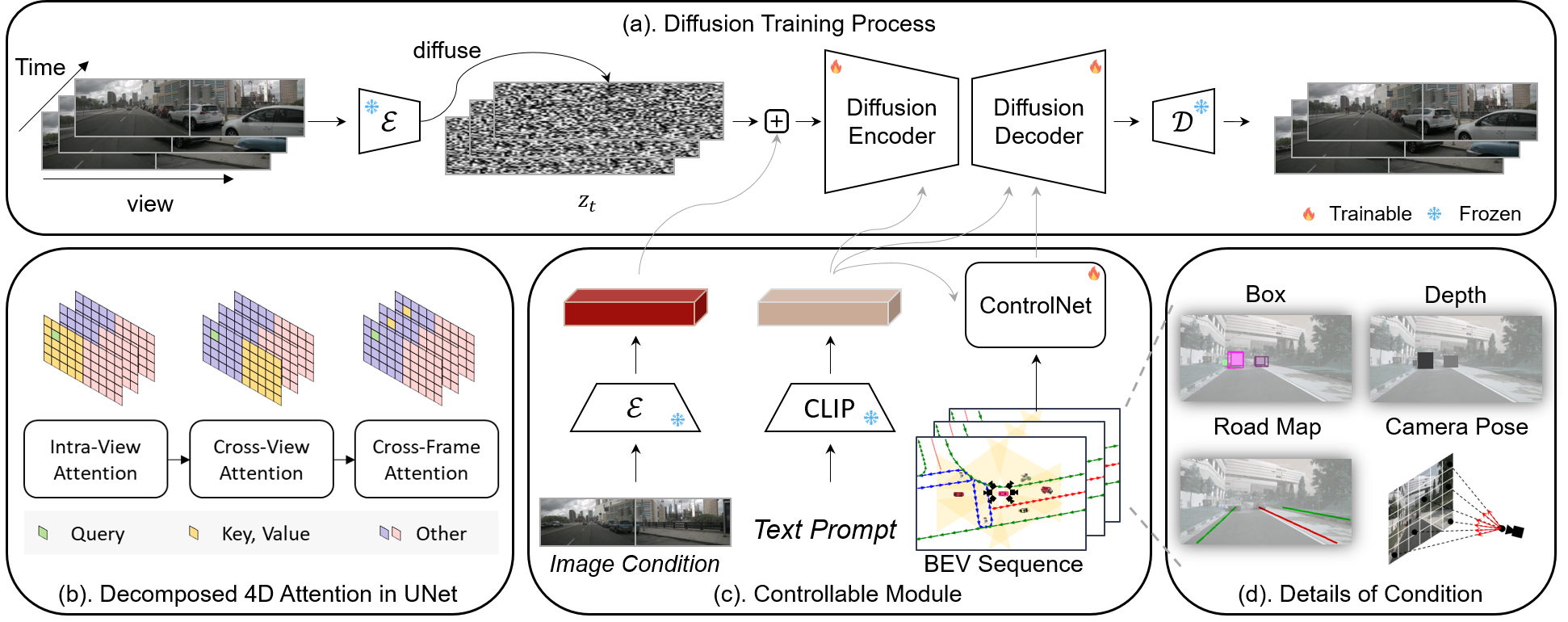

Overview of Panacea. (a). The diffusion training process of Panacea, enabled by a diffusion encoder and decoder with the decomposed 4D attention module. (b). The decomposed 4D attention module comprises three components: intra-view attention for spatial processing within individual views, cross-view attention to engage with adjacent views, and cross-frame attention for temporal processing. (c). Controllable module for the integration of diverse signals. The image conditions are derived from a frozen VAE encoder and combined with diffused noises. The text prompts are processed through a frozen CLIP encoder, while BEV sequences are handled via ControlNet. (d). The details of BEV layout sequences, including projected bounding boxes, object depths, road maps and camera pose.
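
The decomposition in (b) can be read as three different choices of which tokens are allowed to attend to each other. The following is a minimal sketch of that grouping only, not the repository's implementation: the function name `attention_groups` is hypothetical, the wrap-around between the first and last view is an assumption about how "adjacent views" is defined, and letting cross-frame attention see every other frame of the same view is also an assumption.

```python
from itertools import product


def attention_groups(num_frames, num_views):
    """Map each (frame, view) slot to the slots its tokens may attend to, per stage.

    Hypothetical illustration of a decomposed 4D attention layout.
    """
    groups = {}
    for f, v in product(range(num_frames), range(num_views)):
        # Assumption: views form a ring, so the last view neighbours the first.
        left, right = (v - 1) % num_views, (v + 1) % num_views
        groups[(f, v)] = {
            # Spatial attention inside one image.
            "intra_view": [(f, v)],
            # Same frame, neighbouring cameras.
            "cross_view": sorted({(f, left), (f, right)}),
            # Same camera, other frames (assumption: all other frames).
            "cross_frame": [(g, v) for g in range(num_frames) if g != f],
        }
    return groups


if __name__ == "__main__":
    for stage, slots in attention_groups(3, 6)[(0, 0)].items():
        print(stage, slots)
```

Splitting attention this way keeps each stage's context small compared with attending jointly over all frames, views, and pixels at once.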

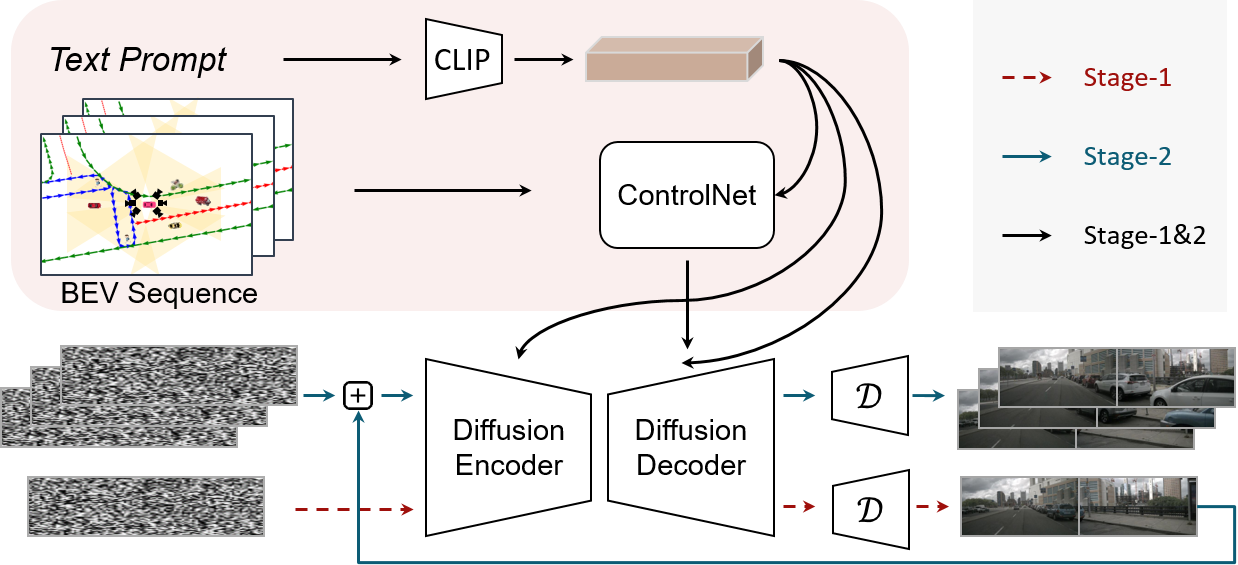

The two-stage inference pipeline of Panacea. The first stage creates multi-view images from BEV layouts; the second stage uses these images, together with the subsequent BEV layouts, to generate the following frames.
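
The control flow of the two stages can be sketched as follows. This is an illustrative outline under stated assumptions, not the actual inference code: `stage_one`, `stage_two`, and `two_stage_inference` are hypothetical names, the strings stand in for generated images, and the default of six views is an assumption.

```python
NUM_VIEWS = 6  # assumption: number of surround-view cameras


def stage_one(first_layout, num_views=NUM_VIEWS):
    """Stand-in for the image stage: one image per view from the first BEV layout."""
    return [f"img[v{v}|{first_layout}]" for v in range(num_views)]


def stage_two(first_frame, later_layouts):
    """Stand-in for the video stage.

    Each later frame is conditioned on the stage-one images
    and on the BEV layout of that frame.
    """
    return [
        [f"frame[{layout}|cond={img}]" for img in first_frame]
        for layout in later_layouts
    ]


def two_stage_inference(bev_layouts):
    """Run both stages and return a list of frames, each a list of views."""
    first_frame = stage_one(bev_layouts[0])
    later_frames = stage_two(first_frame, bev_layouts[1:])
    return [first_frame] + later_frames


if __name__ == "__main__":
    video = two_stage_inference(["bev0", "bev1", "bev2"])
    print(len(video), "frames,", len(video[0]), "views each")
```

In this outline the stage-one images act as the conditional images for the rest of the clip, which is also what lets the second stage start from real images when they are available.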


Controllable multi-view video generation. Panacea generates realistic, controllable videos with good temporal and cross-view consistency.


Video generation with variable attribute controls, such as weather, time, and scene. These controls allow Panacea to simulate a variety of rare driving scenarios, including extreme weather conditions such as rain and snow, which greatly enhances the diversity of the data.


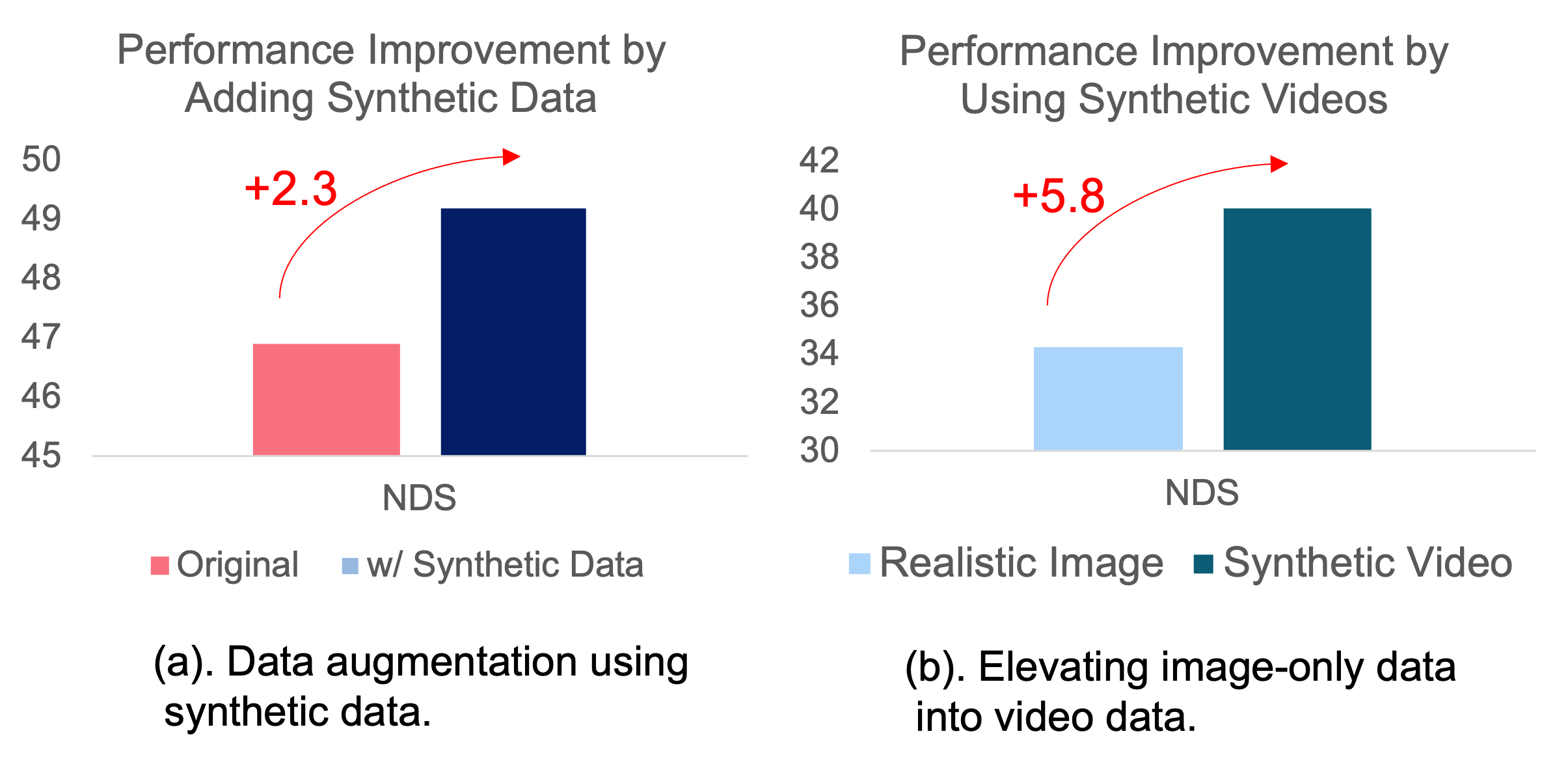

(a). Panoramic video generation based on BEV (Bird’s-Eye-View) layout sequence facilitates the establishment of a synthetic video dataset, which enhances perceptual tasks. (b). Producing panoramic videos with conditional images and BEV layouts can effectively elevate image-only datasets to video datasets, thus enabling the advancement of video-based perception techniques.

@misc{wen2023panacea,
  title={Panacea: Panoramic and Controllable Video Generation for Autonomous Driving},
  author={Yuqing Wen and Yucheng Zhao and Yingfei Liu and Fan Jia and Yanhui Wang and Chong Luo and Chi Zhang and Tiancai Wang and Xiaoyan Sun and Xiangyu Zhang},
  year={2023},
  eprint={2311.16813},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}