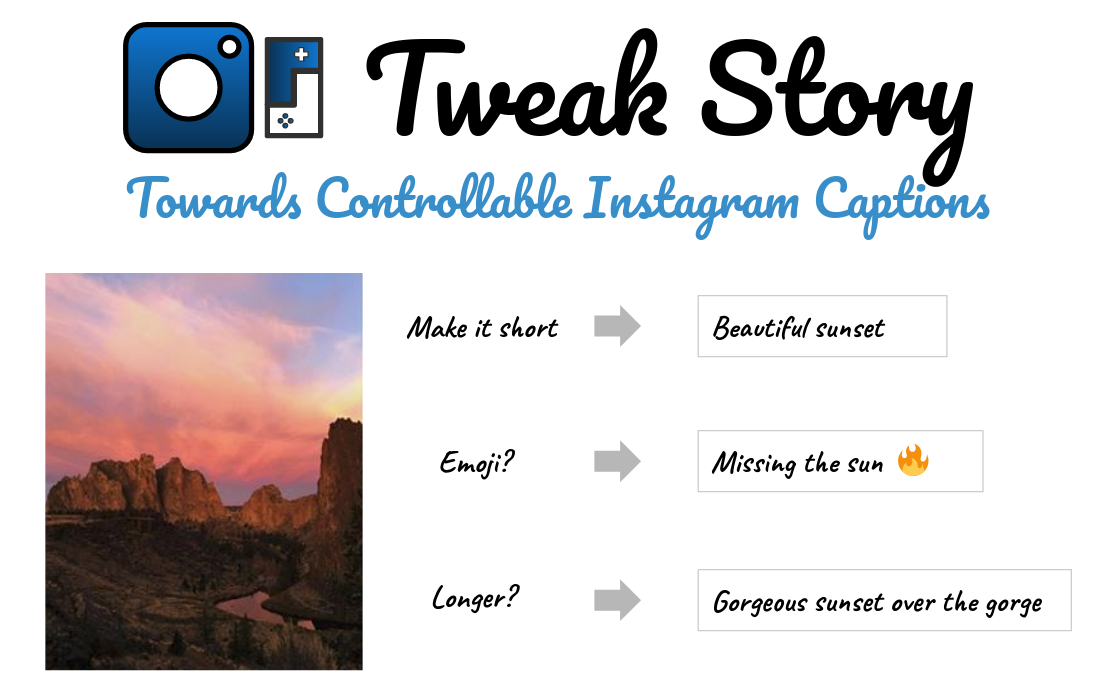

Tweak Story is an open-source project for generating controllable Instagram captions, powered by PyTorch, Transformers and Streamlit. We apply an attention-based conditional LSTM network to generate engaging captions that users can tweak. Users can mix and match the attributes we offer to give captions their own flavor. At the current stage, the available attributes are sentence length and an emoji flag.
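To make "attention-based conditional LSTM" more concrete, here is a minimal pure-Python sketch of two ingredients one decoding step needs: soft attention over image region features in the style of Show, Attend and Tell (Xu et al., 2015), and a condition vector for the user's attributes that is appended to the LSTM input, in the spirit of Ficler and Goldberg (2017). It is not Tweak Story's actual PyTorch implementation: the function names, the three length buckets in `LENGTH_BUCKETS` and the concatenation layout are hypothetical, and the weights would normally be learned rather than hand-set.

```python
import math

# Hypothetical attribute vocabulary for this sketch only (not the app's config).
LENGTH_BUCKETS = ("short", "medium", "long")


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def soft_attention(features, hidden, W_f, W_h, w_a):
    """Additive soft attention: e_i = w_a . tanh(W_f f_i + W_h h).

    features: L region vectors of size D from the image encoder
    hidden:   previous LSTM hidden state of size H
    Returns the attention weights alpha (length L) and the context
    vector z = sum_i alpha_i * f_i (length D).
    """
    h_proj = matvec(W_h, hidden)
    scores = []
    for f in features:
        f_proj = matvec(W_f, f)
        scores.append(sum(w * math.tanh(a + b) for w, a, b in zip(w_a, f_proj, h_proj)))
    alpha = softmax(scores)
    dim = len(features[0])
    context = [sum(a * f[d] for a, f in zip(alpha, features)) for d in range(dim)]
    return alpha, context


def condition_vector(length, emoji):
    """Hypothetical attribute encoding: one-hot length bucket + emoji flag."""
    if length not in LENGTH_BUCKETS:
        raise ValueError(f"unknown length bucket: {length!r}")
    one_hot = [1.0 if length == b else 0.0 for b in LENGTH_BUCKETS]
    return one_hot + [1.0 if emoji else 0.0]


def decoder_input(word_embedding, context, condition):
    """LSTM input at one step: [word embedding; attention context; condition]."""
    return list(word_embedding) + list(context) + list(condition)


if __name__ == "__main__":
    features = [[1.0, 0.0], [0.0, 1.0]]  # two toy image regions, D = 2
    alpha, z = soft_attention(features, hidden=[0.0], W_f=[[1.0, 0.0]], W_h=[[0.0]], w_a=[1.0])
    x_t = decoder_input([0.1, 0.2], z, condition_vector("short", emoji=True))
    print(alpha, len(x_t))
```

In this sketch the condition vector is fed at every step, so switching `emoji=False` to `emoji=True` changes the decoder input without touching the image features or the attention weights.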

You can find an online version of Tweak Story here.

The `app` folder has four major parts:

- `app.py`: Main script for launching our Streamlit app
- `src`: This folder contains all utility scripts for powering the app
- `ckpts`: This folder contains the model checkpoint, word map and configuration file for our model
- `demo`: This folder contains example images you can try out in our app

Download the following files and place them into the `ckpts` folder:

Clone the repo and navigate to the `app` folder:

```bash
git clone https://github.com/riven314/TweakStory.git
cd TweakStory/app
```

Build and run the Docker image locally:

```bash
make run
```

Navigate to http://localhost:8501 to use the app (Streamlit runs on port 8501 by default).
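If you script the setup (for example in CI), a small standard-library Python helper can poll that URL until the server answers. This is a sketch, not part of the repo: `wait_for_app` is a hypothetical name, and it only assumes that the app returns HTTP 200 on its root URL once Streamlit is up.

```python
import http.client
import time
import urllib.request


def wait_for_app(url="http://localhost:8501", timeout=60.0, interval=1.0):
    """Hypothetical helper: poll `url` until it returns HTTP 200.

    Returns True if the app answered within `timeout` seconds, else False.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # Connection refused, timeouts and HTTP errors (URLError is an
            # OSError subclass) all mean "not ready yet", so keep polling.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


if __name__ == "__main__":
    print("app is up" if wait_for_app() else "app did not start in time")
```

The first container start can take a while because of the model download, so a generous `timeout` is the safer default.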

Shut down the server:

```bash
make stop
```

Note: When you run the container for the first time, it will download a ResNet-101 model. The Streamlit app runs on CPU.

Run the tests from the `app` folder:

```bash
cd TweakStory/app
pytest -s tests
```

TODO

This project is part of the Data Science Incubator (Summer 2020) organized by Made With ML and was jointly developed by Alex Lau and Naman Bhardwaj. We are constantly looking for ways to improve generation quality and our deployment strategy. Contributions are welcome, so please contact us if you would like to help!

Our work is mainly based on the following published research:

```bibtex
@article{Xu2015show,
  title={Show, Attend and Tell: Neural Image Caption Generation with Visual Attention},
  author={Xu, Kelvin and Ba, Jimmy and Kiros, Ryan and Cho, Kyunghyun and Courville, Aaron and Salakhutdinov, Ruslan and Zemel, Richard and Bengio, Yoshua},
  journal={arXiv preprint arXiv:1502.03044},
  year={2015}
}

@article{Ficler2017show,
  title={Controlling Linguistic Style Aspects in Neural Language Generation},
  author={Ficler, Jessica and Goldberg, Yoav},
  journal={arXiv preprint arXiv:1707.02633},
  year={2017}
}
```