TITFormer: Combining Text and Simulating Infrared Modalities Based on Transformer for Image Enhancement

PyTorch implementation for TITFormer: Combining Text and Simulating Infrared Modalities Based on Transformer for Image Enhancement

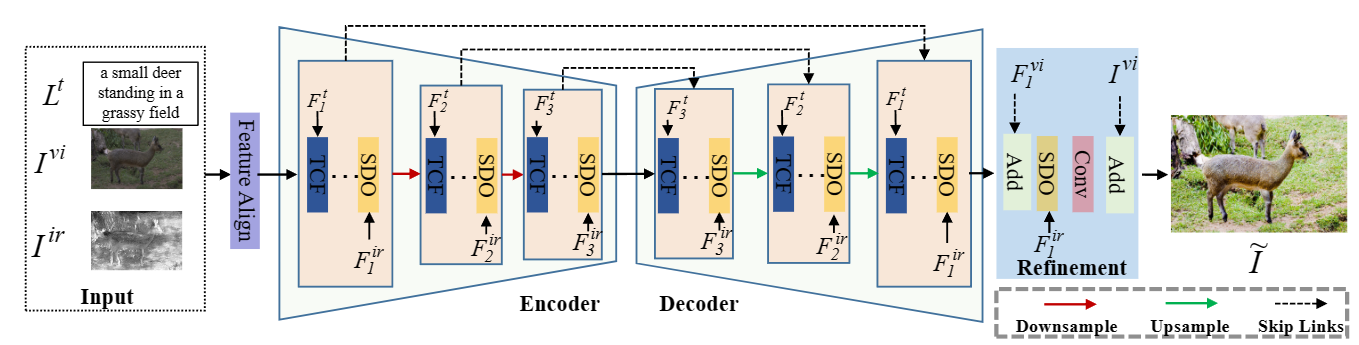

Image enhancement requires a careful balance between extracting high-level contextual information and optimizing spatial details to improve visual quality. Most existing methods rely on a single modality, which limits their ability to capture contextual features and optimize spatial details. To address these issues, this paper introduces TITFormer, a novel Transformer-based multi-modal image enhancement network that is the first to combine text and simulating infrared modalities for this task. TITFormer comprises a text channel attention fusion (TCF) network block and an infrared-guided spatial detail optimization (SDO) network block. The TCF block extracts contextual features from the high-dimensional features obtained by compressing the text modality and the original image after a spatial channel transformation. The SDO block uses simulating infrared images, characterized by pixel intensity, to adaptively guide the optimization of spatial details with the contextual features. Experimental results demonstrate that TITFormer achieves state-of-the-art performance on two publicly available benchmark datasets. Our code will be released after the review.

- bert-base-uncased/: the pretrained BERT model.

- option/: configurations for the experiments.

- data/: dataset path.

- dataset.py: the dataset class for image enhancement (FiveK and PPR10K).

- model.py: the implementation of the TITFormer model.

- trainppr.py: a Python script for training on PPR10K.

- trainrgb.py: a Python script for training on FiveK.

- Python 3.7.0

- PyTorch 1.11.0+cu113
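
To confirm that an interpreter matches the two versions listed above, a small check along the following lines may help. It is only a sketch: the helper names `split_version` and `matches` are hypothetical and not part of this repository, and the parser assumes plain numeric release strings such as `1.11.0+cu113` (pre-release tags like `a0` are not handled).

```python
import sys

# Versions listed in this README; storing them this way is this sketch's own choice.
REQUIRED_PYTHON = (3, 7)
REQUIRED_TORCH = "1.11.0+cu113"


def split_version(version):
    """Split '1.11.0+cu113' into ((1, 11, 0), 'cu113')."""
    release, _, local = version.partition("+")
    numbers = tuple(int(part) for part in release.split("."))
    return numbers, local


def matches(installed, required):
    """True when the release numbers and the local build tag (e.g. CUDA) agree."""
    return split_version(installed) == split_version(required)


if __name__ == "__main__":
    print("python ok:", sys.version_info[:2] == REQUIRED_PYTHON)
    try:
        import torch  # only importable inside an environment with PyTorch installed
        print("torch ok:", matches(torch.__version__, REQUIRED_TORCH))
    except ImportError:
        print("torch is not installed in this interpreter")
```

Comparing the local tag as well as the release numbers means that a CPU-only or differently built CUDA wheel is reported as a mismatch, which is usually what one wants to know before training.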

You can set up the environment with conda and pip as follows:

conda create -n titformer python=3.7

conda activate titformer

pip install tqdm

<!--pip install torch==1.11.0+cu102 torchvision==0.12.0+cu102 --extra-index-url https://download.pytorch.org/whl/cu102-->

pip install torch==1.11.0+cu113 torchvision==0.12.0+cu113 --extra-index-url https://download.pytorch.org/whl/cu113

pip install scikit-image

pip install opencv-python

pip install omegaconf einops transformers kornia

The paper uses the FiveK and PPR10K datasets for experiments. It is recommended to consult the pages of the dataset creators first.

- FiveK

You can download the original FiveK dataset from the dataset homepage and then preprocess the dataset using Adobe Lightroom following the instructions in Prepare_FiveK.md.

For a quick setup, you can also download only the 480p dataset preprocessed by Zeng ([GoogleDrive], [onedrive], [baiduyun:5fyk]), which includes 8-bit sRGB input images, 16-bit XYZ input images, and 8-bit sRGB groundtruth images.

After downloading the dataset, please unzip the images into the ./data/FiveK directory. Please also copy the annotation files from ./seplut/annfiles/FiveK into the same directory. The final directory structure is as follows.

./data/fiveK

input/

JPG/480p/ # 8-bit sRGB inputs

PNG/480p_16bits_XYZ_WB/ # 16-bit XYZ inputs

expertC/JPG/480p/ # 8-bit sRGB groundtruths

Infrared/PNG/480p/ # simulating infrared input

encodings.pt # text embedding data

train.txt

test.txt
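
Before training, it can be useful to check that the folder matches the listing above. The following is a minimal sketch, not part of this repository: `FIVEK_LAYOUT` and `missing_entries` are hypothetical names, and the sketch assumes that the JPG/ and PNG/ input folders sit under input/, as the listing suggests.

```python
import sys
from pathlib import Path

# Entries from the listing above, relative to the dataset root.
# Assumption: JPG/480p and PNG/480p_16bits_XYZ_WB are nested under input/.
FIVEK_LAYOUT = [
    "input/JPG/480p",
    "input/PNG/480p_16bits_XYZ_WB",
    "expertC/JPG/480p",
    "Infrared/PNG/480p",
    "encodings.pt",
    "train.txt",
    "test.txt",
]


def missing_entries(root, expected):
    """Return the expected files or folders that do not exist under root."""
    root = Path(root)
    return [entry for entry in expected if not (root / entry).exists()]


if __name__ == "__main__":
    # The dataset root can be passed as the first argument.
    dataset_root = sys.argv[1] if len(sys.argv) > 1 else "./data/FiveK"
    missing = missing_entries(dataset_root, FIVEK_LAYOUT)
    if missing:
        print("missing under", dataset_root)
        for entry in missing:
            print("  ", entry)
    else:
        print("layout looks complete")
```

The check only tests for existence; it does not verify image counts or file contents.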

- PPR10K

We download the 360p dataset (train_val_images_tif_360p and masks_360p) from PPR10K to conduct our experiments.

After downloading the dataset, please unzip the images into the ./data/PPR10K directory. Please also copy the annotation files from ./seplut/annfiles/PPR10K into the same directory. The expected directory structure is as follows.

data/PPR10K

source/ # 16-bit sRGB inputs

source_aug_6/ # 16-bit sRGB inputs with 5 augmented versions

masks/ # human-region masks

target_a/ # 8-bit sRGB groundtruths retouched by expert a

target_b/ # 8-bit sRGB groundtruths retouched by expert b

target_c/ # 8-bit sRGB groundtruths retouched by expert c

Infrared/ # 8-bit simulating infrared input

encodings.pt # text embedding data

train.txt

train_aug.txt

test.txt
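
Both dataset folders carry train.txt and test.txt. The format of these files is not documented here, so the sketch below assumes one sample name per line. Under that assumption, it reads the two split files and reports any sample that appears in both, which would leak test data into training. The function names `read_split` and `split_overlap` are hypothetical.

```python
from pathlib import Path


def read_split(path):
    """Return the non-empty, stripped lines of a split file."""
    text = Path(path).read_text(encoding="utf-8")
    return [line.strip() for line in text.splitlines() if line.strip()]


def split_overlap(train_file, test_file):
    """Return the sample names that appear in both split files, sorted."""
    return sorted(set(read_split(train_file)) & set(read_split(test_file)))


if __name__ == "__main__":
    root = Path("./data/PPR10K")
    train, test = root / "train.txt", root / "test.txt"
    if train.exists() and test.exists():
        shared = split_overlap(train, test)
        print(f"{len(read_split(train))} train, "
              f"{len(read_split(test))} test, {len(shared)} shared")
    else:
        print("split files not found under", root)
```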

Download links for the simulating infrared images and text data:

| Dataset | Link |
|---|---|
| FiveK | Google Drive / Baidu |
| PPR10K | Google Drive / Baidu |

- On FiveK-sRGB (for photo retouching)

python trainrgb.py

- On FiveK-XYZ (for tone mapping)

python trainxyz.py

- On PPR10K (for photo retouching)

python trainppr.py

You can test models in ./run/. To conduct testing, please use the following command:

python test.py

We provide trained models:

| Dataset | Task | PSNR | SSIM | Path |
|---|---|---|---|---|
| FiveK | photo retouching | 28.07 | 0.947 | pretrained_models/Ima-RGB-C 2024.01.13--20-38-53 |
| FiveK | tone mapping | 27.12 | 0.936 | pretrained_models/Ima-XYZ-C 2024.01.14--15-39-37 |
| PPR10K | photo retouching | 27.08 | 0.949 | pretrained_models/PPR-RGB-a 2024.01.15--10-45-36 |
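
For readers unfamiliar with the PSNR column, the standard definition is PSNR = 10 · log10(MAX² / MSE), in dB. The sketch below implements that textbook formula on flat sequences of 8-bit pixel values. It is not the evaluation code of this repository, and test.py may differ in details such as color space, rounding, or per-image averaging.

```python
import math


def psnr(pred, target, max_value=255.0):
    """PSNR in dB between two equally sized flat sequences of pixel values."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixel values.
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    enhanced = [100, 100, 100, 100]
    groundtruth = [110, 100, 100, 100]
    print(f"PSNR: {psnr(enhanced, groundtruth):.2f} dB")
```

Higher values mean the output is closer to the groundtruth; identical images give an infinite PSNR.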

You can evaluate models in ./pretrained_models/. To conduct testing, please use the following command:

python test.py

This codebase is based on the following open-source projects. We thank their authors for making the source code publicly available.