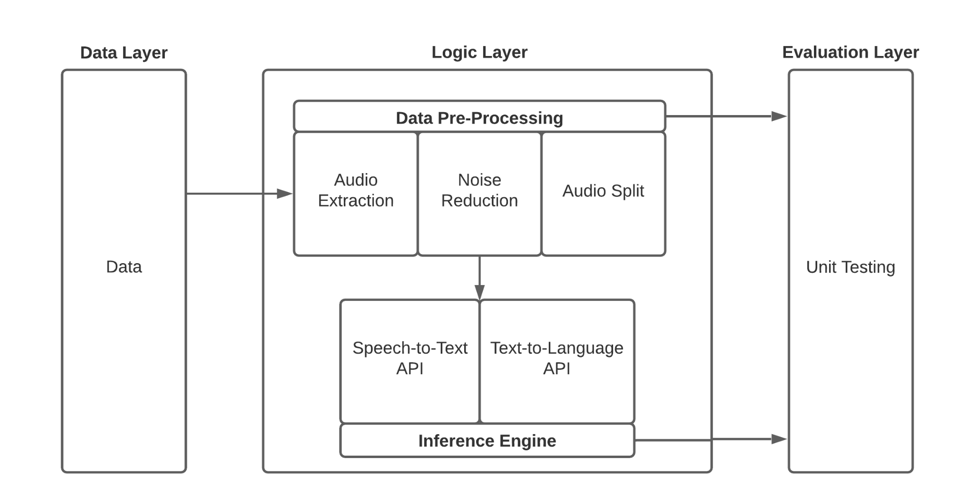

An initial proof of concept that takes classroom video data and demonstrates how ML could observe a lesson and provide feedback to teachers on some aspect of Language of Instruction, Language of Student Response, Communication Mode (reading, speaking, listening, or writing, i.e. what the teacher elicits from the students), or Activity Structures.
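One hedged way to make "Language of Instruction" measurable: once the classroom audio has been transcribed into short segments, count the share of segments detected in each language. The sketch below assumes `langdetect`'s `detect` function as the default detector. The function name `language_shares` and the per-segment framing are illustrative assumptions, not this repository's code.

```python
from collections import Counter
from typing import Callable, Dict, Iterable, Optional


def language_shares(
    segments: Iterable[str],
    detect: Optional[Callable[[str], str]] = None,
) -> Dict[str, float]:
    """Return the fraction of non-blank transcript segments labeled with each language."""
    if detect is None:
        # Assumption: langdetect is installed (pip install langdetect).
        from langdetect import DetectorFactory
        from langdetect import detect as langdetect_detect

        DetectorFactory.seed = 0  # langdetect is non-deterministic unless seeded
        detect = langdetect_detect

    # Skip blank segments, which langdetect cannot classify.
    labels = [detect(text) for text in segments if text.strip()]
    if not labels:
        return {}
    counts = Counter(labels)
    return {lang: n / len(labels) for lang, n in counts.items()}
```

Called on a list of segment transcripts, `language_shares` returns a mapping from language code (such as `"en"` or `"es"`) to its share of segments. Passing a custom `detect` callable makes it easy to swap in another detector or to test without `langdetect`.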

Use the package manager pip to install the packages.

```bash
pip install SpeechRecognition
pip install langdetect
pip install moviepy
pip install pydub
pip install openpyxl
```

Sample command to run the audio extraction tool: download a video file, say `sample_video.asf`, and put the path of the input video file in the command below:

```bash
python main.py -i "sample_video.asf"
```

In general:

```bash
python main.py -i path_of_the_video_file_to_be_tested
```

`-i` stands for input. It takes as argument the path of the video file to be tested.
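The README documents only the `-i` flag. Below is a minimal sketch of how such a flag is typically parsed with the standard-library `argparse` module. It is not `main.py`'s actual code: the `parse_args` and `main` names, the `dest="input"` attribute, and the file-existence check are assumptions for illustration.

```python
import argparse
import os
import sys
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(
        description="Run the audio extraction tool on a video file."
    )
    # -i stands for input: the path of the video file to be tested.
    parser.add_argument(
        "-i", dest="input", required=True,
        help="path of the video file to be tested",
    )
    return parser.parse_args(argv)


def main(argv: Optional[List[str]] = None) -> int:
    args = parse_args(argv)
    if not os.path.isfile(args.input):
        print(f"Input video not found: {args.input}", file=sys.stderr)
        return 1
    print(f"Processing {args.input} ...")
    # Hypothetical: audio extraction, splitting and recognition would run here.
    return 0
```

In a script, this would be wired up with `if __name__ == "__main__": sys.exit(main())`, so that a missing `-i` makes `argparse` print a usage message and exit with status 2.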

Project structure:

```
.
├── Data
│   ├── Input
│   │   └── sampleVideo.mp4
│   ├── Output
│   │   └── output.csv
│   └── Processed
│       └── output.wav
├── README.md
├── Utils
│   ├── SplitAudio.py
│   ├── SplitAudio.pyc
│   ├── __init__.py
│   ├── __init__.pyc
│   ├── __pycache__
│   │   ├── SplitAudio.cpython-37.pyc
│   │   ├── __init__.cpython-37.pyc
│   │   ├── extractAudio.cpython-37.pyc
│   │   └── speech_recognition.cpython-37.pyc
│   ├── extractAudio.py
│   ├── extractAudio.pyc
│   ├── speech_recognition.py
│   └── speech_recognition.pyc
├── after_noise_removal.png
├── audio_files_harvard.wav
├── before_noise_removal.png
├── documentation
│   └── Spring2021
│       ├── i0.pdf
│       └── i1.pdf
├── main.py
├── noise_removed_harvard.wav
├── removeNoise.sh
├── sample.wav
└── test_video.asf
```

Pivotal Tracker account: https://www.pivotaltracker.com/n/projects/2495399
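The `Utils` modules in the project structure suggest a pipeline: extract the audio track (`extractAudio.py`), split it into shorter pieces (`SplitAudio.py`), and transcribe each piece (`speech_recognition.py`). Long recordings are commonly split like this before being sent to a speech-to-text service. The sketch below shows fixed-length splitting using only the standard-library `wave` module. This is a swapped-in technique for illustration; the repository's `SplitAudio.py` may work differently (for example, silence-based splitting with pydub). The function name `split_wav`, the chunk file naming, and the 30-second default are assumptions.

```python
import os
import wave
from typing import List


def split_wav(path: str, out_dir: str, chunk_seconds: float = 30.0) -> List[str]:
    """Split a WAV file into consecutive chunks of at most chunk_seconds each."""
    os.makedirs(out_dir, exist_ok=True)
    stem = os.path.splitext(os.path.basename(path))[0]
    chunk_paths = []
    with wave.open(path, "rb") as src:
        params = src.getparams()
        frames_per_chunk = max(1, int(src.getframerate() * chunk_seconds))
        index = 0
        while True:
            frames = src.readframes(frames_per_chunk)
            if not frames:
                break  # end of the input file
            out_path = os.path.join(out_dir, f"{stem}_{index:03d}.wav")
            with wave.open(out_path, "wb") as dst:
                # Same channels, sample width and rate as the source;
                # the wave module patches the frame count in the header.
                dst.setparams(params)
                dst.writeframes(frames)
            chunk_paths.append(out_path)
            index += 1
    return chunk_paths
```

Fixed-length chunks are simple and predictable but can cut words in half; silence-based splitting avoids that at the cost of uneven chunk lengths.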