helios

Sample implementation of natural language image search with OpenAI's CLIP and Elasticsearch or OpenSearch.

Inspired by https://github.com/haltakov/natural-language-image-search.

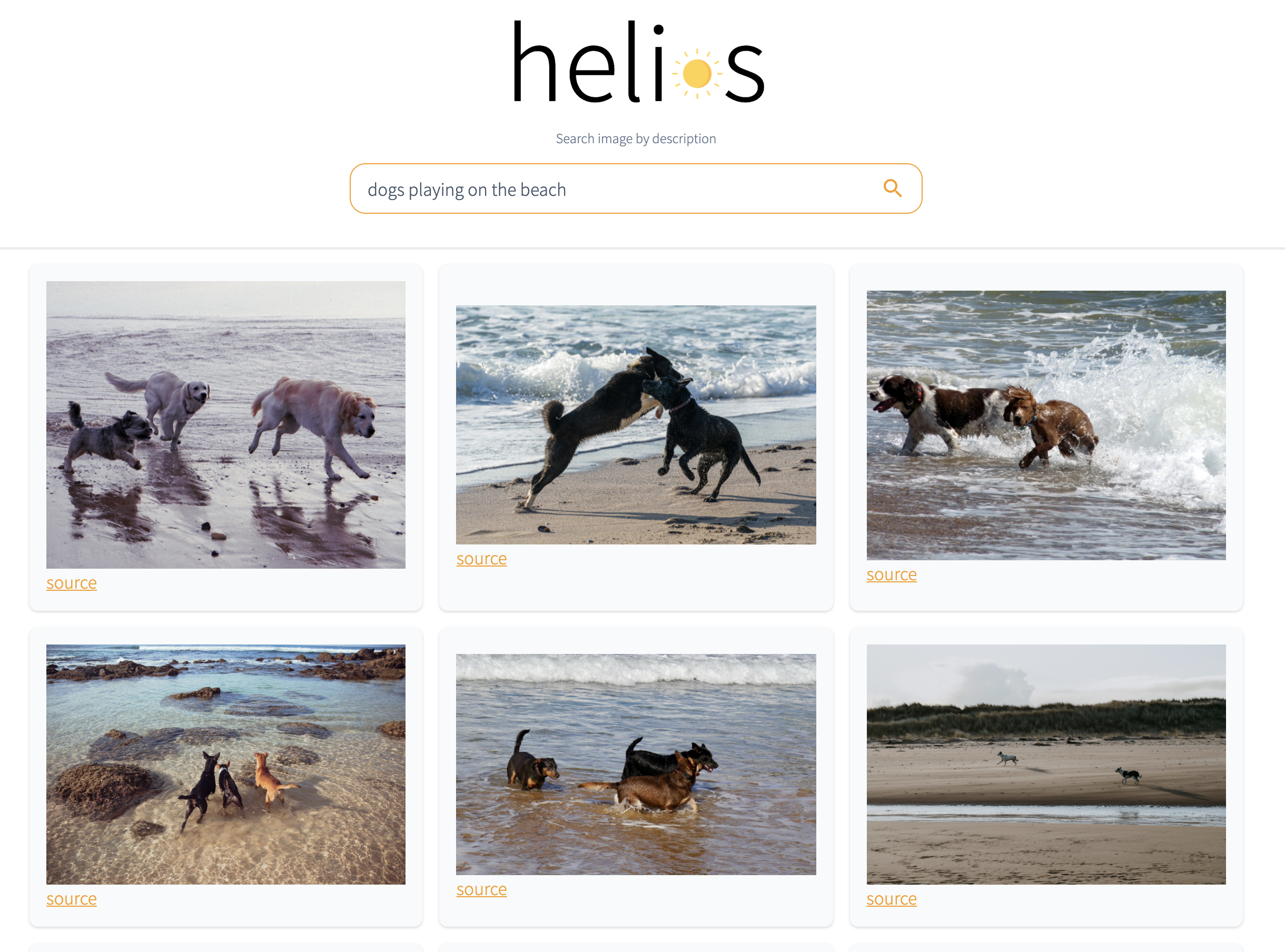

The goal is to build a web interface to index and search images with natural language.

The demo uses the Unsplash Dataset, but you are not limited to it.

Guide: OpenSearch

1 - Launch the services

Make sure you have the latest version of Docker installed, then run:

docker-compose --profile opensearch --profile backend --profile frontend up

This launches the services in the opensearch, backend, and frontend profiles.

By default, the OpenSearch credentials are admin:admin.

2 - Create the index

The next step is to create the index. The template used is defined in /scripts/opensearch_template.py.

We use approximate k-NN search because we expect a large number of images (more than 1M). Run the helper script:

docker-compose run --rm scripts create-opensearch-index

It will create an index named images.
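For orientation, an index body that enables approximate k-NN in OpenSearch might look like the sketch below. It is not the content of /scripts/opensearch_template.py: the helper name, the field names `photo_id` and `feature_vector`, and the default of 512 dimensions (the embedding size of the CLIP ViT-B/32 model) are assumptions made for illustration.

```python
import json


def build_knn_index_body(dimension: int = 512, field: str = "feature_vector") -> dict:
    """Return an index body with a knn_vector field.

    The field names and the default dimension are hypothetical; adjust them
    to match the CLIP model and template actually used.
    """
    if dimension <= 0:
        raise ValueError("dimension must be positive")
    return {
        # index.knn turns on approximate k-NN search for this index.
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                "photo_id": {"type": "keyword"},
                # knn_vector stores one fixed-size embedding per document.
                field: {"type": "knn_vector", "dimension": dimension},
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_knn_index_body(), indent=2))
```

The resulting dictionary is the kind of body that would be sent when creating the images index. Approximate search trades a small amount of recall for query times that stay low as the number of images grows.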

3 - Optional: Load the Unsplash Dataset

To be searchable, images need to be embedded with CLIP and indexed.

If you want to try it on the Unsplash Dataset, you can compute the features as done here. You can also use the pre-computed features, courtesy of @haltakov.

In both cases, you need the permission of Unsplash.

You should have two files:

- A csv file with the photo ids, let's name it photo_ids.csv
- A npy file with the features, let's name it features.npy

Move them to the /data folder, so the docker container used to run scripts can access them.

Use the helper script to index the images. For example:

docker-compose run --rm scripts index-unsplash-opensearch --start 0 --end 10000 /data/photo_ids.csv /data/features.npy

This will index the ids from 0 to 10000.
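To illustrate what indexing a slice of the dataset involves, here is a minimal sketch that pairs each photo id with its feature vector and emits the newline-delimited JSON expected by the OpenSearch `_bulk` API. It is not the helper script itself: the function name, the `feature_vector` field, and the half-open `[start, end)` slice are assumptions. In practice the ids would come from photo_ids.csv and the vectors from features.npy; plain lists are used here so the example runs on its own.

```python
import json
from typing import Iterator, Sequence


def bulk_lines(
    photo_ids: Sequence[str],
    features: Sequence[Sequence[float]],
    start: int,
    end: int,
    index: str = "images",
    field: str = "feature_vector",  # hypothetical field name
) -> Iterator[str]:
    """Yield _bulk NDJSON lines for the rows in [start, end)."""
    if len(photo_ids) != len(features):
        raise ValueError("photo_ids and features must have the same length")
    for photo_id, vector in zip(photo_ids[start:end], features[start:end]):
        # Action line: index the document under the photo id.
        yield json.dumps({"index": {"_index": index, "_id": photo_id}})
        # Source line: the document, with the vector as plain floats.
        yield json.dumps({"photo_id": photo_id, field: [float(x) for x in vector]})


if __name__ == "__main__":
    ids = ["photo-a", "photo-b", "photo-c"]
    vectors = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
    # A _bulk payload must end with a trailing newline.
    payload = "\n".join(bulk_lines(ids, vectors, 0, 2)) + "\n"
    print(payload)
```

Indexing in slices, as the `--start` and `--end` options suggest, keeps each request small and lets an interrupted run resume from the last completed range.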

4 - Launch the search

After indexing, you can search for images in the frontend.

The frontend is a simple Next.js app that sends search queries to the backend.

The backend is a Python app that embeds search queries with CLIP and sends an approximate k-NN request to the OpenSearch service.

The source code is in the app and api folders.
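The search step can be sketched as follows: once a text query has been embedded with CLIP, the embedding is placed in an OpenSearch `knn` query. This is an illustrative sketch rather than the code in the api folder. The function names and the `feature_vector` field are hypothetical, and normalizing the embedding to unit length is an assumption about how the features were prepared.

```python
import math
from typing import List, Sequence


def l2_normalize(vector: Sequence[float]) -> List[float]:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]


def build_knn_query(
    embedding: Sequence[float],
    k: int = 10,
    field: str = "feature_vector",  # hypothetical field name
) -> dict:
    """Build the body of an approximate k-NN search request."""
    return {
        "size": k,  # number of hits to return
        "query": {
            "knn": {
                # k is the number of nearest neighbors to retrieve.
                field: {"vector": l2_normalize(embedding), "k": k}
            }
        },
    }


if __name__ == "__main__":
    # A toy 2-dimensional vector stands in for a real CLIP text embedding.
    print(build_knn_query([3.0, 4.0], k=5))
```

The returned dictionary would be sent as the body of a search request against the images index, and the hits would come back ordered by similarity to the query embedding.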