This repo is an extended version of the original one, focused on the integration with the streaming services provided by Red Hat OpenShift Application Services.

The Red Hat OpenShift Streams for Apache Kafka and Red Hat OpenShift Service Registry services are no longer available as part of the Red Hat Developer Sandbox without cost.

If you are interested in using these managed services (and following this repository), please contact your local account team or contact us here.

This repo integrates the following managed services:

- Red Hat Developer Sandbox provides immediate access into an OpenShift cluster to deploy applications.

- Red Hat OpenShift Streams for Apache Kafka is a fully hosted and managed service that provides running Apache Kafka brokers as a cloud service. Apache Kafka is an open-source distributed event streaming platform for high-performance data pipelines, streaming analytics, data integration, and mission-critical applications.

- Red Hat OpenShift Service Registry is a fully hosted and managed service that provides a cloud service of running an API and Schema registry instance. Service Registry is a datastore for sharing standard event schemas and API designs across API and event-driven architectures. You can use Service Registry to decouple the structure of your data from your client applications, and to share and manage your data types and API descriptions at runtime using a REST interface.

Remember that this application is based on Quarkus, so you are getting the best features of OpenShift and Java in the same package ... with a really fast startup ... like a 🚀

Oct 21, 2022 2:14:31 PM io.quarkus.bootstrap.runner.Timing printStartupTime

INFO: kafka-clients-quarkus-sample 2.13.3-SNAPSHOT on JVM (powered by Quarkus 2.13.3.Final) started in 3.802s. Listening on: http://0.0.0.0:8080

In 'native' mode, the application is an even faster 🚀, starting in a fraction of a second.

2022-10-21 14:24:32,089 INFO [io.quarkus] (main) kafka-clients-quarkus-sample 2.13.3-SNAPSHOT native (powered by Quarkus 2.13.3.Final) started in 0.169s. Listening on: http://0.0.0.0:8080

To deploy this application into an OpenShift environment, we use the amazing JKube.

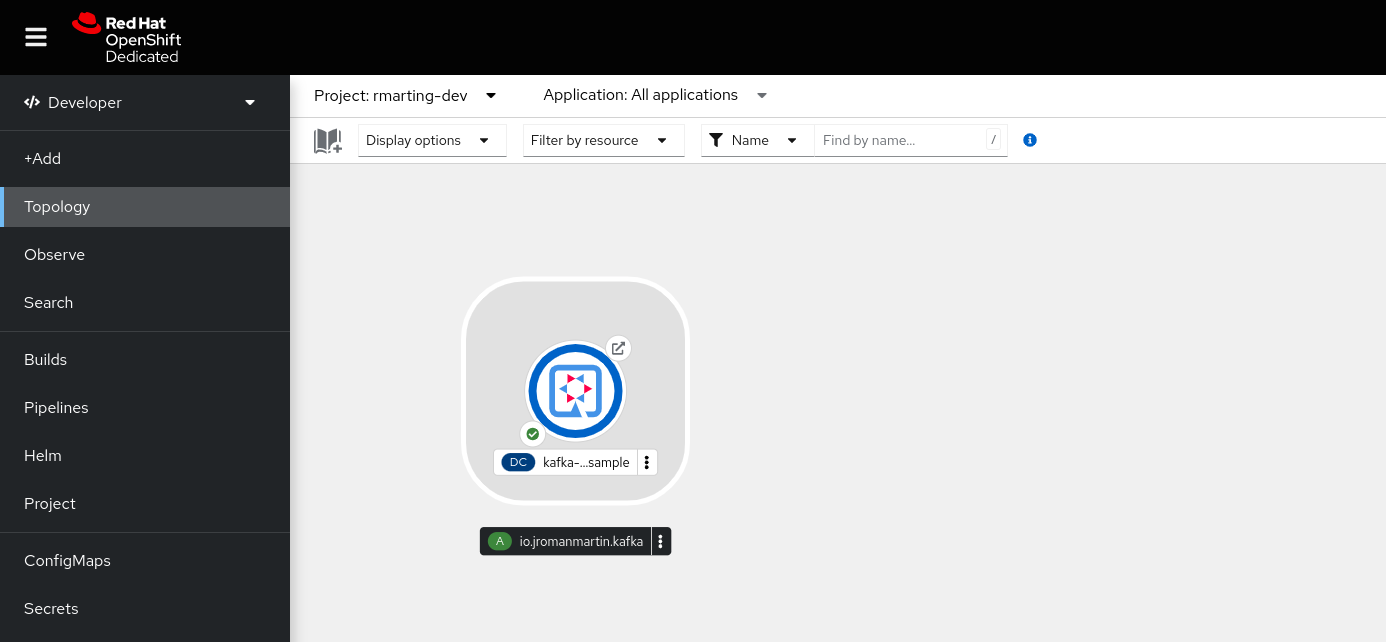

This application deployed in the Red Hat Developer Sandbox looks like this:

The environment and services are provided by the different Red Hat managed services. It is very easy to get started: you only need a Red Hat Developer account. You can sign up here.

Once your account is created and ready, we need to install the Red Hat OpenShift Application Services CLI (rhoas), which helps us manage all the services from our terminal. Please follow the instructions for your platform here.

This repo was tested with the following version:

❯ rhoas version

rhoas version 0.53.0

So, we are now ready to log in to the Red Hat OpenShift Application Services:

rhoas login

And, finally, we need to log in to our Red Hat OpenShift Sandbox environment.

On the right side of the navigation bar our username is displayed; clicking on it shows the Copy login command option. This option shows us an oc command to log in to our Red Hat OpenShift Sandbox from a terminal. We copy and paste that command into our terminal (similar to):

oc login --token=<TOKEN> --server=https://api.<SANDBOX>.openshiftapps.com:6443

Now, we are ready to deploy our managed services.

This command will create our own instance of Apache Kafka easily:

rhoas kafka create --name event-bus --use

Provisioning a new Apache Kafka instance takes some time (a few minutes), but we can verify the status with:

rhoas status kafka

The Status property must be ready before continuing with these instructions. This simple command can check the provisioning status:

until ready=$(rhoas status kafka -o json | jq .kafka.status) && [ "$ready" == "\"ready\"" ]; do echo "Kafka not ready"; sleep 10; done; echo "Kafka ready"

The next step is to create the Kafka Topic used by our application:

rhoas kafka topic create --name messages

This command will create our own instance of Service Registry easily:

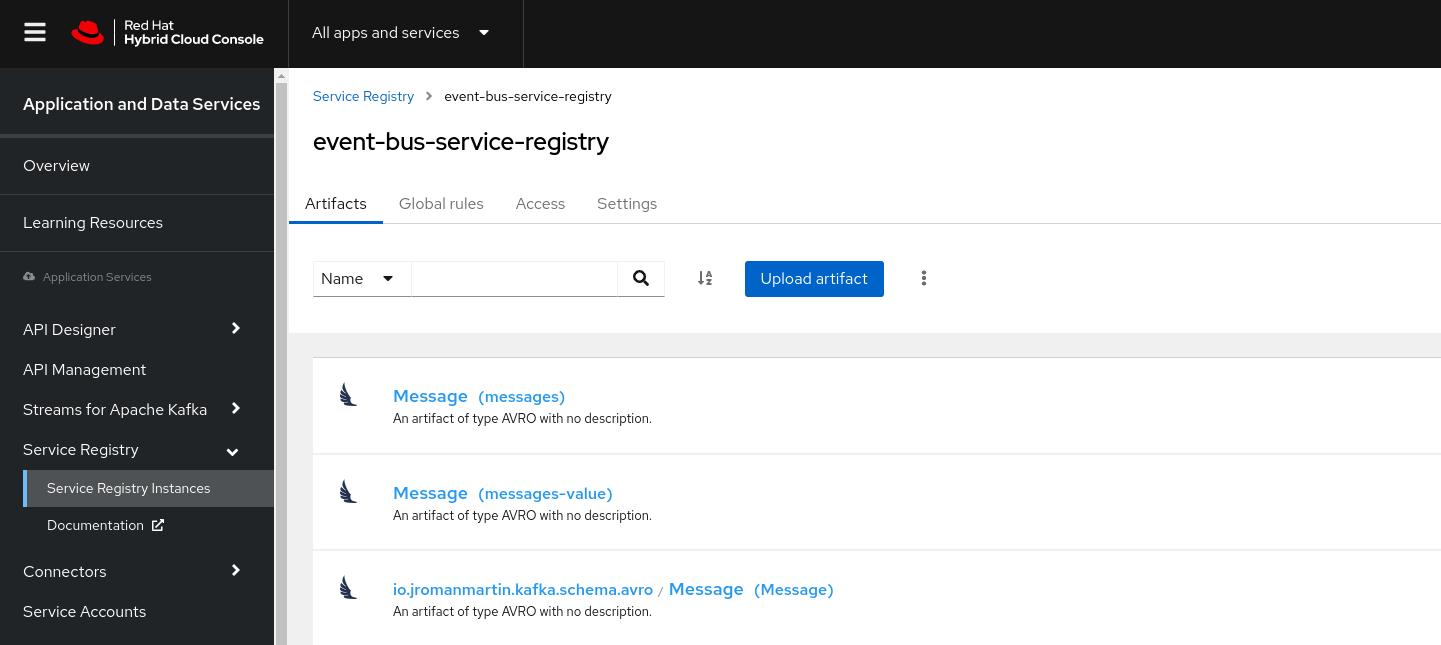

rhoas service-registry create --name event-bus-service-registry --use

The Status property must be ready before continuing with these instructions. This simple command can check the provisioning status:

until ready=$(rhoas status service-registry -o json | jq .registry.status) && [ "$ready" == "\"ready\"" ]; do echo "Service Registry not ready"; sleep 10; done; echo "Service Registry ready"

Your Service Registry instance provides a user interface to manage the artifacts. This command shows the URL to open in a browser:

rhoas service-registry describe -o json | jq .browserUrl

Once our application services are ready, we need to get the technical details of each of them to use in our application.
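The two readiness loops above share the same shape and never give up. As a minimal sketch (not part of the original repository), the helper below polls any status command until it prints `ready` or a timeout expires. The function names `wait_until_ready`, `kafka_status` and `registry_status` are hypothetical; the wrappers reuse the `rhoas status` commands shown earlier, with `jq -r` to strip the JSON quotes.

```shell
# wait_until_ready: poll a status command until it prints "ready" or a timeout expires.
# Usage: wait_until_ready <timeout_seconds> <interval_seconds> <command...>
wait_until_ready() {
  local timeout="$1" interval="$2"
  shift 2
  local elapsed=0 status
  while true; do
    # Run the caller-supplied command; a failing command counts as "not ready yet".
    status=$("$@" 2>/dev/null) || status=""
    if [ "$status" = "ready" ]; then
      echo "ready after ${elapsed}s"
      return 0
    fi
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "timed out after ${elapsed}s (last status: ${status:-unknown})" >&2
      return 1
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
}

# Hypothetical wrappers around the status commands used in this README.
kafka_status() { rhoas status kafka -o json | jq -r .kafka.status; }
registry_status() { rhoas status service-registry -o json | jq -r .registry.status; }

# Example (requires rhoas and jq): wait up to 10 minutes, checking every 10 seconds.
# wait_until_ready 600 10 kafka_status
# wait_until_ready 600 10 registry_status
```

The timeout makes the loop safe to use in scripts, where an instance that never becomes ready would otherwise block forever.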

First we will get the configuration of these services with the following command:

rhoas generate-config --type env --output-file ./event-bus-config.env --overwrite

The event-bus-config.env file will contain the right values of our instances using the following environment variables:

- KAFKA_HOST has the bootstrap server connection

- SERVICE_REGISTRY_URL has the Service Registry base URL of our instance

- SERVICE_REGISTRY_CORE_PATH has the URI to interact with the Service Registry

- SERVICE_REGISTRY_COMPAT_PATH has the compatibility (compat) URI of the Service Registry
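Before storing the generated file anywhere, it may be worth confirming that all four variables are actually present. The sketch below is an illustration only: `check_env_file` is a hypothetical helper, and it assumes the file uses plain `NAME=value` lines (no `export` prefix), which is not confirmed by this README.

```shell
# check_env_file: verify that every named variable has a non-empty value in an env file.
# Usage: check_env_file <file> <VAR>...
check_env_file() {
  local file="$1" missing=0 name
  shift
  for name in "$@"; do
    # Match lines of the form NAME=value with at least one character after '='.
    if ! grep -Eq "^${name}=.+" "$file"; then
      echo "missing or empty: ${name}" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example:
# check_env_file ./event-bus-config.env \
#   KAFKA_HOST SERVICE_REGISTRY_URL SERVICE_REGISTRY_CORE_PATH SERVICE_REGISTRY_COMPAT_PATH
```

The function returns a non-zero status if any variable is missing or empty, so it can guard later steps in a script.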

These properties will be needed by our application at runtime, so we will store them in a ConfigMap in our namespace. This ConfigMap will be mounted in our application deployment manifest.

oc create configmap event-bus-config --from-env-file=./event-bus-config.env

The application services have authentication and authorization (Auth/Authz) enabled, so we need valid credentials to access them. These credentials are provided by a Service Account. The Service Account provides a username (Client ID) and password (Client Secret) to connect to the different services.

To create a Service Account:

rhoas service-account create \
  --short-description event-bus-service-account \
  --file-format env \
  --overwrite \
  --output-file=./event-bus-service-account.env

NOTE: The output of that command includes a set of instructions to grant our service account access to the different services created. It is important to follow them.

One of the commands is to grant full access to produce and consume Kafka messages:

rhoas kafka acl grant-access --producer --consumer --service-account <SERVICE_ID> --topic all --group all

Another command grants read and write access to the currently selected Service Registry instance:

rhoas service-registry role add --role=manager --service-account <SERVICE_ID>

The event-bus-service-account.env file will contain the right values of our instances using the following environment variables:

- RHOAS_SERVICE_ACCOUNT_CLIENT_ID has the client id.

- RHOAS_SERVICE_ACCOUNT_CLIENT_SECRET has the client secret.

- RHOAS_SERVICE_ACCOUNT_OAUTH_TOKEN_URL has the OAuth Token service.

Again, these properties will be needed by our application at runtime, so we will store them in a Secret (it is sensitive data) in our namespace. This secret will be mounted in our application deployment manifest.

oc create secret generic event-bus-service-account --from-env-file=./event-bus-service-account.env

To use these properties locally (e.g., to run our application locally), we can load them with:

export $(grep -v '^#' event-bus-config.env | xargs -d '\n')
export $(grep -v '^#' event-bus-service-account.env | xargs -d '\n')

The different environment variables are referenced in the following files, so they are used automatically:

- pom.xml file has references to publish the schemas into the Service Registry

- application.properties file has references of Apache Kafka and Service Registry
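For loading the two .env files locally, note that `xargs -d` is a GNU extension and may not be available on macOS. As a hedged alternative, the sketch below sources the file with auto-export enabled. `load_env_file` is a hypothetical helper, and it assumes the files contain only trusted `NAME=value` lines whose values need no shell quoting, since the file is executed by the shell.

```shell
# load_env_file: export every NAME=value pair from an env file into the current shell.
# Usage: load_env_file <path-to-env-file>   (use ./name.env for files in the current directory)
load_env_file() {
  local file="$1"
  [ -r "$file" ] || { echo "cannot read ${file}" >&2; return 1; }
  set -a          # auto-export every variable assigned while this option is on
  . "$file"       # lines starting with '#' are treated as shell comments
  local rc=$?
  set +a          # restore the default behaviour
  return "$rc"
}

# Example:
# load_env_file ./event-bus-config.env
# load_env_file ./event-bus-service-account.env
```

Because the variables are exported, child processes such as `./mvnw` see them as well.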

To register the schemas in Service Registry running in OpenShift:

./mvnw clean generate-sources -Papicurio

The next screenshot shows the schemas registered in the Web Console:

To run locally:

./mvnw compile quarkus:dev

Our application should start successfully, connecting to the different application services provided in the cloud. The REST API section explains how to test the application.

Alternatively, you can deploy to the OpenShift platform using the Eclipse JKube Maven plug-ins.

There is a deployment definition in deployment.yml file to mount the ConfigMap and Secret with the right environment variables of our services.

This file will be used by JKube to deploy our application in OpenShift.

spec:
  template:
    spec:
      containers:
        - envFrom:
            - configMapRef:
                name: event-bus-config
            - secretRef:
                name: event-bus-service-account

To deploy the application using the OpenShift Maven Plug-In:

./mvnw package oc:resource oc:build oc:apply -Popenshift

If you want to deploy the native version of this project:

./mvnw package oc:resource oc:build oc:apply -Pnative,openshift -Dquarkus.native.container-build=true

The application exposes a simple REST API with the following operations:

- Send messages to a Topic

- Consume messages from a Topic

REST API is available from a Swagger UI at:

http://<OPENSHIFT_ROUTE_SERVICE_HOST>/swagger-ui

NOTE: This application is accessed through an OpenShift route. If you are running locally, the endpoint is http://localhost:8080

The following command gives you the route host in OpenShift:

echo http://$(oc get route kafka-clients-quarkus-sample -o jsonpath='{.spec.host}')

There are two groups to manage a topic from a Kafka Cluster.

- Producer: Send messageDTOS to a topic

- Consumer: Consume messageDTOS from a topic

Sample REST API to send messages to a Kafka Topic.

- topicName: Topic Name

- messageDTO: Message content based on a custom messageDTO:

Model:

MessageDTO {
  key (integer, optional): Key to identify this message,
  timestamp (string, optional, read only): Timestamp,
  content (string): Content,
  partition (integer, optional, read only): Partition number,
  offset (integer, optional, read only): Offset in the partition
}

Simple sample producer command for OpenShift:

curl -X POST http://$(oc get route kafka-clients-quarkus-sample -o jsonpath='{.spec.host}')/producer/kafka/messages \
  -H "Content-Type:application/json" -d '{"content": "Simple message"}' | jq

The output should be similar to:

{
  "content": "Simple message",
  "offset": 0,
  "partition": 0,
  "timestamp": 1666361768868
}

Sample REST API to consume messages from a Kafka Topic.

- topicName: Topic Name (Required)

- partition: Number of the partition to consume (Optional)

- commit: Commit the consumed messages. Values: true|false
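The sample commands in this section suggest that the topic name is the last path segment and that the consumer options travel as query parameters. As a sketch under that assumption, the hypothetical helpers below build both URLs from a base URL (the OpenShift route or http://localhost:8080). The default of `commit=true` is an illustration choice, not something defined by the application.

```shell
# producer_url: build the producer endpoint for a topic.
# Usage: producer_url <base_url> <topic>
producer_url() {
  local base="$1" topic="$2"
  # ${base%/} drops one trailing slash so the path is not doubled.
  printf '%s/producer/kafka/%s\n' "${base%/}" "$topic"
}

# consumer_url: build the consumer endpoint; the partition argument is optional.
# Usage: consumer_url <base_url> <topic> [commit] [partition]
consumer_url() {
  local base="$1" topic="$2" commit="${3:-true}" partition="${4:-}"
  local url="${base%/}/consumer/kafka/${topic}?commit=${commit}"
  if [ -n "$partition" ]; then
    url="${url}&partition=${partition}"
  fi
  printf '%s\n' "$url"
}

# Example for a local run:
# curl -X POST "$(producer_url http://localhost:8080 messages)" \
#   -H "Content-Type:application/json" -d '{"content": "Simple message"}'
# curl "$(consumer_url http://localhost:8080 messages true 0)"
```

Quoting the resulting URL in the curl call matters because of the `&` in the query string.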

Simple sample consumer command for OpenShift:

curl -v "http://$(oc get route kafka-clients-quarkus-sample -o jsonpath='{.spec.host}')/consumer/kafka/messages?commit=true&partition=0" | jq

The output should be similar to:

{
  "messages": [
    {
      "content": "Simple message",
      "offset": 0,
      "partition": 0,
      "timestamp": 1666361768868
    },
    ...
    {
      "content": "Simple message",
      "offset": 3,
      "partition": 0,
      "timestamp": 1666361833929
    }
  ]
}

That's all folks! You have deployed a full stack of components to produce and consume messages validated against a declared schema. Congratulations!

If you need to clean up your resources, it is very easy using the same tools, as follows:

Undeploy our application:

./mvnw oc:undeploy -Popenshift -DskipTests

Deleting the configuration resources:

oc delete cm event-bus-config
oc delete secret event-bus-service-account

Deleting the application services:

rhoas service-registry delete --name event-bus-service-registry -y
rhoas kafka delete --name event-bus -y

To delete your service account:

rhoas service-account delete -y \
  --id $(rhoas service-account list | grep event-bus-service-account | awk -F ' ' '{print $1}')

- Quarkus - Building applications with Maven

- Red Hat OpenShift Streams for Apache Kafka

- Connect to OpenShift application services with contexts

- Getting started with Red Hat OpenShift Streams for Apache Kafka

- Get started with OpenShift Service Registry

- My own Red Hat OpenShift Application Services CLI Cheat Sheet