AIStore is a lightweight object storage system with the capability to linearly scale out with each added storage node and a special focus on petascale deep learning.

AIStore (AIS for short) is a built-from-scratch, lightweight storage stack tailored for AI apps. AIS consistently shows balanced I/O distribution and linear scalability across arbitrary numbers of clustered servers, producing performance charts that look as follows:

The chart above covers 120 HDDs.

The ability to scale linearly with each added disk was, and remains, one of the main incentives behind AIStore. Much of the development is also driven by the idea of offloading dataset transformations to AIS clusters.
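Balanced distribution and scale-out both depend on how objects are mapped to storage nodes. The text here does not describe the placement algorithm, so the following is only a minimal sketch that uses rendezvous (highest-random-weight) hashing as an illustration. The node names, object names, and function names are all hypothetical.

```python
import hashlib
from collections import Counter


def hrw_owner(key: str, nodes: list[str]) -> str:
    """Return the node with the highest hash score for this object key."""
    def score(node: str) -> int:
        digest = hashlib.sha256(f"{node}/{key}".encode()).digest()
        return int.from_bytes(digest[:8], "big")
    return max(nodes, key=score)


def placement(keys: list[str], nodes: list[str]) -> dict[str, str]:
    """Map every object key to its owning node."""
    return {k: hrw_owner(k, nodes) for k in keys}


if __name__ == "__main__":
    keys = [f"shard-{i:06d}.tar" for i in range(10_000)]  # hypothetical object names
    nodes = ["t1", "t2", "t3", "t4"]                      # hypothetical node names

    before = placement(keys, nodes)
    after = placement(keys, nodes + ["t5"])               # add one node
    moved = [k for k in keys if before[k] != after[k]]

    print("objects per node:", dict(Counter(before.values())))
    print("objects moved after adding t5:", len(moved))
    print("all moved objects landed on t5:", all(after[k] == "t5" for k in moved))
```

With this scheme, adding a node relocates only the objects that the new node now owns; every other object stays where it was, which is what keeps rebalancing traffic proportional to the added capacity.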

Features:

- scale out with no downtime and no limitation;

- arbitrary number of extremely lightweight access points;

- highly-available control and data planes, end-to-end data protection, self-healing, n-way mirroring, k/m erasure coding;

- comprehensive native HTTP-based (S3-like) API, as well as a compliant Amazon S3 API to run unmodified S3 clients and apps;

- automated cluster rebalancing upon any changes in cluster membership, drive failures and attachments, bucket renames;

- ETL offload via offline (dataset to dataset) or inline (on-the-fly) transformations.
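To make the trade-off between n-way mirroring and k/m erasure coding concrete, here is a small arithmetic sketch. It assumes the textbook reading of k/m (k data slices plus m parity slices per object) and does not model how AIS actually lays data out on disk. The `Redundancy` type and both helper functions are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Redundancy:
    name: str
    raw_per_usable: float   # raw bytes stored per byte of user data
    losses_tolerated: int   # copies or slices that can be lost without losing data


def mirroring(n: int) -> Redundancy:
    """n full copies: n-fold raw capacity, survives the loss of n - 1 copies."""
    if n < 1:
        raise ValueError("n must be >= 1")
    return Redundancy(f"{n}-way mirror", float(n), n - 1)


def erasure_coding(k: int, m: int) -> Redundancy:
    """k data + m parity slices (assumed): (k + m) / k raw capacity, survives m losses."""
    if k < 1 or m < 0:
        raise ValueError("k must be >= 1 and m must be >= 0")
    return Redundancy(f"EC {k}/{m}", (k + m) / k, m)


if __name__ == "__main__":
    for scheme in (mirroring(3), erasure_coding(4, 2)):
        print(f"{scheme.name}: {scheme.raw_per_usable:.2f}x raw capacity, "
              f"tolerates {scheme.losses_tolerated} losses")
```

Under these assumptions, a 3-way mirror and a 4/2 erasure-coded layout both survive two losses, but the mirror needs twice as much raw capacity.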

Also, AIStore:

- can be deployed on any commodity hardware - effectively, on any Linux machine(s);

- can be immediately populated - i.e., hydrated - from any file-based data source (local or remote, ad-hoc/on-demand or via asynchronous batch);

- provides for easy Kubernetes deployment via a separate GitHub repo with

  - step-by-step deployment playbooks, and

  - AIS/K8s Operator;

- contains integrated CLI for easy management and monitoring;

- can ad-hoc attach remote AIS clusters, thus gaining immediate access to the respective hosted datasets (referred to as global namespace capability);

- natively reads, writes, and lists popular archives including tar, tar.gz, zip, and MessagePack;

  - distributed shuffle of those archival formats is also supported;

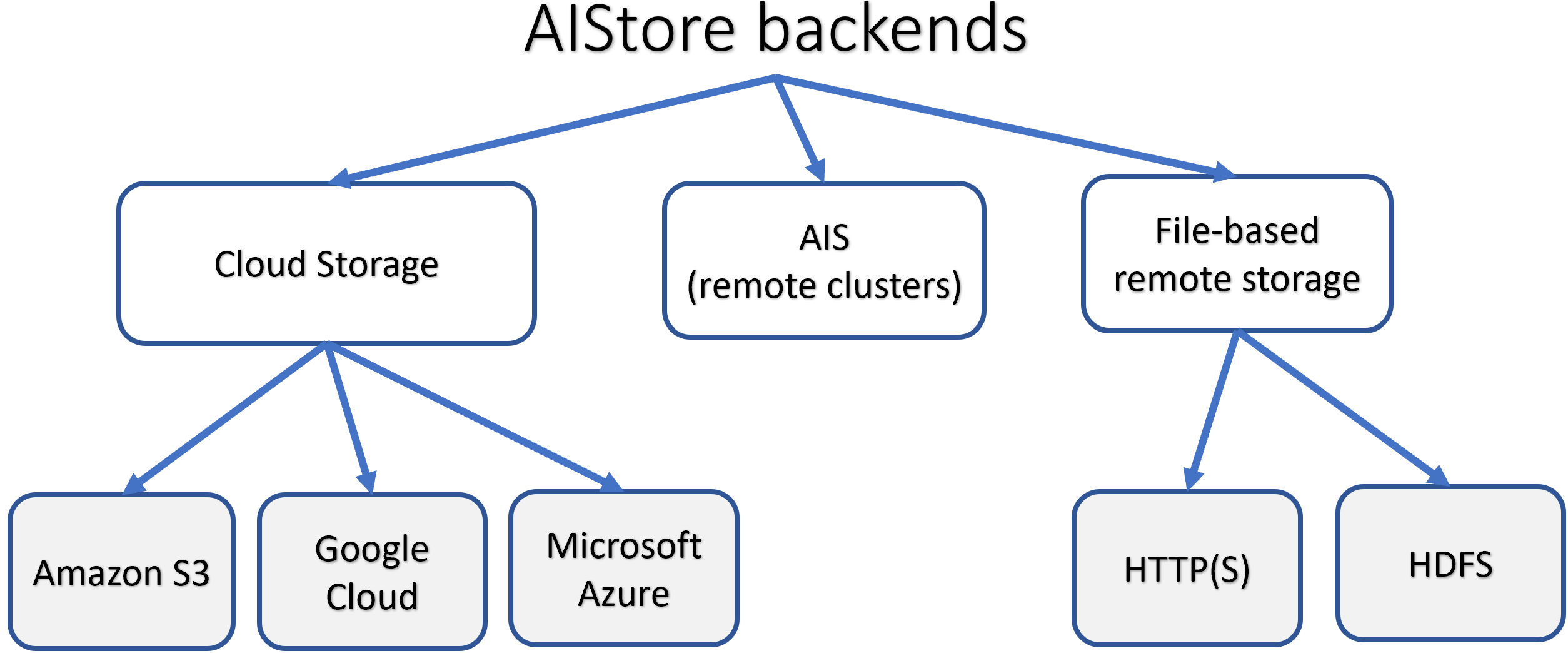

- fully supports Amazon S3, Google Cloud, and Microsoft Azure backends, providing unified global namespace simultaneously across multiple backends;

- can be deployed as LRU-based fast cache for remote buckets; can be populated on-demand and/or via `prefetch` and `download` APIs;

- can be used as a standalone highly-available protected storage;

- includes MapReduce extension for massively parallel resharding of very large datasets;

- supports existing PyTorch and TensorFlow-based training models.
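The "LRU-based fast cache for remote buckets" item in the list above can be pictured with a small, purely conceptual sketch: a cold read fetches the object from the remote backend and keeps a local copy, a prefetch warms the cache ahead of time, and the least recently used objects are evicted once capacity is exceeded. The class, its methods, and the `fetch_remote` callback are hypothetical and are not the AIS implementation or API.

```python
from collections import OrderedDict
from typing import Callable, Iterable


class LRUBucketCache:
    """Conceptual model of a size-bounded cache in front of a remote bucket."""

    def __init__(self, capacity_bytes: int, fetch_remote: Callable[[str], bytes]):
        self.capacity_bytes = capacity_bytes
        self.fetch_remote = fetch_remote      # stands in for a remote backend read
        self.used_bytes = 0
        self._objects = OrderedDict()         # name -> payload, oldest first

    def get(self, name: str) -> bytes:
        if name in self._objects:             # warm read: refresh recency
            self._objects.move_to_end(name)
            return self._objects[name]
        data = self.fetch_remote(name)        # cold read: populate on demand
        self._store(name, data)
        return data

    def prefetch(self, names: Iterable[str]) -> None:
        """Warm the cache ahead of time for objects that are not yet local."""
        for name in names:
            if name not in self._objects:
                self._store(name, self.fetch_remote(name))

    def _store(self, name: str, data: bytes) -> None:
        self._objects[name] = data
        self.used_bytes += len(data)
        # Evict least recently used objects until the cache fits again.
        while self.used_bytes > self.capacity_bytes and len(self._objects) > 1:
            _, evicted = self._objects.popitem(last=False)
            self.used_bytes -= len(evicted)


if __name__ == "__main__":
    cache = LRUBucketCache(8, fetch_remote=lambda name: b"x" * 4)
    for name in ("a", "b", "a", "c"):
        cache.get(name)
    print("cached bytes:", cache.used_bytes)
```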

AIS runs natively on Kubernetes and features an open format, hence the freedom to copy or move your data from AIS at any time using the familiar Linux tar(1), scp(1), rsync(1), and similar tools.

For AIStore white paper and design philosophy, for introduction to large-scale deep learning and the most recently added features, please see AIStore Overview (where you can also find six alternative ways to work with existing datasets). Videos and animated presentations can be found at videos.

Finally, getting started with AIS takes only a few minutes.

AIS deployment options, as well as intended (development vs. production vs. first-time) usages, are all summarized here.

Since prerequisites boil down to, essentially, having Linux with a disk, the deployment options range from an all-in-one container to a petascale bare-metal cluster of any size, and from a single VM to multiple racks of high-end servers. But practical use cases require, of course, further consideration and may include:

| Option | Objective |
|---|---|
| Local playground | AIS developers and development, Linux or Mac OS |
| Minimal production-ready deployment | This option utilizes preinstalled docker image and is targeting first-time users or researchers (who could immediately start training their models on smaller datasets) |
| Easy automated GCP/GKE deployment | Developers, first-time users, AI researchers |
| Large-scale production deployment | Requires Kubernetes and is provided via a separate repository: ais-k8s |

Further, there's the capability referred to as global namespace: given HTTP(S) connectivity, AIS clusters can be easily interconnected to "see" each other's datasets. Hence the idea of starting "small" and then gradually, incrementally building high-performance shared capacity.

For detailed discussion on supported deployments, please refer to Getting Started.

For performance tuning and preparing AIS nodes for bare-metal deployment, see performance.

When it comes to PyTorch, WebDataset is the preferred AIStore client.

WebDataset is a PyTorch Dataset (IterableDataset) implementation providing efficient access to datasets stored in POSIX tar archives.
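As a rough illustration of the tar-based layout that WebDataset reads, the sketch below writes and reads a small shard using only the Python standard library. It assumes the usual WebDataset convention that files sharing a basename (for example `000000.jpg` and `000000.cls`) form one training sample. The helper names and payloads are hypothetical, and the code does not use the WebDataset library or any AIS API.

```python
import io
import tarfile
from itertools import groupby


def write_shard(samples: dict[str, dict[str, bytes]]) -> bytes:
    """Pack {sample_key: {extension: payload}} into an in-memory tar archive."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for key in sorted(samples):
            for ext, payload in samples[key].items():
                info = tarfile.TarInfo(name=f"{key}.{ext}")
                info.size = len(payload)
                tar.addfile(info, io.BytesIO(payload))
    return buf.getvalue()


def read_samples(shard: bytes):
    """Yield (key, {extension: payload}) by grouping adjacent members with one basename."""
    with tarfile.open(fileobj=io.BytesIO(shard), mode="r") as tar:
        members = [m for m in tar if m.isfile()]
        for key, group in groupby(members, key=lambda m: m.name.split(".", 1)[0]):
            yield key, {m.name.split(".", 1)[1]: tar.extractfile(m).read() for m in group}


if __name__ == "__main__":
    shard = write_shard({
        "000000": {"jpg": b"<image bytes>", "cls": b"3"},   # placeholder payloads
        "000001": {"jpg": b"<image bytes>", "cls": b"7"},
    })
    for key, fields in read_samples(shard):
        print(key, sorted(fields))
```

Because a shard is an ordinary tar archive, the same file can be inspected with tar(1) or streamed sequentially by a training loop.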

Further references include the technical blog post titled "AIStore & ETL: Using WebDataset to train on a sharded dataset", which also provides easy step-by-step instructions.

- Getting Started

- Technical Blog

- API

- CLI

- Create, destroy, list, copy, rename, transform, configure, evict buckets

- GET, PUT, APPEND, PROMOTE, and other operations on objects

- Cluster and node management

- Mountpath (disk) management

- Attach, detach, and monitor remote clusters

- Start, stop, and monitor downloads

- Distributed shuffle

- User account and access management

- Job (aka `xaction`) management

- Tutorials

- Power tools and extensions

- Benchmarking and tuning Performance

- Buckets and Backend Providers

- Storage Services

- Cluster Management

- Configuration

- Observability

- For developers

- Getting started

- Docker

- Useful scripts

- Profiling, race-detecting, and more

- Batch operations

- Topics

License: MIT

Author: Alex Aizman (NVIDIA)