rmldnn is a command-line tool that makes deep-learning models easy to build and fast to train. It does not require knowledge of any deep-learning framework (PyTorch, TensorFlow, Keras, etc.) or any Python code. Using rmldnn, one can build deep-learning models on anything from a small single-GPU laptop to a large supercomputer with hundreds of GPUs or CPUs, without any prior knowledge of distributed computing.

In a nutshell, to launch a deep-learning training or inference run, one only needs to do the following at the command line:

rmldnn --config=config.json

The entire run is configured in the JSON file config.json. This file controls everything from hyperparameters to output file names. It is composed of several sections (JSON objects) that configure different aspects of the deep-learning run (e.g., network, optimizers, data loader, etc.). The configuration file is described further in the Concepts section.

- Why rmldnn?

- Benefits

- Who is this for?

- Who is this not for?

- Concepts

- Install

- Usage

- Applications

- Publications

- Talks

- Citation

Why rmldnn?

rmldnn was built from the start with two main design principles in mind:

- Ease-of-use: Simple, code-free, configuration-based command-line interface

- Uncompromising performance: Blazing-fast speeds and HPC-grade scalability on GPU/CPU clusters

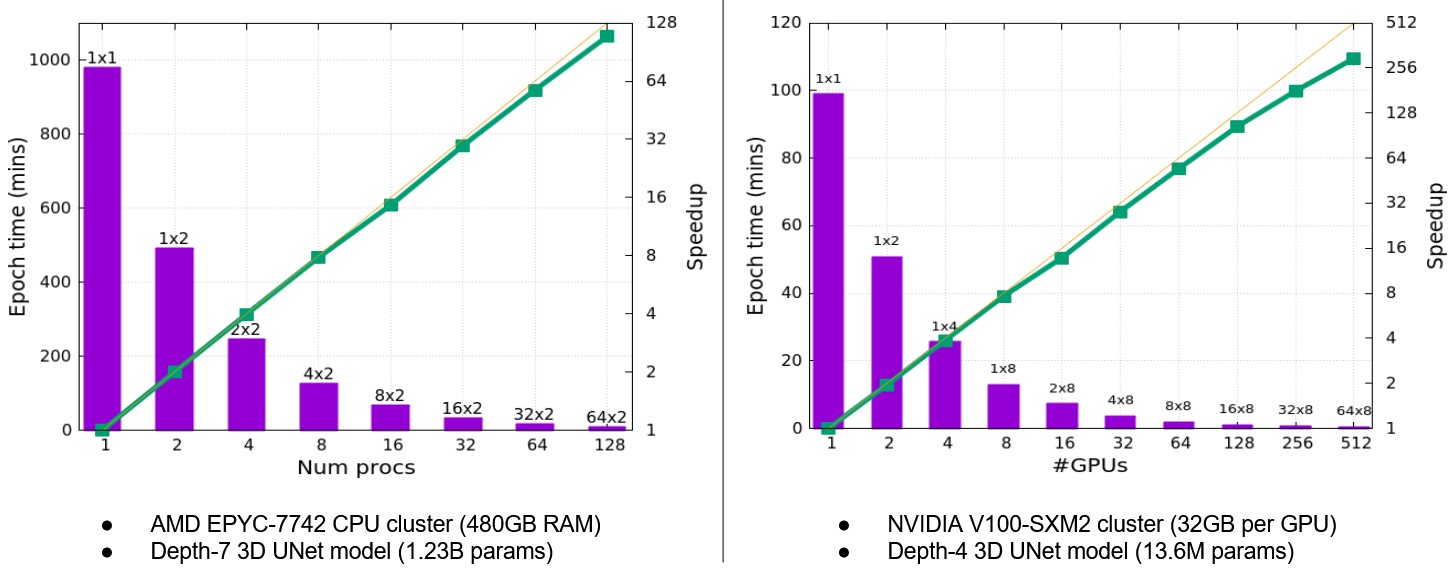

The plots below show rmldnn scalability results (time per epoch as a function of the number of processes) for training two different 3D Unet models on large-scale CPU and GPU clusters, achieving almost linear speedups on up to 128 CPU processes (7680 cores) and on 512 GPUs.

Benefits

- Does not require any knowledge of deep-learning frameworks (PyTorch, Keras, etc.) or Python.

- Is designed with scalability & performance in mind from the get-go.

- Is agnostic to machine and processor architectures, running seamlessly on both CPU and GPU systems.

- Runs on anything from one to hundreds of GPUs or CPUs without any required knowledge of distributed computing, automatically managing data partitioning and communication among nodes.

- Allows building models for different computer vision use-cases like image classification, object detection, image segmentation, autoencoders, and generative networks.

Who is this for?

- Researchers who are solving image classification, object detection, or image segmentation problems in their respective fields using deep learning.

- Data scientists with experience in scikit-learn and venturing into deep learning.

- Data scientists who need to scale their single-process deep-learning solutions to multiple GPUs or CPUs.

- Data scientists who want to train deep-learning models without writing boilerplate code in Python/PyTorch/TensorFlow.

- Newcomers to the field of machine learning who want to experiment with deep-learning without writing any code.

Who is this not for?

- Data scientists or developers who have experience writing more advanced deep-learning code and need maximum flexibility to implement their own custom layers, optimizers, loss functions, data loaders, etc.

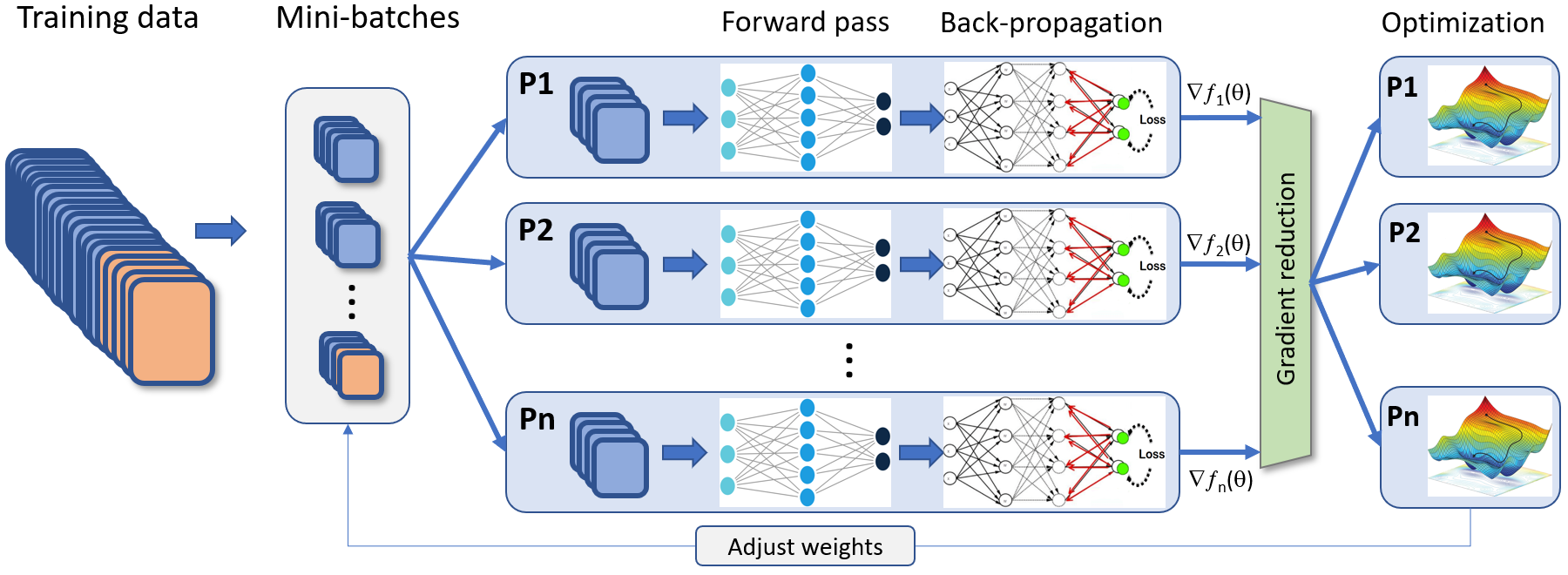

Concepts

rmldnn implements the data-parallel distributed deep-learning strategy, where multiple replicas of the model are simultaneously trained by independent processes on local subsets of data to minimize a common objective function, as depicted below.

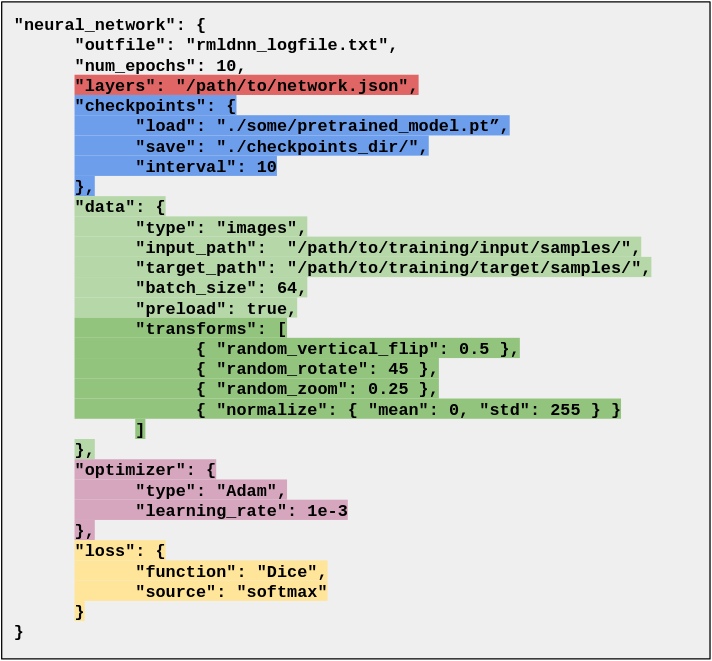
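To make the notion of "local subsets of data" concrete, here is a minimal shell sketch that splits a dataset into contiguous shards, one per process. It only illustrates the general data-parallel idea; it is not rmldnn's internal partitioning code, and the names `num_samples`, `num_procs`, and `shard_range` are made up for this example.

```shell
# Illustration only: assign each process a contiguous shard of the samples.
# rmldnn handles partitioning automatically; this is NOT its actual scheme.
num_samples=10   # hypothetical dataset size
num_procs=4      # hypothetical number of processes

# Print "start end" (end exclusive) of the shard owned by the given rank.
shard_range() {
    echo "$(( $1 * num_samples / num_procs )) $(( ($1 + 1) * num_samples / num_procs ))"
}

rank=0
while [ "$rank" -lt "$num_procs" ]; do
    set -- $(shard_range "$rank")
    echo "process $rank trains on samples [$1, $2)"
    rank=$(( rank + 1 ))
done
```

Each process would compute gradients on its own shard only, and the replicas stay in sync by combining those gradients across processes before each model update.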

The entire training (or inference) run is configured in the JSON file passed as input to rmldnn. This file controls everything from log file names to hyperparameter values. It contains a few JSON blocks that configure different modules: for example, a data block that configures the data loader (input location, data transforms, etc.), and optimizer and loss blocks that select the optimization method and loss function. The neural network is defined in a Keras file, which is passed in through the layers parameter. A typical config file example is shown below, and several other examples are available in the tutorials. Details about all rmldnn options and capabilities can be found in the documentation.
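As a rough picture of how these blocks fit together, the shell sketch below writes a small configuration file. Only the existence of the data, optimizer, and loss blocks and of the layers parameter comes from the description above; the enclosing `neural_network` object, every other key name, and all values and paths are assumptions made for illustration, so consult the rmldnn documentation for the actual schema.

```shell
# Write a HYPOTHETICAL rmldnn config. Key names such as "neural_network",
# "outfile", "num_epochs", "input_path", "batch_size", "type",
# "learning_rate" and "function" are assumptions, as are all values.
cat > config_sketch.json <<'EOF'
{
    "neural_network": {
        "outfile": "out_training.txt",
        "num_epochs": 10,
        "layers": "./layers.json",
        "data": {
            "type": "images",
            "input_path": "./data/train/",
            "batch_size": 64
        },
        "optimizer": {
            "type": "Adam",
            "learning_rate": 0.001
        },
        "loss": {
            "function": "NLL"
        }
    }
}
EOF

# The run would then be launched as in the introduction:
#   rmldnn --config=config_sketch.json
echo "wrote config_sketch.json"
```

Keeping the network definition in a separate Keras file means the same architecture can be reused across runs that differ only in data, optimizer, or loss settings.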

Install

rmldnn is available as Docker and Singularity containers, which substantially simplifies the installation process and increases portability. These containers have been tested on Linux Ubuntu 18.04, and should work on all popular Linux distros. In addition, rmldnn can be run natively on AWS or MS Azure cloud by spinning up a VM using one of our images.

- Docker

  - If not already available, follow instructions here to install Docker on your system.

  - Pull the latest rmldnn image from DockerHub:

        sudo docker pull rocketml/rmldnn:latest

- Singularity

  - If not already available, follow instructions here to install SingularityCE on your system.

  - The image can be created by pulling from DockerHub and converting to Singularity in the same step:

        sudo singularity build rmldnn.sif docker://rocketml/rmldnn:latest
        export RMLDNN_IMAGE=`realpath ./rmldnn.sif`

- Azure

- AWS

Usage

The examples below demonstrate how to run rmldnn on a Linux shell using either a container (Docker, Singularity) or a native build on the cloud. We will use the smoke tests in this repo and show how to run them on one or multiple processes.

First, clone the current repo:

git clone https://github.com/rocketmlhq/rmldnn

cd rmldnn/smoke_tests/

- Docker

  - We will mount the current directory as /home/ubuntu inside the container, and use that as our work directory.

  - Single-process:

    - CPU system:

          sudo docker run -u $(id -u):$(id -g) -v ${PWD}:/home/ubuntu -w /home/ubuntu --rm \
              rocketml/rmldnn:latest rmldnn --config=config_rmldnn_test.json

    - GPU system:

          sudo docker run --gpus=all -u $(id -u):$(id -g) -v ${PWD}:/home/ubuntu -w /home/ubuntu --rm \
              rocketml/rmldnn:latest rmldnn --config=config_rmldnn_test.json

  - Multi-process:

    - CPU system:

      Use the -np option of mpirun to indicate how many processes to launch and the variable OMP_NUM_THREADS to indicate how many threads each process will use. E.g., on a system with 32 CPU cores, one might want to launch 4 processes using 8 cores each:

          sudo docker run --cap-add=SYS_PTRACE -u $(id -u):$(id -g) -v ${PWD}:/home/ubuntu -w /home/ubuntu --rm \
              rocketml/rmldnn:latest mpirun -np 4 --bind-to none -x OMP_NUM_THREADS=8 \
              rmldnn --config=config_rmldnn_test.json

    - GPU system:

      Use the variable CUDA_VISIBLE_DEVICES to indicate which devices to use. E.g., on a 4-GPU system, the following command can be used to launch a 4x parallel run:

          sudo docker run --cap-add=SYS_PTRACE --gpus=all -u $(id -u):$(id -g) -v ${PWD}:/home/ubuntu -w /home/ubuntu --rm \
              rocketml/rmldnn:latest mpirun -np 4 -x CUDA_VISIBLE_DEVICES=0,1,2,3 \
              rmldnn --config=config_rmldnn_test.json

- Singularity

  - Set the environment variable RMLDNN_IMAGE to the location of the rmldnn Singularity image (see install section).

  - Single-process:

    - CPU system:

          singularity exec ${RMLDNN_IMAGE} rmldnn --config=./config_rmldnn_test.json

    - GPU system:

          singularity exec --nv ${RMLDNN_IMAGE} rmldnn --config=./config_rmldnn_test.json

  - Multi-process:

    - CPU system:

      Use the -np option of mpirun to indicate how many processes to launch and the variable OMP_NUM_THREADS to indicate how many threads each process will use:

          singularity exec ${RMLDNN_IMAGE} mpirun -np 4 --bind-to none -x OMP_NUM_THREADS=8 \
              rmldnn --config=./config_rmldnn_test.json

    - GPU system:

      Add the --nv option and set the variable CUDA_VISIBLE_DEVICES accordingly:

          singularity exec --nv ${RMLDNN_IMAGE} mpirun -np 4 -x CUDA_VISIBLE_DEVICES=0,1,2,3 \
              rmldnn --config=./config_rmldnn_test.json

- Native build on the cloud (Azure, AWS)

  - The Azure and AWS VM images contain a native build of rmldnn on Ubuntu 18.04 Linux, which can be executed directly from the shell.

  - Single-process:

    - CPU or GPU system:

          rmldnn --config=./config_rmldnn_test.json

  - Multi-process:

    - CPU system:

      The -np option of mpirun indicates how many processes to launch and the variable OMP_NUM_THREADS controls how many threads per process:

          mpirun -np 4 --bind-to none -x OMP_NUM_THREADS=8 \
              rmldnn --config=./config_rmldnn_test.json

    - GPU system:

      The variable CUDA_VISIBLE_DEVICES indicates which devices to use:

          mpirun -np 4 -x CUDA_VISIBLE_DEVICES=0,1,2,3 \
              rmldnn --config=./config_rmldnn_test.json
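The process and thread counts in the multi-process commands above follow simple arithmetic: threads per process is the core count divided by the number of processes, and the GPU device list enumerates one device per process. The sketch below derives both and prints the resulting mpirun command lines without executing them. The helper function names are hypothetical and not part of rmldnn; only the mpirun options already shown above are used.

```shell
# Dry-run helpers: print the mpirun command lines instead of running them.
# Function names are illustrative only, not part of rmldnn.

# Threads per process = total cores / number of processes (integer division).
threads_per_process() {
    echo $(( $1 / $2 ))
}

# Comma-separated device list 0,1,...,N-1 for CUDA_VISIBLE_DEVICES.
gpu_device_list() {
    list=0
    i=1
    while [ "$i" -lt "$1" ]; do
        list="$list,$i"
        i=$(( i + 1 ))
    done
    echo "$list"
}

# Arguments: total_cores num_procs config_file
cpu_launch_cmd() {
    echo "mpirun -np $2 --bind-to none -x OMP_NUM_THREADS=$(threads_per_process "$1" "$2") rmldnn --config=$3"
}

# Arguments: num_gpus config_file (one process per GPU)
gpu_launch_cmd() {
    echo "mpirun -np $1 -x CUDA_VISIBLE_DEVICES=$(gpu_device_list "$1") rmldnn --config=$2"
}

cpu_launch_cmd 32 4 ./config_rmldnn_test.json
gpu_launch_cmd 4 ./config_rmldnn_test.json
```

On a Linux machine the core count could be taken from `nproc` instead of being hard-coded, e.g. `cpu_launch_cmd "$(nproc)" 4 ./config_rmldnn_test.json`. For container runs, the printed command would be placed after the `docker run ... rocketml/rmldnn:latest` or `singularity exec ${RMLDNN_IMAGE}` prefix shown above.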

Applications

Please take a look at the tutorials available in this repo for examples of how to use rmldnn to tackle real-world deep-learning problems in the areas of:

- Image classification

- 2D and 3D image segmentation

- Self-supervised learning

- Transfer learning

- Hyper-parameter optimization

- Neural PDE solvers

- Object detection

- Generative adversarial networks (GANs)

Publications

- Sergio Botelho, Ameya Joshi, Biswajit Khara, Vinay Rao, Soumik Sarkar, Chinmay Hegde, Santi Adavani, and Baskar Ganapathysubramanian. Deep generative models that solve PDEs: Distributed computing for training large data-free models, 2020 IEEE/ACM Workshop on Machine Learning in High Performance Computing Environments (MLHPC) and Workshop on Artificial Intelligence and Machine Learning for Scientific Applications (AI4S), pp. 50-63. IEEE, 2020. (paper)

- Aditya Balu, Sergio Botelho, Biswajit Khara, Vinay Rao, Soumik Sarkar, Chinmay Hegde, Adarsh Krishnamurthy, Santi Adavani, and Baskar Ganapathysubramanian. Distributed multigrid neural solvers on megavoxel domains, Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis (SC '21). Association for Computing Machinery, New York, NY, USA, Article 49, 1–14. (paper)

- Rade, J., Balu, A., Herron, E., Jignasu, A., Botelho, S., Adavani, S., Sarkar, S., Ganapathysubramanian, B. and Krishnamurthy, A., 2021, November. Multigrid Distributed Deep CNNs for Structural Topology Optimization, AAAI 2022 Workshop on AI for Design and Manufacturing (ADAM). (paper)

- Botelho, S., Das, V., Vanzo, D., Devarakota, P., Rao, V. and Adavani, S., 2021, November. 3D seismic facies classification on CPU and GPU HPC clusters, SPE/AAPG/SEG Asia Pacific Unconventional Resources Technology Conference. (paper)

- Mukherjee, S., Lelièvre, P., Farquharson, C. and Adavani, S., 2021, September. Three-dimensional inversion of geophysical field data on an unstructured mesh using deep learning neural networks, applied to magnetic data, First International Meeting for Applied Geoscience & Energy (pp. 1465-1469). Society of Exploration Geophysicists. (paper)

- Mukherjee, Souvik, Ronald S. Bell, William N. Barkhouse, Santi Adavani, Peter G. Lelièvre, and Colin G. Farquharson. High-resolution imaging of subsurface infrastructure using deep learning artificial intelligence on drone magnetometry. The Leading Edge 41, no. 7 (2022): 462-471. (paper)

Talks

- Scientific Machine Learning talk at Rice Energy HPC conference 2022

- CVPR 2021 Distributed Deep Learning Workshop

- SC21 Scientific Machine Learning Tutorial

- 3D Seismic Facies Classification using Distributed Deep Learning

Citation

Please cite rmldnn in your publications if it helps your research:

@software{rmldnn,

author = {{RocketML Inc}},

title = {Rocket{ML} {Deep} {Neural} {Networks}},

url = {https://rocketml.net},

howpublished = {\url{https://github.com/rocketmlhq/rmldnn}}

}