A simple Python package for querying Apache Spark's REST API:

- simple interface

- returns DataFrames of task metrics for easy slicing and dicing

- easily access executor logs for post-processing.

spark-pyrest uses pandas.
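For orientation, Spark's monitoring REST API is served under `/api/v1` on the application UI. The sketch below only builds the kind of URLs such a query would hit; it is not taken from spark-pyrest's source. The helper name `spark_api_url`, the `localhost` host, port 4040 (the default UI port of a running application), and the stage attempt id `0` are all assumptions for illustration.

```python
# Illustrative sketch only: builds URLs for Spark's monitoring REST API.
# `spark_api_url` is a hypothetical helper, not part of spark-pyrest.

def spark_api_url(host, port, *parts):
    """Join path components onto the /api/v1 root of a Spark UI."""
    base = "http://{}:{}/api/v1".format(host, port)
    return "/".join([base] + [str(p) for p in parts])

# Assumed values: localhost, port 4040, stage attempt id 0.
app_id = "app-20170420091222-0000"
apps_url = spark_api_url("localhost", 4040, "applications")
stages_url = spark_api_url("localhost", 4040, "applications", app_id, "stages")
tasks_url = spark_api_url("localhost", 4040, "applications", app_id,
                          "stages", 1, 0, "taskList")

print(apps_url)
print(stages_url)
print(tasks_url)
```

Each of these endpoints returns JSON, which is what makes it natural to load task metrics into a pandas DataFrame.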

To install:

$ git clone https://github.com/rokroskar/spark-pyrest.git

$ cd spark-pyrest

$ python setup.py install

from spark_pyrest import SparkPyRest

spr = SparkPyRest(host)

spr.app

u'app-20170420091222-0000'

spr.stages

[(2, u'groupByKey '),

(1, u'partitionBy'),

(0, u'partitionBy'),

(4, u'runJob at PythonRDD.scala:441'),

(3, u'distinct')]

spr.tasks(1)

| | bytesWritten | executorId | executorRunTime | host | localBytesRead | remoteBytesRead | taskId |
|---|---|---|---|---|---|---|---|
| 0 | 3508265 | 33 | 354254 | 172.19.1.124 | 0 | 112 | 25604 |
| 1 | 3651003 | 56 | 353554 | 172.19.1.74 | 0 | 112 | 24029 |
| 2 | 3905955 | 9 | 347724 | 172.19.1.62 | 0 | 111 | 19719 |

host_tasks = spr.tasks(1)[['host','bytesWritten','remoteBytesRead','executorRunTime']].groupby('host')

host_tasks.describe()

| host | | bytesWritten | executorRunTime | remoteBytesRead |
|---|---|---|---|---|
| 172.19.1.100 | count | 1.270000e+02 | 127.000000 | 1.270000e+02 |
| | mean | 6.436659e+06 | 337587.913386 | 3.230165e+06 |
| | std | 1.744411e+06 | 114722.662028 | 5.590263e+05 |
| | min | 0.000000e+00 | 0.000000 | 1.098278e+06 |
| | 25% | 6.426009e+06 | 292290.000000 | 2.967360e+06 |
| | 50% | 6.805269e+06 | 348174.000000 | 3.315189e+06 |
| | 75% | 7.160529e+06 | 420091.500000 | 3.582200e+06 |
| | max | 8.050828e+06 | 500831.000000 | 4.775107e+06 |
| 172.19.1.101 | count | 1.300000e+02 | 130.000000 | 1.300000e+02 |
| | mean | 6.470575e+06 | 335401.584615 | 3.178066e+06 |
| | std | 1.725755e+06 | 115268.286668 | 6.332040e+05 |
| | min | 0.000000e+00 | 0.000000 | 0.000000e+00 |
| | 25% | 6.534020e+06 | 290462.750000 | 2.965009e+06 |
| | 50% | 6.880597e+06 | 356160.000000 | 3.327600e+06 |
| | 75% | 7.197086e+06 | 407338.750000 | 3.558479e+06 |
| | max | 7.983406e+06 | 515372.000000 | 4.526526e+06 |

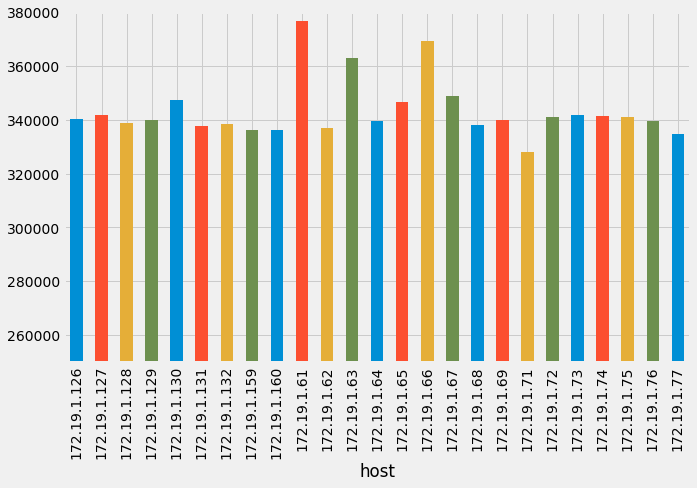

mean_runtime = host_tasks.mean()['executorRunTime']

mean_runtime.plot(kind='bar')If your application prints its own metrics to

stdout/stderr, you need to be able to grab the executor logs to see these metrics. The logs are located on the hosts that make up your cluster, so accessing them programmatically can be tedious. You can see them through the Spark web UI of course, but processing them that way is not really useful. Using the executor_log method of SparkPyRest, you can grab the full contents

of the log for easy post-processing/data extraction.

log = spr.executor_log(0)

print(log)

==== Bytes 0-301839 of 301839 of /tmp/work/app-20170420091222-0000/0/stderr ====

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

17/04/20 09:12:23 INFO CoarseGrainedExecutorBackend: Started daemon with process name: 14086@x04y08

17/04/20 09:12:23 INFO SignalUtils: Registered signal handler for TERM

17/04/20 09:12:23 INFO SignalUtils: Registered signal handler for HUP

17/04/20 09:12:23 INFO SignalUtils: Registered signal handler for INT

17/04/20 09:12:23 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/04/20 09:12:23 INFO SecurityManager: Changing view acls to: roskar

17/04/20 09:12:23 INFO SecurityManager: Changing modify acls to: roskar

17/04/20 09:12:23 INFO SecurityManager: Changing view acls groups to:

17/04/20 09:12:23 INFO SecurityManager: Changing modify acls groups to:
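As a sketch of the kind of post-processing this enables, the snippet below parses log4j-style lines like the ones shown above into records and counts them by level. It assumes the log is available as a plain string (here a few lines copied from the output above stand in for the value returned by `spr.executor_log(0)`). The `parse_log` helper and the regular expression are illustrative and not part of spark-pyrest.

```python
import re
from collections import Counter

# Sample lines copied from the executor log above; in practice `log`
# would be the string obtained from spr.executor_log(0) (assumed to be a str).
log = """==== Bytes 0-301839 of 301839 of /tmp/work/app-20170420091222-0000/0/stderr ====
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
17/04/20 09:12:23 INFO SignalUtils: Registered signal handler for TERM
17/04/20 09:12:23 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
17/04/20 09:12:23 INFO SecurityManager: Changing view acls to: roskar
"""

# Matches lines of the form "yy/MM/dd HH:mm:ss LEVEL Logger: message".
LINE_RE = re.compile(
    r"^(\d{2}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) (\w+) ([\w.$]+): (.*)$"
)

def parse_log(text):
    """Return one dict per log line that matches LINE_RE; skip the rest."""
    records = []
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if match:
            timestamp, level, logger, message = match.groups()
            records.append({
                "timestamp": timestamp,
                "level": level,
                "logger": logger,
                "message": message,
            })
    return records

records = parse_log(log)
levels = Counter(record["level"] for record in records)
print(levels)
```

The list of dicts can be passed directly to `pandas.DataFrame(records)` if you want to slice the log the same way as the task metrics.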

Please submit an issue if you discover a bug or have a feature request! Pull requests are also very welcome.