A Stable Diffusion desktop frontend with inpainting, img2img and more!


- Enter a prompt.

- In the square with the transparent texture, click and scroll the mouse wheel to set the image size.
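Clicking and scrolling can be pictured as a square box centred on the click point, whose side grows or shrinks by a fixed step per wheel notch. The following is a minimal sketch of that behaviour, not UnstableFusion's actual code. The 64-pixel step, the 64 to 1024 pixel range, the 512-pixel default and the names `SelectionBox` and `on_wheel` are all hypothetical. The value of 120 units per notch follows Qt's `QWheelEvent.angleDelta()` convention for a standard mouse wheel.

```python
from dataclasses import dataclass
from typing import Tuple

WHEEL_UNITS_PER_NOTCH = 120    # Qt: angleDelta().y() is 120 per standard wheel notch
STEP = 64                      # hypothetical: pixels added/removed per notch
MIN_SIZE, MAX_SIZE = 64, 1024  # hypothetical clamp range


@dataclass
class SelectionBox:
    """Hypothetical square selection box centred on the last click."""
    center_x: int
    center_y: int
    size: int = 512  # hypothetical default side length

    def on_wheel(self, angle_delta_y: int) -> None:
        """Grow on scroll up (positive delta), shrink on scroll down."""
        # int() truncates toward zero, so partial notches in either direction are ignored
        notches = int(angle_delta_y / WHEEL_UNITS_PER_NOTCH)
        self.size = max(MIN_SIZE, min(MAX_SIZE, self.size + notches * STEP))

    def rect(self) -> Tuple[int, int, int, int]:
        """Return (left, top, width, height) of the box."""
        half = self.size // 2
        return (self.center_x - half, self.center_y - half, self.size, self.size)


box = SelectionBox(center_x=400, center_y=400)
box.on_wheel(240)   # two notches up
print(box.rect())   # (80, 80, 640, 640)
```

Clamping keeps the box within a usable range no matter how far the wheel is turned.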

If nothing happens when you click the Generate button, look at the console output for errors.

Problem I encountered:

Access to model runwayml/stable-diffusion-v1-5 is restricted and you are not in the authorized list. Visit https://huggingface.co/runwayml/stable-diffusion-v1-5 to ask for access.

Easy fix: go to the URL and request access.

sudo apt update

sudo apt upgrade -y

sudo apt install python3-pip -y

sudo pip install --upgrade pip

Close and reopen your shell.

pip -V

pip 22.3.1 from /usr/local/lib/python3.8/dist-packages/pip (python 3.8)

Note: there is one small difference from the dependency list further down. The pip package for pytorch is called torch.

python3 -m pip install --upgrade PyQt5 numpy torch Pillow opencv-python requests flask diffusers transformers protobuf qasync httpx

git clone REPO_URL (I encourage you to fork)

cd LOCAL_REPO_PATH

python3 unstablefusion.py

If it works, congratulations! If not, here are the three problems I ran into and how I fixed them.

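Troubleshooting 1 (Linux, Qt "xcb" compatibility error): to solve the xcb compatibility issue, replace opencv-python with opencv-python-headless, which has no GUI functionality of its own:

pip uninstall opencv-python

pip install opencv-python-headless

Close the shell and try again.

You may then get a similar-looking but more specific error. If so, see the next step.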
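All of the OpenCV wheels on PyPI install the same cv2 module, and the opencv-python project recommends installing only one of them per environment. If the swap above does not seem to take effect, you can check which OpenCV distributions are actually installed. The sketch below uses only the standard library (`importlib.metadata`, Python 3.8+).

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict

# The four OpenCV distributions on PyPI; all of them provide the same `cv2` module.
OPENCV_DISTS = (
    "opencv-python",
    "opencv-python-headless",
    "opencv-contrib-python",
    "opencv-contrib-python-headless",
)


def installed_opencv() -> Dict[str, str]:
    """Map each installed OpenCV distribution name to its version string."""
    found = {}
    for name in OPENCV_DISTS:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            pass  # this distribution is not installed in the current environment
    return found


if __name__ == "__main__":
    dists = installed_opencv()
    print(dists or "no OpenCV package installed")
    if len(dists) > 1:
        print("More than one OpenCV package is installed; keep only one "
              "(for example opencv-python-headless).")
```

If this reports both opencv-python and opencv-python-headless, uninstall both and reinstall only the headless one.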

Troubleshooting 2 (Linux: Could not load the Qt platform plugin "xcb" in "" even though it was found.)

I needed to install one more dependency:

sudo apt-get install libxcb-xinerama0

Troubleshooting 3 (Linux under WSL): the problem may be that you do not yet have a way to run GUI apps.

- Follow https://techcommunity.microsoft.com/t5/windows-dev-appconsult/running-wsl-gui-apps-on-windows-10/ba-p/1493242 to install and configure VcXsrv Windows X Server.

- Follow the configuration as described.

- After launching and configuring VcXsrv, you should see the server running in your taskbar.

# DISPLAY var for vcXsrv

export DISPLAY=$(ip route | awk '/^default/{print $3}'):0.0

echo xfce4-session > ~/.xsession
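The export line works in two steps. First, it takes the third field of the `default` line printed by `ip route`, which is the gateway address and in this setup points at the Windows host running VcXsrv. Then it appends `:0.0` (display 0, screen 0). The sketch below reproduces the same logic in Python for illustration. The sample routing table is made up, and the function name is hypothetical.

```python
def display_from_ip_route(ip_route_output: str, display: int = 0, screen: int = 0) -> str:
    """Mimic: ip route | awk '/^default/{print $3}' followed by ':0.0'."""
    for line in ip_route_output.splitlines():
        if line.startswith("default"):
            fields = line.split()
            # awk's $3 is the third whitespace-separated field: the gateway IP
            return f"{fields[2]}:{display}.{screen}"
    raise ValueError("no default route found")


sample = (
    "default via 172.27.117.1 dev eth0\n"
    "172.27.112.0/20 dev eth0 proto kernel scope link src 172.27.117.5\n"
)
print(display_from_ip_route(sample))  # 172.27.117.1:0.0
```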

Close and reopen your shell. The following command should then print your host machine's IP:

echo $DISPLAY

> 172.27.117.1:0.0 (yours may be different)

To check that GUI apps now work, install x11-apps and run xclock:

sudo apt-get install x11-apps

xclock

cd LOCAL_REPO_PATH

python3 unstablefusion.py

Success! :D

To run Stable Diffusion locally:

- Install the dependencies (for example using pip). The dependencies include: PyQt5, numpy, pytorch (pip package: torch), Pillow, opencv-python, requests, flask, diffusers, transformers, protobuf.

If you want to run Stable Diffusion locally on Windows, install from requirements-localgpu-win64.txt instead:

pip install -r requirements-localgpu-win64.txt

- Create a huggingface account and an access token, if you haven't done so already. Request access to the Stable Diffusion model at CompVis/stable-diffusion-v1-4.

- Clone this repository and run

python unstablefusion.py
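Several of the dependencies are imported under a different name from the one you pip-install. For example, Pillow is imported as PIL, opencv-python as cv2, pytorch as torch, and protobuf as google.protobuf. The standard-library sketch below reports which packages are still missing before you launch unstablefusion.py. It is not part of the project. The mapping covers the dependency list above plus qasync and httpx from the earlier install command.

```python
import importlib.util
from typing import Dict, List

# pip package name -> module name used in `import` statements
REQUIRED = {
    "PyQt5": "PyQt5",
    "numpy": "numpy",
    "torch": "torch",
    "Pillow": "PIL",
    "opencv-python": "cv2",
    "requests": "requests",
    "flask": "flask",
    "diffusers": "diffusers",
    "transformers": "transformers",
    "protobuf": "google.protobuf",
    "qasync": "qasync",
    "httpx": "httpx",
}


def missing_packages(required: Dict[str, str] = REQUIRED) -> List[str]:
    """Return the pip names whose modules cannot be located."""
    missing = []
    for pip_name, module in required.items():
        try:
            found = importlib.util.find_spec(module) is not None
        except ModuleNotFoundError:
            # find_spec("a.b") imports the parent "a" and raises if it is absent
            found = False
        if not found:
            missing.append(pip_name)
    return missing


if __name__ == "__main__":
    todo = missing_packages()
    if todo:
        print("python3 -m pip install --upgrade " + " ".join(todo))
    else:
        print("All dependencies found.")
```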

-

Install the dependencies (see the previous section)

-

Open this notebook and run it (you need to enter your huggingface token when asked).

-

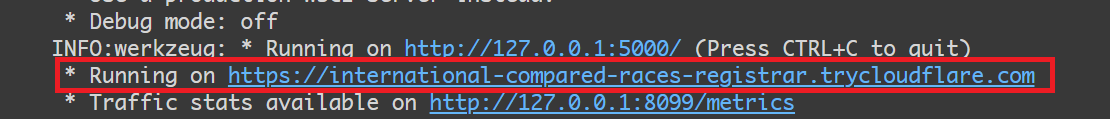

When you run the last cell, you will be given a url like this:

-

Run

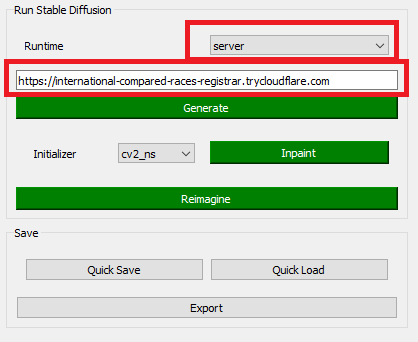

python unstablefusion.py -

In the runtime section, select server and enter the address you copied in the server field. Like this:

- You can select a box by clicking on the screen. All of your operations will be limited to this box. You can resize the box using the mouse wheel.

- You can erase the selected box by right-clicking, or paint into it by middle-clicking (the paint color can be configured using the Select Color button).

- To generate an image, select the destination box, enter the prompt in the prompt text field and press the Generate button (inpainting and reimagining work similarly).

- You can undo/redo by pressing the undo/redo buttons or pressing Control+Z/Control+Shift+Z on your keyboard. In fact, most other functions are bound to keys as well (you can configure them in the keys.json file).

- The Increase Size/Decrease Size buttons adjust the size of the image by adding/removing extra space in the margins, not by scaling. This is useful when you want to add more detail around an image.

- You can open a scratchpad by pressing the Show Scratchpad button. This window can do everything the main window can (using keyboard shortcuts only). The selected box in the scratchpad will be mirrored and scaled into the selected box in the main window. This is useful when you want to import another generated or local image into the main image.
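The difference between growing the canvas and scaling can be sketched with NumPy. Padding leaves every existing pixel untouched and surrounds the image with empty area that generation can later fill. Scaling, by contrast, would resample the existing pixels. This is a minimal sketch, not the project's implementation. It assumes an RGBA array and new margins that are fully transparent. The function names and the 64-pixel margin in the example are hypothetical.

```python
import numpy as np


def increase_size(image: np.ndarray, margin: int) -> np.ndarray:
    """Add `margin` fully transparent pixels on every side of an RGBA image (H, W, 4)."""
    if margin < 0:
        raise ValueError("margin must be non-negative")
    # pad rows and columns only; constant 0 in all four channels = transparent black
    return np.pad(image, ((margin, margin), (margin, margin), (0, 0)),
                  mode="constant", constant_values=0)


def decrease_size(image: np.ndarray, margin: int) -> np.ndarray:
    """Crop `margin` pixels from every side (the inverse of increase_size)."""
    h, w = image.shape[:2]
    if margin < 0 or 2 * margin >= min(h, w):
        raise ValueError("margin must be non-negative and smaller than half the image")
    return image[margin:h - margin, margin:w - margin]


img = np.full((512, 512, 4), 255, dtype=np.uint8)  # opaque white placeholder image
bigger = increase_size(img, 64)
print(bigger.shape)  # (640, 640, 4)
```

Because the original pixels are copied unchanged into the centre, decreasing by the same margin gives back exactly the original image.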

Admittedly, the UI for advanced inpainting is a little unintuitive. Here is how it works:

- Clear the part of the image that you want to inpaint (just like normal inpainting).

- Select the target box (again, like normal inpainting), but instead of clicking the Inpaint button, click the Save Mask button. From now on, the current mask and the currently selected box will be used for inpainting, no matter how you change the box or the image (until you press the Forget Mask button).

- This means you are free to edit the initial image as you please using other operations. For example, you can autofill the masked area using the Autofill button, paint the target area manually, or paste any image from the scratchpad. This initial image is used to initialize the masked part, so it heavily affects the final result. By controlling it, you can steer the final result however you like.
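Save Mask freezes two things at the moment you click it: the selected box and the mask of cleared pixels inside it. The image itself stays editable, and at generation time the current image supplies the initial content for the frozen mask. The NumPy sketch below illustrates that idea under stated assumptions. The mask is taken to be the pixels whose alpha is 0. The names `SavedMask`, `save_mask` and `inpaint_inputs` are hypothetical and are not UnstableFusion's API. How the two arrays are then passed to the model is left out.

```python
from dataclasses import dataclass
from typing import Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # (left, top, width, height) in image pixels


@dataclass(frozen=True)
class SavedMask:
    """Hypothetical record created by "Save Mask"."""
    box: Box
    mask: np.ndarray  # bool array (height, width); True = area to inpaint


def save_mask(image: np.ndarray, box: Box) -> SavedMask:
    """Freeze the box and the cleared (alpha == 0, assumed) pixels inside it."""
    left, top, w, h = box
    # the comparison builds a new array, so later edits to `image` do not change it
    cleared = image[top:top + h, left:left + w, 3] == 0
    return SavedMask(box=box, mask=cleared)


def inpaint_inputs(image: np.ndarray, saved: SavedMask) -> Tuple[np.ndarray, np.ndarray]:
    """Return (initial RGB crop from the *current* image, frozen mask)."""
    left, top, w, h = saved.box
    init = image[top:top + h, left:left + w, :3].copy()
    return init, saved.mask


img = np.full((8, 8, 4), 255, dtype=np.uint8)
img[2:4, 2:4, 3] = 0                      # "clear" a 2x2 hole
saved = save_mask(img, (0, 0, 8, 8))
img[2:4, 2:4] = [255, 0, 0, 255]          # later edit: paint the hole red
init, mask = inpaint_inputs(img, saved)
print(int(mask.sum()), init[2, 2].tolist())  # 4 [255, 0, 0]
```

The example shows why painting or autofilling after saving matters. The mask still covers the original hole, but the initial content inside it is now whatever you painted there.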