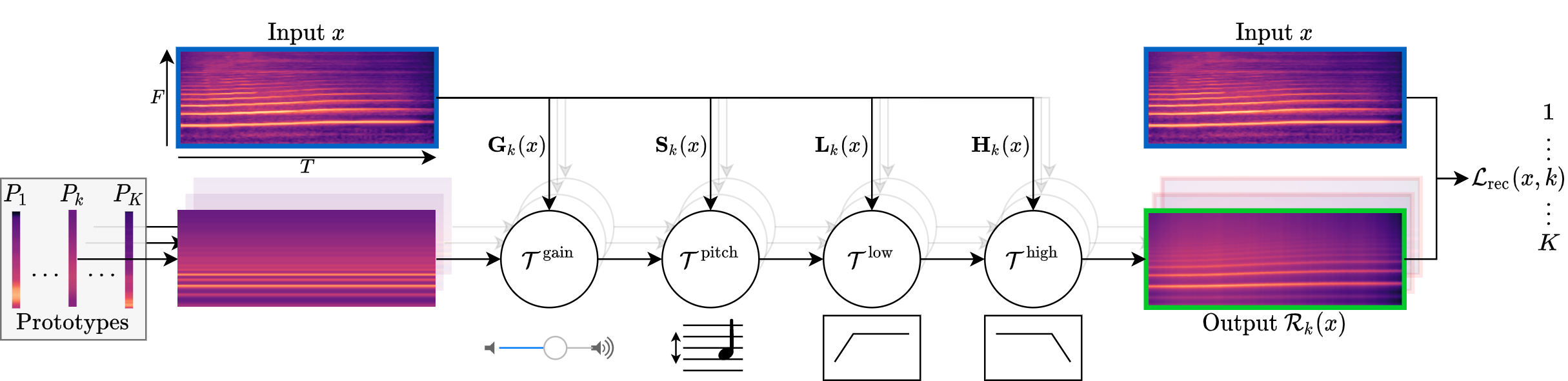

Official PyTorch implementation of the paper "A Model You Can Hear: Audio Identification with Playable Prototypes".

Please visit our webpage for more details.

git clone git@github.com:romainloiseau/a-model-you-can-hear.git --recursive

This implementation uses PyTorch.

Optional: some monitoring routines are implemented with tensorboard.

Note: this implementation uses pytorch_lightning for all training routines and hydra to manage configuration files and command line arguments.

To train our best model, launch:

python main.py \
+experiment={$dataset}_ours_{$supervision}

with dataset in {libri, sol} and supervision in {sup, unsup}.
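The four experiment names implied by the pattern above can be enumerated with a short script. This is a minimal sketch, assuming the names follow the {dataset}_ours_{supervision} pattern literally; the helper `experiment_names` is hypothetical and not part of the repository.

```python
from itertools import product

# Values listed in this README for the two placeholders.
DATASETS = ("libri", "sol")
SUPERVISIONS = ("sup", "unsup")


def experiment_names():
    """Return every {dataset}_ours_{supervision} combination (hypothetical helper)."""
    return [f"{d}_ours_{s}" for d, s in product(DATASETS, SUPERVISIONS)]


if __name__ == "__main__":
    # Print one training command per combination; nothing is executed here.
    for name in experiment_names():
        print(f"python main.py +experiment={name}")
```

Printing the commands rather than running them makes it easy to check that each name matches an existing experiment config before launching a training run.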

To test the model, launch:

python test.py \
+experiment={$dataset}_ours_{$supervision} \
model.load_weights="/path/to/trained/weights.ckpt"

Note: pretrained weights to come in pretrained_models/
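The test invocation can also be assembled programmatically, for instance to evaluate several checkpoints in a loop. The sketch below only builds the argument list and does not run anything; `build_test_command` is a hypothetical helper, and the checkpoint path is the placeholder from the command above. In Hydra's override syntax, the `+` prefix adds a key that is not yet in the config, while a plain `key=value` overrides an existing one.

```python
import shlex

# Values listed in this README for the two placeholders.
VALID_DATASETS = {"libri", "sol"}
VALID_SUPERVISIONS = {"sup", "unsup"}


def build_test_command(dataset, supervision, weights_path):
    """Build the argv list for test.py (hypothetical helper, not in the repo)."""
    if dataset not in VALID_DATASETS:
        raise ValueError(f"unknown dataset: {dataset!r}")
    if supervision not in VALID_SUPERVISIONS:
        raise ValueError(f"unknown supervision: {supervision!r}")
    return [
        "python",
        "test.py",
        f"+experiment={dataset}_ours_{supervision}",  # '+' adds a new config key
        f"model.load_weights={weights_path}",         # overrides an existing key
    ]


if __name__ == "__main__":
    cmd = build_test_command("libri", "sup", "/path/to/trained/weights.ckpt")
    # shlex.join produces a shell-safe string for display or logging.
    print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from the repository root; passing a list avoids shell quoting issues with the checkpoint path.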

@article{loiseau22online,
  title={A Model You Can Hear: Audio Identification with Playable Prototypes.},
  author={Romain Loiseau and Baptiste Bouvier and Yan Teytaut and Elliot Vincent and Mathieu Aubry and Loic Landrieu},
  journal={ISMIR},
  year={2022}
}