LLAMA is a deployable service which artificially produces traffic for measuring network performance between endpoints.

LLAMA uses UDP socket-level operations to support multiple QoS classes. UDP datagrams are fast, efficient, and will hash across ECMP paths in large networks to uncover faults and faulty interfaces. LLAMA is written in pure Python for maintainability.

LLAMA will eventually have all of those capabilities, but not yet. For instance, it does not currently provide UDP or QoS functionality, but will send test traffic using hping3. It's currently being tested in Alpha at Dropbox through experimental correlation. See the TODO list below for more plans.

Measure the following between groups of endpoints across a network:

- round-trip latency

- packet loss
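As a rough illustration of how these two metrics could be derived from one batch of probes, here is a minimal sketch. The function name `summarize_probes` and the returned keys are hypothetical and not part of LLAMA; the sketch simply assumes each probe yields either a round-trip time in milliseconds or `None` when no reply came back.

```python
from statistics import mean
from typing import Optional, Sequence


def summarize_probes(rtts_ms: Sequence[Optional[float]]) -> dict:
    """Summarize one batch of probes (hypothetical helper, not LLAMA's API).

    Each entry is a round-trip time in milliseconds, or None if the probe
    was never answered, in which case it counts as lost.
    """
    sent = len(rtts_ms)
    answered = [rtt for rtt in rtts_ms if rtt is not None]
    lost = sent - len(answered)
    return {
        "sent": sent,
        "lost": lost,
        # Loss is the share of probes that never came back.
        "loss_pct": 100.0 * lost / sent if sent else 0.0,
        # Latency statistics only cover probes that were answered.
        "rtt_avg_ms": mean(answered) if answered else None,
        "rtt_max_ms": max(answered) if answered else None,
    }


summary = summarize_probes([0.5, 1.5, None, 1.0])
print(summary)
```

Keeping lost probes out of the latency average avoids mixing the two signals: a path can show low latency and high loss at the same time.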

- A

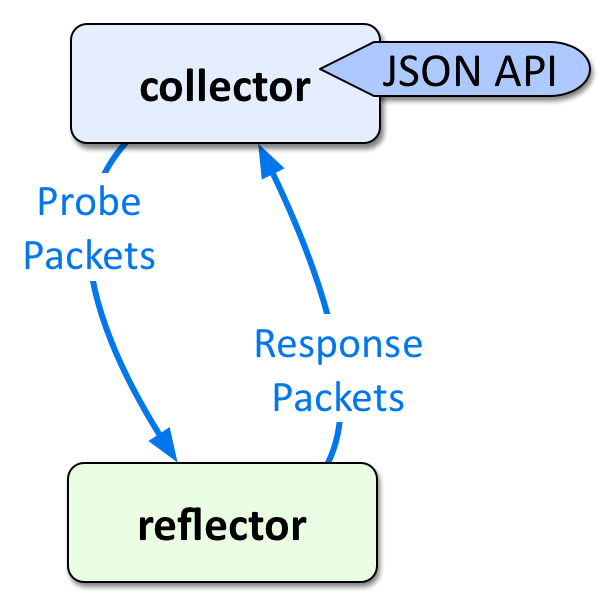

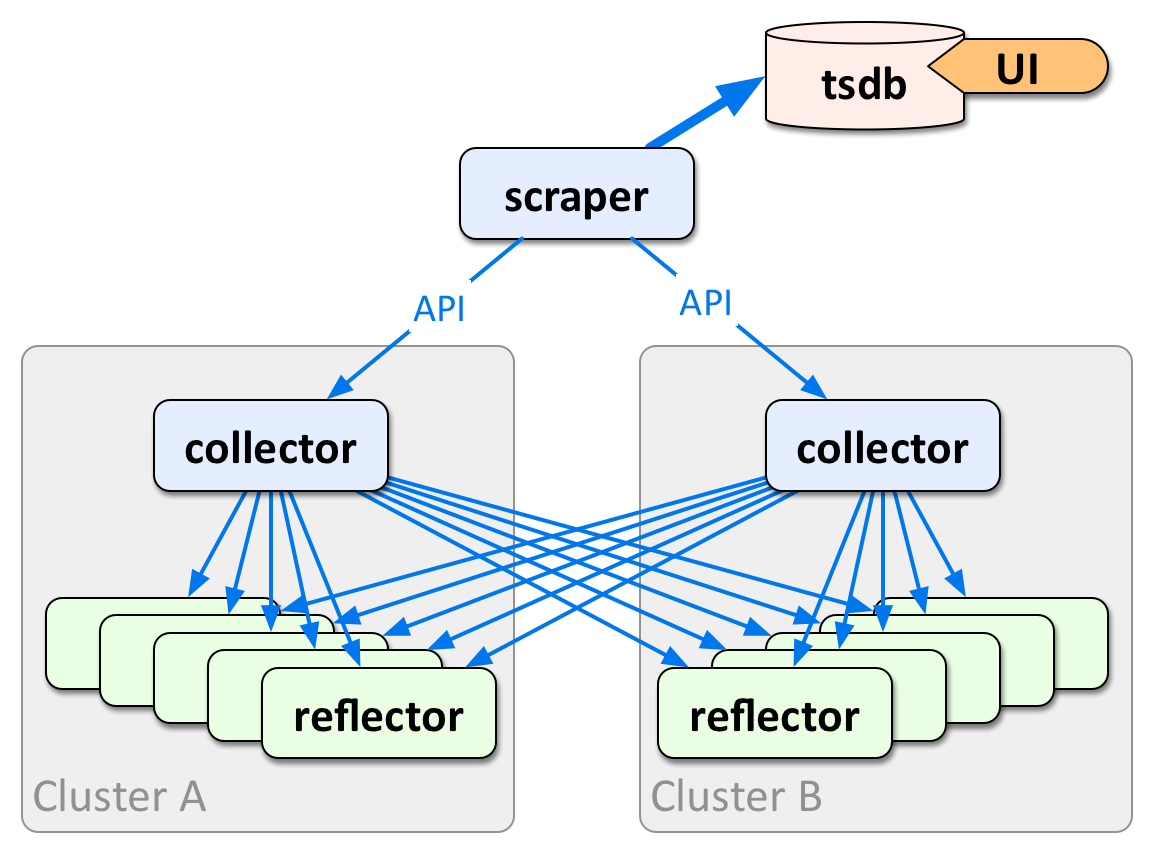

collectorsends traffic and produces measurements - A

reflectorreplies to thecollector - A

scraperplaces measurements fromcollectorsinto a TSDB (timeseries database)


In order to build a minimum viable product first, the following decisions were made:

- Python for maintainability (still uncovering how this will scale)

- Initially TCP (hping3), then UDP (sockets)

- InfluxDB for timeseries database

- Grafana for UI, later custom web UI

- ICMP: send echo-request; reflector sends back echo-reply (IP stack handles this natively)

- TCP: send `TCP SYN` to `tcp/0`; reflector sends back `TCP RST+ACK`; source port increments (IP stack handles natively)

- UDP: send `UDP` datagram to receiving port on reflector; reflector replies; source port increments (relies on Reflector agent)

ICMP pings, TCP probes, and UDP probes each behave differently. ICMP is useful for testing reachability but generally not for exercising multiple ECMP paths in a large or complex network fabric.

TCP can test ECMP paths, but in order to work without a reflector agent, needs to trick the TCP/IP stack on the reflecting host by sending to tcp/0. TCP starts breaking down at high transmission volumes because the host fails to respond to some SYN packets with RST+ACK. However, the approach with TCP fits for an MVP model.

UDP can be supported with a reflector agent which knows how to respond quickly to UDP datagrams. There's no trickery here -- UDP was designed to work

| | ICMP | TCP | UDP |
|---|---|---|---|
| Easy implementation | ✓ | ✓ | |
| Hashes across LACP/ECMP paths | | ✓ | ✓ |
| Works without Reflector agent | ✓ | ✓ | |
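To make the UDP approach more concrete, here is a minimal loopback sketch of a reflector that echoes datagrams and a sender that times the round trip. It is not LLAMA's implementation: the names `run_reflector` and `probe` are hypothetical, and it assumes that opening a fresh socket per probe (so the OS assigns a new ephemeral source port) is an acceptable stand-in for the incrementing source port described above.

```python
import socket
import threading
import time


def run_reflector(sock: socket.socket, stop: threading.Event) -> None:
    """Echo every datagram back to its sender until `stop` is set."""
    sock.settimeout(0.1)  # wake up regularly to check the stop flag
    while not stop.is_set():
        try:
            data, addr = sock.recvfrom(2048)
        except socket.timeout:
            continue
        sock.sendto(data, addr)


def probe(target: tuple, count: int = 5, timeout: float = 0.5) -> list:
    """Send `count` datagrams; return RTTs in ms, with None for lost probes."""
    results = []
    for seq in range(count):
        # Assumption: a fresh socket per probe gets a new ephemeral source
        # port, changing the flow tuple that ECMP hashing typically uses.
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
            s.settimeout(timeout)
            payload = str(seq).encode()
            start = time.monotonic()
            s.sendto(payload, target)
            try:
                data, _ = s.recvfrom(2048)
            except socket.timeout:
                results.append(None)  # no reply within the timeout: lost
                continue
            rtt_ms = (time.monotonic() - start) * 1000.0
            results.append(rtt_ms if data == payload else None)
    return results


# Demo on loopback: reflector in a background thread, probes from this one.
reflector_sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
reflector_sock.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
stop = threading.Event()
thread = threading.Thread(target=run_reflector, args=(reflector_sock, stop))
thread.start()
rtts = probe(reflector_sock.getsockname(), count=5)
stop.set()
thread.join()
reflector_sock.close()
print(rtts)
```

Unlike the TCP approach, nothing here depends on how the remote IP stack reacts to an unusual packet; the cost is that a reflector process has to be running on every target.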

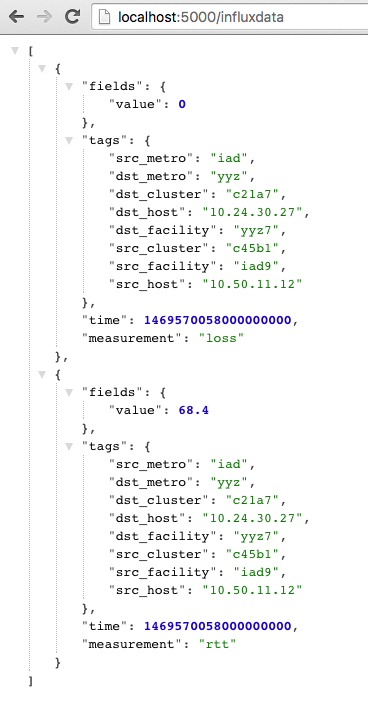

NOTE: The Collector agent could be easily extended to support other timeseries databases. This could be a great entry point for plugins.

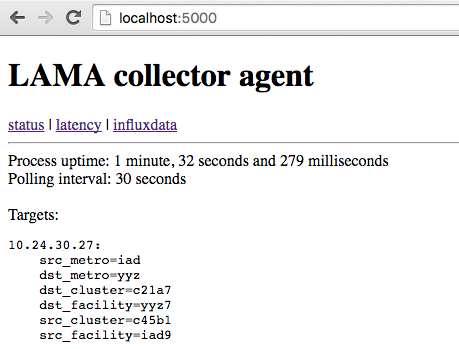

The configuration for each collector is a simple YAML file, which could easily be generated at scale for larger networks. Each target consists of a hostname (or IP address) and key=value tags. The tags are automatically exposed through the Collector API and later become part of the timeseries data. `src_hostname` and `dst_hostname` are added automatically by the Collector.

```yaml
# LLAMA Collector Config for host: collector01
reflector01:
    rack: 01
    cluster: aa
    datacenter: iad
reflector02:
    rack: 01
    cluster: bb
    datacenter: iad
```
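The way tags from this config could end up attached to measurements can be sketched as follows. This is an illustration only: `build_points`, the `tags`/`fields` layout, and the `rtt_ms`/`loss_pct` field names are hypothetical, and the `config` dict stands in for whatever a YAML parser would return for the file above (values are written as strings here for simplicity).

```python
def build_points(config: dict, measurements: dict, src_hostname: str) -> list:
    """Merge per-target tags with measurements (hypothetical helper).

    `config` maps each target hostname to its key=value tags;
    `measurements` maps each target hostname to its measured fields.
    """
    points = []
    for dst_hostname, tags in config.items():
        if dst_hostname not in measurements:
            continue  # nothing measured for this target yet
        point_tags = {key: str(value) for key, value in tags.items()}
        # The Collector adds these two tags automatically.
        point_tags["src_hostname"] = src_hostname
        point_tags["dst_hostname"] = dst_hostname
        points.append({"tags": point_tags, "fields": measurements[dst_hostname]})
    return points


# Stand-in for the parsed YAML config shown above.
config = {
    "reflector01": {"rack": "01", "cluster": "aa", "datacenter": "iad"},
    "reflector02": {"rack": "01", "cluster": "bb", "datacenter": "iad"},
}
# Made-up example measurements, for illustration only.
measurements = {"reflector01": {"rtt_ms": 0.42, "loss_pct": 0.0}}

points = build_points(config, measurements, src_hostname="collector01")
print(points)
```

Because every point carries the same tag keys, a timeseries database can group or filter results by rack, cluster, or datacenter without any extra lookup.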

TBD

- Implement MVP product

  - TCP library (using `hping3` in a shell)

  - Collector agent

  - Scraper agent

  - JSON API for InfluxDB (`/influxdata`)

  - JSON API for generic data (`/latency`)

- Implement UDP library (using sockets)

- Implement Reflector UDP agent

- Write bin runscripts for UDP Sender/Reflector CLI utilities

- Hook UDP library into Collector process

- Integrate Travis CI tests

- Add support for QoS

- Add monitoring timeseries for Collectors

- Write matrix-like UI for InfluxDB timeseries

- Document timeseries aggregation to pinpoint loss

- Inspired by: https://www.youtube.com/watch?v=N0lZrJVdI9A

- Concepts borrowed from: https://github.com/facebook/UdpPinger/