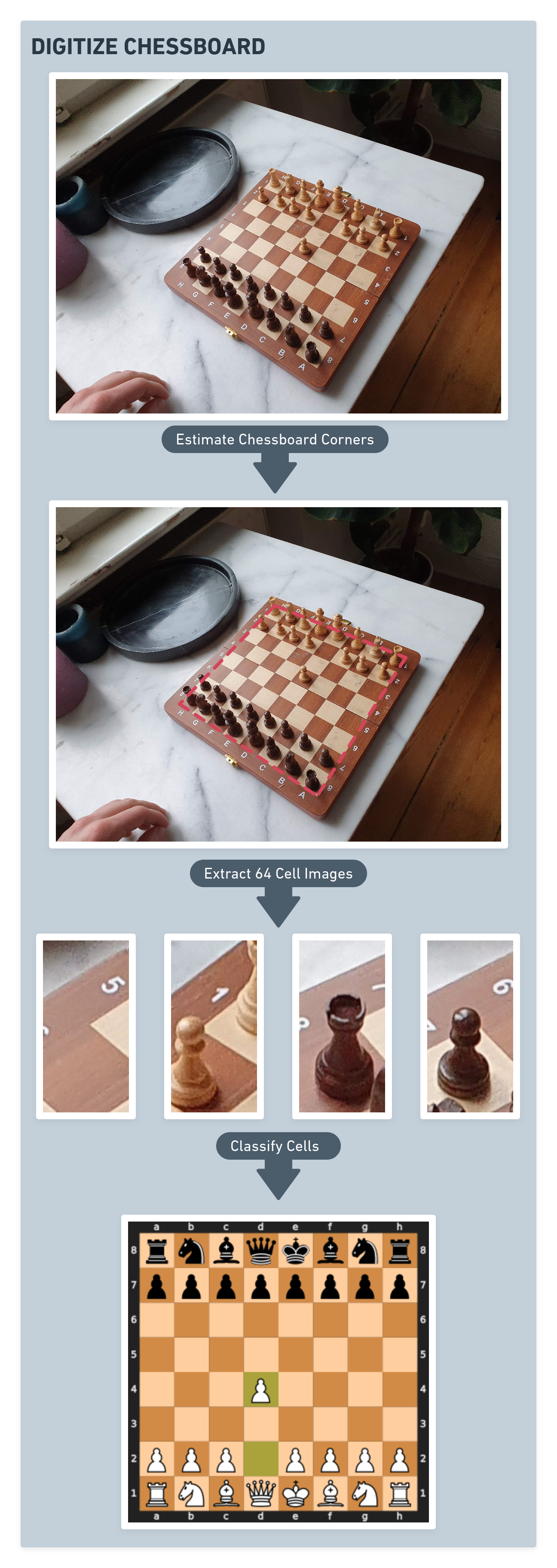

Computer-vision pipeline for extracting chess-relevant information from images or videos

The goal of this repo is to extract and digitize a chess match from images or a video source. Images can be taken from any perspective of the board, as long as every piece is still at least partly visible. The digitization makes it possible to play on a physical chessboard against a chess bot or against someone online.

The digitization is split into 3 parts. First, the 4 corners of the chessboard are detected using a neural net that performs pose estimation. The board is then split into 64 images, one for each square of the board. These images are classified with a second neural net.
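The step from 4 detected corners to 64 cell regions can be sketched as follows. This is a minimal illustration, not the code used in this repo: it assumes the corners arrive in the order top-left, top-right, bottom-right, bottom-left, and the function names `square_to_quad` and `cell_quads` are hypothetical. It builds the projective mapping from the unit square to the board quadrilateral in closed form and returns the image-space corners of every cell, which could then be cropped or warped before classification.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]


def square_to_quad(corners: Sequence[Point]) -> Callable[[float, float], Point]:
    """Return a function mapping unit-square (u, v) to image coordinates.

    corners: the 4 board corners, assumed to be ordered
    top-left, top-right, bottom-right, bottom-left.
    """
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = corners
    dx1, dy1 = x1 - x2, y1 - y2
    dx2, dy2 = x3 - x2, y3 - y2
    sx, sy = x0 - x1 + x2 - x3, y0 - y1 + y2 - y3
    den = dx1 * dy2 - dy1 * dx2
    if den == 0:
        raise ValueError("degenerate corner configuration")
    # Projective terms; both are zero when the quad is a parallelogram.
    g = (sx * dy2 - sy * dx2) / den
    h = (dx1 * sy - dy1 * sx) / den
    a, b, c = x1 - x0 + g * x1, x3 - x0 + h * x3, x0
    d, e, f = y1 - y0 + g * y1, y3 - y0 + h * y3, y0

    def project(u: float, v: float) -> Point:
        w = g * u + h * v + 1.0
        return ((a * u + b * v + c) / w, (d * u + e * v + f) / w)

    return project


def cell_quads(corners: Sequence[Point], n: int = 8) -> List[List[Point]]:
    """Return the 4 image-space corners of each of the n*n board cells.

    Cells are listed row by row, starting at the first corner.
    """
    project = square_to_quad(corners)
    # (n + 1) x (n + 1) lattice of grid intersections in image space.
    grid = [[project(col / n, row / n) for col in range(n + 1)]
            for row in range(n + 1)]
    quads = []
    for row in range(n):
        for col in range(n):
            quads.append([grid[row][col], grid[row][col + 1],
                          grid[row + 1][col + 1], grid[row + 1][col]])
    return quads
```

For example, `cell_quads([(200, 100), (600, 100), (800, 700), (0, 700)])` returns 64 quadrilaterals for a board seen at an angle. Because the mapping is projective rather than bilinear, cells farther from the camera come out smaller, matching the perspective in the image.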

In the last step, the estimated board is compared against the positions that can be reached by the currently legal moves. Fusing the classifier output with the rules of chess in this way can greatly increase robustness. If a proposed move passes this check, it is appended to the stack of moves.
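One way such a legality check could work is sketched below. It is an assumption about the approach, not the repo's actual implementation: the names `expand_placement` and `match_move` and the `max_mismatches` threshold are hypothetical. The caller supplies, for every legal move, the piece-placement field of the FEN that would result from it (a move generator such as the python-chess library could produce these), and the function picks the move whose resulting board differs from the classifier output in the fewest squares.

```python
from typing import Dict, Optional


def expand_placement(placement: str) -> str:
    """Expand a FEN piece-placement field into a 64-character string.

    Digits become runs of '.', rank separators are dropped.
    """
    squares = []
    for ch in placement:
        if ch == "/":
            continue
        squares.append("." * int(ch) if ch.isdigit() else ch)
    board = "".join(squares)
    if len(board) != 64:
        raise ValueError("placement does not describe 64 squares")
    return board


def match_move(predicted: str,
               candidates: Dict[str, str],
               max_mismatches: int = 1) -> Optional[str]:
    """Return the legal move whose resulting board best fits the prediction.

    predicted:  piece placement estimated by the classifier.
    candidates: maps each legal move (e.g. "e2e4") to the piece placement
                it would produce; assumed to come from a move generator.
    Returns None if the best fit is ambiguous or has too many mismatches.
    """
    target = expand_placement(predicted)
    best_move, best_dist, tie = None, None, False
    for move, placement in candidates.items():
        # Number of squares where prediction and candidate disagree.
        dist = sum(p != q for p, q in zip(target, expand_placement(placement)))
        if best_dist is None or dist < best_dist:
            best_move, best_dist, tie = move, dist, False
        elif dist == best_dist:
            tie = True
    if best_move is None or tie or best_dist > max_mismatches:
        return None
    return best_move
```

A legal move changes at least two squares, so with the default threshold of one mismatch an unchanged board is never mistaken for a move, while a single misclassified square is still tolerated.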

A YOLO-based hand detector is currently being tested to avoid wrong board estimates while a move is being made.

Processing a frame takes roughly 0.8 seconds on a laptop with an Intel i7-8550U CPU and an NVIDIA MX150 GPU. At this point, the detection and classification models have been trained on only one specific chessboard.

To get a local copy up and running, follow these steps.

- Clone the repo

      git clone https://github.com/aelmiger/chessboard2fen.git
      cd chessboard2fen

- Install requirements

      pip install -r requirements.txt

Make sure the installed TensorFlow version is 2.2.

For a live analysis of the current chessboard, place the Stockfish binaries for your operating system here: engine/stockfish

    python3 detectionScript.py

Distributed under the MIT License. See LICENSE for more information.

Anton Elmiger - anton.elmiger@gmail.com