A light weight library that exposes JDBC API with the convenience of Kotlin and gets out of the way when it is not needed.

For developers who prefer to have their database access through SQL where they can read it, validate it and view its query plans instead of working through cumbersome ORM or limited frameworks which obfuscate SQL with their non-standard cryptic syntax.

Refactored from KotliQuery which is an excellent idea

but for my use I needed to simplify its implementation to make adding functionality easier

without having to create a ton of intermediate classes, add result set to JSON conversion

without intermediate objects, add stored procedure calls with in/inout/out parameters and

ability to process multiple result sets.

Convenient models with simple syntax which are aware of primary key columns, auto generated

columns, columns with defaults and nullable columns. Models protected property db to define

properties via provideDelegate. See Convenient Models

import java.sql.Timestamp

// dbCase = true if database columns same as properties

// dbCase = false if database columns are snake-case versions of property names

class ValidModel(quote:String? = null) : Model<ValidModel>("tableName", dbCase = true, quote = quote) {

var processId: Long? by db.autoKey

var title: String by db

var version: String by db

var optional: Int? by db

var hasOwnColumnName: Int? by db.column("own_name")

var updatedAt: Timestamp? by db.auto

var createdAt: Timestamp? by db.auto

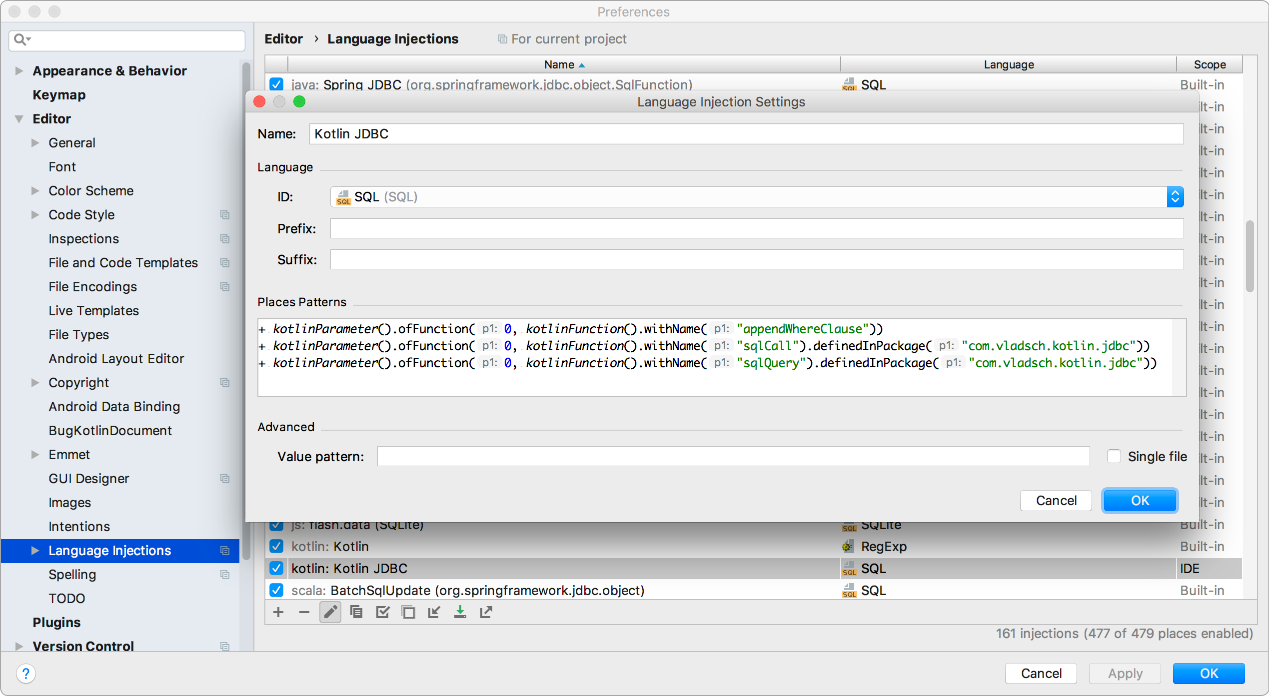

}If you are using IntelliJ IDEA IDE then defining a Language

Injection for the sql factory functions will automatically apply syntax highlighting,

completions and annotations to the SQL strings passed to sqlQuery() and sqlCall(), making it

even easier to work with SQL queries. See

Configuring SQL Language Injections

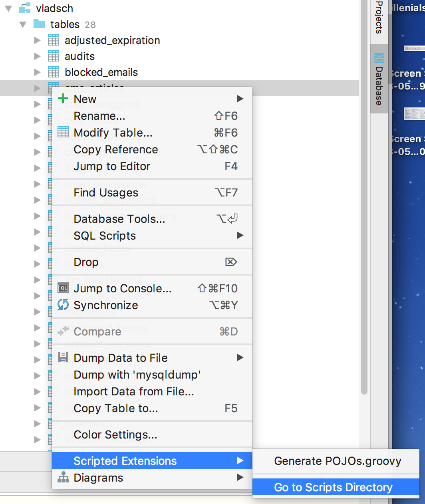

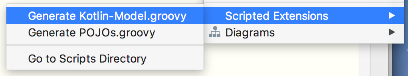

IntelliJ Ultimate Database Tools extension script for conversion of SQL tables to a Model is also available. See Installing IntelliJ Ultimate Database Tools Extension Script.

The library provides a simple migration command processor to implement migrate/rollback functionality with version tracking with each version containing a copy of database entities: functions, procedures, tables, triggers and views. See Migrations

<dependency>

<groupId>com.vladsch.kotlin-jdbc</groupId>

<artifactId>kotlin-jdbc</artifactId>

<version>0.4.2</version>

</dependency>

compile "com.vladsch.kotlin-jdbc:kotlin-jdbc:0.4.2"Session object, thin wrapper of java.sql.Connection instance, runs queries, optionally

converts results into instances, lists, hash maps with corresponding json versions as json

objects or json arrays.

import com.vladsch.kotlin.jdbc.*

val session = session("jdbc:h2:mem:hello", "user", "pass") HikariCP is an excellent choice for connection pool implementation. It is blazing fast and easy to use.

HikariCP.default("jdbc:h2:mem:hello", "user", "pass")

using(session(HikariCP.dataSource())) { session ->

// working with the session

}Define default data source for session and use shorter code:

HikariCP.default("jdbc:h2:mem:hello", "user", "pass")

// define default data source factory to allow use of session() for default

SessionImpl.defaultDataSource = { HikariCP.dataSource() }

usingDefault { session ->

// working with the session

}session.execute(

sqlQuery("""

create table members (

id serial not null primary key,

name varchar(64),

created_at timestamp not null

)

""")) // returns Booleanval insertQuery: String = "insert into members (name, created_at) values (?, ?)"

session.update(sqlQuery(insertQuery, "Alice", Date())) // returns effected row count

session.update(sqlQuery(insertQuery, "Bob", Date()))Prepare select query execution in the following steps:

- Create

SqlQueryorSqlCallobject by usingsqlQuery()orsqlCall()factory - run the query using

session.listorsession.firstpassing it extractor function ((Row) -> A)

val allIdsQuery = sqlQuery("select id from members")

val ids: List<Int> = session.list(allIdsQuery) { row -> row.int("id") }Extractor function can be used to return any type of result type from a ResultSet.

data class Member(

val id: Int,

val name: String?,

val createdAt: java.time.ZonedDateTime)

val toMember: (Row) -> Member = { row ->

Member(

row.int("id"),

row.stringOrNull("name"),

row.zonedDateTime("created_at")

)

}

val allMembersQuery = sqlQuery("select id, name, created_at from members")

val members: List<Member> = session.list(allMembersQuery, toMember)val aliceQuery = sqlQuery("select id, name, created_at from members where name = ?", "Alice")

val alice: Member? = session.first(aliceQuery, toMember)Alternative syntax is supported to allow named parameters in all queries.

val query = sqlQuery("""

SELECT id, name, created_at FROM members

WHERE (:name IS NOT NULL OR name = :name) AND (:age IS NOT NULL OR age = :age)

""", mapOf("name" to "Alice"))In the query above, the param age is not supplied on purpose.

Performance-wise this syntax is slightly slower to prepare the statement and a tiny bit more memory-consuming, due to param mapping. Use it if readability is a priority.

This method converts the pattern name to an indexed ? parameter and is not based on

"artificial" string replacement.

SqlCall instances take an additional map of out parameters. The value used for the out

parameter is only significant for its type used when getting the results back. For inout

parameters pass the name in both maps.

sqlCall("""call storedProc(:inParam,:inOutParam,:outParam)""",

mapOf("inParam" to "Alice", "inOutParam" to "Bob"),

mapOf("inOutParam" to "", "outParam" to ""))For convenience there are methods to pass parameters as in, inout, out as a list of pairs or maps:

sqlCall("""call storedProc(:inParam,:inOutParam,:outParam)""")

.inParams("inParam" to "Alice")

.inOutParms("inOutParam" to "Bob")

.outParams("outParam" to "")However, the first method is fastest because it sets all the parameters with the least run-time processing.

Automatic expansion of sqlQuery and sqlCall collection valued named parameters to their

contained values will be performed. This allows collections to be used where individual

parameters are specified:

sqlQuery("SELECT * FROM Table WHERE column in (:list)").inParams("list" to listOf(1,2,3))Will be expanded to SELECT * FROM Table WHERE column in (?,?,?) with parameters of 1, 2, 3

passed to the prepared statement.

In the case, the parameter type has to be explicitly stated, there's a wrapper class -

Parameter that will help provide explicit type information.

val param = Parameter(param, String::class.java)

sqlQuery("""select id, name

from members

where ? is null or ? = name""",

param, param)or also with the helper function param

sqlQuery("""select id, name

from members

where ? is null or ? = name""",

null.param<String>(), null.param<String>())This can be useful in situations similar to one described here.

#forEach allows you to make some side-effect in iterations. This API is useful for handling a

ResultSet one row at a time.

session.forEach(sqlQuery("select id from members")) { row ->

// working with large data set

}As an alternative when you need to modify a small amount of values or columns, and then pass the results as JSON to the front-end, you can convert the result set to JSON object or array and modify the data in place.

This library uses the boxed-json library's

MutableJsObject and MutableJsArray which allow modifications to the JsonValues without

having to copy the object.

Session object provides transaction block. Transactions are automatically committed if not

explicitly committed or cancelled inside the block. Any uncaught exceptions will cause the

transaction to be automatically rolled back.

The Transaction instance is a session with added java.sql.Connection transaction methods for

convenience.

session.transaction { tx ->

// begin

tx.update(sqlQuery("insert into members (name, created_at) values (?, ?)", "Alice", Date()))

}

session.transaction { tx ->

// begin

tx.update(sqlQuery("update members set name = ? where id = ?", "Chris", 1))

throw RuntimeException() // rollback

}SQL queries come in two forms: SqlQuery for all DDL and DML. SqlCall is for calling stored

procedures with in/inout/out parameters to pass to the procedure and processing inout/out

parameters after execution with optional processing of multiple result sets returned by the

procedure.

All queries are executed through the Session instance or its sub-class Transaction. The

session has separate methods for different types of query execution and results:

session.queryused to execute queries and processing a result setsession.executeused to execute queries not expecting a result setsession.updateused to execute update queries

Convenience methods that process result sets which use an extractor which take a ResultSet Row and return an instance of something:

session.listused to return a list of extracted data from rowssession.firstused to return a single instance from head of result setsession.countused to return the count of rows when you don't need more than that and are too lazy to write a count querysession.hashMapsame as list but used to return a hash map keyed on column(s) from the result setsession.jsonArraysame as list but returns an array ofJsonObjectsholding each row datasession.jsonObjectsame ashashMapexcept theJsonObjectfirst level properties are a string of the keyed column(s) from each row.

Iteration helpers which will invoke a consumer for every row of result set data:

session.forEachto iterate over each row or a result set from anSqlQueryorSqlCallsession.forEachto iterate over each result set from aSqlCalland to process inout/out parameters.

Update and getting generated key(s):

session.updateGetIdto execute an update query and get the first column of the first row of the generated keys result set and return its integer valuesession.updateGetIdsto execute an update query and get the first column of all the rows of the generated keys result set and return a list of integerssession.updateGetKeyto execute an update query and get the keys (using an extractor) of the first row of the generated keys result set and return its valuesession.updateGetKeysto execute an update query and get the keys (using an extractor) of all the rows of the generated keys result set and return them as a list

A base Model class can be used to define models which know how to set their properties from a

Row result set row, from other Models, understand auto generated, key columns and columns

with default values; can generate INSERT, UPDATE, DELETE and SELECT queries for a

model instance with validation of required fields and minimal required columns for an update.

Using these models is a convenience not a requirement since it does create overhead by building the model's properties for every instance.

- Define model's properties by using

by db. The nullability of the property type dictates whether the property can be omitted or set to null - Key properties by:

by db.key. These will be used forWHERElist forUPDATE,DELETEorSELECTfor reload query generation - Auto generated (left out of update and insert column list) properties by:

by db.auto - Auto generated Key (key column and auto generated) by:

by db.key.auto,by db.autoKeyorby db.auto.key - Columns which have default values by:

by db.default. These won't raise an exception forINSERTquery generation if they are missing from the model's defined property set. A function alternativeby db.default(value)will provide a default value which will be used for insert query if an explicit value is not provided for the property.

If column names are the same as the property names then set dbCase = true for the Model

constructor argument. If column names are snake-case versions of camelcase property names

(lowercase with underscores between words) then set dbCase = false and the model will generate

correct column names automatically. When needed, you can provide a column name explicitly via

.column("columnName") making it independent of the property name. This function is available

on any delegate provider so it can be combined with key, auto and default properties: model,

model.key, model.auto, model.default

By default models allow public setters on properties marked auto or autoKey. To add

validation forcing all auto properties to have no set method or have private set pass

false for allowSetAuto second parameter to model constructor.

Any property marked as auto generated will not be used in UPDATE or INSERT queries.

Column and table names are not quoted by default. If the same database type is used on all

connections then quoting should be set during initialization through Model.databaseQuoting.

When multiple database quoting types are used for different sessions then quote string to be used by models should be passed to the model constructor based on the database type for the session. This means that models with different quoting strings.

For IntelliJ Ultimate a Database extension script can be installed which will generate models from the context menu of any table in the database tools window. See Installing IntelliJ Ultimate Database Tools Extension Script

The model's companion implements helper functions for converting result set row to the data class, JSON object and creating list queries.

Result set row to model/json/data conversion:

These return conversion function without identifier quoting option:

val toModel: (Row) -> M = toModel()val toData: (Row) -> D = toData()val toJson: (Row) -> JsonObject = toJson()

These return conversion function with identifier quoting option:

fun toModel(quote: String? = null): (Row) -> Mfun toData(quote: String? = null): (Row) -> Dfun toJson(quote: String? = null): (Row) -> JsonObject

List Query Helpers:

fun listQuery(vararg params: Pair<String, Any?>, quote:String = ModelProperties.databaseQuoting): SqlQueryfun listQuery(params: Map<String, Any?>, quote:String = ModelProperties.databaseQuoting): SqlQueryfun listQuery(whereClause:String, vararg params: Pair<String, Any?>, quote:String = ModelProperties.databaseQuoting): SqlQueryfun listQuery(whereClause:String, params: Map<String, Any?>, quote:String = ModelProperties.databaseQuoting): SqlQuery

List results:

fun list(session: Session, vararg params: Pair<String, Any?>, quote:String = ModelProperties.databaseQuoting): List<D>fun list(session: Session, params: Map<String, Any?>, quote:String = ModelProperties.databaseQuoting): List<D>fun list(session: Session, whereClause:String, vararg params: Pair<String, Any?>, quote:String = ModelProperties.databaseQuoting): List<D>fun list(session: Session, whereClause:String, params: Map<String, Any?>, quote:String = ModelProperties.databaseQuoting): List<D>

JSON Array results:

fun jsonArray(session: Session, vararg params: Pair<String, Any?>, quote:String = ModelProperties.databaseQuoting): JsonArrayfun jsonArray(session: Session, params: Map<String, Any?>, quote:String = ModelProperties.databaseQuoting): JsonArrayfun jsonArray(session: Session, whereClause:String, vararg params: Pair<String, Any?>, quote:String = ModelProperties.databaseQuoting): JsonArrayfun jsonArray(session: Session, whereClause:String, params: Map<String, Any?>, quote:String = ModelProperties.databaseQuoting): JsonArray

data class ValidData(

val processId: Long?,

val title: String,

val version: String,

val batch: Int?,

val updatedAt: String?,

val createdAt: String?

)

class ValidModel(quote:String? = null) : Model<ValidModel>(tableName, dbCase = true, quote = quote) {

var processId: Long? by db.auto.key

var title: String by db

var version: String by db

var batch: Int? by db.default(1)

var updatedAt: String? by db.auto

var createdAt: String? by db.auto

fun toData() = ValidData(

processId,

title,

version,

batch,

updatedAt,

createdAt

)

companion object : ModelCompanion<ValidModel, ValidData> {

override val tableName = "tableName"

override fun createModel(quote:String?): ValidModel = ValidModel(quote)

override fun createData(model: ValidModel): ValidData = model.toData()

}

}

fun useModel() {

using(session(HikariCP.default())) { session ->

// get all rows from table as list

val modelList = ValidModel.list(session)

val model = ValidModel()

model.title = "title text"

model.version = "V1.0"

// execute an insert and set model's key properties from the keys returned by the database

// batch will be set to 1 since it is not set in properties

model.insert(session)

// this will delete the model and clear auto.key properties

model.delete(session)

// this will delete the model but not clear auto.key properties

model.deleteKeepAutoKeys(session)

// execute select query for model (based on keys) and load model

model.select(session)

// just insert, don't bother getting keys

model.insertIgnoreKeys(session)

// take a snapshot of current properties

model.snapshot()

model.version = "V2.0"

// will only update version since it is the only one changed, does automatic snapshot after update

model.update(session)

// will only update version since it is the only one changed but will reload model from database

// if updatedAt field is timestamped on update then it will be loaded with a new value

model.version = "V3.0"

model.updateReload(session)

}

}You can manually add @Language("SQL") annotation to strings or add a language injection

configuration:

The places patterns text is:

+ kotlinParameter().ofFunction(0, kotlinFunction().withName("appendWhereClause"))

+ kotlinParameter().ofFunction(0, kotlinFunction().withName("sqlCall").definedInPackage("com.vladsch.kotlin.jdbc"))

+ kotlinParameter().ofFunction(0, kotlinFunction().withName("sqlQuery").definedInPackage("com.vladsch.kotlin.jdbc"))

To get full benefit of SQL completions you should also define a database source to the database against which you are developing (or a local clone of it) and configure the SQL dialect for the database you are using.

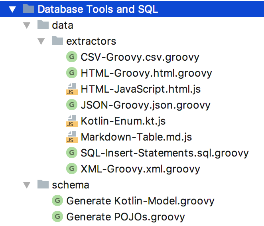

Download the groovy script for generating a kotlin-jdbc model:

Generate Kotlin-Model.groovy

In database tool window, right click on a table and select Scripted Extensions > Go to scripts directory and copy the script to this location.

It will appear in the Scripted Extensions pop-up menu. For best results use the native schema

introspection instead of JDBC in connection configuration.

Add Generate Scala-Slick-Model.groovy for generating a Scala/Slick database model.

Kotlin-Enum.kt.js to

convert result set data to Kotlin Enum definition. You need to add it to the data/extractors

directory

It uses the first column name as the enum name (id suffix stripped and last word pluralized). The first column which contains all non-numeric values will be used for names of the enum values (converted to screaming snake case), if no such column exists then the names will be the screaming snake case of the enum name with id values appended.

All columns will be included in the enum value constructor and search functions in companion object for an enum value of a given column. Easier to show than explain:

Result set:

| changeHistoryTypeId | type |

|---|---|

| 1 | Process |

| 2 | File |

| 3 | Client |

| 4 | User |

| 5 | ProcessInstance |

Generated Kotlin enum:

enum class ChangeHistoryTypes(val id: Int, val type: String) {

PROCESS(1, "Process"),

FILE(2, "File"),

CLIENT(3, "Client"),

USER(4, "User"),

PROCESS_INSTANCE(5, "ProcessInstance");

companion object {

fun changeHistoryTypeId(id: Int): ChangeHistoryTypes? = values().find { it.id == id }

fun type(type: String): ChangeHistoryTypes? = values().find { it.type == type }

}

}A script for generating a JavaScript enum based on enumerated-type npm package will generate

an enum usable in JavaScript

JavaScript-Enumerated-Value-Type.kt.js

A script for generating a markdown table for the table data Markdown-Table.md.js Note: the table above was generated with this script.

DbEntityExtractor interface. Currently only MySql version is implemented by

MySqlEntityExtractor limiting migration functionality to MySql data sources.

Migrations are implemented by the Migrations.dbCommand(String[]) function. Migrations

constructor is provided with the database session, DbEntityExtractor instance and

resourceClass instance holding the database migration version resource files.

When a migration command is run for the first time, it will create a migration table where all

migration operation will be stored and used for determining which operations should be performed

to bring the database to a specific version.

Each version of the database entities is stored in a sub-directory with the version format:

Vv_m_p_meta, where v is version number integer, m is minor version integer, p is patch version

integer and meta is any string. Only the Vv portion is required. Minor, patch and meta are

optional. The underscore separating version parts belongs to the next element. i.e. the correct

version is V1 not V1_, V1_2 and not V1_2_, etc.

Each version has the following directory structure and database entity script naming conventions:

db/

└── V0_0_0

├── functions

│ └── sample-function.udf.sql

├── migrations

│ ├── 0.sample-migration.down.sql

│ └── 0.sample-migration.up.sql

├── procedures

│ └── sample-stored-procedure.prc.sql

├── tables

│ └── sample-table.tbl.sql

├── triggers

│ └── sample-trigger.trg.sql

└── views

└── sample-view.view.sql

Database Entities:

| Database Entity | Directory | File Extension |

|---|---|---|

| function | functions | .udf.sql |

| stored procedure | procedures | .prc.sql |

| table | tables | .tbl.sql |

| trigger | triggers | .trg.sql |

| view | views | .view.sql |

All entity scripts for a particular version will be run when the database is migrated (up or down) to that version as the final version. Any entities in the database which do not have a corresponding script file, will be deleted from the database.

For example, if the database is migrated from V1 to V5 with intermediate versions: V2,

V3 and V4 then up migration scripts for versions V2, V3, V4 and V5 will be run and

only the scripts for database entities of V5 will be run.

Migration Scripts:

Both up/down migration scripts are located in the migrations directory and are distinguished

by their extension: .up.sql and .down.sql.

The files in this directory are executed in sorted order and all files should have an integer prefix, optionally followed by descriptive text. Files which have an integer prefix will be sorted in numerical order, otherwise alphabetic order.

When applying up migration scripts these are executed in increasing file name order.

During a rollback operation, only down scripts whose up script execution has been recorded in

the migrations table will be executed. All down scripts are executed in decreasing file name

order.

Migration script files are split on ; delimiters and each part run as a separate query, if

successful then an entry for this fact is added to the migration table.

After all migrations/rollback scripts have been applied for all required versions, the database entity scripts (excluding tables) for the resulting version will be run. This means that migrations are only required to migrate the table schema and table data. Other entities will be updated via their own scripts.

After migration/rollback it is a good idea to run update-schema command to copy all the entity

scripts to the schema directory, located on the same level as the version sub-directories.

Contents of this directory are intended to be under VCS and used to track changes to database

from version to version. Individual version directories are immutable for a given version and

therefore not useful for VCS based modification tracking tools.

To make debugging of rollback/migration scripts easier, after each migration/rollback the

resulting database tables are validated against the corresponding version's tables/ directory

contents and an error is recorded if the validation fails. The validation will ignore

differences caused by re-ordering of lines, this is used to eliminate column, key and constraint

order changes from causing validation failures.

To generate contents for the tables/ directory of the current version or a specific version,

run the dump-tables command.

Commands:

-

init - initialize migrations table and migrate all to given version or latest version based on database table match to table schemas contained in versions

-

path "resources/db" - set path to resources/db directory of the project where version information is stored.

-

version "versionID" - set specific version for following commands

"versionID" must be of the regex form

V\d+(_\d+(_\d+(_.*)?)?)?where the

\d+are major, minor, patch versions with the trailing.*being the version metadata. Versions are compared using numeric comparison for major, minor and patch.The metadata if present will be compared using regular string comparison, ie. normal sort.

-

new-major - create a new version directory with major version incremented, from current or requested version.

-

new-minor - create a new version directory with minor version incremented, from current or requested version.

-

new-patch - create a new version directory with patch version incremented, from current or requested version.

-

new-version - create a new version directory for the requested version. The directory cannot already exist. If the version is not provided then the current version with its patch version number incremented will be used.

All entity directories will be created, including migrations.

If there is a previous version to the one requested then all its entity scripts will be copied to the new version directory.

-

new-evolution "play/evolutions/directory" create a new play evolution file from current or requested version migrations and rollbacks in the requested directory.

-

new-migration "title" - create a new up/down migration script files in the requested (or current) version's migrations directory. The file name will be in the form: N.title.D.sql where N is numeric integer 1..., D is up or down and title is the title passed command. Placeholders in the file:

__VERSION__will be replaced with the version for which this file is generated and__TITLE__with the "title" passed to the command. -

new-function "name" - create a new function file using resources/db/templates customized template or built-in if none Placeholders in the file:

__VERSION__will be replaced with the version for which this file is generated and__NAME__with the "name" passed to the command. -

new-procedure "name" - create a new procedure file using resources/db/templates customized template or built-in if none Placeholders in the file:

__VERSION__will be replaced with the version for which this file is generated and__NAME__with the "name" passed to the command. -

new-trigger "name" - create a new trigger file using resources/db/templates customized template or built-in if none Placeholders in the file:

__VERSION__will be replaced with the version for which this file is generated and__NAME__with the "name" passed to the command. -

new-view "name" - create a new view file using resources/db/templates customized template or built-in if none Placeholders in the file:

__VERSION__will be replaced with the version for which this file is generated and__NAME__with the "name" passed to the command. -

migrate - migrate to given version or to latest version

-

rollback - rollback to given version or to previous version

-

dump-tables - dump database tables

-

create-tables - create all tables which exist in the version tables directory and which do not exist in the database

-

validate-tables - validate that version table scripts and database agree

-

update-all - update all: functions, views, procedures, triggers. This runs the scripts corresponding to the database object for the requested version.

-

update-procedures

update-procs - update stored procedures -

update-functions

update-funcs - update functions -

update-triggers - update triggers

-

update-schema - update

schemadirectory with entities from selected version (or current if none given) -

update-views - update views

-

exit - exit application

Place your files in the resources/db directory and name it templates the layout is the same

as a version directory with the template files named sample:

db/

└── templates/

├── functions/

│ └── sample.udf.sql

├── migrations/

│ ├── 0.sample.down.sql

│ └── 0.sample.up.sql

├── procedures/

│ └── sample.prc.sql

├── triggers/

│ └── sample.trg.sql

└── views/

└── sample.view.sql

(The MIT License)

Copyright (c) 2018 - Vladimir Schneider

Copyright (c) 2015 - Kazuhiro Sera