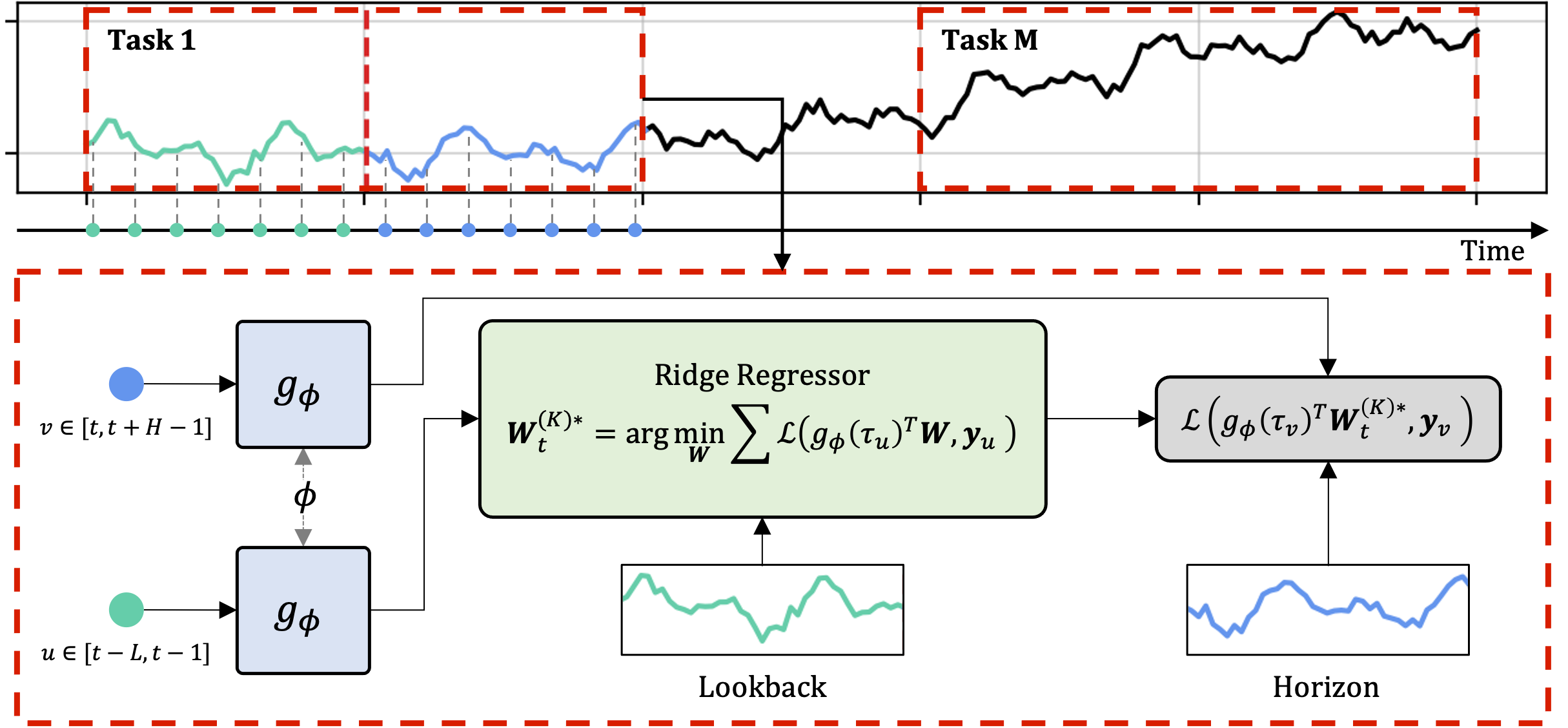

Figure 1. Overall approach of DeepTIMe.

Official PyTorch code repository for the DeepTIMe paper.

- DeepTIMe is a deep time-index based model trained via a meta-learning formulation, yielding a strong method for non-stationary time-series forecasting.

- Experiments on real-world datasets in the long sequence time-series forecasting setting demonstrate that DeepTIMe achieves results competitive with state-of-the-art methods while being highly efficient.
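The time-index idea can be illustrated with a small, dependency-free sketch. It is not the repository's implementation and makes two loud assumptions. First, a fixed Fourier feature map of the normalised time index stands in for the learned deep time-index network. Second, the meta-learning inner step is a closed-form ridge regression fitted on the lookback window only, after which the fitted function is queried at the horizon's time indices. The names `fourier_features`, `solve`, `ridge_fit` and `forecast` are hypothetical.

```python
import math
from typing import List, Sequence


def fourier_features(tau: float, freqs: Sequence[float]) -> List[float]:
    """Map a normalised time index tau to [1, sin(2*pi*f*tau), cos(2*pi*f*tau), ...].

    Assumption: a fixed feature map standing in for DeepTIMe's learned network.
    """
    feats = [1.0]  # bias term
    for f in freqs:
        feats.append(math.sin(2 * math.pi * f * tau))
        feats.append(math.cos(2 * math.pi * f * tau))
    return feats


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / m[r][r]
    return x


def ridge_fit(phi: List[List[float]], y: Sequence[float], lam: float) -> List[float]:
    """Closed-form ridge regression: w = (Phi^T Phi + lam * I)^-1 Phi^T y."""
    d = len(phi[0])
    gram = [[sum(row[i] * row[j] for row in phi) + (lam if i == j else 0.0)
             for j in range(d)] for i in range(d)]
    rhs = [sum(row[i] * yi for row, yi in zip(phi, y)) for i in range(d)]
    return solve(gram, rhs)


def forecast(lookback: Sequence[float], horizon: int,
             freqs: Sequence[float], lam: float = 1e-6) -> List[float]:
    """Fit on the lookback window's time indices, then evaluate at the horizon's indices."""
    n_back = len(lookback)
    total = n_back + horizon
    # One normalised time index per step, covering lookback + horizon, in [0, 1).
    phi = [fourier_features(t / total, freqs) for t in range(total)]
    w = ridge_fit(phi[:n_back], lookback, lam)  # inner-loop adaptation (assumed: ridge)
    return [sum(wi * fi for wi, fi in zip(w, row)) for row in phi[n_back:]]
```

For example, with `series = [0.5 + math.sin(2 * math.pi * 2 * t / 120) for t in range(120)]`, calling `forecast(series[:96], 24, [1, 2])` extrapolates the last 24 points closely, because the signal lies in the span of the assumed features. Forecasting here amounts to evaluating a function of time at future indices rather than rolling a model forward step by step.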

Dependencies for this project can be installed with:

`pip install -r requirements.txt`

To get started, you will need to download the datasets described in our paper:

- Pre-processed datasets, as obtained from Autoformer's GitHub repository, can be downloaded from Tsinghua Cloud or Google Drive.

- Place the downloaded datasets into the `storage/datasets/` folder, e.g. `storage/datasets/ETT-small/ETTm2.csv`.
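Before launching any experiments, it can help to confirm that a dataset sits where the scripts expect it. The stdlib sketch below is hypothetical (`check_dataset` is not part of the repository). It only checks the path layout given above, reads the CSV header and counts the data rows. It makes no assumption about which columns a given dataset contains.

```python
import csv
from pathlib import Path
from typing import List, Tuple


def check_dataset(root: str, relative: str) -> Tuple[List[str], int]:
    """Return (header, number_of_data_rows) for a CSV under the datasets folder.

    Raises FileNotFoundError if the file is not at root/relative.
    """
    path = Path(root) / relative
    if not path.is_file():
        raise FileNotFoundError(f"expected dataset at {path}")
    with path.open(newline="") as f:
        reader = csv.reader(f)
        header = next(reader)  # first line holds the column names
        n_rows = sum(1 for _ in reader)
    return header, n_rows
```

For example, running `check_dataset("storage/datasets", "ETT-small/ETTm2.csv")` from the repository root raises `FileNotFoundError` if the file was placed elsewhere.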

We provide some scripts to quickly reproduce the results reported in our paper. There are two options: run the full hyperparameter search, or directly run the experiments with the hyperparameters provided in the configuration files.

Option A: Run the full hyperparameter search.

- Run the following command to generate the experiments: `make build-all path=experiments/configs/hp_search`

- Run the following script to perform training and evaluation: `./run_hp_search.sh` (you may need to run `chmod u+x run_hp_search.sh` first).

Option B: Directly run the experiments with hyperparameters provided in the configuration files.

- Run the following command to generate the experiments: `make build-all path=experiments/configs`

- Run the following script to perform training and evaluation: `./run.sh` (you may need to run `chmod u+x run.sh` first).

Finally, results can be viewed on TensorBoard by running `tensorboard --logdir storage/experiments/`, or in the `storage/experiments/experiment_name/metrics.npy` file.
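To check which generated experiments have already produced results, a small stdlib sketch can scan for `metrics.npy` files. The helper name `finished_experiments` is hypothetical. It searches recursively because this README mentions both `storage/experiments/experiment_name/metrics.npy` and `storage/experiments/deeptime/experiment_name/metrics.npy`. It does not open the files, whose internal layout is not described here.

```python
from pathlib import Path
from typing import List


def finished_experiments(root: str = "storage/experiments") -> List[str]:
    """List experiment directories (relative to root) that contain a metrics.npy file."""
    base = Path(root)
    # rglob also covers nested layouts such as <root>/deeptime/<experiment_name>/metrics.npy
    return sorted(p.parent.relative_to(base).as_posix() for p in base.rglob("metrics.npy"))
```

Run from the repository root, `finished_experiments()` returns paths such as `deeptime/experiment_name`, which can then be opened in TensorBoard or inspected directly.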

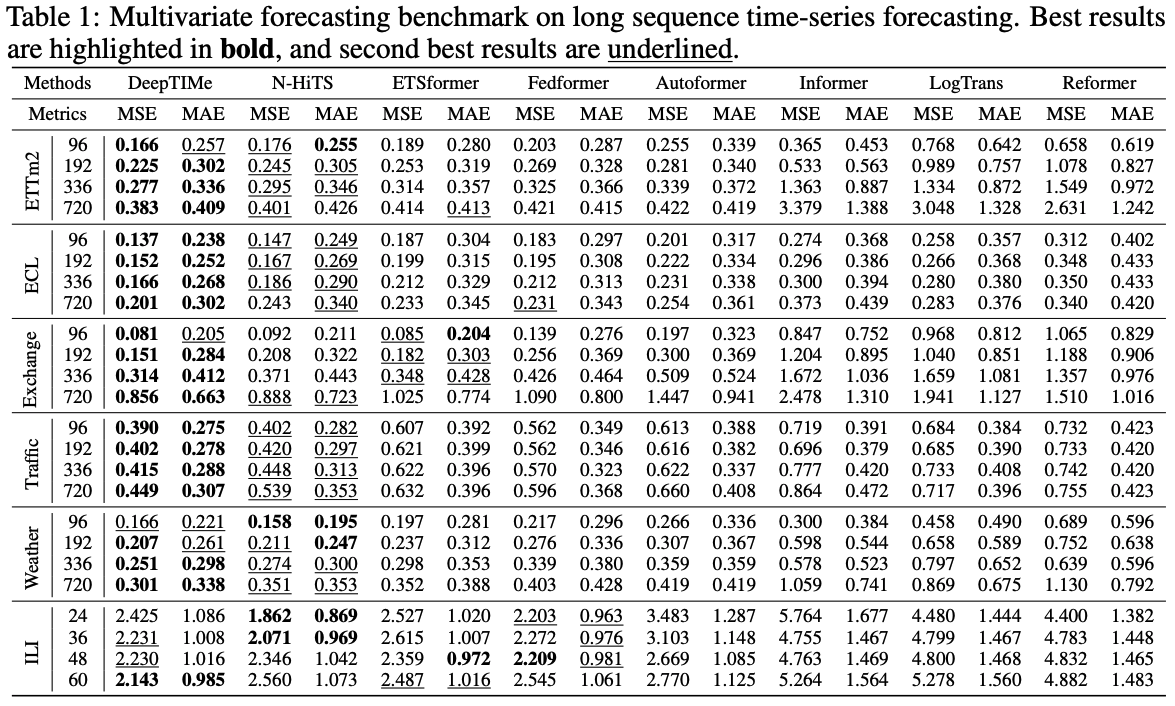

We conduct extensive experiments on both synthetic and real-world datasets, showing that DeepTIMe is highly competitive: it achieves state-of-the-art results, in terms of MSE, on 20 out of 24 settings of the multivariate forecasting benchmark.

Further details of the code repository follow. The codebase is structured to generate experiments from a `.gin` configuration file based on the `build.variables_dict` argument.
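To illustrate how one configuration file can expand into several experiments, the sketch below assumes that `build.variables_dict` maps gin parameter names to lists of candidate values and that every combination becomes one experiment. This is an assumption, not a description of the repository's code. The helper `expand_variables`, the experiment-naming scheme and the parameter names in the example (`horizon`, `lr`) are hypothetical.

```python
import itertools
from typing import Dict, List, Sequence, Tuple


def expand_variables(variables: Dict[str, Sequence]) -> List[Tuple[str, List[str]]]:
    """Expand a {parameter: [values]} grid into (experiment_name, gin_bindings) pairs.

    Assumption: one experiment per element of the Cartesian product of the value lists.
    """
    names = sorted(variables)  # fixed order keeps experiment names deterministic
    experiments = []
    for combo in itertools.product(*(variables[n] for n in names)):
        # Hypothetical naming scheme: "param=value,param=value"
        exp_name = ",".join(f"{n}={v}" for n, v in zip(names, combo))
        # One binding line per parameter, written as Python-style literals
        bindings = [f"{n} = {v!r}" for n, v in zip(names, combo)]
        experiments.append((exp_name, bindings))
    return experiments
```

With the hypothetical grid `{"horizon": [96, 192], "lr": [1e-3, 1e-4]}`, this yields four experiments, the first named `horizon=96,lr=0.001` with bindings `["horizon = 96", "lr = 0.001"]`. Each pair could then be written out as its own experiment directory.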

- First, build the experiment from a config file. We provide 2 ways to build an experiment.

  - Build a single config file: `make build config=experiments/configs/folder_name/file_name.gin`

  - Build a group of config files: `make build-all path=experiments/configs/folder_name`

- Next, run the experiment using the following command: `python -m experiments.forecast --config_path=storage/experiments/experiment_name/config.gin run`

  Alternatively, the first step generates a command file found in `storage/experiments/experiment_name/command`, which you can use with the following command: `make run command=storage/experiments/experiment_name/command`

- Finally, you can observe the results on TensorBoard with `tensorboard --logdir storage/experiments/`, or view the `storage/experiments/deeptime/experiment_name/metrics.npy` file.
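When many experiments have been built, a small driver can run only those that have not finished yet. This is a hypothetical stdlib sketch, not a replacement for `make run` or the provided shell scripts. It assumes that each generated `command` file holds a single shell command line, such as the `python -m experiments.forecast ... run` invocation above. It also assumes that a `metrics.npy` next to the command file means the experiment is done. `dry_run=True` only lists the commands.

```python
import subprocess
from pathlib import Path
from typing import List


def run_pending(root: str = "storage/experiments", dry_run: bool = True) -> List[str]:
    """Run each experiment's `command` file unless a metrics.npy already sits next to it.

    Assumption: each command file contains one shell command line.
    Returns the commands that were (or, with dry_run, would be) executed.
    """
    base = Path(root)
    pending = []
    for cmd_file in sorted(base.rglob("command")):
        if (cmd_file.parent / "metrics.npy").exists():
            continue  # already evaluated, skip it
        command = cmd_file.read_text().strip()
        pending.append(command)
        if not dry_run:
            # Executes via the shell from the current directory (the repository root).
            subprocess.run(command, shell=True, check=True)
    return pending
```

Running experiments one at a time keeps the sketch simple. `check=True` stops the loop at the first failing experiment rather than silently continuing.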

The implementation of DeepTIMe relies on resources from the following codebases and repositories; we thank the original authors for open-sourcing their work.

- https://github.com/ElementAI/N-BEATS

- https://github.com/zhouhaoyi/Informer2020

- https://github.com/thuml/Autoformer

Please consider citing our paper if you find this code useful in your research.

    @article{woo2022deeptime,
      title={DeepTIMe: Deep Time-Index Meta-Learning for Non-Stationary Time-Series Forecasting},
      author={Gerald Woo and Chenghao Liu and Doyen Sahoo and Akshat Kumar and Steven C. H. Hoi},
      year={2022},
      url={https://arxiv.org/abs/2207.06046},
    }