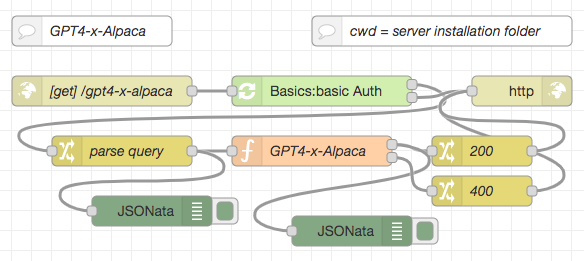

Node-RED Flow (and web page example) for the Alpaca GPT4 AI model

This repository contains a function node for Node-RED which can be used to run the Stanford Alpaca GPT4 model (a fine-tuned variant of the LLaMA model trained with transcripts of GPT-4 sessions) using llama.cpp within a Node-RED flow. Inference is done on the CPU (without requiring any special hardware) and still completes within a few seconds on a reasonably powerful computer.

Additionally, this repo also contains function nodes to tokenize a prompt or to calculate embeddings based on the GPT4-x-Alpaca model.

Having the inference, tokenization and embedding calculation as a self-contained function node gives you the possibility to create your own user interface or even use it as part of an autonomous agent.

Warning: this flow needs a 13B model. Use it only if you have at least 16GB of RAM; more is highly recommended.

Nota bene: these flows do not contain the actual model. You will have to download your own copy from HuggingFace.

If you like, you may also check out similar nodes and flows for other AI models, such as:

- Meta AI LLaMA

- Stanford Alpaca (trained with GPT-3)

- Nomic AI GPT4All (filtered version)

- Nomic AI GPT4All (unfiltered version)

- Nomic AI GPT4All-J

- Vicuna

- OpenLLaMA

- WizardLM

Just a small note: if you like this work and plan to use it, consider "starring" this repository (you will find the "Star" button on the top right of this page), so that I know which of my repositories to take most care of.

Start by creating a subfolder called ai within the installation folder of your Node-RED server. This subfolder will later store both the executables and the actual model. Using such a subfolder helps keep the folder structure of your server clean if you decide to play with other AI models as well.

The actual "heavy lifting" is done by llama.cpp. Simply follow the instructions found in section Usage of the llama.cpp docs to build the main executable for your platform.

Afterwards, rename

- `main` to `llama`,

- `tokenization` to `llama-tokenization` and

- `embedding` to `llama-embeddings`

and copy them into the subfolder ai you created before.
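If you prefer to script this step, the renaming and copying could look roughly like the following Node.js sketch. The helper name `installExecutables` and both directory arguments are assumptions made for illustration; only the file names come from the instructions above. On Windows the executables will carry an `.exe` suffix, which this sketch does not handle.

```javascript
const fs = require('node:fs');
const path = require('node:path');

// Build output name -> name expected inside the "ai" subfolder (from the text above).
const RENAMES = {
  main: 'llama',
  tokenization: 'llama-tokenization',
  embedding: 'llama-embeddings',
};

// buildDir: folder containing the llama.cpp executables (hypothetical path).
// nodeRedDir: installation folder of your Node-RED server (hypothetical path).
function installExecutables(buildDir, nodeRedDir) {
  const aiDir = path.join(nodeRedDir, 'ai');
  fs.mkdirSync(aiDir, { recursive: true }); // create "ai" if it does not exist yet

  const copied = [];
  for (const [source, target] of Object.entries(RENAMES)) {
    const from = path.join(buildDir, source);
    if (!fs.existsSync(from)) continue; // skip executables that were not built
    const to = path.join(aiDir, target);
    fs.copyFileSync(from, to);
    fs.chmodSync(to, 0o755); // make sure the copy is executable
    copied.push(target);
  }
  return copied; // names of the files that were actually copied
}
```

A call such as `installExecutables('/path/to/llama.cpp', '/path/to/node-red')` returns the list of copied files, so you can see at a glance whether one of the three executables was missing from the build.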

Just download the model from HuggingFace - it already has the proper format.

Nota bene: right now, the function node supports the 13B model only, but this may easily be changed in the function source.

Afterwards, rename the file ggml-model-q4_1.bin to ggml-gpt4-x-alpaca-13b-q4_1.bin and move (or copy) it into the same subfolder ai where you already placed the llama executable.
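Before importing the flows, it may be worth checking that the subfolder ai really contains everything. The following is a minimal sketch under the assumption that the executables have no file extension (as on Linux or macOS); `findMissingFiles` is a hypothetical helper, not part of this repository.

```javascript
const fs = require('node:fs');
const path = require('node:path');

// File names as described in the installation steps above.
const EXPECTED_FILES = [
  'llama',
  'llama-tokenization',
  'llama-embeddings',
  'ggml-gpt4-x-alpaca-13b-q4_1.bin',
];

// Returns the names of all expected files that are not present in aiDir.
function findMissingFiles(aiDir) {
  return EXPECTED_FILES.filter(
    (name) => !fs.existsSync(path.join(aiDir, name))
  );
}
```

An empty result from `findMissingFiles('/path/to/node-red/ai')` means the folder is complete; otherwise the array tells you which file still has to be copied.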

Finally, open the Flow Editor of your Node-RED server and import the contents of GPT4-x-Alpaca-Function.json. After deploying your changes, you are ready to run Alpaca GPT4 inferences directly from within Node-RED.

Additionally, you may also import the contents of GPT4-x-Alpaca-Tokenization.json if you want to tokenize prompts, or of GPT4-x-Alpaca-Embeddings.json if you want to calculate embeddings for a given text.

All function nodes expect their parameters as properties of the msg object. The prompt itself (or the input text to tokenize or calculate embeddings from) is expected in msg.payload and will later be replaced by the function result.

All properties (except the prompt or input text) are optional. If given, they should be strings (even if they contain numbers); this makes it simpler to extract them from an HTTP request.

Inference supports the following properties:

- `payload` - this is the actual prompt

- `seed` - seed value for the internal pseudo random number generator (integer, default: -1, use random seed for <= 0)

- `threads` - number of threads to use during computation (integer ≧ 1, default: 4)

- `context` - size of the prompt context (0...2048, default: 512)

- `keep` - number of tokens to keep from the initial prompt (integer ≧ -1, default: 0, -1 = all)

- `predict` - number of tokens to predict (integer ≧ -1, default: 128, -1 = infinity)

- `topk` - top-k sampling limit (integer ≧ 1, default: 40)

- `topp` - top-p sampling limit (0.0...1.0, default: 0.9)

- `temperature` - temperature (0.0...2.0, default: 0.8)

- `batches` - batch size for prompt processing (integer ≧ 1, default: 8)
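As an illustration of these conventions, the following sketch prepares a msg object for the inference node. The helper `buildInferenceMessage` is hypothetical; only the property names and the "everything as a string" rule are taken from the description above. Inside a Node-RED function node placed in front of the inference node, you would typically assign the same properties to the incoming `msg` and return it.

```javascript
// Optional inference properties, as listed above.
const INFERENCE_PROPERTIES = [
  'seed', 'threads', 'context', 'keep', 'predict',
  'topk', 'topp', 'temperature', 'batches',
];

// Builds a message whose payload is the prompt and whose optional
// properties are strings; properties that were not given are omitted
// so that the node can fall back to its defaults.
function buildInferenceMessage(prompt, options = {}) {
  const msg = { payload: String(prompt) };
  for (const name of INFERENCE_PROPERTIES) {
    const value = options[name];
    if (value !== undefined && value !== null) {
      msg[name] = String(value);
    }
  }
  return msg;
}

const exampleMsg = buildInferenceMessage('What is Node-RED?', {
  seed: 42,
  temperature: 0.7,
  predict: 256,
});
console.log(exampleMsg);
```

Unknown option names are silently dropped here, which is a design choice of the sketch and not necessarily how the function node itself behaves.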

Tokenization supports the following properties:

- `payload` - this is the actual input text

- `threads` - number of threads to use during computation (integer ≧ 1, default: 4)

- `context` - size of the prompt context (0...2048, default: 512)

Embeddings calculation supports the following properties:

- `payload` - this is the actual input text

- `seed` - seed value for the internal pseudo random number generator (integer, default: -1, use random seed for <= 0)

- `threads` - number of threads to use during computation (integer ≧ 1, default: 4)

- `context` - size of the prompt context (0...2048, default: 512)

The file GPT4-x-Alpaca-HTTP-Endpoint.json contains an example which uses the GPT4-x-Alpaca function node to answer HTTP requests. The prompt itself and any inference parameters have to be passed as query parameters; the result of the inference will then be returned in the body of the HTTP response.

Nota bene: the screenshot above shows a modified version of this flow which includes an authentication node from the author's Node-RED Authorization Examples. The flow in GPT4-x-Alpaca-HTTP-Endpoint.json comes without any authentication.

The following parameters are supported (most of them will be copied into a msg property of the same name):

- `prompt` - will be copied into `msg.payload`

- `seed` - will be copied into `msg.seed`

- `threads` - will be copied into `msg.threads`

- `context` - will be copied into `msg.context`

- `keep` - will be copied into `msg.keep`

- `predict` - will be copied into `msg.predict`

- `topk` - will be copied into `msg.topk`

- `topp` - will be copied into `msg.topp`

- `temperature` - will be copied into `msg.temperature`

- `batches` - will be copied into `msg.batches`

In order to install this flow, simply open the Flow Editor of your Node-RED server and import the contents of GPT4-x-Alpaca-HTTP-Endpoint.json.
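Once the flow is deployed, a client has to encode the prompt and any inference parameters as query parameters. The sketch below shows one way to assemble such a URL. The base URL `http://localhost:1880/` and the endpoint path `gpt4-x-alpaca` are assumptions (check the HTTP-in node of the imported flow for the actual path), and `buildRequestUrl` is a hypothetical helper.

```javascript
// Assembles the request URL for the HTTP endpoint.
// baseUrl should end with a slash so that the endpoint path is appended to it.
function buildRequestUrl(baseUrl, endpoint, prompt, parameters = {}) {
  const url = new URL(endpoint, baseUrl);
  url.searchParams.set('prompt', prompt);
  for (const [name, value] of Object.entries(parameters)) {
    // leave out empty values so that the server-side defaults apply
    if (value !== undefined && value !== null && value !== '') {
      url.searchParams.set(name, String(value));
    }
  }
  return url.toString();
}

// Assumed base URL and endpoint path, for illustration only.
const requestUrl = buildRequestUrl(
  'http://localhost:1880/', 'gpt4-x-alpaca',
  'Tell me about alpacas', { seed: 42, temperature: 0.7 }
);
console.log(requestUrl);
```

The resulting string can be handed to any HTTP client; the response body then contains the result of the inference.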

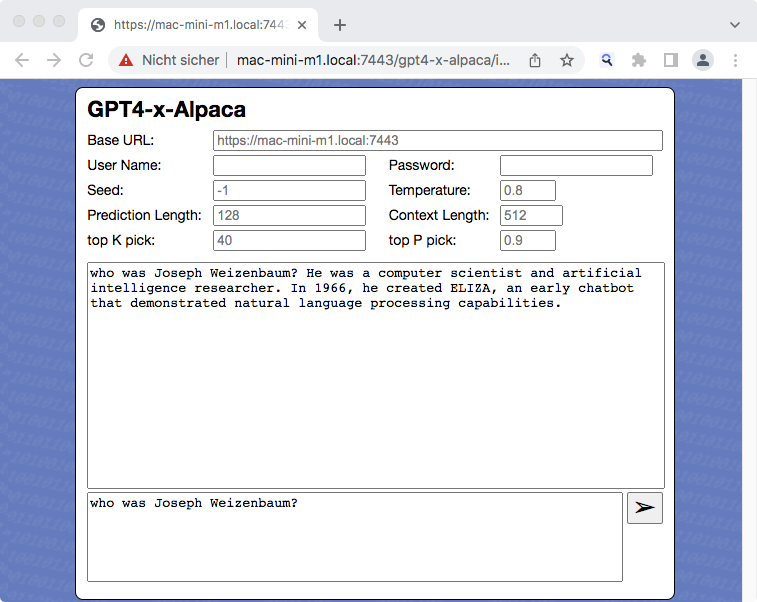

The file GPT4-x-Alpaca.html contains a trivial web page which can act as a user interface for the HTTP endpoint.

Ideally, this page should be served from the same Node-RED server that also accepts the HTTP requests for Alpaca GPT4, but this is not strictly necessary.

The input fields Base URL, User Name and Password can be used if web server and Node-RED server are at different locations: just enter the base URL of your Node-RED HTTP endpoint (without the trailing alpaca) and, if that server requires basic authentication, your user name and your password in the related input fields before you send your first prompt - otherwise, just leave all these fields empty.
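For readers who want to call the endpoint from their own code rather than from this page: basic authentication boils down to an `Authorization` header containing the Base64-encoded pair `user:password`. The following sketch shows this for Node.js; `basicAuthHeaders` is a hypothetical helper, and it is not necessarily how GPT4-x-Alpaca.html builds its requests. In a browser, `btoa` would take the place of `Buffer`.

```javascript
// Returns the request headers needed for HTTP basic authentication,
// or an empty object if no user name was entered.
function basicAuthHeaders(userName, password) {
  if (!userName) return {};
  const credentials = Buffer
    .from(`${userName}:${password ?? ''}`, 'utf8')
    .toString('base64');
  return { Authorization: `Basic ${credentials}` };
}
```

Returning an empty object for an empty user name mirrors the behaviour described above: if the fields are left empty, the request is sent without any credentials.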

The input fields Seed, Temperature, Prediction Length, Context Length, top K pick and top P pick may be used to customize some of the parameters described above - if left empty, their "placeholders" show the respective default values.

The largest field will show a transcript of your current dialog with the inference node.

Finally, the bottommost input field may be used to enter a prompt - if one is present, the "Send" button becomes enabled: press it to submit your prompt, then wait for a response.

Nota bene: inference is still done on the Node-RED server, not within your browser!