PyTorch code for Open-Set Adversarial Defense (ECCV 2020)

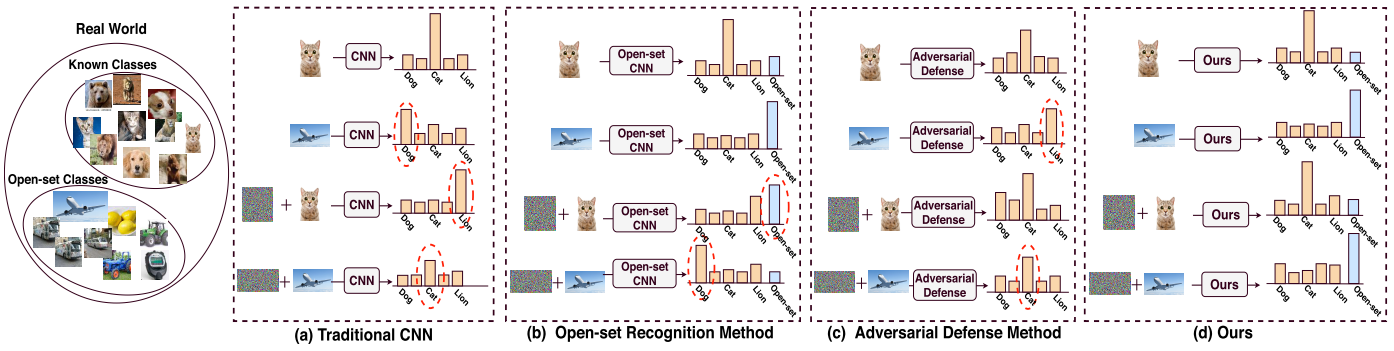

Challenges in open-set recognition and adversarial robustness. (a) Conventional CNN classifiers fail in the presence of both open-set and adversarial images. (b) Open-set recognition methods can successfully identify open-set samples, but fail on adversarial samples. (c) Adversarial defense methods are unable to identify open-set samples. (d) The proposed method can identify open-set images and is robust to adversarial images.

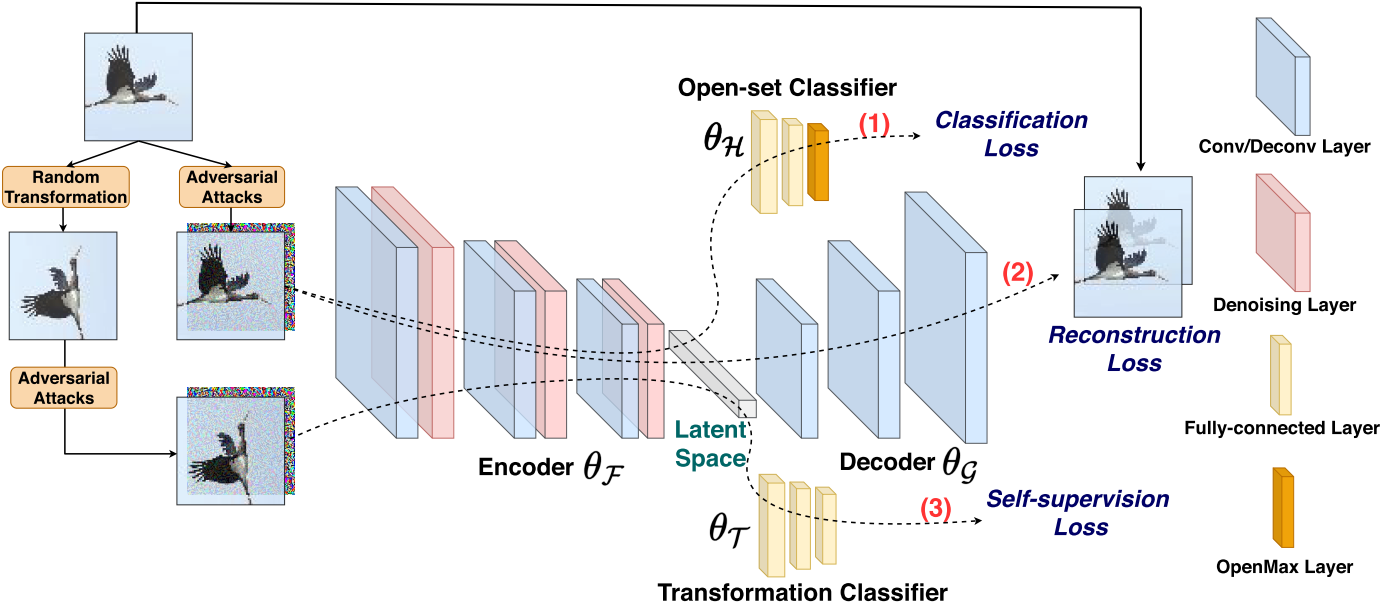

This paper therefore proposes a new research problem, Open-Set Adversarial Defense (OSAD), in which adversarial attacks are studied under an open-set setting. We propose an Open-Set Defense Network (OSDN) that learns a latent feature space that is robust to adversarial attacks and informative for identifying open-set samples.

Network structure of the proposed Open-Set Defense Network (OSDN). It consists of four components: encoder, decoder, open-set classifier and transformation classifier.


Prerequisites: Python 3.6, PyTorch 1.2, NumPy, libmr
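Before training, it can help to confirm that the prerequisites are importable. The following is a minimal sketch, not part of the repository: the helper `missing_modules` is hypothetical, and the import names `torch`, `numpy`, and `libmr` are assumed to correspond to the packages listed above.

```python
import importlib.util
import sys

# Import names assumed for the prerequisites listed in this README.
REQUIRED_MODULES = ("torch", "numpy", "libmr")


def missing_modules(names=REQUIRED_MODULES):
    """Return the top-level module names that cannot be found on the import path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Report the interpreter version and any prerequisite that is not installed.
    print("Python", ".".join(map(str, sys.version_info[:3])))
    missing = missing_modules()
    print("Missing modules:", ", ".join(missing) if missing else "none")
```

This only checks that each module can be located; it does not verify the installed versions.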


The source code folders:

- "models": Contains the network architectures of the proposed Open-Set Defense Network.

- "advertorch": Contains adversarial attacks such as FGSM and PGD. Thanks to the code from: https://github.com/BorealisAI/advertorch

- "OpensetMethods": Contains the OpenMax function. Thanks to the code from: https://github.com/lwneal/counterfactual-open-set

- "datasets": Contains datasets

- "misc": Contains initialization and some preprocessing functions
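To illustrate the kind of attack the "advertorch" folder provides, here is a minimal, dependency-free sketch of FGSM on a toy logistic-regression model. It does not use the advertorch API or the OSDN model; the functions `predict` and `fgsm` and all numeric values are hypothetical and chosen only for illustration. FGSM takes a single step of size `eps` along the sign of the loss gradient with respect to the input; PGD repeats smaller steps of this kind and projects the result back into the `eps`-ball around the original input.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 under a toy logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps, clip_min=0.0, clip_max=1.0):
    """One-step L-infinity attack: shift each input by eps along the sign of the loss gradient."""
    # For binary cross-entropy on a logistic model, dLoss/dx_i = (p - y) * w_i.
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    # Clip so the adversarial input stays in the valid input range.
    return [min(clip_max, max(clip_min, xi + eps * sign(g))) for xi, g in zip(x, grad)]


# Toy example (illustrative values only).
w, b = [2.0, -3.0], 0.0
x, y = [0.6, 0.3], 1
x_adv = fgsm(w, b, x, y, eps=0.1)
print("clean prob:", predict(w, b, x), "adversarial prob:", predict(w, b, x_adv))
```

In this toy case the perturbation is bounded by `eps` per input dimension, yet it pushes the predicted probability of the true class below 0.5.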

To run the train file: python train.py

To run the test file: python test.py

Testing generates a .txt file that contains the closed-set accuracy and AUROC scores.
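For readers unfamiliar with the two reported metrics, the following is a minimal sketch of how they are commonly defined. It is not the code used in `test.py`; the function names are hypothetical, and it assumes that a higher score means "more likely to belong to a known class".

```python
def closed_set_accuracy(predictions, labels):
    """Fraction of known-class samples whose predicted label matches the true label."""
    correct = sum(p == t for p, t in zip(predictions, labels))
    return correct / len(labels)


def auroc(known_scores, unknown_scores):
    """Probability that a random known sample scores higher than a random
    open-set sample, counting ties as one half."""
    wins = 0.0
    for k in known_scores:
        for u in unknown_scores:
            if k > u:
                wins += 1.0
            elif k == u:
                wins += 0.5
    return wins / (len(known_scores) * len(unknown_scores))


# Illustrative values only.
print(closed_set_accuracy([0, 1, 2, 1], [0, 1, 2, 2]))  # 3 of 4 correct
print(auroc([0.9, 0.8, 0.4], [0.7, 0.3]))               # 5 of 6 pairs ranked correctly
```

An AUROC of 1.0 means every known-class sample is scored above every open-set sample, while 0.5 corresponds to chance-level separation.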

Please kindly cite this paper in your publications if it helps your research:

    @inproceedings{shao2020open,
      title={Open-set adversarial defense},
      author={Shao, Rui and Perera, Pramuditha and Yuen, Pong C and Patel, Vishal M},
      booktitle={European Conference on Computer Vision},
      pages={682--698},
      year={2020},
      organization={Springer}
    }

Contact: rshaojimmy@gmail.com