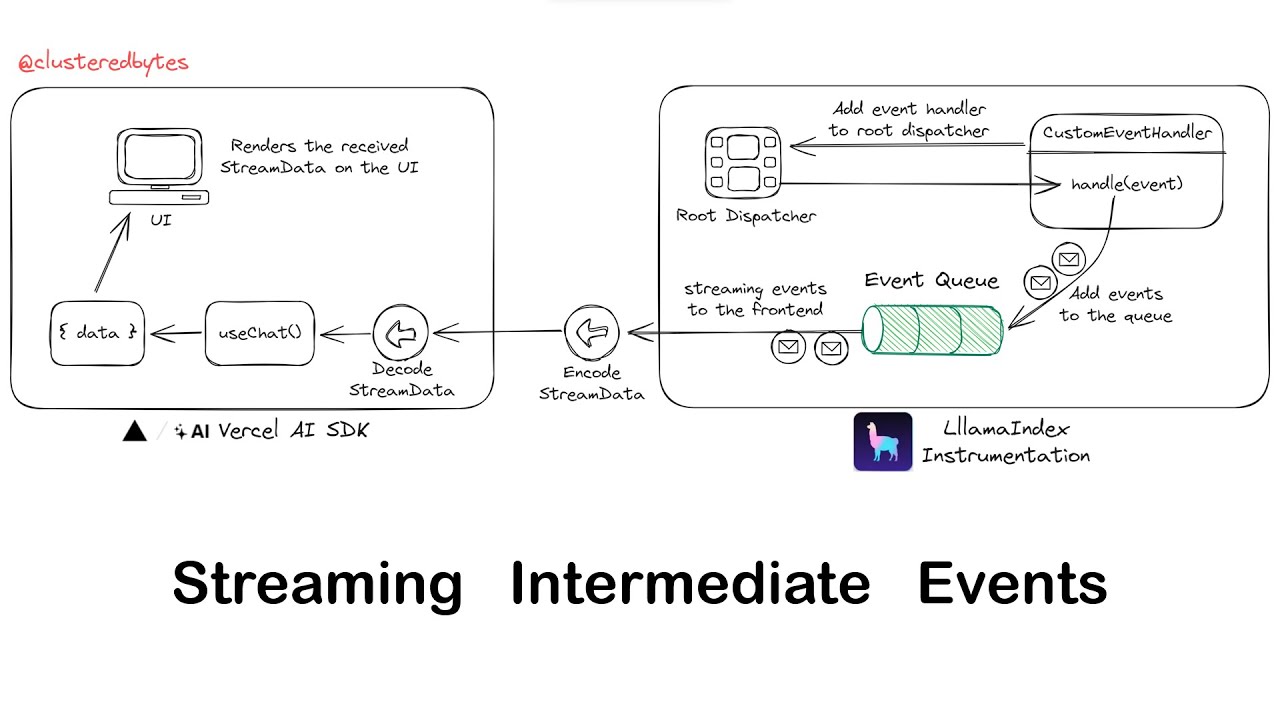

In this tutorial, we'll see how to use the LlamaIndex Instrumentation module to send the intermediate steps of a RAG pipeline to the frontend, so users can follow what the pipeline is doing while it runs.
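To make the idea concrete, here is a minimal sketch of the pattern in plain Python. It does not use the LlamaIndex API: `StepEvent`, `QueueEventHandler`, `run_pipeline`, and `drain` are hypothetical names made up for illustration, standing in for an instrumentation event handler that collects events so a server can forward them later.

```python
import queue
from dataclasses import dataclass
from typing import List


@dataclass
class StepEvent:
    """Hypothetical record describing one intermediate pipeline step."""
    name: str
    detail: str


class QueueEventHandler:
    """Stand-in for an instrumentation event handler (not the LlamaIndex class).

    Every event it receives is pushed onto a thread-safe queue.
    """

    def __init__(self) -> None:
        self.events: "queue.Queue[StepEvent]" = queue.Queue()

    def handle(self, event: StepEvent) -> None:
        self.events.put_nowait(event)


def run_pipeline(handler: QueueEventHandler, question: str) -> str:
    """Toy pipeline that reports each step to the handler before returning."""
    handler.handle(StepEvent("retrieve", f"Retrieving context for: {question}"))
    handler.handle(StepEvent("synthesize", "Generating the answer"))
    return "final answer"


def drain(handler: QueueEventHandler) -> List[StepEvent]:
    """Collect everything queued so far, in the order it was emitted."""
    collected = []
    while not handler.events.empty():
        collected.append(handler.events.get_nowait())
    return collected
```

In a real backend, the queue would be drained by the streaming response while the pipeline is still running, rather than after it finishes.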

Full video tutorial under 3 minutes 🔥👇

We use Server-Sent Events, which are received by the Vercel AI SDK on the frontend.
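As a rough illustration of what a Server-Sent Events frame looks like on the wire, the sketch below serializes a payload into the generic `event:` / `data:` text format. The helper names (`format_sse`, `stream_steps`), the `step` event name, and the `title` field are assumptions for this example; the exact payload the repo sends to the Vercel AI SDK may differ.

```python
import json
from typing import Iterable, Iterator, Optional


def format_sse(data: dict, event: Optional[str] = None) -> str:
    """Serialize one payload as a Server-Sent Events frame.

    A frame is a set of "field: value" lines terminated by a blank line.
    """
    lines = []
    if event is not None:
        lines.append(f"event: {event}")
    # json.dumps without indent yields a single line, so one data field is enough.
    lines.append(f"data: {json.dumps(data)}")
    return "\n".join(lines) + "\n\n"


def stream_steps(steps: Iterable[str]) -> Iterator[str]:
    """Yield one SSE frame per intermediate step (hypothetical payload shape)."""
    for step in steps:
        yield format_sse({"title": step}, event="step")
```

A generator like `stream_steps` is the kind of object a web framework's streaming response can iterate over, sending each frame to the browser as soon as it is produced.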

First clone the repo:

git clone https://github.com/rsrohan99/rag-stream-intermediate-events-tutorial.git

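cd rag-stream-intermediate-events-tutorial

cd into the backend directory:

cd backend

Copy the example environment file, then set your OpenAI API key in .env:

cp .env.example .env

OPENAI_API_KEY=****

Install the dependencies, run the generate script, and start the backend:

poetry install

poetry run python app/engine/generate.py

poetry run python main.py

cd into the frontend directory:

cd frontend

Copy the example environment file, install the dependencies, and start the dev server:

cp .env.example .env

npm i

npm run dev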