IEPT: Instance-Level and Episode-Level Pretext Tasks For Few-Shot Learning (ICLR2021)

Manli Zhang, Jianhong Zhang, Zhiwu Lu, Tao Xiang, Mingyu Ding, Songfang Huang

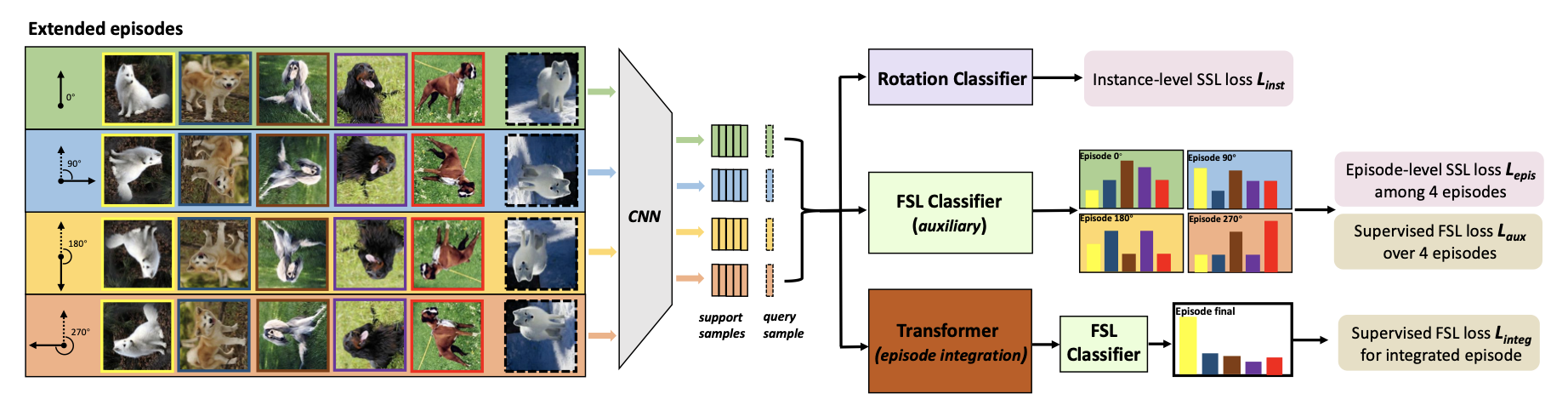

Abstract: In this work, we propose a novel Instance-level and Episode-level Pretext Task (IEPT) framework that seamlessly integrates self-supervised learning (SSL) into few-shot learning (FSL). Specifically, given an FSL episode, we first apply geometric transformations to each instance to generate extended episodes. At the instance level, transformation recognition is performed as in standard SSL. Importantly, at the episode level, two SSL-FSL hybrid learning objectives are devised: (1) the consistency across the predictions of an FSL classifier on different extended episodes is maximized as an episode-level pretext task; (2) the features extracted from each instance across different episodes are integrated to construct a single FSL classifier for meta-learning.
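The instance-level and episode-level pretext tasks above can be illustrated with a small, framework-free sketch in Python. It assumes that the geometric transformations are rotations by 0°, 90°, 180° and 270° (giving four extended episodes), represents images as toy 2-D lists, and uses a KL-to-average form for the episode-level consistency term; the paper's exact loss may differ. The names `rot90`, `extend_episode` and `consistency_loss` are hypothetical and do not come from this repository, and the feature-integration objective (2) is not shown.

```python
import math
from typing import List, Tuple

# Toy grayscale image stored as a 2-D list (assumption for illustration only).
Image = List[List[int]]


def rot90(img: Image) -> Image:
    """Rotate a 2-D image 90 degrees counter-clockwise."""
    # Transposing and then reversing the row order is a CCW rotation.
    return [list(row) for row in zip(*img)][::-1]


def extend_episode(episode: List[Image], n_rot: int = 4) -> List[Tuple[List[Image], List[int]]]:
    """Build n_rot extended episodes; episode j rotates every image by j * 90 degrees.

    The rotation index j is also returned as the instance-level pretext label
    (transformation recognition) for every image in that extended episode.
    """
    extended = []
    for j in range(n_rot):
        rotated = []
        for img in episode:
            out = img
            for _ in range(j):
                out = rot90(out)
            rotated.append(out)
        extended.append((rotated, [j] * len(episode)))
    return extended


def kl(p: List[float], q: List[float], eps: float = 1e-12) -> float:
    """KL divergence KL(p || q) between two discrete distributions."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def consistency_loss(preds: List[List[List[float]]]) -> float:
    """Episode-level consistency (assumed form).

    preds[j][i] is the class-probability vector for query i in extended episode j.
    Each prediction is pulled towards the average prediction across episodes.
    """
    n_ep, n_q = len(preds), len(preds[0])
    total = 0.0
    for i in range(n_q):
        n_cls = len(preds[0][i])
        avg = [sum(preds[j][i][c] for j in range(n_ep)) / n_ep for c in range(n_cls)]
        total += sum(kl(preds[j][i], avg) for j in range(n_ep))
    return total / (n_ep * n_q)
```

In a real pipeline the images would be tensors and the probability vectors would come from the FSL classifier applied to each extended episode; the rotation labels returned by `extend_episode` would serve as targets for the instance-level transformation-recognition head.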

The following items are required:

- Package: PyTorch 0.4+, torchvision, pyyaml and tensorboardX
- Dataset: please download the raw dataset, put the images into the folder data/miniImagenet/images, and put the split .csv file into the folder data/miniImagenet/split.
- Pre-trained weights: please download the pre-trained weights of the encoder and put them under the folder saves/initialization/mini-imagenet.
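Before training, it can help to confirm that the folders listed above exist. The following is a hypothetical helper (not part of this repository) that checks only the three directory paths named in this README, relative to the repository root; it does not validate the file contents or the names of the split files.

```python
import os
from typing import List

# Folder paths taken from this README; the checker itself is a hypothetical helper.
REQUIRED_DIRS = [
    os.path.join("data", "miniImagenet", "images"),
    os.path.join("data", "miniImagenet", "split"),
    os.path.join("saves", "initialization", "mini-imagenet"),
]


def missing_dirs(root: str = ".") -> List[str]:
    """Return the required folders that do not exist under `root`."""
    return [d for d in REQUIRED_DIRS if not os.path.isdir(os.path.join(root, d))]


if __name__ == "__main__":
    missing = missing_dirs()
    if missing:
        print("Missing folders:", ", ".join(missing))
    else:
        print("All required folders are present.")
```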

The MiniImageNet dataset is a subset of ImageNet that contains 100 classes with 600 images per class. Following the setup of previous work, we use 64 classes as SEEN categories for training, and 16 and 20 classes as two sets of UNSEEN categories for model validation and evaluation, respectively. Note that we use the raw images, which are resized to 92×92 and then center-cropped to 84×84.
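The split sizes and the crop geometry stated above can be checked with a little arithmetic. The sketch assumes square images, so resizing to 92×92 and center-cropping to 84×84 trims 4 pixels from every side; `center_crop_box` and `split_image_counts` are hypothetical helpers for illustration. In torchvision, a comparable pipeline (assumed, not taken from this repository) would be `transforms.Resize((92, 92))` followed by `transforms.CenterCrop(84)`.

```python
from typing import Dict, Tuple

# Class counts and images per class as stated in this README.
SPLIT_CLASSES = {"train": 64, "val": 16, "test": 20}
IMAGES_PER_CLASS = 600


def center_crop_box(size: int, crop: int) -> Tuple[int, int, int, int]:
    """(left, top, right, bottom) of a centered crop x crop window in a size x size image."""
    offset = (size - crop) // 2
    return (offset, offset, offset + crop, offset + crop)


def split_image_counts() -> Dict[str, int]:
    """Number of images in each split, assuming 600 images for every class."""
    return {name: n * IMAGES_PER_CLASS for name, n in SPLIT_CLASSES.items()}


if __name__ == "__main__":
    print(center_crop_box(92, 84))
    print(split_image_counts())
```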

All hyper-parameters for training our model are written in the config/*.yaml files. For example, to train the 5-way 1-shot setting on MiniImageNet, run:

$ python train.py --way 5 --shot 1 --cfg_file ./config/mini-imagenet/conv64.yaml
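In the 5-way 1-shot setting, each training episode draws 5 SEEN classes with one labelled support image per class, plus a set of query images to classify. The sketch below is a generic N-way K-shot sampler written under assumptions: 15 query images per class is a common choice in the few-shot literature but is not stated here, and `sample_episode` is a hypothetical helper, not the sampler used by train.py. Labels are re-indexed to 0..way-1 within each episode.

```python
import random
from typing import Dict, List, Optional, Tuple


def sample_episode(
    labels_to_items: Dict[str, List[str]],
    way: int = 5,
    shot: int = 1,
    query: int = 15,
    rng: Optional[random.Random] = None,
) -> Tuple[List[Tuple[str, int]], List[Tuple[str, int]]]:
    """Sample one N-way K-shot episode as (support, query) lists of (item, episode_label)."""
    rng = rng or random.Random()
    # Pick `way` distinct classes; sorting keeps sampling reproducible for a fixed seed.
    classes = rng.sample(sorted(labels_to_items), way)
    support, queries = [], []
    for episode_label, cls in enumerate(classes):
        # Draw disjoint support and query items from the same class.
        items = rng.sample(labels_to_items[cls], shot + query)
        support += [(x, episode_label) for x in items[:shot]]
        queries += [(x, episode_label) for x in items[shot:]]
    return support, queries
```

For 5-way 1-shot with 15 queries per class, each episode holds 5 support items and 75 query items.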

To evaluate a trained model, run eval.py:

$ python eval.py --model_type ConvNet --dataset MiniImageNet --model_path ./checkpoints/XXX/XXX.pth --shot 1 --way 5 --gpu 0 --embed_size 64

where ./checkpoints/XXX/XXX.pth is the path to the trained model.
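Few-shot models are commonly evaluated by averaging accuracy over many randomly sampled test episodes and reporting a 95% confidence interval. Assuming eval.py follows this convention (not confirmed by this README), the summary statistic can be sketched as follows; `mean_and_ci95` is a hypothetical helper and the 1.96 factor is the normal-approximation z-score.

```python
import math
import statistics
from typing import Sequence, Tuple


def mean_and_ci95(accuracies: Sequence[float]) -> Tuple[float, float]:
    """Mean accuracy and 95% confidence half-width over per-episode accuracies."""
    mean = statistics.mean(accuracies)
    # Sample standard deviation divided by sqrt(n) gives the standard error of the mean.
    half_width = 1.96 * statistics.stdev(accuracies) / math.sqrt(len(accuracies))
    return mean, half_width
```

For example, `mean, h = mean_and_ci95(per_episode_acc)` followed by `print(f"{100 * mean:.2f} ± {100 * h:.2f}")` gives the usual "accuracy ± interval" format. Some codebases use the population standard deviation (`numpy.std` with its default `ddof=0`) instead of `statistics.stdev`; with thousands of episodes the difference is negligible.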

If this repository helps your work, please cite the following paper:

@inproceedings{zhang2021iept,
  title={{IEPT}: Instance-Level and Episode-Level Pretext Tasks for Few-Shot Learning},
  author={Manli Zhang and Jianhong Zhang and Zhiwu Lu and Tao Xiang and Mingyu Ding and Songfang Huang},
  booktitle={International Conference on Learning Representations (ICLR)},
  year={2021},
  url={https://openreview.net/forum?id=xzqLpqRzxLq}
}

We thank the following repos for providing helpful components/functions in our work.