https://www.rungalileo.io/hallucinationindex

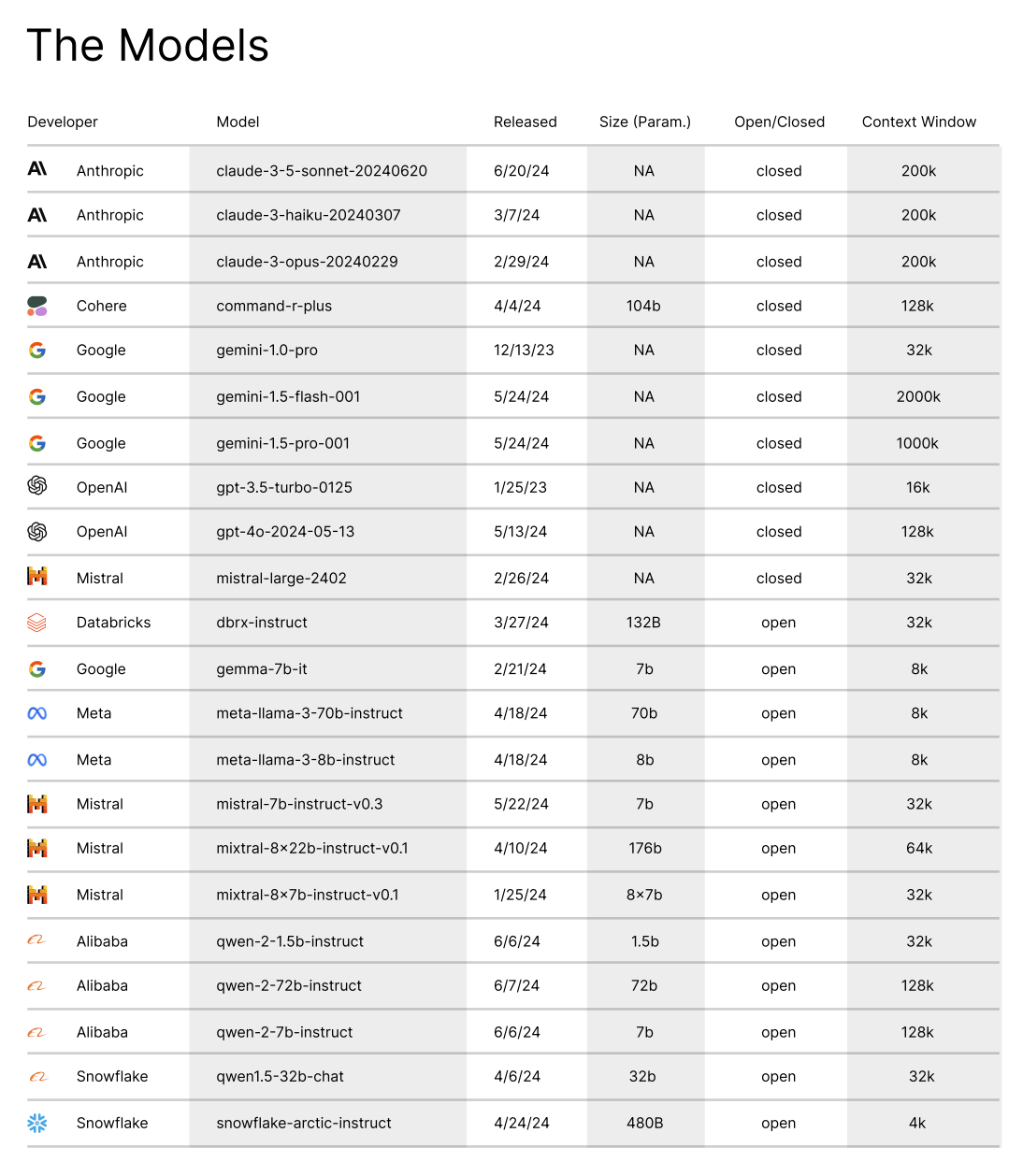

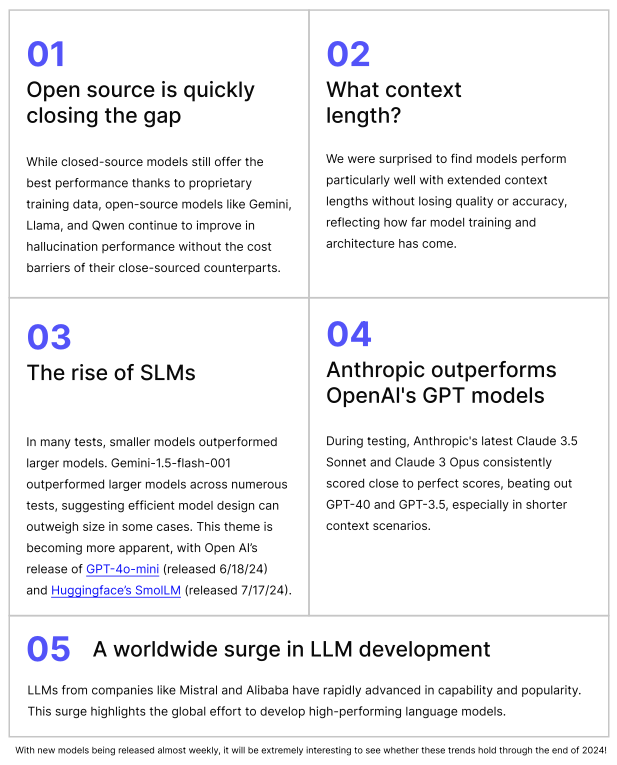

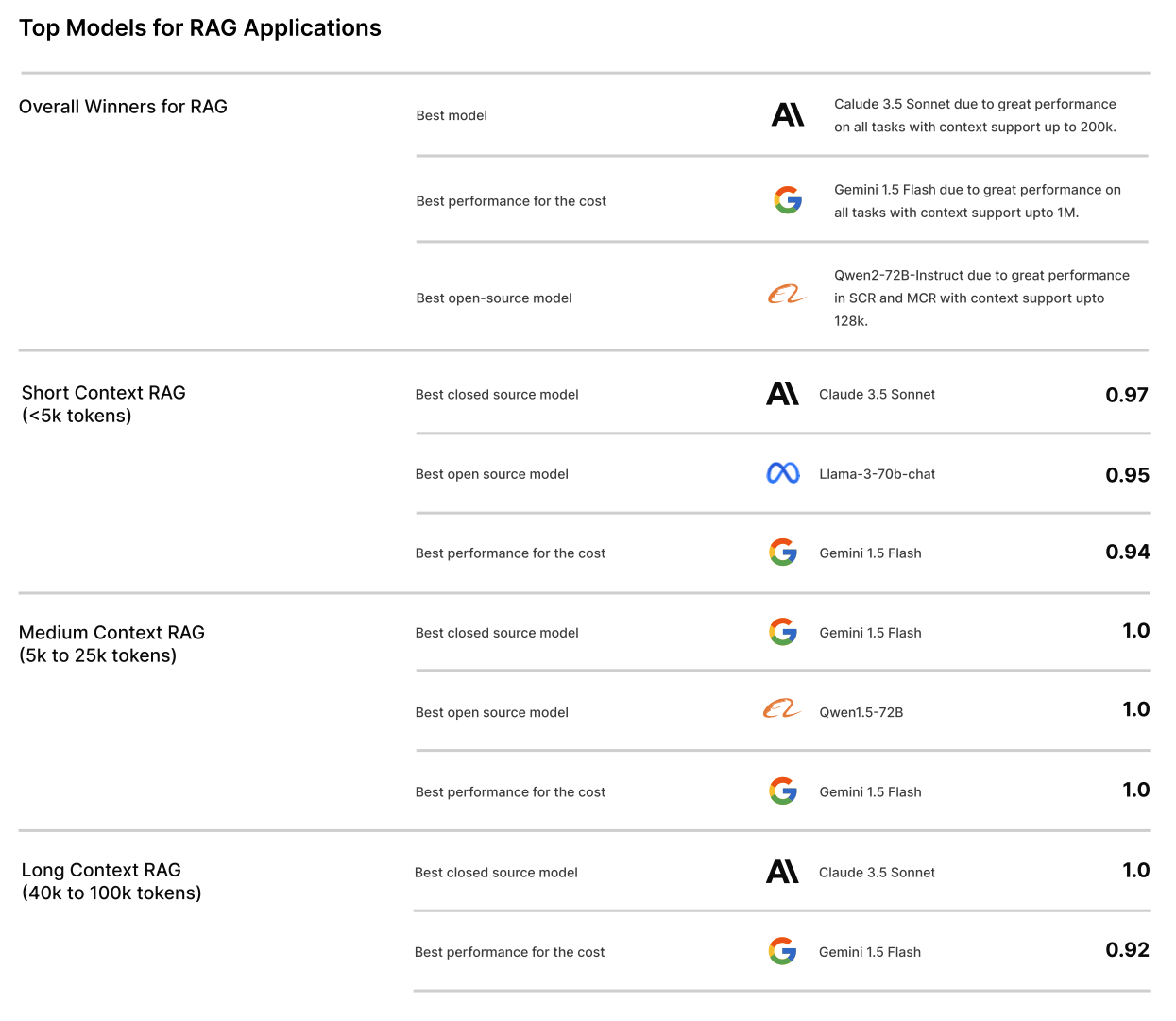

There were two key LLM attributes we wanted to test as part of this Index: context length and open- vs. closed-source models.

With the rising popularity of RAG, we wanted to see how context length affects model performance. Providing an LLM with context data is akin to giving a student a cheat sheet for an open-book exam. We tested three scenarios:

| Context Length | Task Description |
|---|---|
| Short Context | Provide the LLM with fewer than 5k tokens of context data, roughly a few pages of information. |
| Medium Context | Provide the LLM with 5k to 25k tokens of context data, roughly a book chapter. |
| Long Context | Provide the LLM with 40k to 100k tokens of context data, roughly an entire book. |
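As an illustration only, the token ranges in the table can be expressed as a small classifier. The function name `context_scenario` is hypothetical, and token counts are assumed to come from whatever tokenizer the evaluated model uses. The table leaves 25k to 40k tokens uncovered, so such lengths return `None` here.

```python
from typing import Optional


def context_scenario(num_tokens: int) -> Optional[str]:
    """Map a context size in tokens to the Index's scenario (hypothetical helper).

    Returns None for sizes outside every tested range (e.g. 25k-40k or above 100k).
    """
    if num_tokens < 0:
        raise ValueError("num_tokens must be non-negative")
    if num_tokens < 5_000:                 # short: fewer than 5k tokens
        return "short"
    if 5_000 <= num_tokens <= 25_000:      # medium: 5k to 25k tokens
        return "medium"
    if 40_000 <= num_tokens <= 100_000:    # long: 40k to 100k tokens
        return "long"
    return None                            # not covered by any tested scenario
```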

The open-source vs. closed-source software debate has raged since the Free Software Movement (FSM) of the 1980s, and it has reached a fever pitch during the LLM arms race. The common assumption is that closed-source LLMs, with their access to proprietary training data, will perform better; we wanted to put this assumption to the test.

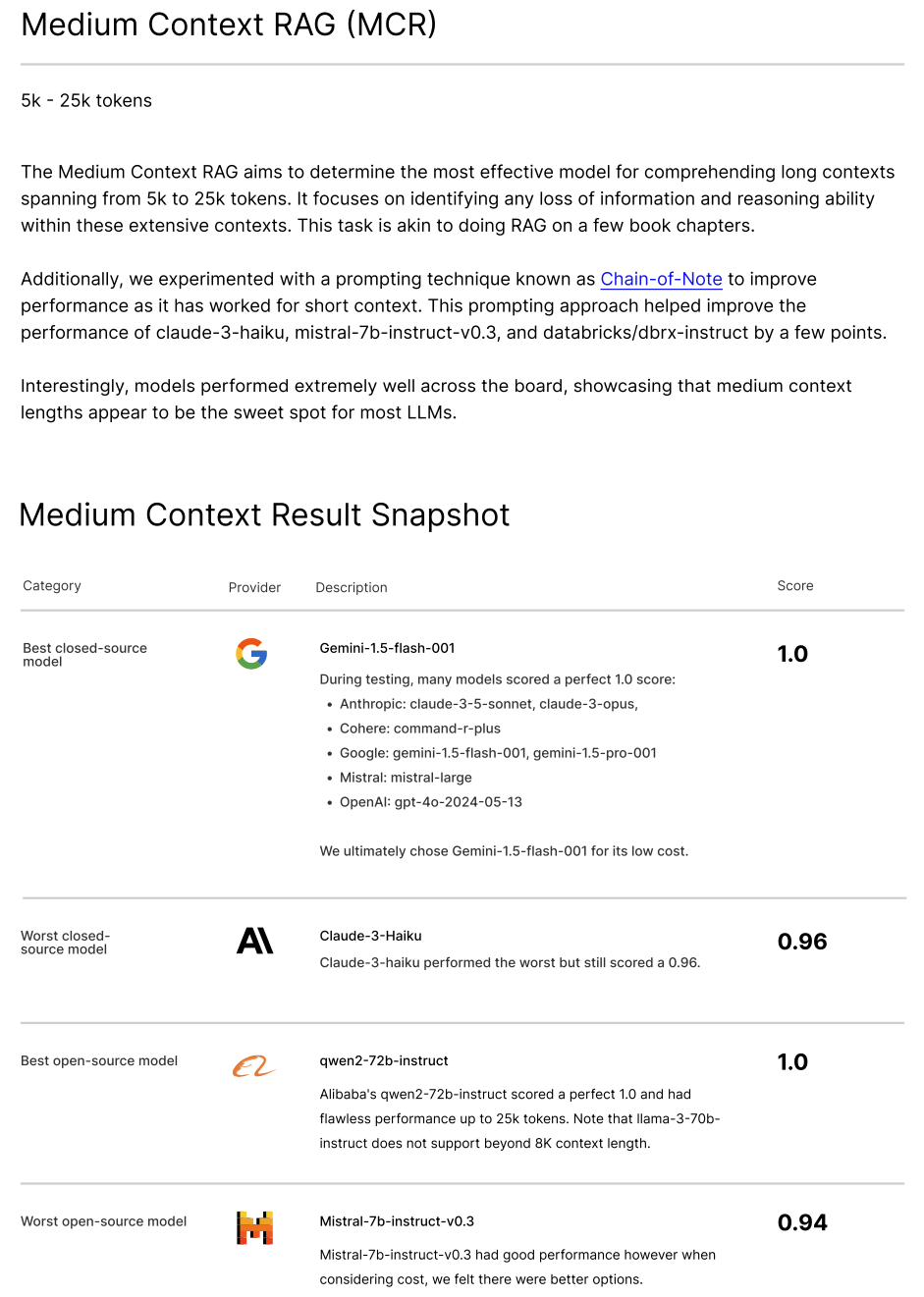

We experimented with a prompting technique known as Chain-of-Note, which has shown promise for enhancing performance in short-context scenarios, to see if it similarly benefits medium and long contexts.
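Chain-of-Note is generally described as having the model write a short reading note on each retrieved passage, judging whether it is relevant, before producing a final answer. The sketch below builds a prompt in that style; the wording and the `chain_of_note_prompt` helper are hypothetical and are not the exact prompt used in this Index.

```python
from typing import List


def chain_of_note_prompt(question: str, passages: List[str]) -> str:
    """Build an illustrative Chain-of-Note style prompt (hypothetical wording)."""
    parts = ["Read the passages below and answer the question."]
    # Number each passage so the model can refer to it in its notes.
    for i, passage in enumerate(passages, start=1):
        parts.append(f"Passage {i}:\n{passage}")
    parts.append(f"Question: {question}")
    # Step 1: one reading note per passage, assessing its relevance.
    parts.append(
        "First, write a brief reading note for each passage, stating whether it is "
        "relevant to the question and what it contributes."
    )
    # Step 2: answer from the notes, with an explicit fallback when nothing is relevant.
    parts.append(
        "Then give a final answer based only on the relevant notes. "
        'If no passage contains the answer, reply "unknown".'
    )
    return "\n\n".join(parts)
```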

We tested 22 models (10 closed-source and 12 open-source) from leading foundation model providers such as OpenAI, Anthropic, Meta, Google, Mistral, and more.

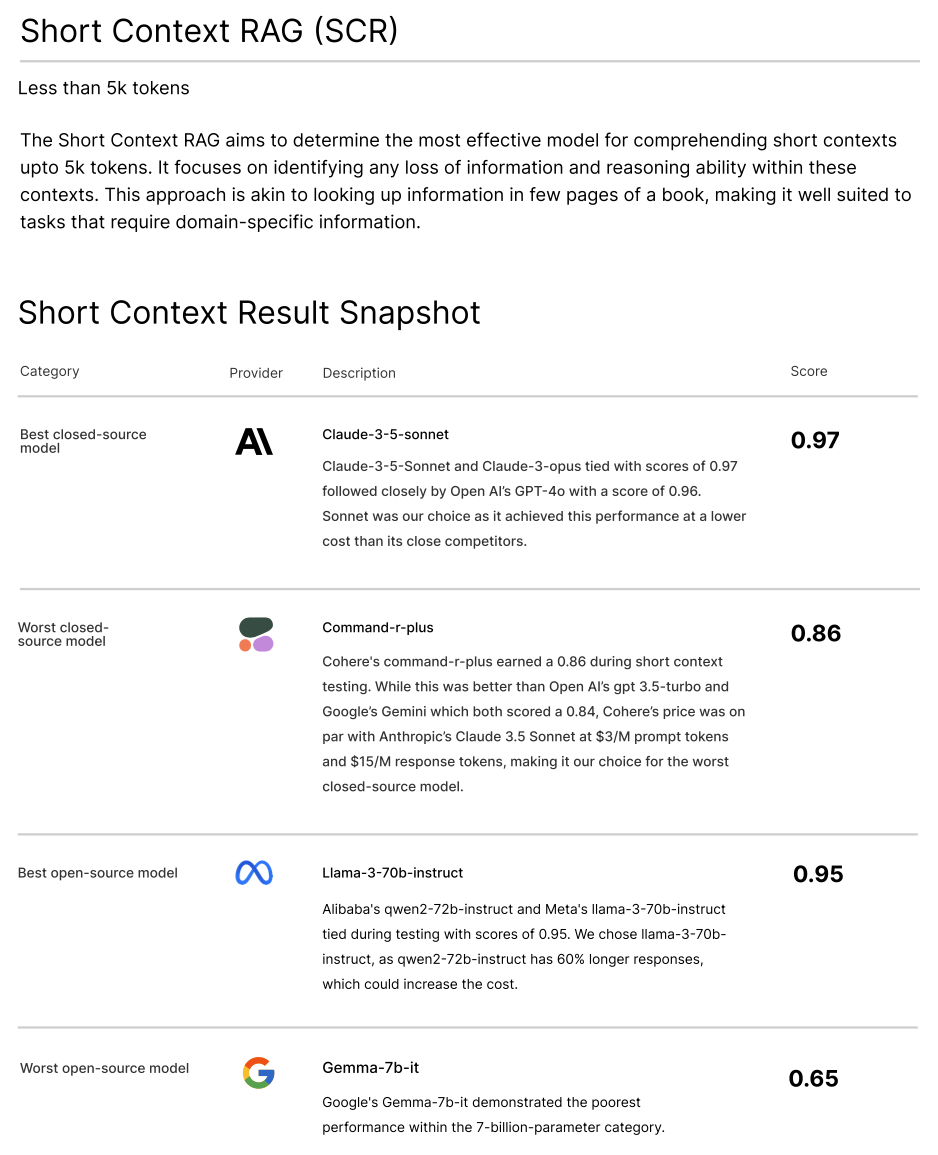

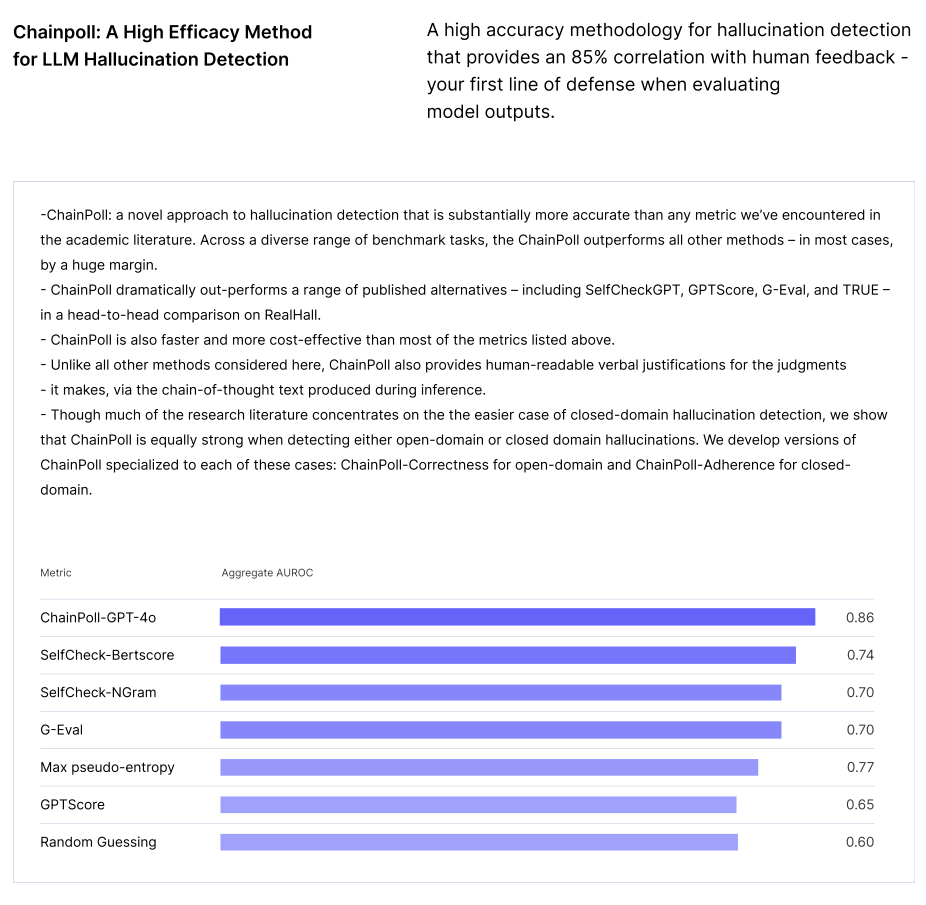

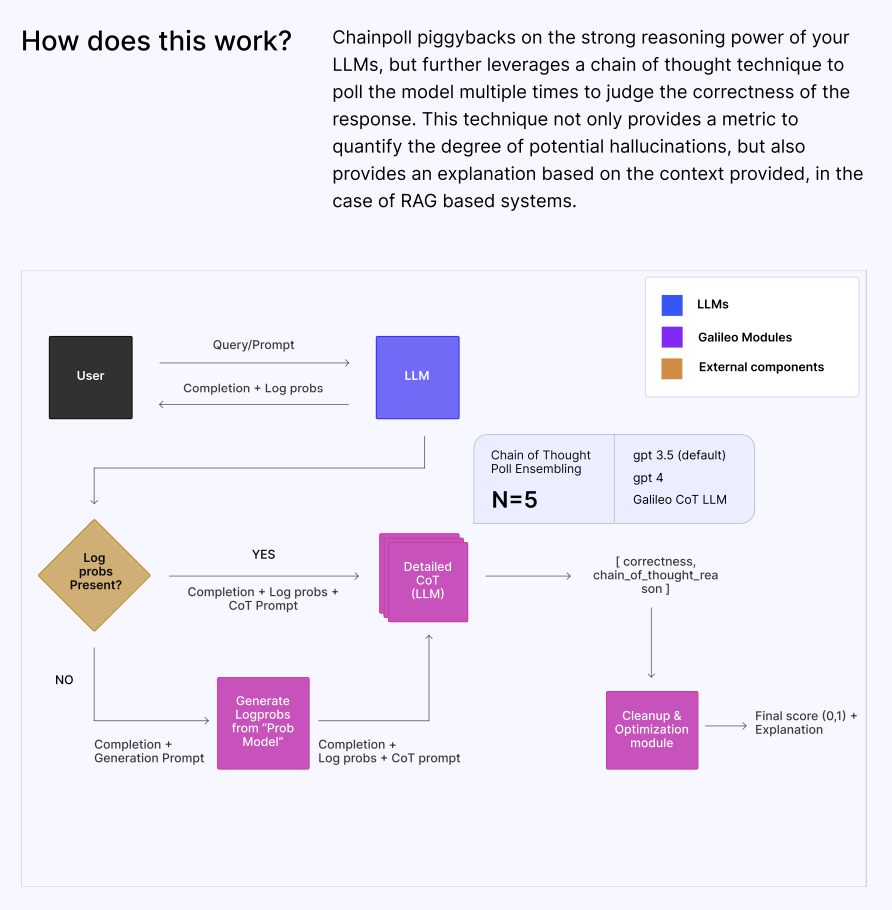

We evaluated short-context RAG (SCR) on a rigorous set of datasets to test each model's robustness in handling short contexts. One of our key methodologies was ChainPoll with GPT-4o, which polls GPT-4o multiple times using chain-of-thought prompting. This allows us to:

- Quantify potential hallucinations.

- Offer context-based explanations, a crucial feature for RAG systems.
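A minimal sketch of the ChainPoll idea as summarized above: poll a judge several times with a chain-of-thought prompt, then aggregate the verdicts into a score while keeping each explanation. The `judge` callable is a hypothetical stand-in for a GPT-4o call that returns its reasoning and a yes/no verdict; the default number of polls and the aggregation (fraction of polls judging the response supported) are assumptions, not published parameters.

```python
from typing import Callable, List, Tuple

# Hypothetical judge: takes (context, response) and returns (reasoning, supported).
# In a real setup this would wrap a chain-of-thought call to a judge LLM such as GPT-4o.
Judge = Callable[[str, str], Tuple[str, bool]]


def chainpoll_score(
    context: str, response: str, judge: Judge, n_polls: int = 5
) -> Tuple[float, List[str]]:
    """Poll the judge n_polls times and aggregate the verdicts.

    Returns (score, explanations), where score is the fraction of polls that judged
    the response to be supported by the context (1.0 means every poll agreed).
    """
    if n_polls < 1:
        raise ValueError("n_polls must be at least 1")
    verdicts: List[bool] = []
    explanations: List[str] = []
    for _ in range(n_polls):
        reasoning, supported = judge(context, response)
        verdicts.append(bool(supported))
        explanations.append(reasoning)  # kept so the score can be explained
    return sum(verdicts) / n_polls, explanations
```

Returning the judge's reasoning alongside the score is what lets the result double as a context-based explanation, rather than only a number.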

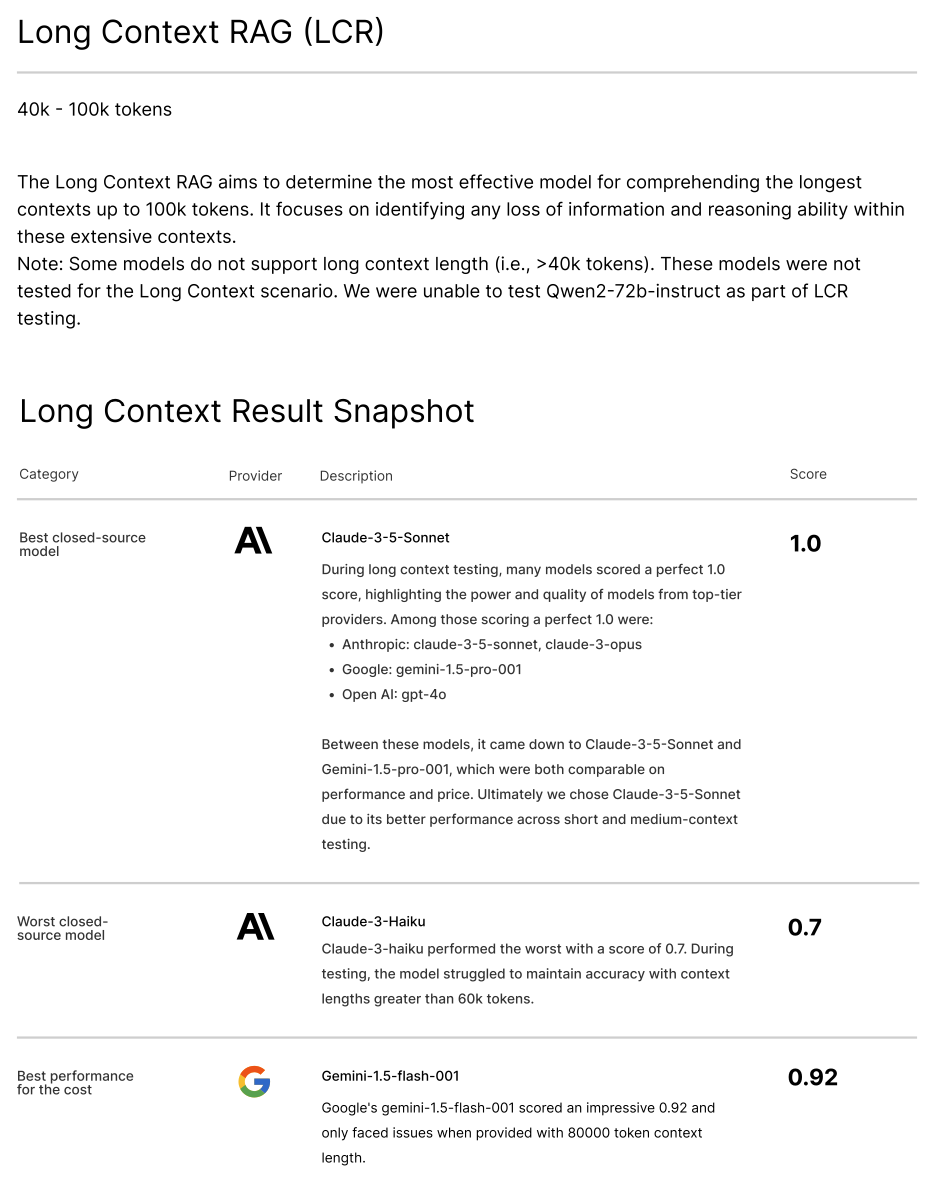

For medium and long contexts, our focus was on assessing how well models comprehend extensive texts. The procedure involved:

- Extracting text from 10,000 recent documents from a single company.

- Dividing the text into chunks and designating one as the "needle chunk."

- Constructing retrieval questions that can be answered using the needle chunk embedded in the context.

- Testing the following context lengths:

  - Medium: 5k, 10k, 15k, 20k, 25k tokens

  - Long: 40k, 60k, 80k, 100k tokens

The setup was designed to meet these requirements:

- All text in the context must come from a single domain.

- Each question must be answered correctly when only a short context is given, so that any errors at longer lengths can be attributed to the added context.

- Questions must not be answerable from pre-training memory or general knowledge.

- The influence of information position is measured by keeping everything constant except the location of the needle.

- Standard datasets are avoided to prevent test leakage.
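The needle-chunk procedure and the position requirement can be sketched as follows. Everything here is an assumption for illustration: `build_haystack` and `count_tokens` are hypothetical names, whitespace splitting stands in for a real tokenizer, and the needle's location is given as a fraction of the way through the context.

```python
from typing import List


def count_tokens(text: str) -> int:
    # Stand-in tokenizer (assumption): whitespace split. A real run would use
    # the evaluated model's own tokenizer.
    return len(text.split())


def build_haystack(
    needle: str, filler_chunks: List[str], target_tokens: int, position: float
) -> str:
    """Fill up to target_tokens with same-domain filler and insert the needle.

    position is in [0, 1]: 0.0 puts the needle first, 1.0 puts it last. The filler
    chunks and their order stay fixed, so only the needle's location changes.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0 and 1")
    budget = target_tokens - count_tokens(needle)
    selected: List[str] = []
    used = 0
    # Take filler chunks in their given order until the next one would exceed the budget.
    for chunk in filler_chunks:
        n = count_tokens(chunk)
        if used + n > budget:
            break
        selected.append(chunk)
        used += n
    index = round(position * len(selected))
    return "\n\n".join(selected[:index] + [needle] + selected[index:])
```

Sweeping `target_tokens` over the listed lengths and `position` over a few values such as 0.0, 0.5, and 1.0, with the filler order held fixed, varies only where the needle appears.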


Context adherence was evaluated with a custom LLM-based assessment that checks whether the response contains the relevant answer.
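One way to structure an LLM-based answer check like this is a judge prompt that compares the response against the expected answer from the needle chunk, plus a strict parser for the judge's verdict. The prompt wording, the `VERDICT:` line format, and both function names are hypothetical; the actual assessment used for the Index may differ.

```python
import re

# Hypothetical verdict format: the judge must end its output with "VERDICT: YES" or "VERDICT: NO".
_VERDICT = re.compile(r"VERDICT:\s*(YES|NO)\s*$", re.IGNORECASE)


def answer_check_prompt(question: str, reference_answer: str, response: str) -> str:
    """Build a hypothetical judge prompt asking whether the response contains the answer."""
    return (
        "You are grading a model response.\n"
        f"Question: {question}\n"
        f"Reference answer (from the needle chunk): {reference_answer}\n"
        f"Model response: {response}\n"
        "Does the model response contain the reference answer? "
        "Explain briefly, then end with a line 'VERDICT: YES' or 'VERDICT: NO'."
    )


def parse_verdict(judge_output: str) -> bool:
    """Return True for YES and False for NO; raise if the output has no final verdict line."""
    match = _VERDICT.search(judge_output.strip())
    if match is None:
        raise ValueError("judge output has no VERDICT line")
    return match.group(1).upper() == "YES"
```

Failing loudly on a missing verdict, instead of defaulting to NO, keeps malformed judge outputs from being silently counted as errors.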

To evaluate each model's propensity to hallucinate, we employed a high-performance evaluation technique that assesses context adherence and factual accuracy. Learn more about Galileo’s Context Adherence and ChainPoll.

For an in-depth understanding, we recommend checking out https://www.rungalileo.io/hallucinationindex.