avail-light is a data availability light client with the following functionalities:

- Listening on the Avail network for finalized blocks

- Random sampling and proof verification of a predetermined number of cells (`{row, col}` pairs) on each new block. After successful block verification, confidence is calculated for a number of cells (`N`) in a matrix, with `N` depending on the percentage of certainty the light client wants to achieve.

- Data reconstruction through the application client.

- HTTP endpoints exposing relevant data, both from the light and application clients
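The relationship between the number of sampled cells `N` and the resulting confidence can be sketched as below. This is a minimal Rust sketch that assumes, as is common for 2x erasure-coded data but not stated in this README, that each successfully verified random cell at least halves the probability that unavailable data goes undetected, so `N` samples give a confidence of `1 - 2^-N`. The function names are hypothetical and are not taken from the avail-light codebase.

```rust
/// ASSUMPTION: each verified random sample at least halves the chance of
/// missing withheld data. Hypothetical helper, not avail-light's API.
/// Returns the confidence (in percent) after `samples` successful verifications.
pub fn confidence_from_samples(samples: u32) -> f64 {
    100.0 * (1.0 - 0.5_f64.powi(samples as i32))
}

/// Smallest N such that `confidence_from_samples(N) >= confidence` (percent).
/// Hypothetical helper, not avail-light's API.
pub fn cells_for_confidence(confidence: f64) -> u32 {
    assert!(confidence > 0.0 && confidence < 100.0, "confidence must be in (0, 100)");
    // Allowed probability that withheld data goes undetected.
    let failure = 1.0 - confidence / 100.0;
    // Solve 2^-N <= failure for the smallest integer N.
    (-failure.log2()).ceil() as u32
}
```

Under this model, `confidence = 99.9` requires 10 sampled cells and `confidence = 92.0` requires 4.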

-

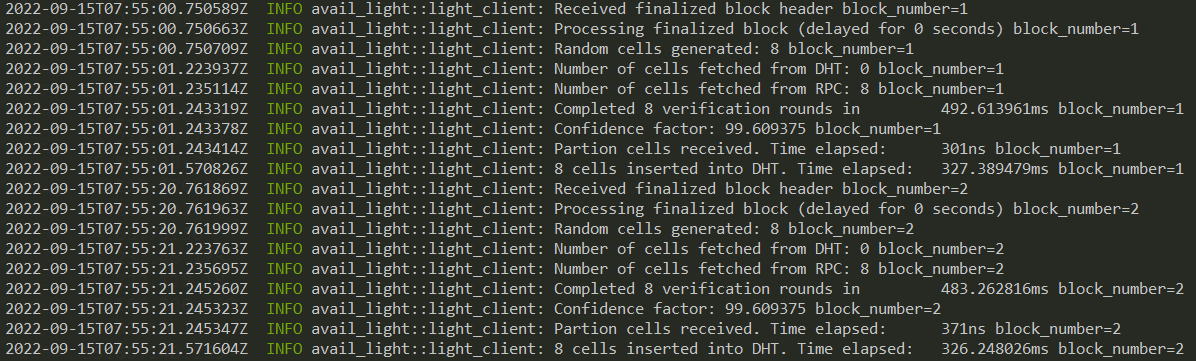

Light-client Mode: The basic mode of operation and is always active no matter the mode selected. If an

App_IDis not provided (or is =0), this mode will commence. On each header received the client does random sampling using two mechanisms:- DHT - client first tries to retrieve cells via Kademlia.

- RPC - if DHT retrieve fails, the client uses RPC calls to Avail nodes to retrieve the needed cells. The cells not already found in the DHT will be uploaded.

Once the data is received, light client verifies individual cells and calculates the confidence, which is then stored locally.

- App-Specific Mode: If an `App_ID` > 0 is given in the config file, the application client (part of the light client) downloads all the relevant app data, reconstructs it and persists it locally. Reconstructed data is then available to be accessed via an HTTP endpoint. (WIP)

- Fat-Client Mode: The client retrieves larger contiguous chunks of the matrix on each block via RPC calls to an Avail node, and stores them on the DHT. This mode is activated when the `block_matrix_partition` parameter is set in the config file, and is mainly used with the `disable_proof_verification` flag because of the resource cost of cell validation. IMPORTANT: disabling proof verification introduces a trust assumption towards the node, namely that the data it provides is correct.

- Crawl-Client Mode: Active if the `crawl` feature is enabled and the `crawl_block` parameter is set to `true`. The client crawls cells from the DHT for the entire block and calculates the success rate. Crawled cell proofs are not verified, nor is a row commitment equality check performed. Crawling of each block is delayed by the `crawl_block_delay` parameter; the delay should be long enough to compensate for the crawling of large blocks. The success rate is emitted in logs and metrics. The crawler can run in three modes: `cells`, `rows` and `both`. The default mode is `cells`, and it can be configured with the `crawl_block_mode` parameter.
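The DHT-first, RPC-fallback retrieval described for the light-client mode can be sketched in Rust as follows. The `CellSource` trait, `MapSource`, `Position`, `Fetched` and `fetch_cells` are hypothetical names introduced for illustration. Real retrieval is asynchronous, goes over libp2p Kademlia and the node's RPC, and verifies each cell's proof; none of that is modelled here. The sketch only shows the control flow: query the DHT, ask RPC for the remainder, and mark RPC-sourced cells for upload to the DHT.

```rust
use std::collections::HashMap;

/// Position of a cell in the extended data matrix (hypothetical type).
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash, PartialOrd, Ord)]
pub struct Position {
    pub row: u32,
    pub col: u16,
}

/// Hypothetical abstraction over a cell source (the DHT or an Avail node's RPC).
pub trait CellSource {
    /// Returns the cells it could find; unserved positions are simply absent.
    fn fetch(&self, positions: &[Position]) -> HashMap<Position, Vec<u8>>;
}

/// In-memory stand-in for either source, used for illustration and testing.
pub struct MapSource(pub HashMap<Position, Vec<u8>>);

impl CellSource for MapSource {
    fn fetch(&self, positions: &[Position]) -> HashMap<Position, Vec<u8>> {
        positions
            .iter()
            .filter_map(|p| self.0.get(p).map(|cell| (*p, cell.clone())))
            .collect()
    }
}

/// Outcome of one sampling round.
pub struct Fetched {
    /// Every cell obtained from either source (proofs still to be verified).
    pub cells: HashMap<Position, Vec<u8>>,
    /// Cells that came from RPC and should be uploaded to the DHT.
    pub to_upload: Vec<Position>,
    /// Positions that neither source could provide.
    pub missing: Vec<Position>,
}

/// Try the DHT first, then ask RPC only for what the DHT did not return.
pub fn fetch_cells(dht: &dyn CellSource, rpc: &dyn CellSource, positions: &[Position]) -> Fetched {
    let mut cells = dht.fetch(positions);
    let remaining: Vec<Position> = positions
        .iter()
        .copied()
        .filter(|p| !cells.contains_key(p))
        .collect();

    let from_rpc = rpc.fetch(&remaining);
    let mut to_upload: Vec<Position> = from_rpc.keys().copied().collect();
    to_upload.sort();
    cells.extend(from_rpc);

    let mut missing: Vec<Position> = remaining
        .into_iter()
        .filter(|p| !cells.contains_key(p))
        .collect();
    missing.sort();
    missing.dedup();

    Fetched { cells, to_upload, missing }
}
```

Keeping the positions that neither source returned in `missing` makes it easy to check whether enough cells were obtained for the configured confidence.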

Download the Light Client from the releases page.

Light Client can also be built from source:

```bash
git clone https://github.com/availproject/avail-light.git
cd avail-light
cargo build --release
```

The resulting `avail-light` binary can be found in the `target/release` directory.

Alternatively, you can use Docker to build and run the light client locally. Keep in mind that the Docker image will fail unless you have provided a `config.yaml` during the build process:

```bash
docker build -t avail-light .
```

It will cache the dependencies on the first build, after which you can run the image like:

```bash
docker run avail-light
```

For local development, a couple of prerequisites have to be met.

- Run the Avail node. For this setup, we'll run it in `dev` mode:

  ```bash
  ./data-avail --dev --enable-kate-rpc
  ```

- A bootstrap node is required for deploying the Light Client(s) locally. Once the bootstrap has been downloaded and started, run the following command:

  ```bash
  ./avail-light --network local
  ```

A configuration file can also be used for the local deployment, as was the case for the testnet.

Example configuration file:

```toml
# config.yaml
log_level = "info"
http_server_host = "127.0.0.1"
http_server_port = 7007
secret_key = { seed = "avail" }
port = 37000
full_node_ws = ["ws://127.0.0.1:9944"]
app_id = 0
confidence = 92.0
avail_path = "avail_path"
bootstraps = ["/ip4/127.0.0.1/tcp/39000/p2p/12D3KooWMm1c4pzeLPGkkCJMAgFbsfQ8xmVDusg272icWsaNHWzN"]
```

The full configuration reference can be found below.

NOTE

Flags and options take precedence over the configuration file if both are set (e.g. the `--port` option overrides the `port` parameter from the config file).
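The precedence rule can be illustrated with a minimal Rust sketch. The `Config` and `CliOpts` types and the `apply_cli` function below are hypothetical and trimmed to three fields; the light client's real option parsing is not shown. Each command-line value is modelled as an `Option`, and a value that is present replaces the one loaded from the configuration file.

```rust
/// Hypothetical, trimmed-down view of values loaded from the config file.
#[derive(Debug, Clone, PartialEq)]
pub struct Config {
    pub port: u16,
    pub app_id: u32,
    pub log_level: String,
}

/// Hypothetical command-line options; `None` means "flag not given".
#[derive(Debug, Default)]
pub struct CliOpts {
    pub port: Option<u16>,
    pub app_id: Option<u32>,
    pub verbosity: Option<String>,
}

/// Flags that were set override the corresponding config-file values.
pub fn apply_cli(mut cfg: Config, cli: CliOpts) -> Config {
    if let Some(port) = cli.port {
        cfg.port = port;
    }
    if let Some(app_id) = cli.app_id {
        cfg.app_id = app_id;
    }
    if let Some(level) = cli.verbosity {
        cfg.log_level = level;
    }
    cfg
}
```

Passing only `--port 38000` would then yield `port = 38000`, while `app_id` and `log_level` keep their config-file values.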

Example identity file:

WARNING: This file contains a private key. Please ensure only authorized access and prefer using encrypted storage.

```toml
# identity.toml
avail_secret_uri = "bottom drive obey lake curtain smoke basket hold race lonely fit walk//Alice"
```

- `--network <NETWORK>`: Select a network for the Light Client to connect to. Possible values are:
  - `local`: Local development

- `--config`: Location of the configuration file
- `--identity`: Location of the identity file
- `--app-id`: The `appID` parameter for the application client
- `--port`: LibP2P listener port
- `--verbosity`: Log level. Possible values are: `trace`, `debug`, `info`, `warn`, `error`
- `--avail-suri <SECRET_URI>`: Avail secret URI; optional, overrides the secret URI from the identity file
- `--avail-passphrase <PASSPHRASE>`: (DEPRECATED) Avail secret seed phrase password; optional, overrides the password from the identity file
- `--seed`: Seed string for libp2p keypair generation
- `--secret-key`: Ed25519 private key for libp2p keypair generation
- `--version`: Light Client version
- `--clean`: Remove the previous state directory set in the `avail_path` config parameter
- `--finality_sync_enable`: Enable finality sync

In the Avail network, a light client's identity can be configured using the `identity.toml` file. If it is not specified, a secret URI will be generated and stored in the identity file when the light client starts. To use an existing secret URI, set the `avail_secret_uri` entry in the `identity.toml` file. The secret URI is used to derive an Sr25519 key pair for signing. The location of the identity file can be specified using the `--identity` option. The `avail_secret_seed_phrase` parameter is deprecated and replaced with `avail_secret_uri`. More info can be found in the Substrate URI documentation.
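The secret URI format referenced above follows Substrate's conventions: a phrase, optionally followed by `//hard` and `/soft` derivation junctions and a `///password` suffix. The sketch below only splits such a string into its parts to make the structure visible. It is a simplified, hypothetical parser (`parse_secret_uri`, `SecretUri` and `Junction` are illustrative names), not the light client's code, and it does not perform the actual Sr25519 key derivation.

```rust
/// One derivation step: `//name` is hard, `/name` is soft.
#[derive(Debug, PartialEq, Eq)]
pub enum Junction {
    Hard(String),
    Soft(String),
}

/// Hypothetical decomposition of a Substrate-style secret URI.
#[derive(Debug, PartialEq, Eq)]
pub struct SecretUri {
    pub phrase: String,
    pub junctions: Vec<Junction>,
    pub password: Option<String>,
}

/// Simplified illustration only; real parsing and derivation live in Substrate libraries.
pub fn parse_secret_uri(uri: &str) -> SecretUri {
    // Everything after the first "///" is the password.
    let (rest, password) = match uri.find("///") {
        Some(i) => (&uri[..i], Some(uri[i + 3..].to_string())),
        None => (uri, None),
    };
    // The phrase ends at the first '/'; the remainder is the derivation path.
    let (phrase, mut path) = match rest.find('/') {
        Some(i) => (&rest[..i], &rest[i..]),
        None => (rest, ""),
    };
    let mut junctions = Vec::new();
    while !path.is_empty() {
        // `path` always starts with '/' here; a second '/' marks a hard junction.
        let hard = path.starts_with("//");
        path = if hard { &path[2..] } else { &path[1..] };
        let end = path.find('/').unwrap_or(path.len());
        let name = path[..end].to_string();
        junctions.push(if hard { Junction::Hard(name) } else { Junction::Soft(name) });
        path = &path[end..];
    }
    SecretUri { phrase: phrase.to_string(), junctions, password }
}
```

For the example identity value, the phrase is the twelve dev words and `//Alice` is a single hard junction with no password.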

Configuration reference:

```toml
log_level = "info"

# Light client HTTP server host name (default: 127.0.0.1).
http_server_host = "127.0.0.1"

# Light client HTTP server port (default: 7007).
http_server_port = 7007

# Secret key for the libp2p keypair. Can be set either to `seed` or to `key`.
# If set to `seed`, the keypair will be generated from that seed.
# If set to `key`, a valid ed25519 private key must be provided, otherwise the client will fail.
# If `secret_key` is not set, a random seed will be used.
secret_key = { seed={seed} }

# P2P service port (default: 37000).
port = 37000

# Configures AutoNAT behaviour to reject probes as a server for clients that are observed at a non-global IP address (default: false).
autonat_only_global_ips = false

# AutoNAT throttle period for re-using a peer as server for a dial-request (default: 1s).
autonat_throttle = 2

# Interval in which the NAT status should be re-tried if it is currently unknown or max confidence was not reached yet (default: 20s).
autonat_retry_interval = 20

# Interval in which the NAT should be tested again if max confidence was reached in a status (default: 360s).
autonat_refresh_interval = 360

# AutoNAT delay on init before starting the first probe (default: 5s).
autonat_boot_delay = 10

# Vector of Light Client bootstrap nodes, used to bootstrap the DHT (mandatory field).
bootstraps = ["/ip4/13.51.79.255/tcp/39000/p2p/12D3KooWE2xXc6C2JzeaCaEg7jvZLogWyjLsB5dA3iw5o3KcF9ds"]

# Vector of relay nodes, which are used for hole punching.
relays = ["/ip4/13.49.44.246/tcp/39111/p2p/12D3KooWBETtE42fN7DZ5QsGgi7qfrN3jeYdXmBPL4peVTDmgG9b"]

# WebSocket endpoint of a full node for subscribing to the latest header, etc. (default: ws://127.0.0.1:9944).
full_node_ws = ["ws://127.0.0.1:9944"]

# Genesis hash of the network you are connecting to. The genesis hash will be checked upon connecting to the node(s) and will also be used to identify you on the p2p network. If you wish to skip the check for development purposes, entering DEV{suffix} instead will skip the check and create a separate p2p network with that identifier.
genesis_hash = "DEV123"

# ID of the application used to start the application client. If app_id is not set, or is set to 0, the application client is not started (default: 0).
app_id = 0

# Confidence threshold, used to calculate how many cells need to be sampled to achieve the desired confidence (default: 99.9).
confidence = 99.9

# File system path where RocksDB, used by the light client, stores its data (default: avail_path).
avail_path = "avail_path"

# OpenTelemetry Collector endpoint (default: `http://127.0.0.1:4317`).
ot_collector_endpoint = "http://127.0.0.1:4317"

# If set to true, logs are displayed in JSON format, which is used for structured logging. Otherwise, plain text format is used (default: false).
log_format_json = true

# Fraction and number of the block matrix part to fetch (e.g. 2/20 means the second 1/20 part of the matrix). This parameter determines whether the client behaves as a fat client or a light client (default: None).
block_matrix_partition = "1/20"

# Disables proof verification in general if set to true; otherwise proof verification is performed (default: false).
disable_proof_verification = false

# Disables fetching of cells from RPC; set to true if the client expects cells to be available in the DHT (default: false).
disable_rpc = false

# Number of parallel queries for cell fetching via RPC from the node (default: 8).
query_proof_rpc_parallel_tasks = 8

# Maximum number of cells per request for proof queries (default: 30).
max_cells_per_rpc = 30

# Number of seconds to postpone block processing after the block finalized message arrives (default: 20).
block_processing_delay = 0

# Starting block of the syncing process. Omitting it will disable syncing (default: None).
sync_start_block = 0

# Enable or disable synchronizing finality. If disabled, finality is assumed to be verified until the
# starting block at the point the LC is started and is only checked for new blocks (default: false).
sync_finality_enable = false

# Time-to-live for DHT entries in seconds (default: 24h).
# The default value is set for light clients. Due to the heavy-duty nature of fat clients, it is recommended to set it far below this value, not greater than 1h.
# Record TTL, publication and replication intervals are co-dependent: TTL >> publication_interval >> replication_interval.
record_ttl = 86400

# Enables the automatic Kademlia mode switch from default client to server (default: true).
automatic_server_mode = true

# Sets the (re-)publication interval of stored records, in seconds (default: 12h).
# This interval should be significantly shorter than the record TTL, to ensure records do not expire prematurely.
# The default value is set for light clients. The fat client value needs to be inferred from the TTL value.
publication_interval = 43200

# Sets the (re-)replication interval for stored records, in seconds (default: 3h).
# This interval should be significantly shorter than the publication interval, to ensure persistence between re-publications.
# The default value is set for light clients. The fat client value needs to be inferred from the TTL and publication interval values.
replication_interval = 10800

# The replication factor determines to how many closest peers a record is replicated (default: 5).
replication_factor = 5

# Sets the amount of time to keep connections alive when they're idle (default: 30s).
# NOTE: the libp2p default value is 10s, but because of the Avail block time of 20s the value has been increased.
connection_idle_timeout = 30

# Sets the timeout for a single Kademlia query (default: 10s).
query_timeout = 10

# Sets the allowed level of parallelism for iterative Kademlia queries (default: 3).
query_parallelism = 3

# Sets the Kademlia caching strategy to use for successful lookups. If set to 0, caching is disabled (default: 1).
caching_max_peers = 1

# Require iterative queries to use disjoint paths for increased resiliency in the presence of potentially adversarial nodes (default: false).
disjoint_query_paths = false

# The maximum number of records (default: 2400000).
max_kad_record_number = 2400000

# The maximum size of record values, in bytes (default: 8192).
max_kad_record_size = 8192

# The maximum number of provider records for which the local node is the provider (default: 1024).
max_kad_provided_keys = 1024
```

- Immediately after starting a fresh light client, block sync is executed from the starting block set with the `sync_start_block` config parameter. The sync process uses both the DHT and RPC for that purpose.
- In order to spin up a fat client, the config needs to contain the `block_matrix_partition` parameter set to a fraction of the matrix. It is recommended to set `disable_proof_verification` to true, because of the resource costs of proof verification. `sync_start_block` needs to be set according to the blocks cached on the connected node (if downloading data via RPC).
- When an LC is freshly connected to a network, block finality is synced from the first block. If the LC is connected to a non-archive node on a long-running network, initial validator sets won't be available and the finality checks will fail. In that case we recommend disabling the `sync_finality_enable` flag.
- When switching between networks (e.g. local devnet), the LC state in the `avail_path` directory has to be cleared.
- OpenTelemetry push metrics are used for light client observability.
- In order to use the network analyzer, the light client has to be compiled with the `--features 'network-analysis'` flag. When running the LC with the network analyzer, sufficient capabilities have to be given to the client so that it has the permissions needed to listen on a socket:

  ```bash
  sudo setcap cap_net_raw,cap_net_admin=eip /path/to/light/client/binary
  ```

- To use RocksDB as a persistent Kademlia store, compile the `avail-light` binary with `--features "kademlia-rocksdb"` on.
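The `block_matrix_partition` value (e.g. `2/20`, the second 1/20 part of the matrix) can be parsed and mapped onto the matrix as in the following sketch. How avail-light actually assigns cells to a partition is not described in this README, so the sketch assumes, hypothetically, that cells are enumerated row by row and split into `fraction` contiguous, nearly equal ranges. `Partition`, `parse_partition` and `cell_range` are illustrative names, not the client's API.

```rust
use std::ops::Range;

/// Parsed `block_matrix_partition` value such as "2/20" (hypothetical type).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Partition {
    pub number: u32,
    pub fraction: u32,
}

/// Parses "N/M" and checks that 1 <= N <= M.
pub fn parse_partition(s: &str) -> Result<Partition, String> {
    let (n, f) = s.split_once('/').ok_or_else(|| format!("expected N/M, got {s:?}"))?;
    let number: u32 = n.trim().parse().map_err(|e| format!("bad number: {e}"))?;
    let fraction: u32 = f.trim().parse().map_err(|e| format!("bad fraction: {e}"))?;
    if number == 0 || fraction == 0 || number > fraction {
        return Err(format!("partition must satisfy 1 <= N <= M, got {s:?}"));
    }
    Ok(Partition { number, fraction })
}

/// Half-open range of row-major cell indices covered by `p`.
/// ASSUMPTION: cells are split into `fraction` contiguous, nearly equal chunks.
pub fn cell_range(p: Partition, rows: u32, cols: u32) -> Range<u64> {
    let total = rows as u64 * cols as u64;
    let start = total * (p.number as u64 - 1) / p.fraction as u64;
    let end = total * p.number as u64 / p.fraction as u64;
    start..end
}
```

Because each range ends exactly where the next one starts, running one fat client per partition (`1/20` through `20/20`) would cover every cell of the matrix exactly once under this assumption.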

API usage and examples can be found on the Avail Docs.

API V2 reference can be found in the V2 README file.

API V1 reference can be found in the V1 README file.

We are using grcov to aggregate code coverage information and generate reports.

To install grcov, run:

```bash
cargo install grcov
```

Source code coverage data is generated when running tests with:

```bash
env RUSTFLAGS="-C instrument-coverage" \
  LLVM_PROFILE_FILE="tests-coverage-%p-%m.profraw" \
  cargo test
```

To generate the report, run:

```bash
grcov . -s . \
  --binary-path ./target/debug/ \
  -t html \
  --branch \
  --ignore-not-existing -o \
  ./target/debug/coverage/
```

To clean up the generated coverage information files, run:

```bash
find . -name \*.profraw -type f -exec rm -f {} +
```

Open `index.html` from the `./target/debug/coverage/` folder to review coverage data.