Diana Triantafyllidou & Raphael Vorias

This project is divided into four main sections:

- A Convolutional Auto-Encoder

- A Multi-label Classifier

- A Dual AE/Classifier

- An Image Segmentator

Each section consists of build files that construct a model and save it locally.

Pipeline modules then load the built models and train them.

Images and masks are preprocessed and then fed to the models via Keras' `flow_from_dataframe`.

Some sections, such as the AE section, contain visualization files.

While these models are not getting state of the art results, the

Tested models:

| Model | Accuracy | Params |
|---|---|---|
| U-Net - unfrozen | 0.71 | 2 M |
| Squeeze U-Net - unfrozen | 0.78 | 726 K |
| Baseline - frozen | 0.65 | 116 K |
| Baseline - unfrozen | 0.43 | 170 K |
| Baseline - blank | 0.68 | 170 K |
| Dual architecture | 0.66 | 440 K |

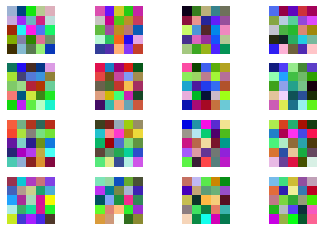

Example reconstruction:

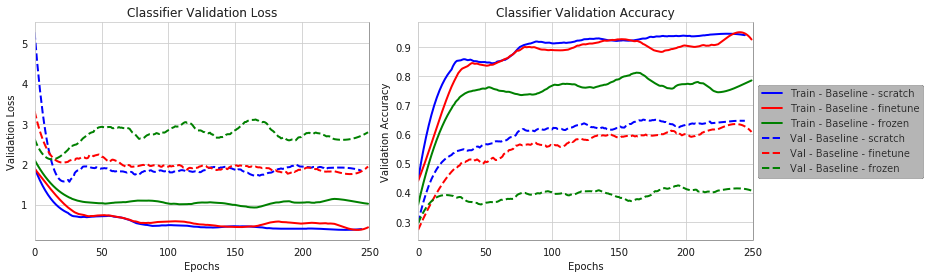

Three variations were tested:

- Baseline Scratch: baseline architecture with reinitialized weights.

- Baseline Finetune: the trained encoder of the AE is reused and its pre-trained weights are fine-tuned.

- Baseline Frozen: the trained encoder of the AE is reused with its weights frozen; only the last dense layers are trained.
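
The difference between the frozen and fine-tuned variants can be sketched in Keras as below. This is a minimal illustration, not the project's build files: `build_encoder`, `build_classifier`, the layer sizes, the input shape, the number of labels, and the sigmoid/binary cross-entropy head are all assumptions. Loading the saved AE weights into the encoder is omitted here; skipping that step is what would correspond to the Scratch variant.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_encoder(input_shape=(64, 64, 3)):
    """Hypothetical stand-in for the encoder half of the auto-encoder."""
    inputs = keras.Input(shape=input_shape)
    x = layers.Conv2D(8, 3, strides=2, padding="same", activation="relu")(inputs)
    x = layers.Conv2D(16, 3, strides=2, padding="same", activation="relu")(x)
    return keras.Model(inputs, x, name="encoder")


def build_classifier(encoder, n_labels=5, freeze_encoder=False):
    """Stack dense layers on top of an encoder (sizes are assumptions)."""
    # Freezing the encoder excludes all of its weights from training.
    encoder.trainable = not freeze_encoder

    inputs = keras.Input(shape=encoder.input_shape[1:])
    x = encoder(inputs)
    x = layers.Flatten()(x)
    x = layers.Dense(32, activation="relu")(x)
    # Sigmoid outputs: each label is predicted independently (multi-label).
    outputs = layers.Dense(n_labels, activation="sigmoid")(x)

    model = keras.Model(inputs, outputs)
    model.compile(optimizer="adam", loss="binary_crossentropy")
    return model


# Frozen: only the dense layers are updated during training.
frozen = build_classifier(build_encoder(), freeze_encoder=True)
# Finetune: encoder and dense layers are both updated.
finetune = build_classifier(build_encoder(), freeze_encoder=False)
```

Comparing `len(frozen.trainable_weights)` with `len(finetune.trainable_weights)` is a quick way to confirm that the encoder was actually excluded from training.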

In addition to the self-made models, U-Net architectures were used.


This network combines an auto-encoder and a classifier, which are trained simultaneously.
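
One way to train both parts at once is a single Keras model with a shared encoder and two outputs, each with its own loss. The sketch below only illustrates that idea and is not the project's architecture: `build_dual`, the layer sizes, the input shape, the number of labels, the choice of losses, and the equal loss weights are assumptions.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_dual(input_shape=(64, 64, 3), n_labels=5):
    """Hypothetical dual model: shared encoder, decoder head, classifier head."""
    inputs = keras.Input(shape=input_shape)

    # Shared encoder: downsample twice (64 -> 32 -> 16).
    x = layers.Conv2D(8, 3, strides=2, padding="same", activation="relu")(inputs)
    encoded = layers.Conv2D(16, 3, strides=2, padding="same", activation="relu")(x)

    # Decoder head: upsample back to the input resolution.
    d = layers.Conv2DTranspose(8, 3, strides=2, padding="same", activation="relu")(encoded)
    reconstruction = layers.Conv2DTranspose(
        input_shape[-1], 3, strides=2, padding="same",
        activation="sigmoid", name="reconstruction",
    )(d)

    # Classifier head: one independent sigmoid per label.
    c = layers.GlobalAveragePooling2D()(encoded)
    labels = layers.Dense(n_labels, activation="sigmoid", name="labels")(c)

    model = keras.Model(inputs, [reconstruction, labels])
    # Both losses are summed, so one optimizer step updates both heads
    # and the shared encoder together.
    model.compile(
        optimizer="adam",
        loss={"reconstruction": "mse", "labels": "binary_crossentropy"},
        loss_weights={"reconstruction": 1.0, "labels": 1.0},
    )
    return model


dual = build_dual()
```

Training such a model would take the input images as the reconstruction target and the label vectors as the classification target, for example `dual.fit(x, {"reconstruction": x, "labels": y})`.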

Weight plots of the first convolutional layer:

| Untrained | Trained |
|---|---|

t-SNE plot of the final dense layer:

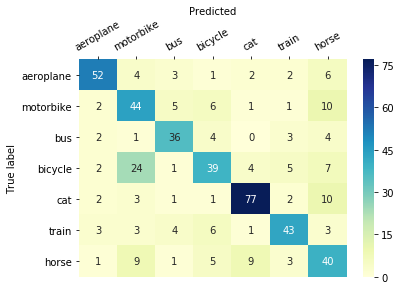

Confusion plot after 250 epochs:

Trained using Dice Loss. Examples:
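
For reference, Dice loss is one minus the Dice coefficient, which measures the overlap between a predicted mask and the ground-truth mask. The NumPy sketch below shows the common smoothed form; it is not the project's Keras implementation, and the function name `dice_loss` and the `smooth` value are assumptions.

```python
import numpy as np


def dice_loss(y_true, y_pred, smooth=1.0):
    """Smoothed Dice loss: 1 - (2*|A∩B| + s) / (|A| + |B| + s)."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()

    # Soft intersection: sum of element-wise products of the two masks.
    intersection = np.sum(y_true * y_pred)
    dice = (2.0 * intersection + smooth) / (np.sum(y_true) + np.sum(y_pred) + smooth)
    return 1.0 - dice


mask = np.array([[1, 0], [0, 1]])
perfect = dice_loss(mask, mask)       # identical masks give a loss of 0
disjoint = dice_loss([1, 0], [0, 1])  # no overlap gives a loss close to 1
```

The `smooth` term keeps the ratio defined when both masks are empty, at the cost of a loss slightly below 1 for fully disjoint masks.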