This repository contains the code for the Spring 2018 COS 598B final project by Ryan McCaffrey and Yannis Karakozis. The model architecture is heavily inspired by the following paper:

- R. Hu, M. Rohrbach, T. Darrell. Segmentation from Natural Language Expressions. In ECCV, 2016.

@article{hu2016segmentation,
  title={Segmentation from Natural Language Expressions},
  author={Hu, Ronghang and Rohrbach, Marcus and Darrell, Trevor},
  journal={Proceedings of the European Conference on Computer Vision (ECCV)},
  year={2016}
}

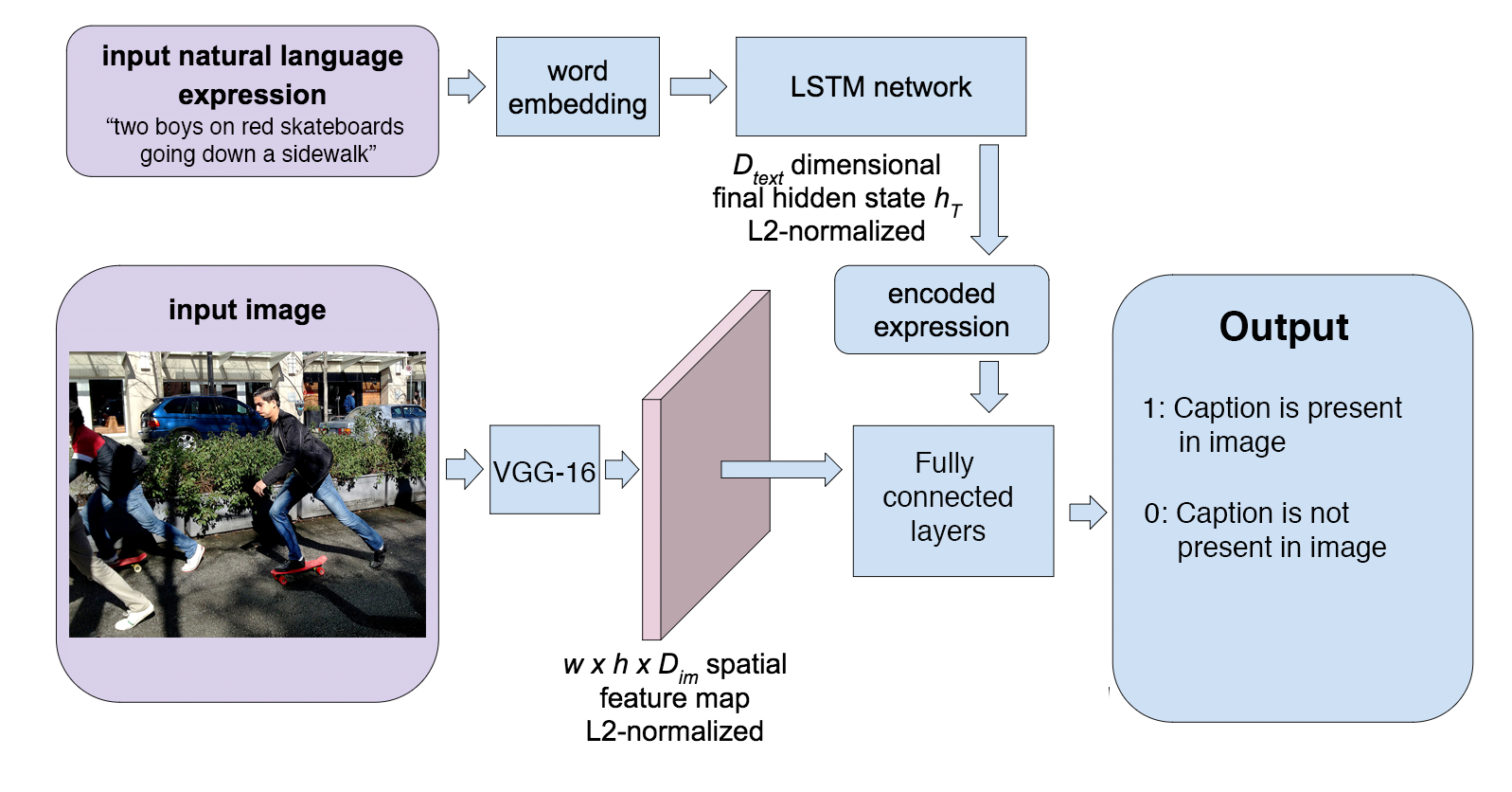

A graphic of the architecture is below:

- Install Google TensorFlow (v1.0.0 or higher) by following the official installation instructions.

- Download this repository or clone it with Git, and then `cd` into the repository's root directory.

- The pre-trained models are available on request from the authors at {rm24,ick}@princeton.edu.

- Run the demo notebook `./demo/text_objseg_cls_glove_demo.ipynb` with Jupyter Notebook (formerly IPython Notebook). For the demo that uses learned word embeddings instead, run `./demo/text_objseg_cls_demo.ipynb`.

- Download the VGG-16 network parameters trained on the 1000 ImageNet classes by running `models/convert_caffemodel/params/download_vgg_params.sh`.

- Download the pre-trained GloVe word embeddings using the chakin GitHub repository. Follow that repository's instructions to download `glove.6B.50d.txt` and `glove.6B.300d.txt`, and place both files in the `exp-referit/data` directory.

- Download the COCO annotations used for training and testing from the MS COCO download site. Choose the `2017 Train/Val annotations` zip, unpack it, and place the files in `coco/annotations`. Then run `coco/Makefile` to set up the MS COCO Python API.

- Download the ReferIt dataset by running `exp-referit/referit-dataset/download_referit_dataset.sh`.
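To check that the COCO caption annotations were unpacked correctly, the caption file can be read with the Python standard library alone. The sketch below assumes the standard MS COCO captions layout (an `"annotations"` list whose entries carry `"image_id"` and `"caption"` keys, as in `captions_train2017.json`); `captions_by_image` is a hypothetical helper, not part of this repository, whose build scripts presumably rely on the MS COCO Python API instead.

```python
import json
from collections import defaultdict


def captions_by_image(annotation_path):
    """Group COCO captions by image id (hypothetical helper, not from this repo).

    Assumes the standard MS COCO captions JSON layout, e.g.
    coco/annotations/captions_train2017.json, where "annotations" is a list of
    dicts that each contain an "image_id" and a "caption".
    """
    with open(annotation_path, encoding="utf-8") as f:
        data = json.load(f)
    grouped = defaultdict(list)
    for ann in data["annotations"]:
        # Strip stray whitespace that some COCO captions carry.
        grouped[ann["image_id"]].append(ann["caption"].strip())
    return dict(grouped)
```

For example, `len(captions_by_image("coco/annotations/captions_train2017.json"))` gives the number of training images that have at least one caption; a `FileNotFoundError` here usually means the zip was unpacked into the wrong directory.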

- You may need to add the repository root directory to Python's module path: `export PYTHONPATH=.:$PYTHONPATH`.

- Build the training batches: `python coco/build_training_batches_cls_coco.py`. The script accepts several arguments that change how the batch files are generated, and therefore how the model trains; see the file for the available options.

- Select the GPU to use during training: `export GPU_ID=<gpu id>`. Use `0` for `<gpu id>` if your machine has only one GPU.

- Train the caption validation model with one of the following commands:

  - To train with learned word embeddings: `python train_cls.py $GPU_ID`
  - To train with GloVe embeddings: `python train_cls_glove.py $GPU_ID`

  See the top of each training script for the other required command-line arguments.
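Training with GloVe requires turning the downloaded `glove.6B.*d.txt` files into an embedding table for the model's vocabulary. How `train_cls_glove.py` does this is not documented here, so the following is only a minimal standard-library sketch of the idea: `load_glove` and `build_embedding_matrix` are hypothetical helpers, and giving words missing from GloVe small random vectors is an assumption, not necessarily what the training script does.

```python
import random


def load_glove(path):
    """Parse a GloVe text file such as glove.6B.50d.txt (hypothetical helper).

    Each line holds a token followed by its float components, separated by
    spaces; the glove.6B tokens themselves contain no spaces.
    """
    vectors = {}
    with open(path, encoding="utf-8") as f:
        for line in f:
            parts = line.split()
            if parts:
                vectors[parts[0]] = [float(x) for x in parts[1:]]
    return vectors


def build_embedding_matrix(vocab, vectors, dim, seed=0):
    """Return (rows, hits): one row per vocab word and how many were found in GloVe.

    Assumption: words absent from GloVe (or with the wrong dimension) get
    small uniform random vectors in [-0.1, 0.1].
    """
    rng = random.Random(seed)
    matrix = []
    hits = 0
    for word in vocab:
        vec = vectors.get(word)
        if vec is not None and len(vec) == dim:
            matrix.append(list(vec))
            hits += 1
        else:
            matrix.append([rng.uniform(-0.1, 0.1) for _ in range(dim)])
    return matrix, hits
```

For example, `build_embedding_matrix(vocab, load_glove("exp-referit/data/glove.6B.50d.txt"), 50)` would yield one 50-dimensional row per vocabulary word. Because the `glove.6B` vocabulary is lowercased, lowercasing caption tokens before the lookup should raise the hit count.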


- Select the GPU to use during testing: `export GPU_ID=<gpu id>`. Use `0` for `<gpu id>` if your machine has only one GPU. You may also need to add the repository root directory to Python's module path: `export PYTHONPATH=.:$PYTHONPATH`.

- Run the evaluation for the caption validation model: `python coco/test_coco_cls.py $GPU_ID`. See the file for the arguments that control testing; they should match the arguments given to the training batch script (`coco/build_training_batches_cls_coco.py`). This should reproduce the results in the paper.
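When running the demo notebooks or a custom script, the two `export` steps can also be done from inside Python. The sketch below is hypothetical (`select_gpu` is not part of this repository): it assumes the GPU id is the first positional argument, as in the commands above, and uses `CUDA_VISIBLE_DEVICES`, one common way to restrict TensorFlow to a single GPU. It does not reproduce how the repository's scripts actually parse their arguments.

```python
import os
import sys


def select_gpu(argv, repo_root="."):
    """Python equivalent of `export GPU_ID=...` and `export PYTHONPATH=.:$PYTHONPATH`.

    Hypothetical helper: assumes argv[1] is the GPU id, as in
    `python coco/test_coco_cls.py $GPU_ID`.
    """
    if len(argv) < 2:
        raise SystemExit("usage: %s <gpu id> [other args]" % argv[0])
    gpu_id = argv[1]
    # Expose only the chosen GPU; this must happen before TensorFlow
    # initializes CUDA (safest: before importing TensorFlow).
    os.environ["CUDA_VISIBLE_DEVICES"] = gpu_id
    # Prepend the repository root to the module search path for this process.
    root = os.path.abspath(repo_root)
    if root not in sys.path:
        sys.path.insert(0, root)
    return gpu_id
```

Calling `select_gpu(sys.argv)` at the top of a script, before `import tensorflow`, mirrors the shell setup above.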