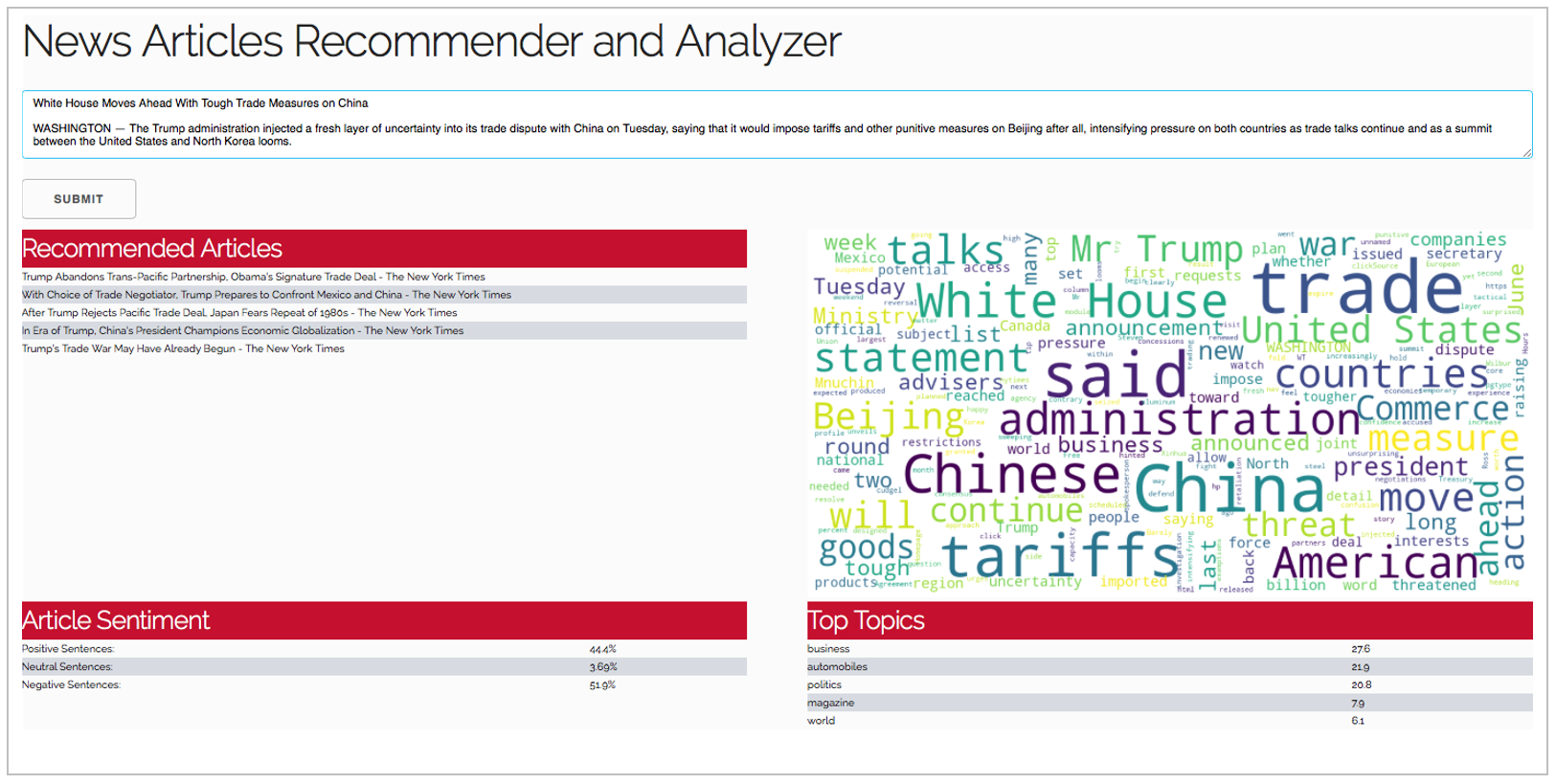

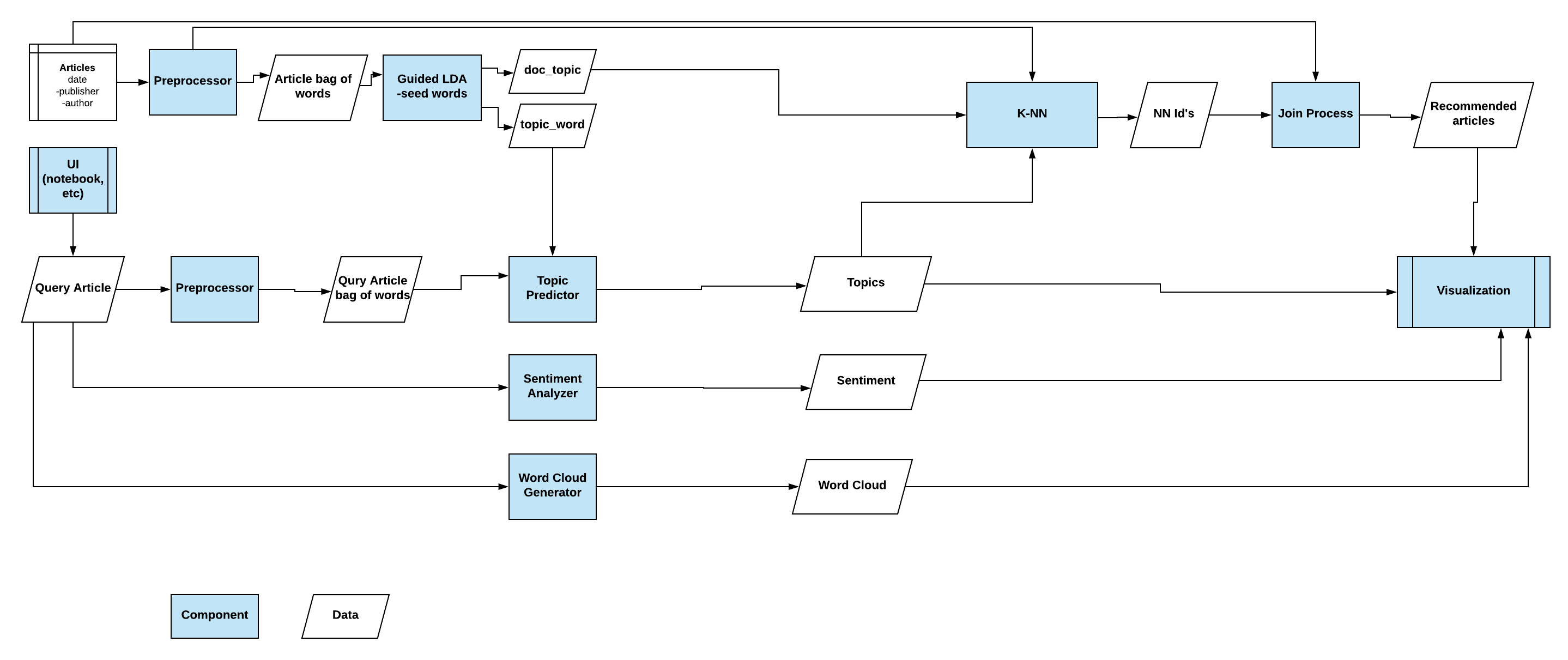

Conventional news recommendation systems use a small set of keywords to identify the top recommended articles for users based on keyword frequency. We build upon this framework by enabling users to find recommended articles by providing an entire news article. The recommendation process uses article topics to evaluate which articles to recommend.

Our system highlights topic insights through two different models: an unguided LDA for identifying topically related articles to recommend, and a guided LDA with seed words from NYTimes to show interpretable topics. Our system also shows sentiment information and a word cloud that summarize the query article for the user.
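To make the topic-based recommendation idea concrete, here is a minimal sketch that ranks corpus articles by how similar their topic distributions are to the query article's. It assumes each article has already been reduced to a vector of topic weights by an LDA model. The use of cosine similarity, the `recommend` function, and the sample vectors are illustrative assumptions, not necessarily what NARA does internally.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length topic-weight vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(query_topics, corpus_topics, top_n=2):
    """Return the ids of the top_n corpus articles closest to the query in topic space."""
    scored = [
        (cosine_similarity(query_topics, topics), article_id)
        for article_id, topics in corpus_topics.items()
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [article_id for _, article_id in scored[:top_n]]


# Hypothetical topic distributions over three topics (made up for illustration).
corpus_topics = {
    "article_a": [0.80, 0.10, 0.10],
    "article_b": [0.10, 0.80, 0.10],
    "article_c": [0.60, 0.30, 0.10],
}
query_topics = [0.70, 0.20, 0.10]

recommended = recommend(query_topics, corpus_topics)
print(recommended)
```

Comparing topic distributions rather than raw keywords is what lets a full article serve as the query: two articles can be matched even when they share few exact words.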

NARA does 3 things:

- Recommends news articles based on topic relevance

- Analyzes sentiment of news articles

- Visualizes news articles

How does it work?

- Takes a query article or keywords/phrases entered by the user in the UI

- Recommends relevant articles from corpus using LDA

- Lists relevant topics for query article

- Presents sentiment analysis based on number of positive/negative/neutral sentences in input

- Visualizes query article using word cloud
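The sentence-level sentiment summary described in the list above can be sketched roughly as follows. This is only an illustration: the tiny word lists, the regex sentence splitter, and the function names are assumptions made for the example, and the actual `sentiment_analyzer.py` may rely on a proper sentiment library instead.

```python
import re
from collections import Counter

# Hypothetical mini-lexicons, for illustration only.
POSITIVE_WORDS = {"good", "great", "gain", "win"}
NEGATIVE_WORDS = {"bad", "loss", "crisis", "fail"}


def split_sentences(text):
    """Naively split text into sentences at ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def label_sentence(sentence):
    """Label one sentence by comparing positive and negative word counts."""
    words = re.findall(r"[a-z']+", sentence.lower())
    score = sum(w in POSITIVE_WORDS for w in words) - sum(
        w in NEGATIVE_WORDS for w in words
    )
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def sentiment_counts(text):
    """Count how many sentences fall under each sentiment label."""
    counts = Counter(label_sentence(s) for s in split_sentences(text))
    return {label: counts.get(label, 0) for label in ("positive", "negative", "neutral")}


article = (
    "Markets had a great day. The crisis deepened overseas. "
    "Officials met on Tuesday."
)
counts = sentiment_counts(article)
print(counts)
```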

For more details, please see: FunctionalDesign.md

- The corpus comes from the All-the-news Kaggle dataset:

It consists of over 140,000 articles from 15 US national publishers, published between 2015 and 2017. The distribution of the publishers is shown below:

We used labelled article information from the New York Times to generate seed words for the guided LDA. We aggregated and analyzed the article titles, summaries and categories from a series of days to produce the list of seed words.
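As a rough sketch of how seed words might be derived from labelled articles, the example below counts the most frequent non-stopword tokens per category and then maps them to topic ids. The function names, stopword list, sample titles, and vocabulary are all hypothetical. The word-id to topic-id mapping is the general shape that guided LDA implementations tend to take as seeds, but the exact format NARA uses is not shown in this README.

```python
import re
from collections import Counter, defaultdict

# Hypothetical stopword list, kept tiny for the example.
STOPWORDS = {"the", "a", "an", "in", "on", "of", "to", "and", "for"}


def build_seed_words(labelled_titles, top_n=2):
    """Return the top_n most frequent non-stopword tokens for each category."""
    counts = defaultdict(Counter)
    for category, title in labelled_titles:
        tokens = re.findall(r"[a-z]+", title.lower())
        counts[category].update(t for t in tokens if t not in STOPWORDS)
    return {
        category: [word for word, _ in counter.most_common(top_n)]
        for category, counter in counts.items()
    }


def seed_topic_ids(seed_words, vocabulary):
    """Map vocabulary index -> topic index for every seed word found in the vocabulary."""
    word_to_id = {word: i for i, word in enumerate(vocabulary)}
    mapping = {}
    for topic_id, words in enumerate(seed_words.values()):
        for word in words:
            if word in word_to_id:
                mapping[word_to_id[word]] = topic_id
    return mapping


# Made-up labelled titles standing in for the NYTimes data.
labelled_titles = [
    ("sports", "Team wins championship game"),
    ("sports", "Championship game draws crowd"),
    ("politics", "Senate votes on budget"),
    ("politics", "Senate passes budget"),
]
seed_words = build_seed_words(labelled_titles)

# Hypothetical model vocabulary; "championship" is deliberately absent.
vocabulary = ["budget", "crowd", "game", "senate"]
seed_ids = seed_topic_ids(seed_words, vocabulary)
print(seed_words, seed_ids)
```

Seed words that do not appear in the model vocabulary are simply skipped, so the seed list can be built independently of the corpus preprocessing.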

- First, clone the repo to a local directory. Then, from the repo root directory, install the dependencies listed in requirements.txt:

pip install -r requirements.txt

- Now, run the setup.py file as follows to download other data dependencies:

python setup.py build

Currently NARA only supports Python 3.4 or newer.

Because the full news article corpus and the resulting topic models are too large to be stored in the repo, we provide a smaller corpus that is a subset of the full corpus. This demo section goes over how to run the user interface to start using NARA.

- Follow the installation steps above.

- Run the Dash UI:

python path_to_libraries/user_interface.py

- Copy the URL that shows up in your command line into your browser to start the UI.

- Enter any sample article from the examples folder, or one of your choice.

Refer to the NARA_UserGuide.pdf in the examples directory for more detailed information.

The repo includes files for a subset of the Kaggle data. To download the full dataset and build the topic models from it, you'll need to register for a Kaggle account and follow the instructions under the API Credentials section at this link: https://github.com/Kaggle/kaggle-api.

Once Kaggle credentials are set up, simply run the build_resources.py script as follows from the root directory:

python <path to root folder>/news_analyzer/scripts/build_resources.py --download

The build script will automatically download the csv files using Kaggle's API, and build the topic models. Once complete, you can simply run the UI as shown above in the demo example.

The full build using the entire corpus of 140,000 articles is quite computationally expensive. We recommend performing the build on a cloud machine with at least 48GB of memory. The full build took about 24 hours on a compute-optimized AWS machine with 60GB of memory.

If the data has already been downloaded and you would like to rebuild the topic models with different settings, you can run the build_resources.py script without the --download flag.
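The effect of the --download flag can be pictured with a small argparse sketch. This is not the actual build_resources.py: only the flag name comes from the instructions above, while the step names and the `plan_steps` helper are hypothetical stand-ins for the real download and model-building logic.

```python
import argparse


def parse_args(argv=None):
    """Parse command-line options; --download is an optional boolean switch."""
    parser = argparse.ArgumentParser(
        description="Build NARA resources (illustrative sketch only)."
    )
    parser.add_argument(
        "--download",
        action="store_true",
        help="fetch the full corpus before building the topic models",
    )
    return parser.parse_args(argv)


def plan_steps(download):
    """Return the ordered build steps; the download step is skipped when data exists."""
    steps = []
    if download:
        steps.append("download corpus")
    # Hypothetical step names standing in for the real build logic.
    steps.extend(["preprocess articles", "fit topic models"])
    return steps


steps_full = plan_steps(parse_args(["--download"]).download)
steps_rebuild = plan_steps(parse_args([]).download)
print(steps_full)
print(steps_rebuild)
```

Keeping the download behind a switch means the expensive model rebuild can be repeated with different settings without fetching the corpus again.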

For more details, please see: ComponentDesign.md

news_analyzer
├── LICENSE
├── README.md
├── doc
│   ├── ComponentDesign.md
│   ├── Final_presentation.pptx
│   ├── FunctionalDesign.md
│   ├── WeReadTheNews.pptx
│   ├── mockup.png
│   ├── ui_screenshot.png
│   └── news-nlp-flowchart-2.png
├── examples
│   ├── NARA_UserGuide.pdf
│   ├── example_article1.txt
│   ├── example_article2.txt
│   └── example_article3.txt
├── news_analyzer
│   ├── __init__.py
│   ├── libraries
│   │   ├── __init__.py
│   │   ├── article_recommender.py
│   │   ├── configs.py
│   │   ├── handler.py
│   │   ├── nytimes_article_retriever.py
│   │   ├── sentiment_analyzer.py
│   │   ├── temp_wordcloud.png
│   │   ├── text_processing.py
│   │   ├── topic_modeling.py
│   │   ├── user_interface.py
│   │   └── word_cloud_generator.py
│   ├── resources
│   │   ├── articles.csv
│   │   ├── guidedlda_model.pkl
│   │   ├── nytimes_data
│   │   │   ├── NYtimes_data_20180507.csv
│   │   │   ├── NYtimes_data_20180508.csv
│   │   │   ├── NYtimes_data_20180509.csv
│   │   │   ├── NYtimes_data_20180513.csv
│   │   │   ├── NYtimes_data_20180515.csv
│   │   │   ├── NYtimes_data_20180527.csv
│   │   │   ├── NYtimes_data_20180528.csv
│   │   │   ├── NYtimes_data_20180529.csv
│   │   │   ├── NYtimes_data_20180603.csv
│   │   │   └── aggregated_seed_words.txt
│   │   ├── preprocessor.pkl
│   │   └── unguidedlda_model.pkl
│   ├── scripts
│   │   └── build_resources.py
│   └── tests
│       ├── __init__.py
│       ├── test_article_recommender.py
│       ├── test_handler.py
│       ├── test_nytimes_article_retriever.py
│       ├── test_preprocessing.py
│       ├── test_resources
│       │   ├── test_dtm.pkl
│       │   └── test_topics_raw.pkl
│       ├── test_sentiment_analyzer.py
│       ├── test_topic_modeling.py
│       ├── test_user_interface.py
│       └── test_word_cloud_generator.py
├── requirements.txt
└── setup.py

MS Data Science, University of Washington

DATA 515 Software Design for Data Science (Spring 2018)

Team members: