| Page Type | Languages | Key Services | Tools |
|---|---|---|---|
| Sample | HCL, YAML | Azure DevOps (Pipelines) | Terraform |

Deploying infrastructure across multiple Azure tenants and subscriptions with Azure DevOps and Terraform

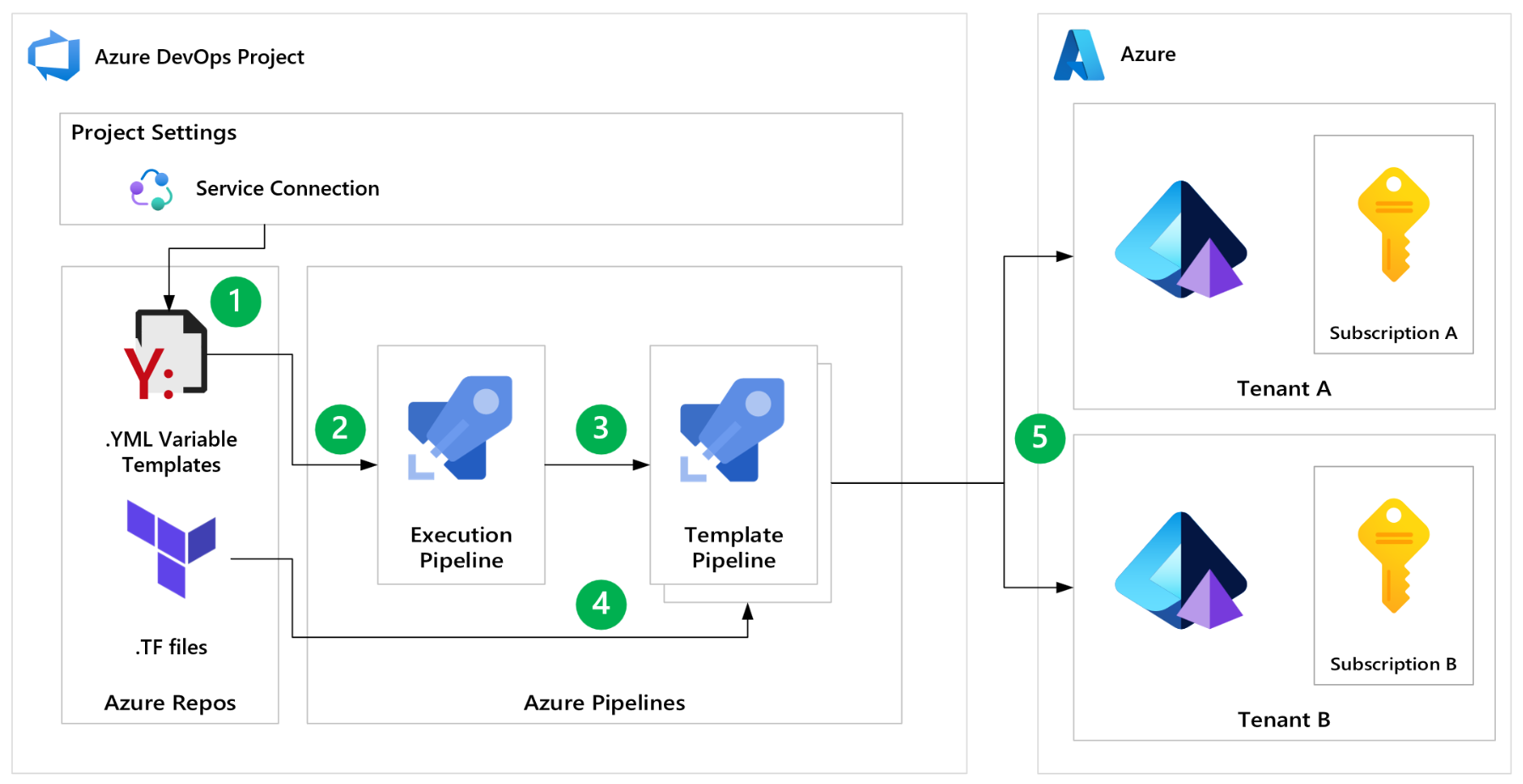

Deploying infrastructure as code via Azure Pipelines is a common use case, especially to a single Azure tenant or subscription. However, some scenarios require deploying infrastructure dynamically across multiple tenants and subscriptions. Configuring this in Azure Pipelines runs into several limitations; this codebase demonstrates how to work around them and dynamically deploy infrastructure to multiple Azure tenants and subscriptions using repeatable workflows.

This codebase provides a simple use case and starting point that should be modified and expanded into more complex scenarios.

Azure Pipelines provides a mechanism to generate copies of a job, each with a different input, effectively allowing for a "looping" construct. This is referred to as a matrix execution strategy. This is useful for running a repeated set of tasks across different inputs, and it could presumably be used to deploy the same infrastructure as code to multiple tenants and subscriptions; however, the following constraints pose challenges to this approach:

1. The matrix execution strategy can only be used when creating a job.

2. Service connections, which are required to authenticate to Azure, must be defined before runtime and referenced using template expression syntax (`${{ }}`). This is a by-design security measure.

3. Variables can be loaded dynamically from a YAML template file, but the lowest level of the pipeline hierarchy at which they can be defined is the job, i.e., when a job is being invoked.

So, if you try to use the matrix execution strategy as a looping construct that generates a dynamic input (e.g., a service connection name), that service connection cannot be used within the matrix job (see #2), nor can a YAML template file containing the service connection be loaded, because the logic to select the file would need to run after the job is invoked (see #1 and #3).
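To make these constraints concrete, here is a minimal sketch of the naive approach, assuming two hypothetical service connections named `sc-subscription-a` and `sc-subscription-b`. Because the service connection name only exists as a runtime matrix variable, a pipeline like this is expected to fail service connection authorization instead of looping over both subscriptions.

```yaml
# Hypothetical naive attempt; the service connection names are placeholders.
pool:
  vmImage: ubuntu-latest

jobs:
  - job: deploy
    strategy:
      matrix:
        subscriptionA:
          serviceConnectionName: 'sc-subscription-a'
        subscriptionB:
          serviceConnectionName: 'sc-subscription-b'
    steps:
      - task: AzureCLI@2
        inputs:
          # Matrix values only exist as runtime variables of each job copy, so
          # they cannot be referenced with ${{ }} template syntax here, and per
          # constraint #2 the service connection cannot be resolved from $( ).
          azureSubscription: $(serviceConnectionName)
          scriptType: bash
          scriptLocation: inlineScript
          inlineScript: az account show
```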

The lack of readily available solutions to this problem was a primary motivator for creating this codebase.

Note that this codebase seeks not only to demonstrate how to deploy infrastructure as code while working around the matrix execution strategy limitations, but also to provide a solution to the more general limitation of using service connections dynamically.

- An Azure Subscription - for hosting cloud infrastructure

- Azure DevOps - for running pipelines

- Terraform Extension for Azure DevOps - for running Terraform commands in Azure Pipelines

- Azure CLI (optional on local machine) - for interacting with Azure and Azure DevOps via the command line

- Terraform (optional on local machine) - for infrastructure as code

This codebase assumes that a service connection has been created in Azure DevOps for each target subscription. Each service connection allows the pipeline to authenticate and deploy resources to that subscription.

Idea for expanded use case: Service connections can be created at a variety of scopes, including the management group level, which can be useful for managing multiple subscriptions at once. This codebase does not demonstrate this approach; it is viable but may require additional changes to the `.azure-pipelines/tf-execution.yml` pipeline.

Given the above assumption and the capability described in point #3 of the motivation section, the `.azure-pipelines` directory contains a `serviceConnectionTemplates` directory with one file per service connection. Each file defines the following variables:

- `serviceConnectionName`: The name of the service connection created in Azure DevOps
- `subscriptionName`: The name of the subscription in Azure (optional, but useful for identifying the subscription in the pipeline logs)
- `subscriptionId`: The subscription ID in Azure (optional, but useful for identifying the subscription in the pipeline logs)
- `backendAzureRmResourceGroupName`: The name of the resource group where the Terraform state file will be stored
- `backendAzureRmStorageAccountName`: The name of the Storage Account where the Terraform state file will be stored
- `backendAzureRmContainerName`: The name of the Storage Account container where the Terraform state file will be stored
- `backendAzureRmKey`: The name of the Terraform state file
- `backendTfRegion`: The Azure region where the Storage Account will be created
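Each configuration file is an Azure Pipelines variable template. The following is a hypothetical example: the filename `subscription-a.yml` and every value are placeholders, and the stub files in this codebase may be laid out differently.

```yaml
# .azure-pipelines/serviceConnectionTemplates/subscription-a.yml (hypothetical)
# Replace every value with your own service connection and backend details.
variables:
  serviceConnectionName: 'sc-subscription-a'
  subscriptionName: 'Subscription A'
  subscriptionId: '00000000-0000-0000-0000-000000000000'
  backendAzureRmResourceGroupName: 'rg-tfstate'
  backendAzureRmStorageAccountName: 'sttfstatesuba001'
  backendAzureRmContainerName: 'tfstate'
  backendAzureRmKey: 'infra.tfstate'
  backendTfRegion: 'eastus'
```

A file for another subscription or tenant follows the same shape with different values.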

These files provide a mechanism for defining variables before runtime (point #2 of the motivation section), and are loaded dynamically using the approach described in later sections of this document.

This codebase provides stubs for two configuration files. Modify these files to reflect your actual service connection details. You can add any number of configuration files to this directory, and the execution pipeline (described in a later section) will dynamically create a matrix of jobs to deploy infrastructure to each subscription.

Idea for expanded use case: You may consider defining a comma-delimited list of subscription IDs as a variable in your service connection configuration file. This may eliminate the need to create a separate configuration file for each subscription. You can then use the `split` expression to process the list of subscription IDs.
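A minimal sketch of the `split` idea, assuming a hypothetical `subscriptionIds` variable and a single service connection (for example, one scoped to a management group) that can reach every listed subscription. The variables are inlined here for brevity; in practice they would come from a configuration file. `split` and `each` are evaluated at template expansion time, so one step is emitted per subscription ID.

```yaml
variables:
  # Hypothetical values; normally loaded from a configuration file.
  serviceConnectionName: 'sc-management-group'
  subscriptionIds: '00000000-0000-0000-0000-000000000001,00000000-0000-0000-0000-000000000002'

pool:
  vmImage: ubuntu-latest

steps:
  # split() turns the comma-delimited string into an array, and `each`
  # expands the nested step once per element.
  - ${{ each subscriptionId in split(variables.subscriptionIds, ',') }}:
      - task: AzureCLI@2
        displayName: Verify access to ${{ subscriptionId }}
        inputs:
          azureSubscription: ${{ variables.serviceConnectionName }}
          scriptType: bash
          scriptLocation: inlineScript
          inlineScript: az account show --subscription ${{ subscriptionId }}
```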

Another idea for expanded use case: If you have a large number of service connections to manage, you may consider using the Azure DevOps REST API to create and/or read service connections, coupled with a programmatic approach to generating the YAML configuration files.
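As a rough sketch of the programmatic approach, the step below uses the Azure DevOps CLI (`az devops service-endpoint list`) instead of calling the REST API directly, lists Azure Resource Manager service connections, and writes one configuration file stub per connection. It assumes the `azure-devops` CLI extension and `jq` are available on the agent and that the build service identity is allowed to read service connections. The Terraform backend values are placeholders, and the generated files would still need to be committed before the execution pipeline can pick them up.

```yaml
# Hypothetical helper step: one configuration file stub per azurerm connection.
steps:
  - bash: |
      set -euo pipefail
      out_dir=.azure-pipelines/serviceConnectionTemplates
      mkdir -p "$out_dir"
      az devops service-endpoint list \
        --organization "$(System.CollectionUri)" \
        --project "$(System.TeamProject)" \
        --query "[?type=='azurerm']" --output json > endpoints.json
      jq -c '.[]' endpoints.json | while read -r ep; do
        name=$(jq -r '.name' <<< "$ep")
        sub_name=$(jq -r '.data.subscriptionName // ""' <<< "$ep")
        sub_id=$(jq -r '.data.subscriptionId // ""' <<< "$ep")
        # Backend values are placeholders to fill in per subscription.
        cat > "$out_dir/${name}.yml" <<EOF
      variables:
        serviceConnectionName: '${name}'
        subscriptionName: '${sub_name}'
        subscriptionId: '${sub_id}'
        backendAzureRmResourceGroupName: '<resource group>'
        backendAzureRmStorageAccountName: '<storage account>'
        backendAzureRmContainerName: '<container>'
        backendAzureRmKey: '<state file name>'
        backendTfRegion: '<region>'
      EOF
      done
    displayName: Generate configuration file stubs
    env:
      # Authenticates the Azure DevOps CLI with the job's access token.
      AZURE_DEVOPS_EXT_PAT: $(System.AccessToken)
```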

In Azure Pipelines, create a pipeline using the `.azure-pipelines/tf-template.yml` file, and make note of the pipeline ID.

You can get the pipeline ID by navigating to the pipeline in Azure DevOps and looking at the URL. The pipeline ID is the value of the `definitionId` query parameter at the end of the URL, e.g.:

`https://dev.azure.com/<org name>/<project name>/_build?definitionId=<pipeline id>`

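You can also get the pipeline ID by running the following Azure CLI command (the `az pipelines` commands require the `azure-devops` extension), which prints the pipeline's `id` field:

`az pipelines show --organization https://dev.azure.com/<org name> --project <project name> --name <pipeline name> --query id --output tsv`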

`.azure-pipelines/tf-template.yml` is a pipeline that accepts the name of a configuration file as a parameter and uses the service connection defined in that file to deploy infrastructure to the target subscription.

The steps in the pipeline are as follows:

- (Optional step) Using the Azure CLI, perform a set of idempotent operations to create a resource group, Storage Account, and storage container in the target subscription for remotely storing Terraform state. Read more about this here.

- Print the contents of the configuration file to the pipeline logs for logging purposes.

- Install Terraform and execute the commands to initialize, plan, and apply the infrastructure changes using the service connection from the configuration file.
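The template pipeline described above might look roughly like the following sketch. It relies on pipeline parameters being resolved before template expansion, so a parameter can choose which variable template to load, and the loaded `serviceConnectionName` can then be referenced with `${{ }}` syntax. The parameter name `configFile` is a hypothetical choice, and the Terraform tasks assume version 4 of the Microsoft DevLabs Terraform extension (`TerraformInstaller@1`, `TerraformTaskV4@4`); the actual file in this codebase may differ.

```yaml
# .azure-pipelines/tf-template.yml (sketch; names are assumptions)
trigger: none

parameters:
  - name: configFile   # hypothetical: a filename in serviceConnectionTemplates
    type: string

variables:
  # Resolved at compile time, so the file's variables exist before runtime.
  - template: serviceConnectionTemplates/${{ parameters.configFile }}

pool:
  vmImage: ubuntu-latest

steps:
  # (Optional) Idempotently create the resources that hold remote Terraform state.
  - task: AzureCLI@2
    displayName: Create Terraform state storage
    inputs:
      azureSubscription: ${{ variables.serviceConnectionName }}
      scriptType: bash
      scriptLocation: inlineScript
      inlineScript: |
        az group create --name "$(backendAzureRmResourceGroupName)" \
          --location "$(backendTfRegion)"
        az storage account create --name "$(backendAzureRmStorageAccountName)" \
          --resource-group "$(backendAzureRmResourceGroupName)" \
          --location "$(backendTfRegion)" --sku Standard_LRS
        az storage container create --name "$(backendAzureRmContainerName)" \
          --account-name "$(backendAzureRmStorageAccountName)"

  # Print the selected configuration file for logging purposes.
  - bash: cat ".azure-pipelines/serviceConnectionTemplates/${{ parameters.configFile }}"
    displayName: Print configuration file

  - task: TerraformInstaller@1
    inputs:
      terraformVersion: latest

  - task: TerraformTaskV4@4
    displayName: terraform init
    inputs:
      provider: azurerm
      command: init
      workingDirectory: $(System.DefaultWorkingDirectory)/infra
      backendServiceArm: ${{ variables.serviceConnectionName }}
      backendAzureRmResourceGroupName: $(backendAzureRmResourceGroupName)
      backendAzureRmStorageAccountName: $(backendAzureRmStorageAccountName)
      backendAzureRmContainerName: $(backendAzureRmContainerName)
      backendAzureRmKey: $(backendAzureRmKey)

  - task: TerraformTaskV4@4
    displayName: terraform plan
    inputs:
      provider: azurerm
      command: plan
      workingDirectory: $(System.DefaultWorkingDirectory)/infra
      environmentServiceNameAzureRM: ${{ variables.serviceConnectionName }}

  - task: TerraformTaskV4@4
    displayName: terraform apply
    inputs:
      provider: azurerm
      command: apply
      workingDirectory: $(System.DefaultWorkingDirectory)/infra
      environmentServiceNameAzureRM: ${{ variables.serviceConnectionName }}
```

The design choice that matters is the `variables: - template:` line: because the file is selected by a parameter rather than a matrix variable, it is loaded before the job is invoked, which avoids constraints #1 and #3.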

In Azure Pipelines, create a pipeline using the `.azure-pipelines/tf-execution.yml` file. This pipeline reads the contents of the `serviceConnectionTemplates` directory and dynamically creates a matrix of jobs to deploy infrastructure to each subscription. You need to update its `pipelineId` variable with the ID of the template pipeline created in the previous step.

The steps in the pipeline are as follows:

- Read the contents of the `serviceConnectionTemplates` directory and generate a matrix of jobs, one per service connection configuration file.

- Execute the template pipeline for each service connection configuration file, passing the filename as a parameter.


- Note that the

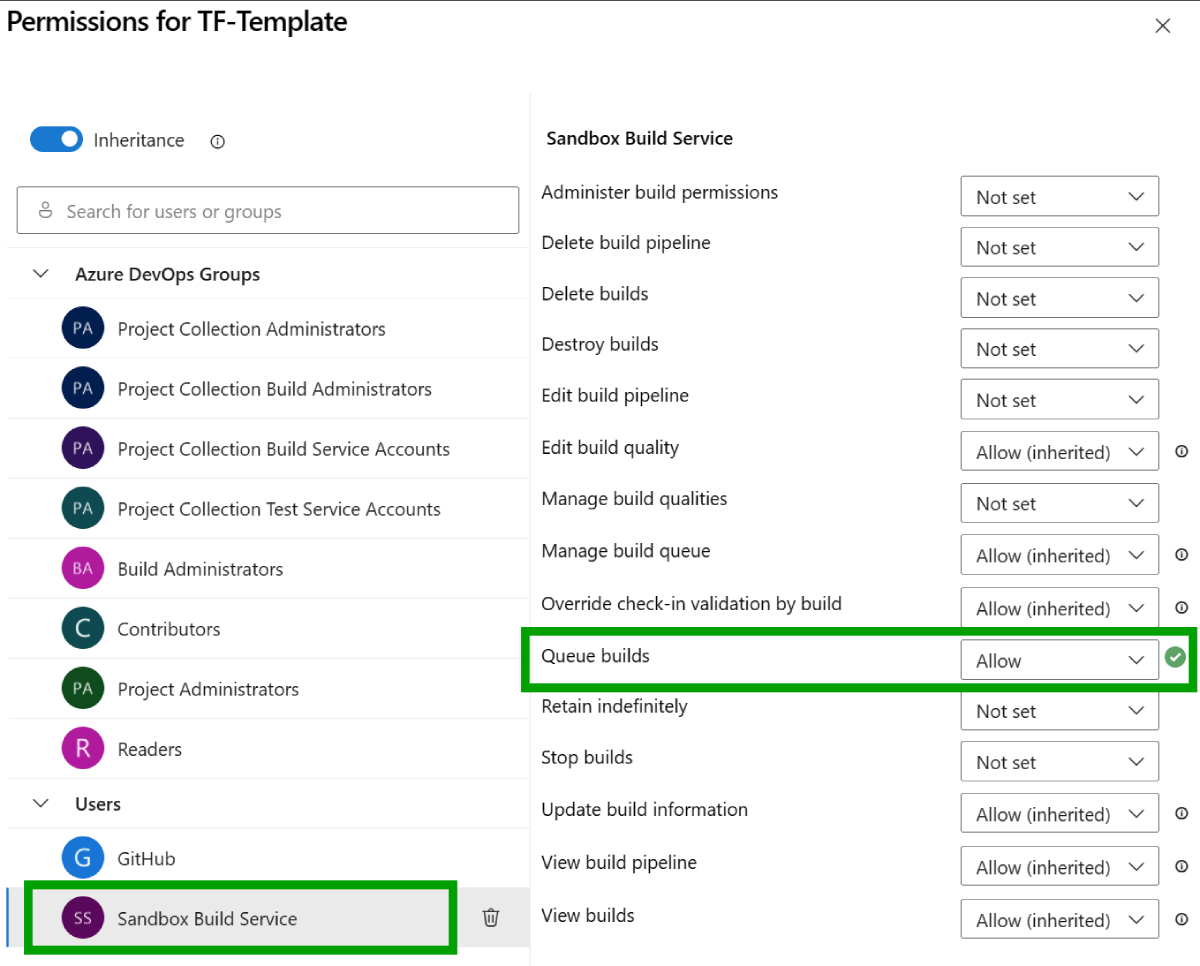

infradirectory contains a simple Terraform configuration. This is a contrived example and should be modified to reflect your actual infrastructure requirements. - Note that you will need to enable the Queue builds permission on the template pipeline to allow the execution pipeline to trigger the template pipeline:

- The matrix execution strategy can be configured to run jobs in parallel, which can be useful for speeding up the execution pipeline runtime.

- Additional information about running jobs in parallel can be found here.
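A minimal sketch of the execution pipeline, assuming the template pipeline declares a string parameter named `configFile` (a hypothetical name) and that `jq` and the `azure-devops` CLI extension are available on the agent. The first job builds a JSON matrix with one entry per configuration file and publishes it as an output variable; the second job expands that matrix and, in each copy, queues the template pipeline with `az pipelines run`. The `maxParallel` value is an arbitrary example.

```yaml
# .azure-pipelines/tf-execution.yml (sketch; names are assumptions)
trigger: none

variables:
  pipelineId: '<template pipeline ID>'   # update with the ID noted earlier

pool:
  vmImage: ubuntu-latest

jobs:
  # Job 1: build a JSON matrix with one entry per configuration file.
  - job: generateMatrix
    steps:
      - bash: |
          set -euo pipefail
          shopt -s nullglob
          matrix='{}'
          for file in .azure-pipelines/serviceConnectionTemplates/*.yml; do
            name=$(basename "$file")
            # Matrix entry names may only contain letters, digits and
            # underscores, and must start with a letter.
            key="sc_${name%.*}"
            key="${key//[^A-Za-z0-9_]/_}"
            matrix=$(jq -c --arg k "$key" --arg f "$name" '. + {($k): {configFile: $f}}' <<< "$matrix")
          done
          echo "##vso[task.setvariable variable=matrix;isOutput=true]$matrix"
        name: setMatrix

  # Job 2: one copy per matrix entry; each queues a run of the template pipeline.
  - job: runTemplate
    dependsOn: generateMatrix
    strategy:
      matrix: $[ dependencies.generateMatrix.outputs['setMatrix.matrix'] ]
      maxParallel: 4   # optional: run several matrix jobs at the same time
    steps:
      - bash: |
          az pipelines run \
            --organization "$(System.CollectionUri)" \
            --project "$(System.TeamProject)" \
            --id "$(pipelineId)" \
            --parameters "configFile=$(configFile)"
        env:
          # Authenticates the Azure DevOps CLI as the build service identity.
          AZURE_DEVOPS_EXT_PAT: $(System.AccessToken)
```

`az pipelines run` only queues the template pipeline and returns, so each matrix job finishes quickly and the deployments run as separate template pipeline runs. Because the runs are queued with the job's access token, the Queue builds permission mentioned above has to be granted to the identity behind that token.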

- A configuration file is created for each service connection and stored in the `.azure-pipelines/serviceConnectionTemplates` directory.

- The execution pipeline reads the contents of the `serviceConnectionTemplates` directory and dynamically creates a matrix of jobs to deploy infrastructure to each subscription.

- Each matrix job queues a run of the template pipeline, passing a configuration filename to it as a parameter.

- The template pipeline loads the configuration file named by the parameter, uses the service connection defined in it to authenticate with Azure, and uses the Terraform files to initialize and plan the infrastructure deployment.

- The template pipeline uses Terraform to deploy infrastructure to the target subscription using the service connection.

An organization may consider deploying the same infrastructure across multiple tenants and subscriptions for various reasons, including:

- Multi-tenant solutions

- Enterprise resource segregation

- Regulatory compliance

- Deploying consistent boilerplate deployment stamps across tenants